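Recently, the Qwen team released QwQ-32B, a reasoning model that performs comparably to DeepSeek-R1 on many benchmarks. However, many users have run into infinite generation, excessive repetition, <think> token issues, and fine-tuning problems. This guide explains how to debug and fix these issues so you can get the most out of QwQ-32B.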

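The models uploaded by the Unsloth team fix these bugs and work better with fine-tuning, vLLM, Transformers, and other tools and frameworks. If you use llama.cpp or an engine that uses llama.cpp as its backend, follow this link for instructions on fixing infinite generation.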

Unsloth QwQ-32B models (with bug fixes):

⚙️ Official Recommended Settings

Following Qwen's official recommendations, these are the recommended settings for inference:

- Temperature: 0.6

- Top_K: 40 (20-40 recommended)

- Min_P: 0.1 (optional, but works well)

- Top_P: 0.95

- Repetition Penalty: 1.0 (in llama.cpp and transformers, 1.0 means disabled)

- Chat template:

<|im_start|>user\nCreate a Flappy Bird game in Python.<|im_end|>\n<|im_start|>assistant\n<think>\n
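For quick experiments outside a chat framework, the settings and template above can be collected in a small Python helper. This is a sketch for a single user turn only; the function and constant names are hypothetical, and for multi-turn conversations or tool calls the model's own Jinja chat template remains the authoritative version.

```python
# Recommended settings from the list above, collected in one place.
RECOMMENDED_SAMPLING = {
    "temperature": 0.6,
    "top_k": 40,
    "min_p": 0.1,
    "top_p": 0.95,
    "repetition_penalty": 1.0,  # 1.0 = disabled
}


def format_qwq_prompt(user_message: str, prefill_think: bool = True) -> str:
    """Wrap a single user message in QwQ-32B's ChatML-style template."""
    prompt = (
        "<|im_start|>user\n"
        + user_message
        + "<|im_end|>\n"
        + "<|im_start|>assistant\n"
    )
    # The template above ends with "<think>\n" so the model starts reasoning immediately.
    if prefill_think:
        prompt += "<think>\n"
    return prompt


if __name__ == "__main__":
    print(format_qwq_prompt("Create a Flappy Bird game in Python."))
```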

👍 Recommended llama.cpp Settings

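The Unsloth team noticed that many people use a Repetition Penalty greater than 1.0, for example 1.1 to 1.5. In practice, this interferes with llama.cpp's sampling mechanisms. A repetition penalty is meant to discourage repeated output, but experiments showed that it does not work as intended here.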

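Turning the repetition penalty off entirely (setting it to 1.0) is also an option. However, the Unsloth team found that a small repetition penalty still helps suppress infinite generation.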

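To use a repetition penalty effectively, you must change the order of llama.cpp's samplers so that sampling happens before the Repetition Penalty is applied; otherwise you get infinite generation. To do this, add the following argument: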

--samplers "top_k;top_p;min_p;temperature;dry;typ_p;xtc"

By default, llama.cpp applies its samplers in this order:

--samplers "dry;top_k;typ_p;top_p;min_p;xtc;temperature"

The Unsloth team's order essentially swaps temperature and dry and moves min_p forward, so the samplers are applied in the following order:

top_k=40
top_p=0.95
min_p=0.1
temperature=0.6
dry
typ_p
xtc
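To make the ordering concrete, here is a simplified, hypothetical Python re-implementation of the first four stages of the reordered chain. It is not llama.cpp's code: it works on a small {token: logit} dict, leaves out dry, typ_p and xtc, and the helper names are our own. It shows top_k, top_p and min_p pruning candidates before temperature rescales whatever survives.

```python
import math
import random


def softmax(logits):
    """Convert a {token: logit} dict into a {token: probability} dict."""
    m = max(logits.values())
    exps = {t: math.exp(v - m) for t, v in logits.items()}
    z = sum(exps.values())
    return {t: e / z for t, e in exps.items()}


def top_k(logits, k):
    # Keep only the k highest-scoring tokens.
    keep = sorted(logits, key=logits.get, reverse=True)[:k]
    return {t: logits[t] for t in keep}


def top_p(logits, p):
    # Keep the smallest set of top tokens whose probability mass reaches p.
    probs = softmax(logits)
    kept, mass = {}, 0.0
    for t in sorted(probs, key=probs.get, reverse=True):
        kept[t] = logits[t]
        mass += probs[t]
        if mass >= p:
            break
    return kept


def min_p(logits, p):
    # Drop tokens whose probability is below p times the top token's probability.
    probs = softmax(logits)
    threshold = p * max(probs.values())
    return {t: v for t, v in logits.items() if probs[t] >= threshold}


def temperature(logits, temp):
    # Rescale the surviving logits; temp < 1 sharpens the distribution.
    return {t: v / temp for t, v in logits.items()}


# The reordered chain from the list above (dry, typ_p and xtc omitted).
FIXED_CHAIN = [(top_k, 40), (top_p, 0.95), (min_p, 0.1), (temperature, 0.6)]


def filter_candidates(logits, chain=FIXED_CHAIN):
    """Run each stage in order and return the surviving, rescaled logits."""
    for stage, param in chain:
        logits = stage(logits, param)
    return logits


def sample(logits, chain=FIXED_CHAIN, rng=random):
    """Draw one token from the distribution left after the chain."""
    probs = softmax(filter_candidates(logits, chain))
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


if __name__ == "__main__":
    toy_logits = {0: 5.0, 1: 4.0, 2: 0.0, 3: -3.0}
    print(sorted(filter_candidates(toy_logits)))  # [0, 1]
    print(sample(toy_logits, rng=random.Random(3407)))
```

Swapping entries in FIXED_CHAIN is an easy way to see how the order changes which tokens remain.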

If problems persist, try raising --repeat-penalty slightly from 1.0 to 1.2 or 1.3.
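As we understand it, llama.cpp's --repeat-penalty follows the CTRL-style scheme: for each token seen in the recent window (set by --repeat-last-n), a positive logit is divided by the penalty and a negative logit is multiplied by it, so 1.0 leaves logits unchanged. The sketch below, with a hypothetical function name, shows that rule on a {token: logit} dict; it is an illustration, not llama.cpp's code.

```python
def apply_repeat_penalty(logits, recent_tokens, penalty):
    """Penalize every token that appears in recent_tokens (sketch, not llama.cpp code)."""
    seen = set(recent_tokens)
    penalized = {}
    for tok, logit in logits.items():
        if tok not in seen:
            penalized[tok] = logit
        elif logit > 0:
            # Positive logits shrink toward zero...
            penalized[tok] = logit / penalty
        else:
            # ...and negative ones are pushed further down.
            penalized[tok] = logit * penalty
    return penalized


if __name__ == "__main__":
    logits = {1: 2.2, 2: -1.0, 3: 0.5}
    print(apply_repeat_penalty(logits, recent_tokens=[1, 2], penalty=1.1))
```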

Thanks to @krist486 for bringing the llama.cpp sampling-direction issue to the Unsloth team's attention.

☀️ Dry Repetition Penalty

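The Unsloth team investigated the dry penalty as suggested in https://github.com/ggml-org/llama.cpp/blob/master/examples/main/README.md, using a value of 0.8. However, experiments showed that the dry penalty tends to cause syntax errors, especially when generating code. If you still run into problems, you can try increasing the dry penalty to 0.8.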

If you do use the dry penalty, the adjusted sampler order also helps.
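For reference, the DRY ("Don't Repeat Yourself") penalty, as we understand it, subtracts multiplier × base^(n − allowed_length) from the logit of any token that would extend an already-repeated sequence of length n ≥ allowed_length. llama.cpp exposes these as --dry-multiplier, --dry-base and --dry-allowed-length; the defaults below (base 1.75, allowed length 2) are what we believe llama.cpp uses, and the function name is hypothetical.

```python
def dry_penalty(repeat_len: int, multiplier: float = 0.8, base: float = 1.75,
                allowed_length: int = 2) -> float:
    """Logit penalty for a token that would extend a repeat of length repeat_len.

    Sketch of the DRY formula: multiplier * base ** (repeat_len - allowed_length),
    applied only once the repeat reaches allowed_length; 0.0 means no penalty.
    """
    if multiplier == 0.0 or repeat_len < allowed_length:
        return 0.0
    return multiplier * base ** (repeat_len - allowed_length)


if __name__ == "__main__":
    # Compare the 0.5 multiplier used in the commands below with 0.8.
    for n in range(1, 7):
        print(n, round(dry_penalty(n, multiplier=0.5), 3), round(dry_penalty(n, multiplier=0.8), 3))
```

Because the penalty grows exponentially with the repeat length, raising the multiplier from 0.5 to 0.8 scales every penalty by 1.6, and long repeats are hit much harder than short ones.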

🦙 Tutorial: Running QwQ-32B in Ollama

- Install ollama if you haven't already!

apt-get update
apt-get install pciutils -y
curl -fsSL https://ollama.com/install.sh | sh

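- Run the model! If it fails, try running ollama serve in another terminal. The Unsloth team has included all fixes and suggested parameters (temperature, etc.) in the params file of the Hugging Face upload!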

ollama run hf.co/unsloth/QwQ-32B-GGUF:Q4_K_M
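If you would rather call the model from code than from the ollama run prompt, Ollama also serves an HTTP API, by default on localhost:11434. The sketch below uses only the Python standard library to send one request to /api/generate, which applies the model's chat template to the prompt. The helper names are hypothetical, and the options block is optional since the uploaded params file already sets these values.

```python
import json
import urllib.request

# Ollama's default local endpoint; change it if your server runs elsewhere.
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL = "hf.co/unsloth/QwQ-32B-GGUF:Q4_K_M"


def build_request(prompt: str, model: str = MODEL) -> dict:
    """Request body for a single, non-streaming completion."""
    return {
        "model": model,
        "prompt": prompt,  # Ollama wraps this in the model's chat template
        "stream": False,
        # Optional per-request overrides; the uploaded params file already sets these.
        "options": {
            "temperature": 0.6,
            "top_k": 40,
            "top_p": 0.95,
            "min_p": 0.1,
            "repeat_penalty": 1.0,
        },
    }


def generate(prompt: str, url: str = OLLAMA_URL, timeout: float = 1800.0) -> str:
    """POST the request and return the generated text."""
    body = json.dumps(build_request(prompt)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["response"]
```

For example, print(generate("Create a Flappy Bird game in Python.")) once ollama serve is running.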

📖 Tutorial: Running QwQ-32B in llama.cpp

- Get the latest llama.cpp from GitHub. You can follow the build instructions below. If you don't have a GPU or only want CPU inference, change -DGGML_CUDA=ON to -DGGML_CUDA=OFF.

apt-get update
apt-get install pciutils build-essential cmake curl libcurl4-openssl-dev -y
git clone https://github.com/ggerganov/llama.cpp
cmake llama.cpp -B llama.cpp/build \
    -DBUILD_SHARED_LIBS=ON -DGGML_CUDA=ON -DLLAMA_CURL=ON
cmake --build llama.cpp/build --config Release -j --clean-first --target llama-quantize llama-cli llama-gguf-split
cp llama.cpp/build/bin/llama-* llama.cpp

- Download the model (after running pip install huggingface_hub hf_transfer). You can choose Q4_K_M or another version (such as BF16 full precision). More versions are available at https://huggingface.co/unsloth/QwQ-32B-GGUF.

# !pip install huggingface_hub hf_transfer
import os
os.environ["HF_HUB_ENABLE_HF_TRANSFER"] = "1"  # use hf_transfer for faster downloads
from huggingface_hub import snapshot_download
snapshot_download(
    repo_id="unsloth/QwQ-32B-GGUF",
    local_dir="unsloth-QwQ-32B-GGUF",
    allow_patterns=["*Q4_K_M*"],  # For Q4_K_M
)

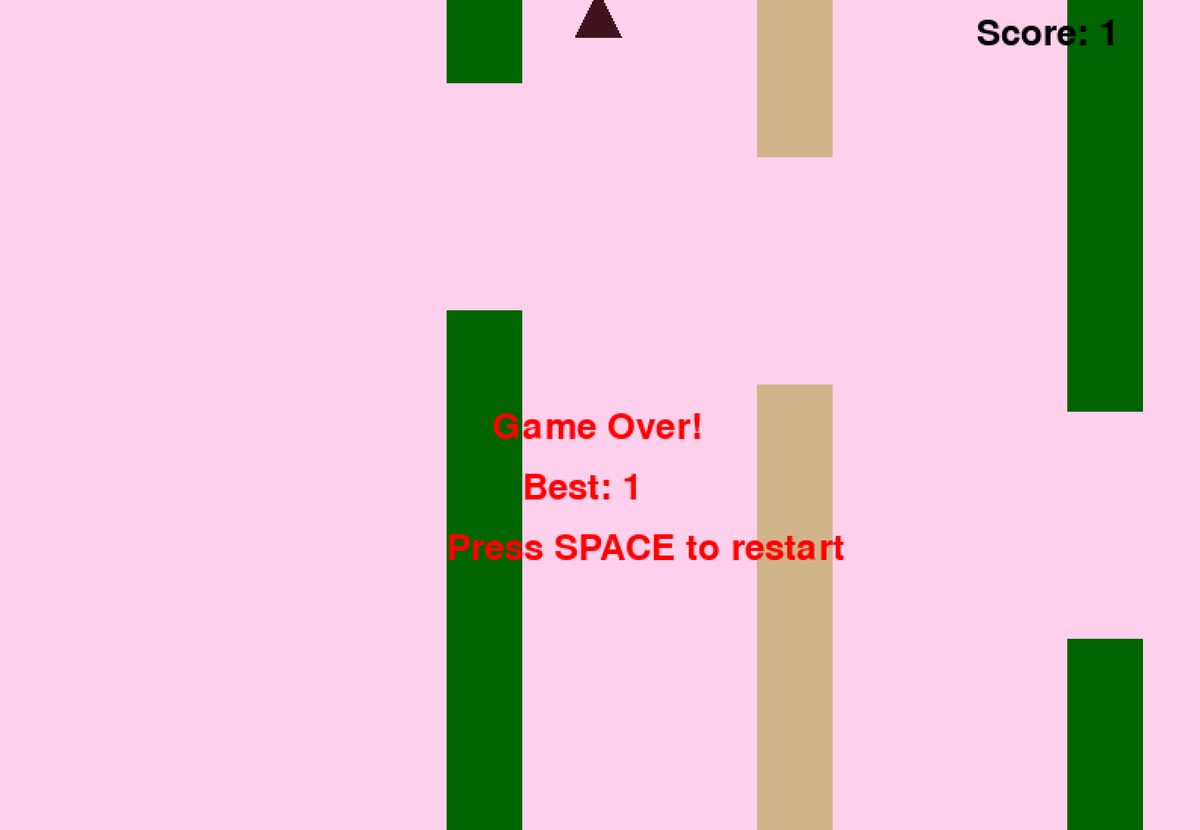

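- Run Unsloth's Flappy Bird test. The output is saved to Q4_K_M_yes_samplers.txt.

- Adjust the parameters for your setup. --threads 32 sets the number of CPU threads, --ctx-size 16384 sets the context length, and --n-gpu-layers 99 sets how many layers are offloaded to the GPU. If you run out of GPU memory, try lowering --n-gpu-layers; for CPU-only inference, remove it. --repeat-penalty 1.1 and --dry-multiplier 0.5 set the repetition penalty and the dry penalty, and can be tuned as needed.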

./llama.cpp/llama-cli \
    --model unsloth-QwQ-32B-GGUF/QwQ-32B-Q4_K_M.gguf \
    --threads 32 \
    --ctx-size 16384 \
    --n-gpu-layers 99 \
    --seed 3407 \
    --prio 2 \
    --temp 0.6 \
    --repeat-penalty 1.1 \
    --dry-multiplier 0.5 \
    --min-p 0.1 \
    --top-k 40 \
    --top-p 0.95 \
    -no-cnv \
    --samplers "top_k;top_p;min_p;temperature;dry;typ_p;xtc" \
    --prompt "<|im_start|>user\nCreate a Flappy Bird game in Python. You must include these things:\n1. You must use pygame.\n2. The background color should be randomly chosen and is a light shade. Start with a light blue color.\n3. Pressing SPACE multiple times will accelerate the bird.\n4. The bird's shape should be randomly chosen as a square, circle or triangle. The color should be randomly chosen as a dark color.\n5. Place on the bottom some land colored as dark brown or yellow chosen randomly.\n6. Make a score shown on the top right side. Increment if you pass pipes and don't hit them.\n7. Make randomly spaced pipes with enough space. Color them randomly as dark green or light brown or a dark gray shade.\n8. When you lose, show the best score. Make the text inside the screen. Pressing q or Esc will quit the game. Restarting is pressing SPACE again.\nThe final game should be inside a markdown section in Python. Check your code for errors and fix them before the final markdown section.<|im_end|>\n<|im_start|>assistant\n<think>\n" \
    2>&1 | tee Q4_K_M_yes_samplers.txt

The Flappy Bird prompt above comes from Unsloth's DeepSeek-R1 Dynamic 1.58-bit blog post. The full prompt is:

<|im_start|>user
Create a Flappy Bird game in Python. You must include these things:
1. You must use pygame.
2. The background color should be randomly chosen and is a light shade. Start with a light blue color.
3. Pressing SPACE multiple times will accelerate the bird.
4. The bird's shape should be randomly chosen as a square, circle or triangle. The color should be randomly chosen as a dark color.
5. Place on the bottom some land colored as dark brown or yellow chosen randomly.
6. Make a score shown on the top right side. Increment if you pass pipes and don't hit them.
7. Make randomly spaced pipes with enough space. Color them randomly as dark green or light brown or a dark gray shade.
8. When you lose, show the best score. Make the text inside the screen. Pressing q or Esc will quit the game. Restarting is pressing SPACE again.
The final game should be inside a markdown section in Python. Check your code for errors and fix them before the final markdown section.<|im_end|>
<|im_start|>assistant
<think>

Here are the beginning and end of the Python code the model generated (with the thinking removed):

import pygame
import random
import sys

pygame.init()
### Continues
class Bird:
    def __init__(self):
        ### Continues

def main():
    best_score = 0
    current_score = 0
    game_over = False
    pipes = []
    first_time = True  # Track first game play

    # Initial setup
    background_color = (173, 216, 230)  # Light blue initially
    land_color = random.choice(land_colors)
    bird = Bird()

    while True:
        for event in pygame.event.get():
            ### Continues

        if not game_over:
            # Update bird and pipes
            bird.update()
            ### Continues

        # Drawing
        ### Continues
        pygame.display.flip()
        clock.tick(60)

if __name__ == "__main__":
    main()

The model successfully produced a working Flappy Bird game!

Next, remove the --samplers "top_k;top_p;min_p;temperature;dry;typ_p;xtc" argument and run the same command without the Unsloth fix. The output is saved to Q4_K_M_no_samplers.txt.

./llama.cpp/llama-cli \
    --model unsloth-QwQ-32B-GGUF/QwQ-32B-Q4_K_M.gguf \
    --threads 32 \
    --ctx-size 16384 \
    --n-gpu-layers 99 \
    --seed 3407 \
    --prio 2 \
    --temp 0.6 \
    --repeat-penalty 1.1 \
    --dry-multiplier 0.5 \
    --min-p 0.1 \
    --top-k 40 \
    --top-p 0.95 \
    -no-cnv \
    --prompt "<|im_start|>user\nCreate a Flappy Bird game in Python. You must include these things:\n1. You must use pygame.\n2. The background color should be randomly chosen and is a light shade. Start with a light blue color.\n3. Pressing SPACE multiple times will accelerate the bird.\n4. The bird's shape should be randomly chosen as a square, circle or triangle. The color should be randomly chosen as a dark color.\n5. Place on the bottom some land colored as dark brown or yellow chosen randomly.\n6. Make a score shown on the top right side. Increment if you pass pipes and don't hit them.\n7. Make randomly spaced pipes with enough space. Color them randomly as dark green or light brown or a dark gray shade.\n8. When you lose, show the best score. Make the text inside the screen. Pressing q or Esc will quit the game. Restarting is pressing SPACE again.\nThe final game should be inside a markdown section in Python. Check your code for errors and fix them before the final markdown section.<|im_end|>\n<|im_start|>assistant\n<think>\n" \
    2>&1 | tee Q4_K_M_no_samplers.txt
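Because the two runs differ only in the --samplers flag, a small, hypothetical Python helper can build both argument lists from one place, which makes it harder to change one run and forget the other. It only reproduces the flags shown above; to mirror the 2>&1 | tee part, pass the list to subprocess.run and redirect stdout and stderr to a file.

```python
import shlex

FIXED_SAMPLERS = "top_k;top_p;min_p;temperature;dry;typ_p;xtc"


def llama_cli_args(model_path: str, prompt: str, use_fixed_samplers: bool = True) -> list:
    """Argument list for the llama-cli runs above, with or without the sampler fix."""
    args = [
        "./llama.cpp/llama-cli",
        "--model", model_path,
        "--threads", "32",
        "--ctx-size", "16384",
        "--n-gpu-layers", "99",
        "--seed", "3407",
        "--prio", "2",
        "--temp", "0.6",
        "--repeat-penalty", "1.1",
        "--dry-multiplier", "0.5",
        "--min-p", "0.1",
        "--top-k", "40",
        "--top-p", "0.95",
        "-no-cnv",
    ]
    if use_fixed_samplers:
        args += ["--samplers", FIXED_SAMPLERS]
    args += ["--prompt", prompt]
    return args


if __name__ == "__main__":
    model = "unsloth-QwQ-32B-GGUF/QwQ-32B-Q4_K_M.gguf"
    prompt = "<|im_start|>user\nCreate a Flappy Bird game in Python.<|im_end|>\n<|im_start|>assistant\n<think>\n"
    for fixed in (True, False):
        # Print a shell-quoted version of each command for inspection.
        print(shlex.join(llama_cli_args(model, prompt, fixed)))
```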

Without the fix, the output loops, Python syntax errors show up frequently, and there are various other problems. For example, the snippet below looks correct but is actually broken: line 39, pipes.clear(), raises "NameError: name 'pipes' is not defined" because pipes is never defined.

import pygame
import random

pygame.init()

# Constants
WIDTH, HEIGHT = 800, 600
GROUND_HEIGHT = 20
GRAVITY = 0.7
PIPE_SPEED = -3
BIRD_SIZE = 45
MIN_GAP = 130
MAX_GAP = 200
PIPE_COLORS = [(0, 96, 0), (205, 133, 63), (89, 97, 107)]
DARK_BROWN = (94, 72, 4)
YELLOW = (252, 228, 6)

screen = pygame.display.set_mode((WIDTH, HEIGHT))
clock = pygame.time.Clock()

def random_light_color():
    return (
        random.randint(180, 230),
        random.randint(190, 300),
        random.randint(250, 255)
    )

def reset_game():
    global bird_x, bird_y
    global pipes, score
    global background_color, land_color
    global bird_shape, bird_color

    # Bird properties
    bird_x = WIDTH * 0.3
    bird_y = HEIGHT // 2
    bird_vel = -5  # Initial upward thrust
    pipes.clear() ### <<< NameError: name 'pipes' is not defined. Did you forget to import 'pipes'?

Raising --repeat-penalty further to 1.5 makes things even worse: the syntax errors become more obvious and the code does not run at all.

import pygame
from random import randint # For generating colors/shapes/positions randomly
pygame.init()
# Constants:
WIDTH, HEIGHT =456 ,702 #
BACKGROUND_COLOR_LIGHTS=['lightskyblue']
GAP_SIZE=189 #
BIRD_RADIUS=3.
PIPE_SPEED=- ( ) ?
class Game():
    def __init__(self):
        self.screen_size=( )

    def reset_game_vars():
        global current_scor e
        # set to zero and other initial states.
# Main game loop:
while running :
    for event in pygame.event.get() :
        if quit ... etc

pygame.quit()
print("Code is simplified. Due time constraints, full working version requires further implementation.")

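You might think this is only a problem with the Q4_K_M quant, and that the BF16 full-precision version should be fine. It is not. Even with the BF16 full-precision model, generation fails in the same way if you use a Repetition Penalty without the Unsloth team's --samplers "top_k;top_p;min_p;temperature;dry;typ_p;xtc" fix.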
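To triage many runs like the ones above, a rough automatic check can help. The hypothetical sketch below pulls the last python fenced block out of a saved output file and asks Python's built-in compile() whether it parses. It flags failures like the repeat-penalty-1.5 output, but not runtime errors such as the pipes NameError, which only appear when the code actually runs.

```python
import re
from pathlib import Path
from typing import Optional

FENCE = "`" * 3  # three backticks, built at runtime so this example nests cleanly
BLOCK_RE = re.compile(FENCE + r"python\n(.*?)" + FENCE, re.DOTALL)


def last_python_block(text: str) -> Optional[str]:
    """Return the body of the last python fenced block in text, if any."""
    blocks = BLOCK_RE.findall(text)
    return blocks[-1] if blocks else None


def compiles(source: str) -> bool:
    """True if source parses as Python; says nothing about runtime errors."""
    try:
        compile(source, "<generated>", "exec")
    except SyntaxError:
        return False
    return True


def check_output_file(path: str) -> str:
    """Summarize a saved run such as Q4_K_M_no_samplers.txt."""
    text = Path(path).read_text(encoding="utf-8", errors="replace")
    code = last_python_block(text)
    if code is None:
        return "no python block found"
    return "parses" if compiles(code) else "syntax error"
```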

🤔 <think> token not shown?

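Some users have reported that, because the chat template adds the <think> token by default, some systems do not output the thinking trace correctly. In that case you need to manually edit the Jinja template from: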

{%- if tools %} {{- '<|im_start|>system\n' }} {%- if messages[0]['role'] == 'system' %} {{- messages[0]['content'] }} {%- else %} {{- '' }} {%- endif %} {{- "\n\n# Tools\n\nYou may call one or more functions to assist with the user query.\n\nYou are provided with function signatures within <tools></tools> XML tags:\n<tools>" }} {%- for tool in tools %} {{- "\n" }} {{- tool | tojson }} {%- endfor %} {{- "\n</tools>\n\nFor each function call, return a json object with function name and arguments within <tool_call></tool_call> XML tags:\n<tool_call>\n{\"name\": <function-name>, \"arguments\": <args-json-object>}\n</tool_call><|im_end|>\n" }} {%- else %} {%- if messages[0]['role'] == 'system' %} {{- '<|im_start|>system\n' + messages[0]['content'] + '<|im_end|>\n' }} {%- endif %} {%- endif %} {%- for message in messages %} {%- if (message.role == "user") or (message.role == "system" and not loop.first) %} {{- '<|im_start|>' + message.role + '\n' + message.content + '<|im_end|>' + '\n' }} {%- elif message.role == "assistant" and not message.tool_calls %} {%- set content = message.content.split('</think>')[-1].lstrip('\n') %} {{- '<|im_start|>' + message.role + '\n' + content + '<|im_end|>' + '\n' }} {%- elif message.role == "assistant" %} {%- set content = message.content.split('</think>')[-1].lstrip('\n') %} {{- '<|im_start|>' + message.role }} {%- if message.content %} {{- '\n' + content }} {%- endif %} {%- for tool_call in message.tool_calls %} {%- if tool_call.function is defined %} {%- set tool_call = tool_call.function %} {%- endif %} {{- '\n<tool_call>\n{"name": "' }} {{- tool_call.name }} {{- '", "arguments": ' }} {{- tool_call.arguments | tojson }} {{- '}\n</tool_call>' }} {%- endfor %} {{- '<|im_end|>\n' }} {%- elif message.role == "tool" %} {%- if (loop.index0 == 0) or (messages[loop.index0 - 1].role != "tool") %} {{- '<|im_start|>user' }} {%- endif %} {{- '\n<tool_response>\n' }} {{- message.content }} {{- '\n</tool_response>' }} {%- if loop.last or 
(messages[loop.index0 + 1].role != "tool") %} {{- '<|im_end|>\n' }} {%- endif %} {%- endif %} {%- endfor %} {%- if add_generation_prompt %} {{- '<|im_start|>assistant\n<think>\n' }} {%- endif %}

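to a version with the trailing <think>\n removed. The model will then have to add <think>\n itself during inference, which may not always succeed. DeepSeek has also changed all of its models to add a <think> token by default, forcing the model into reasoning mode.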

In other words, change {%- if add_generation_prompt %} {{- '<|im_start|>assistant\n<think>\n' }} {%- endif %} to {%- if add_generation_prompt %} {{- '<|im_start|>assistant\n' }} {%- endif %}, i.e. remove <think>\n.

Full Jinja template with the <think>\n part removed:

{%- if tools %} {{- '<|im_start|>system\n' }} {%- if messages[0]['role'] == 'system' %} {{- messages[0]['content'] }} {%- else %} {{- '' }} {%- endif %} {{- "\n\n# Tools\n\nYou may call one or more functions to assist with the user query.\n\nYou are provided with function signatures within <tools></tools> XML tags:\n<tools>" }} {%- for tool in tools %} {{- "\n" }} {{- tool | tojson }} {%- endfor %} {{- "\n</tools>\n\nFor each function call, return a json object with function name and arguments within <tool_call></tool_call> XML tags:\n<tool_call>\n{\"name\": <function-name>, \"arguments\": <args-json-object>}\n</tool_call><|im_end|>\n" }} {%- else %} {%- if messages[0]['role'] == 'system' %} {{- '<|im_start|>system\n' + messages[0]['content'] + '<|im_end|>\n' }} {%- endif %} {%- endif %} {%- for message in messages %} {%- if (message.role == "user") or (message.role == "system" and not loop.first) %} {{- '<|im_start|>' + message.role + '\n' + message.content + '<|im_end|>' + '\n' }} {%- elif message.role == "assistant" and not message.tool_calls %} {%- set content = message.content.split('</think>')[-1].lstrip('\n') %} {{- '<|im_start|>' + message.role + '\n' + content + '<|im_end|>' + '\n' }} {%- elif message.role == "assistant" %} {%- set content = message.content.split('</think>')[-1].lstrip('\n') %} {{- '<|im_start|>' + message.role }} {%- if message.content %} {{- '\n' + content }} {%- endif %} {%- for tool_call in message.tool_calls %} {%- if tool_call.function is defined %} {%- set tool_call = tool_call.function %} {%- endif %} {{- '\n<tool_call>\n{"name": "' }} {{- tool_call.name }} {{- '", "arguments": ' }} {{- tool_call.arguments | tojson }} {{- '}\n</tool_call>' }} {%- endfor %} {{- '<|im_end|>\n' }} {%- elif message.role == "tool" %} {%- if (loop.index0 == 0) or (messages[loop.index0 - 1].role != "tool") %} {{- '<|im_start|>user' }} {%- endif %} {{- '\n<tool_response>\n' }} {{- message.content }} {{- '\n</tool_response>' }} {%- if loop.last or 
(messages[loop.index0 + 1].role != "tool") %} {{- '<|im_end|>\n' }} {%- endif %} {%- endif %} {%- endfor %} {%- if add_generation_prompt %} {{- '<|im_start|>assistant\n' }} {%- endif %}
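If you load the template from tokenizer_config.json (or from a tokenizer object), the edit amounts to a single string replacement. In the stored template, the \n inside the Jinja string literals is a literal backslash followed by n, hence the raw strings below. The function name is hypothetical; it raises an error instead of silently doing nothing when the expected snippet is missing.

```python
# The generation-prompt snippet before and after the edit described above.
OLD_GEN_PROMPT = r"{{- '<|im_start|>assistant\n<think>\n' }}"
NEW_GEN_PROMPT = r"{{- '<|im_start|>assistant\n' }}"


def strip_think_prefill(chat_template: str) -> str:
    """Remove the <think>\\n prefill from a QwQ-style chat template string."""
    if OLD_GEN_PROMPT not in chat_template:
        raise ValueError("generation prompt with <think>\\n not found; template may differ")
    return chat_template.replace(OLD_GEN_PROMPT, NEW_GEN_PROMPT)
```

With Hugging Face Transformers, something like tokenizer.chat_template = strip_think_prefill(tokenizer.chat_template) should apply the change in memory.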

Extra Notes

The Unsloth team initially suspected the following possible causes:

- QwQ's context length may not be natively 128K, but rather 32K extended with YaRN. For example, the readme at https://huggingface.co/Qwen/QwQ-32B shows:

{
  ...,
  "rope_scaling": {
    "factor": 4.0,
    "original_max_position_embeddings": 32768,
    "type": "yarn"
  }
}

The Unsloth team tried overriding llama.cpp's YaRN handling with the following arguments, but the problem persisted:

--override-kv qwen2.context_length=int:131072 \
--override-kv qwen2.rope.scaling.type=str:yarn \
--override-kv qwen2.rope.scaling.factor=float:4 \
--override-kv qwen2.rope.scaling.original_context_length=int:32768 \
--override-kv qwen2.rope.scaling.attn_factor=float:1.13862943649292 \

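- The Unsloth team also suspected that the RMS Layernorm epsilon might be wrong, perhaps 1e-6 rather than 1e-5. For example, this link has rms_norm_eps=1e-06, while this link has rms_norm_eps=1e-05. The team overrode this value too, but the problem was still not solved: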

--override-kv qwen2.attention.layer_norm_rms_epsilon=float:0.000001 \

- With help from @kalomaze, the Unsloth team also checked whether the tokenizer IDs in llama.cpp and Transformers match. They do, so mismatched tokenizer IDs are not the cause either.
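The override values in the first item above follow from simple arithmetic on the readme's rope_scaling block: the extended context is 32768 × 4 = 131072 tokens, and the attn_factor of 1.13862943649292 agrees, to float32 precision, with YaRN's attention-scaling formula 0.1·ln(4) + 1. We assume that is where the value comes from; the function names below are hypothetical.

```python
import math

# Values from the rope_scaling block in the QwQ-32B readme quoted above.
ORIGINAL_CONTEXT = 32768
FACTOR = 4.0


def yarn_context_length(original: int, factor: float) -> int:
    """Context length after YaRN extension: original length times the factor."""
    return int(original * factor)


def yarn_attn_factor(factor: float) -> float:
    """YaRN's attention scaling term, 0.1 * ln(s) + 1 (1.0 when s <= 1)."""
    return 1.0 if factor <= 1.0 else 0.1 * math.log(factor) + 1.0


print(yarn_context_length(ORIGINAL_CONTEXT, FACTOR))  # 131072
print(round(yarn_attn_factor(FACTOR), 6))             # 1.138629
```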

The Unsloth team's experimental results:

- file_BF16_no_samplers.txt (61KB): BF16 full precision, without the sampler fix

- file_BF16_yes_samplers.txt (55KB): BF16 full precision, with the sampler fix

- final_Q4_K_M_no_samplers.txt (71KB): Q4_K_M, without the sampler fix

- final_Q4_K_M_yes_samplers.txt (65KB): Q4_K_M, with the sampler fix

✏️ Tokenizer Bug Fixes

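- The Unsloth team also found some issues that specifically affect fine-tuning! The EOS token is correct, but "<|vision_pad|>" is a more sensible choice for the PAD token. The team has updated this in https://huggingface.co/unsloth/QwQ-32B/blob/main/tokenizer_config.json. The original values were: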

"eos_token": "<|im_end|>",

"pad_token": "<|endoftext|>",

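The change itself is a one-line edit to tokenizer_config.json. Below is a minimal standard-library sketch, with hypothetical helper names, that rewrites pad_token in a local copy of that file. It assumes <|vision_pad|> already exists in the vocabulary, as we believe it does in the Qwen tokenizer files. If you load the tokenizer with Hugging Face Transformers instead, assigning tokenizer.pad_token = "<|vision_pad|>" should have the same effect in memory.

```python
import json
from pathlib import Path

RECOMMENDED_PAD = "<|vision_pad|>"


def with_pad_token(config: dict, pad_token: str = RECOMMENDED_PAD) -> dict:
    """Return a copy of a tokenizer_config.json dict with a new pad token."""
    updated = dict(config)  # shallow copy; the original dict is left untouched
    updated["pad_token"] = pad_token
    return updated


def patch_tokenizer_config(path: str, pad_token: str = RECOMMENDED_PAD) -> None:
    """Rewrite pad_token in a local tokenizer_config.json file in place."""
    file = Path(path)
    config = json.loads(file.read_text(encoding="utf-8"))
    file.write_text(
        json.dumps(with_pad_token(config, pad_token), indent=2, ensure_ascii=False),
        encoding="utf-8",
    )
```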
🛠️ Dynamic 4-bit Quants

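The Unsloth team also uploaded dynamic 4-bit quants, which are considerably more accurate than naive 4-bit quants. The figure below shows an error analysis of QwQ's activations and weights under quantization: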

The dynamic 4-bit quants are available at: https://huggingface.co/unsloth/QwQ-32B-unsloth-bnb-4bit.

Since vLLM 0.7.3 (released February 20, 2025: https://github.com/vllm-project/vllm/releases/tag/v0.7.3), vLLM can load Unsloth's dynamic 4-bit quants!
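A minimal sketch of loading the dynamic 4-bit upload in vLLM 0.7.3 or newer with the recommended sampling settings. The quantization="bitsandbytes" and load_format="bitsandbytes" arguments follow vLLM's bitsandbytes documentation as we understand it; newer versions may detect the format automatically. vLLM is imported inside the functions so the settings can be inspected without a GPU, and the function names and token limits are our own choices.

```python
MODEL_ID = "unsloth/QwQ-32B-unsloth-bnb-4bit"

# Recommended sampling settings from the top of this article.
SAMPLING_KWARGS = {
    "temperature": 0.6,
    "top_k": 40,
    "top_p": 0.95,
    "min_p": 0.1,
    "repetition_penalty": 1.0,  # 1.0 = disabled
    "max_tokens": 16384,        # hypothetical cap; reasoning traces can be long
}


def load_model(model_id: str = MODEL_ID):
    """Load the pre-quantized bitsandbytes checkpoint (needs vLLM and a GPU)."""
    from vllm import LLM  # lazy import so this module loads without vLLM

    return LLM(
        model=model_id,
        quantization="bitsandbytes",
        load_format="bitsandbytes",
        max_model_len=32768,
    )


def ask(llm, question: str) -> str:
    """Send one user message through the model's chat template."""
    from vllm import SamplingParams

    outputs = llm.chat(
        [{"role": "user", "content": question}],
        SamplingParams(**SAMPLING_KWARGS),
    )
    return outputs[0].outputs[0].text
```

Usage would look like llm = load_model() followed by print(ask(llm, "Create a Flappy Bird game in Python.")).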

All GGUF models are available at https://huggingface.co/unsloth/QwQ-32B-GGUF!

© Copyright notice

The copyright of this article belongs to AI分享圈. Do not reproduce without permission.
