Vision is All You Need: Building an Intelligent Document Retrieval System Using Visual Language Models (Vision RAG)

General Introduction

Vision-is-all-you-need is a demonstration project of a visual RAG (Retrieval-Augmented Generation) system that applies vision language models (VLMs) to document processing. Unlike traditional text chunking methods, the system uses a vision language model to process the page images of PDF files directly, converting them into vectors for storage. It uses ColPali as the core vision language model, the Qdrant vector database for efficient retrieval, and an OpenAI model (GPT-4o or GPT-4o-mini) for question answering. The project covers the whole process, from PDF import and image conversion through vector storage to retrieval, and provides both an API and a user-friendly front-end interface.

Demo address: https://softlandia-ltd-prod--vision-is-all-you-need-web.modal.run/

Function List

- PDF page embedding: Converts pages of a PDF file into images and embeds them as vectors through a visual language model.

- Vector Database Storage: Uses Qdrant as the vector database to store the embedded image vectors.

- Search: Finds the stored page embeddings most similar to the user's query and generates a response from the matching pages.

- API Interface: Provides a RESTful API for file upload, query, and retrieval operations.

- Front-end Interaction: A React front-end interacts with the API to provide a user-friendly experience.

Usage Guide

Installation process

- Install Python 3.11 or later, then install and set up Modal:

pip install modal

modal setup

- Configure environment variables: create a .env file and add the following:

OPENAI_API_KEY=your_openai_api_key

HF_TOKEN=your_huggingface_token

- Run the example:

modal serve main.py

Usage example

- Upload PDF files: Open your browser, go to the URL provided by Modal, and append /docs to it. Click the POST /collections endpoint, select the Try it out button, choose the PDF file to upload, and execute the request.

- Query similar pages: Use the POST /search endpoint. It sends the matching page images and the query to the OpenAI API and returns the response.

Front-end development

- Install Node.js, then install the dependencies and start the development server:

cd frontend

npm install

npm run dev

- Configure the front-end environment: edit the .env.development file and add the backend URL:

VITE_BACKEND_URL=your_backend_url

- Launch the front end:

npm run dev

Detailed Operation Procedure

- PDF page embedding:

  - Use pypdfium2 to convert PDF pages to images.

  - Pass each image to a vision language model (e.g., ColPali) to obtain its embedding vectors.

  - Store the embedding vectors in the Qdrant vector database.

- Search:

  - The user enters a query, and the vision language model produces the query embedding.

  - Similar embedding vectors are searched for in the vector database.

  - The query and the best-matching page image are passed to a model (e.g., GPT-4o) to generate a response.

- API usage:

  - Upload PDF files: upload files via the POST /collections endpoint.

  - Query similar pages: send a query to the POST /search endpoint and receive a response.

- Front-end interaction:

  - Use the React front-end to interact with the API.

  - It provides file upload, query input, and result display.
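The data flow of the embedding and search steps above can be sketched as follows. This is only an illustration under stated assumptions, not the project's code: `VisionRagPipeline` and its fields are hypothetical names, an in-memory list stands in for Qdrant, and the three callables stand in for ColPali (page and query embedding) and the OpenAI model (answer generation).

```python
from dataclasses import dataclass, field
from typing import Callable

Vector = list[float]


@dataclass
class VisionRagPipeline:
    # Hypothetical hooks; in the real project these roles are played by
    # ColPali (embedding) and an OpenAI model (generation).
    embed_page: Callable[[bytes], list[Vector]]   # page image -> multi-vector embedding
    embed_query: Callable[[str], list[Vector]]    # query text -> multi-vector embedding
    generate: Callable[[str, bytes], str]         # (query, page image) -> answer
    store: list[tuple[bytes, list[Vector]]] = field(default_factory=list)

    def index(self, page_images: list[bytes]) -> None:
        """Embed every page image and keep it next to its vectors."""
        for image in page_images:
            self.store.append((image, self.embed_page(image)))

    def search(self, query: str) -> bytes:
        """Return the page image whose vectors best match the query."""
        query_vectors = self.embed_query(query)

        def score(entry: tuple[bytes, list[Vector]]) -> float:
            _, page_vectors = entry
            # Sum, over query vectors, of the best dot product with any page vector.
            return sum(
                max(sum(q * p for q, p in zip(qv, pv)) for pv in page_vectors)
                for qv in query_vectors
            )

        return max(self.store, key=score)[0]

    def answer(self, query: str) -> str:
        """Retrieve the best page and hand it to the generator with the query."""
        return self.generate(query, self.search(query))


# Stand-ins so the sketch runs without a GPU or API key (all values invented).
fake_vectors = {b"page-1": [[1.0, 0.0]], b"page-2": [[0.0, 1.0]]}
pipeline = VisionRagPipeline(
    embed_page=lambda image: fake_vectors[image],
    embed_query=lambda query: [[0.0, 1.0]],
    generate=lambda query, image: f"answer to {query!r} from {image.decode()}",
)
pipeline.index([b"page-1", b"page-2"])
result = pipeline.answer("What is on page two?")
```

Keeping the three model calls behind plain callables makes it easy to try the flow locally before wiring in the real models.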

Reference article: Building a RAG? Tired of chunking? Maybe vision is all you need!

At the heart of most modern generative AI (GenAI) solutions is a method known as Retrieval-Augmented Generation, which software engineers in the field of applied AI usually just call "RAG". With RAG, language models can answer questions based on an organization's proprietary data.

The first letter, R, stands for retrieval and refers to the search process. When a user asks a GenAI bot a question, the search engine in the background should find exactly the material relevant to the question in order to generate a perfect, hallucination-free answer. A and G refer to feeding the retrieved data into the language model (augmentation) and to generating the final answer, respectively.

In this article, we focus on the retrieval process, as it is the most critical, time-consuming, and challenging part of implementing a RAG architecture. We first explore the general concept of retrieval and then introduce the traditional chunk-based RAG retrieval mechanism. The second half of the article focuses on a new RAG approach that relies on image data for both retrieval and generation.

A Brief History of Information Retrieval

Google and other major search engine companies have been trying to solve the problem of information retrieval for decades - "trying" being the key word. Information retrieval is still not as simple as expected. One reason is that humans process information differently from machines. Translating natural language into sensible search queries across diverse data sets is not easy. Advanced users of Google may be familiar with all the possible techniques for manipulating the search engine. But the process is still cumbersome, and the search results can be quite unsatisfactory.

With advances in language models, information retrieval suddenly has a natural language interface. However, language models perform poorly at providing fact-based information, because their training data reflects a snapshot of the world at the time of training. In addition, knowledge is compressed in the model, and the well-known problem of hallucination is unavoidable. After all, language models are not search engines but reasoning machines.

The advantage of a language model is that it can be given data samples and instructions and asked to respond based on these inputs. This is the typical use case for ChatGPT and similar conversational AI interfaces. But people are lazy, and with the same amount of effort you might have accomplished the task yourself. That's why we need RAG: we can simply ask an applied AI solution a question and get an answer based on precise information. At least, that's the ideal situation in a world where search is perfect.

How does retrieval work in traditional RAG?

RAG search methods are as varied as the RAG implementations themselves. Search is always an optimization problem, and there is no generic solution that can be applied to all scenarios: the AI architecture must be tailored to each specific solution, be it search or other functionality.

Nevertheless, the typical baseline solution is the so-called chunking technique. In this approach, the information stored in the database (usually documents) is split into small chunks, approximately the size of a paragraph. Each chunk is then converted into a numeric vector by means of an embedding model associated with a language model. The generated numeric vectors are stored in a specialized vector database.

A simple vector database search is implemented as follows:

- The user asks a question.

- An embedding vector is generated from the question.

- A semantic search is performed in the vector database.

- In semantic search, the proximity between the question vector and the vectors in the database is measured mathematically, taking into account the context and meaning of each text chunk.

- The vector search returns, for example, the 10 best-matching text chunks.

The retrieved text chunks are then inserted into the context (the prompt) of the language model, and the model is asked to generate the answer to the original question. These two steps after retrieval are the A and G phases of RAG.
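The retrieval and augmentation steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production setup: the three-dimensional vectors are invented stand-ins for what a real embedding model would return, cosine similarity is assumed as the proximity measure, and `cosine_similarity`, `top_k`, and `build_prompt` are hypothetical helper names.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec, index, k=10):
    """Return the k chunks whose vectors are closest to the query vector."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in index]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


def build_prompt(question, chunks):
    """Insert the retrieved chunks into the language model's context."""
    context = "\n\n".join(chunks)
    return f"Answer using only this context:\n\n{context}\n\nQuestion: {question}"


# Toy index of (chunk text, embedding) pairs. Real embeddings have hundreds
# or thousands of dimensions; these values are made up for illustration.
index = [
    ("Invoices are due within 30 days.", [0.9, 0.1, 0.0]),
    ("The office is closed on public holidays.", [0.0, 0.2, 0.9]),
    ("Late payments incur a 5% fee.", [0.8, 0.3, 0.1]),
]
query_vec = [1.0, 0.2, 0.0]  # pretend embedding of the question below
best = top_k(query_vec, index, k=2)
prompt = build_prompt("When is payment due?", best)
```

The resulting `prompt` string is what would be sent to the language model in the generation phase.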

Chunking techniques and other pre-processing before indexing can have a significant impact on search quality. There are dozens of such preprocessing methods, and the results can also be reordered or filtered after the search (known as reranking). In addition to vector search, traditional keyword search or any other programming interface for retrieving structured information can be used. Examples include text-to-SQL and text-to-API techniques, which generate new SQL or API queries based on the user's question. For unstructured data, chunking and vector search are the most commonly used retrieval techniques.

Chunking is not without its problems. Dealing with different file and data formats is cumbersome, and separate chunking code must be written for each format. While off-the-shelf software libraries are available, they are not perfect. Additionally, the size of the chunks and of their overlapping areas must be considered. Next, you run into the challenge of images, charts, tables, and other data, where understanding the visual information and its surrounding context (such as headings, font sizes, and other subtle visual cues) is critical. These cues are completely lost in chunking.

What if chunking were completely unnecessary, and search worked like a human browsing through entire pages of a document?
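To make the chunk size and overlap trade-off concrete, here is a small sketch of a sliding-window chunker. It is a simplification under stated assumptions: it splits on raw characters, whereas real pipelines usually split on tokens, sentences, or layout elements, and `chunk_text` is a hypothetical helper name.

```python
def chunk_text(text, chunk_size=200, overlap=50):
    """Split text into fixed-size character windows that overlap.

    Each window starts (chunk_size - overlap) characters after the previous
    one, so neighbouring chunks share `overlap` characters of context.
    """
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reaches the end of the text
    return chunks


sample = "Model | Price\nA100 | 10\nH100 | 25"
pieces = chunk_text(sample, chunk_size=16, overlap=4)
```

With these settings the table header ends up in a different chunk than the last row, which is exactly the kind of context loss described above.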

Images retain visual information

Image-based search methods have become possible due to the development of advanced multimodal models. An exemplary AI solution based on image data is Tesla's self-driving solution, which relies entirely on cameras. The idea behind the approach is that humans perceive their surroundings primarily through vision.

The same concept applies to RAG. Instead of chunking, entire pages are indexed directly as images, that is, in the same format in which a human would view them. For example, each page of a PDF document is fed as an image into a specialized AI model (e.g., ColPali), which creates vector representations based on both the visual content and the text. These vectors are then added to the vector database. We can call this new RAG architecture Vision Retrieval-Augmented Generation, or V-RAG.

The advantage of this approach may be higher retrieval accuracy than traditional methods, because the multimodal model generates vector representations that take into account both textual and visual elements. The search results are entire document pages, which are then fed as images into a powerful multimodal model such as GPT-4o. The model can directly reference information in charts or tables.

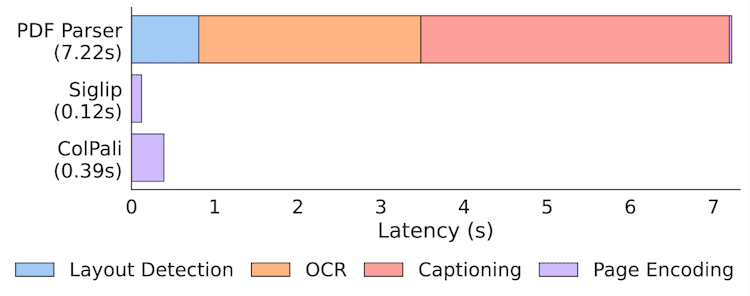

V-RAG eliminates the need to first extract complex structures (such as diagrams or tables) into text, reconstruct that text into a new format, store it in a vector database, retrieve it, rerank it to form a coherent prompt, and finally generate an answer. This is a significant advantage when dealing with old manuals, documents with a large number of tables, and any human-centered document format in which the content is more than just plain text. Indexing is also much faster than traditional layout detection and OCR processes.

Indexing speed statistics from the ColPali paper

Nonetheless, extracting text from documents is still valuable and can complement image search. However, chunking will soon be just one of many options for implementing an AI search system.

Vision-RAG in Practice: PaliGemma, ColPali and Vector Databases

Unlike traditional text-based RAG, V-RAG implementations still require access to specialized models and GPU computation. The best way to implement V-RAG is to use a model developed specifically for this purpose: ColPali.

ColPali is based on the multi-vector search approach introduced by the ColBERT model and on Google's multimodal PaliGemma language model. ColPali is a multimodal retrieval model, which means that it understands not only the textual content but also the visual elements of a document. In effect, the developers of ColPali used PaliGemma to extend ColBERT's text-based search approach to the visual domain.

When creating the embeddings, ColPali divides each image into a 32 x 32 grid, which yields 1024 patches per image, each represented by a 128-dimensional vector. The total number of vectors per image is 1030, because each image is also accompanied by a "describe the image" instruction whose tokens are embedded as well.

The user's text query is converted into the same embedding space so that its token vectors can be compared with the patch vectors during the search. The search itself is based on the so-called MaxSim method, which is described in detail in this article. This search method has been implemented in many vector databases that support multi-vector search.
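The numbers above translate directly into storage cost per page. The short calculation below is a back-of-the-envelope sketch; the 4 bytes per value (float32) is an assumption, not something stated in the text, and `page_embedding_bytes` is a hypothetical helper name.

```python
GRID_SIDE = 32             # each page image is divided into a 32 x 32 grid
PATCHES = GRID_SIDE ** 2   # 1024 image patches
VECTORS_PER_PAGE = 1030    # total quoted above (patches plus instruction tokens)
DIM = 128                  # dimensions per vector
BYTES_PER_VALUE = 4        # assumption: values stored as float32


def page_embedding_bytes(vectors=VECTORS_PER_PAGE, dim=DIM, bytes_per_value=BYTES_PER_VALUE):
    """Storage needed for the multi-vector embedding of one page."""
    return vectors * dim * bytes_per_value


per_page = page_embedding_bytes()
print(f"{VECTORS_PER_PAGE * DIM} values, {per_page / 1024:.0f} KiB per page")
print(f"{per_page * 1000 / 1024 ** 2:.0f} MiB for a 1000-page corpus")
```

Under that assumption a single page needs about 515 KiB, which is why embedding size becomes a practical concern for large document collections.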
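The MaxSim scoring idea can be sketched in plain Python as follows. This is a toy illustration, not the implementation used by ColPali or by any vector database: the two-dimensional vectors are invented, the dot product is assumed as the similarity measure, and `maxsim` and `rank_pages` are hypothetical helper names.

```python
def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def maxsim(query_vectors, page_vectors):
    """Late-interaction score: for every query token vector, take its best
    match among the page's vectors, then sum those maxima."""
    return sum(max(dot(q, p) for p in page_vectors) for q in query_vectors)


def rank_pages(query_vectors, pages):
    """Return page ids sorted by descending MaxSim score."""
    scores = {page_id: maxsim(query_vectors, vecs) for page_id, vecs in pages.items()}
    return sorted(scores, key=scores.get, reverse=True)


# Toy data: one vector per query token, a handful of vectors per page.
# With ColPali, each page would have roughly 1030 vectors of 128 dimensions.
query = [[1.0, 0.0], [0.0, 1.0]]
pages = {
    "page-1": [[0.9, 0.1], [0.2, 0.8]],
    "page-2": [[0.5, 0.5], [0.4, 0.3]],
}
ranking = rank_pages(query, pages)
```

Because every query token is matched against every page vector, the cost grows with the number of vectors per page, which is the price paid for the finer-grained matching.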

Vision is All You Need - V-RAG Demo and Code

We have created a V-RAG demo, and the code is available in Softlandia's GitHub repository, vision-is-all-you-need. You can also find other applied AI demos under our account!

Running ColPali requires a GPU with a lot of memory, so the easiest way to run it is on a cloud platform that allows the use of GPUs. For this reason, we chose the excellent Modal platform, which makes serverless use of GPUs simple and affordable.

Unlike most academic Jupyter Notebook demos found online, our Vision is All You Need demo provides a hands-on experience. You can clone the repository, deploy it yourself, and run the full pipeline on cloud GPUs in minutes for free. This end-to-end applied AI engineering example offers a real-world experience that most other demos can't match.

In the demo, we also used the in-memory version of Qdrant. Note that when you run the demo, the indexed data disappears once the underlying container ceases to exist. Qdrant has supported multi-vector search since version 1.10.0. The demo only supports PDF files, whose pages are converted to images with the pypdfium2 library. In addition, we used the transformers library and colpali-engine, created by the ColPali developers, to run the ColPali model. Other libraries, such as opencv-python-headless (which is my work, by the way), are also in use.

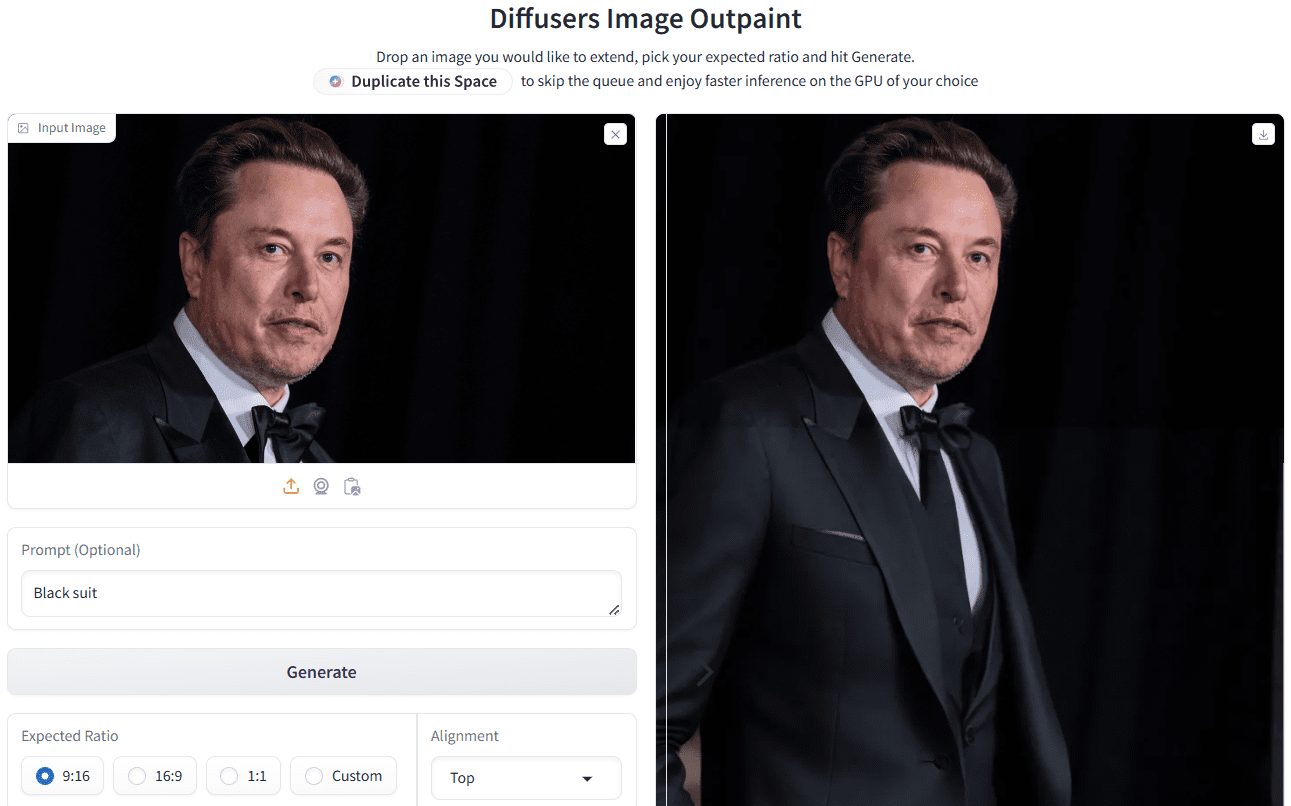

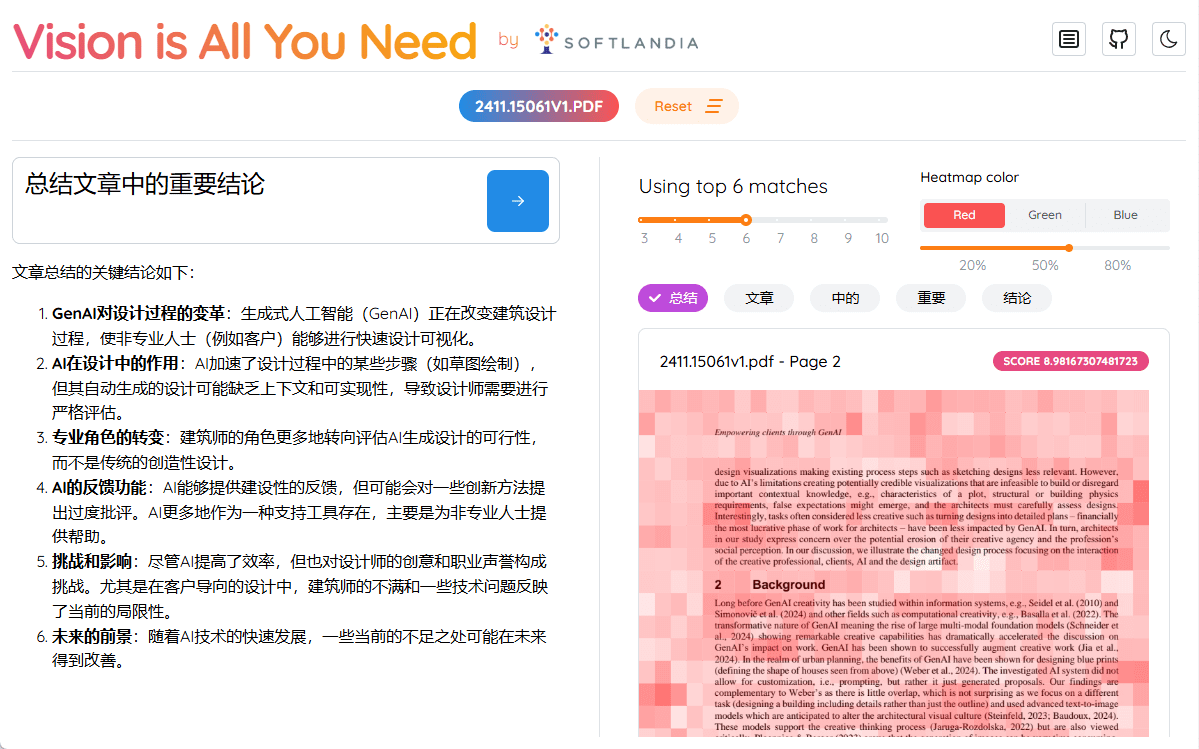

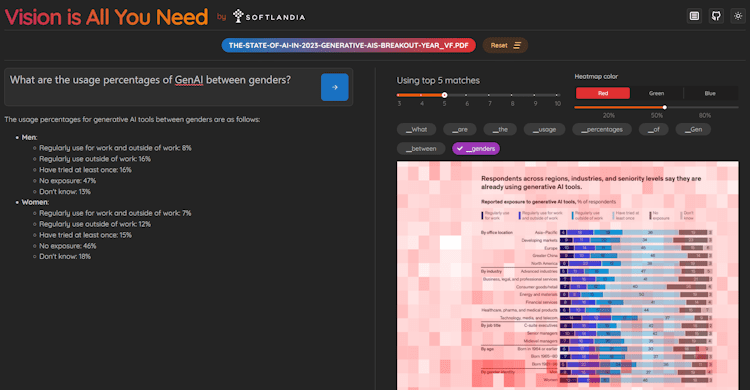

The demo provides an HTTP interface for indexing and asking questions. On top of that, we built a simple user interface using React. The UI also visualizes the attention map of each token, making it easy to see which parts of the image the ColPali model considers important.

Screenshots of the Vision is All You Need demo

Is vision really what you need?

Despite the title of the demo, search models like ColPali are not yet good enough, especially for multilingual data. These models are usually trained on a limited number of examples, which are almost always PDF files of some specific type. As a result, the demo only supports PDF files.

Another issue is the size of the image data and of the embeddings computed from it. These take up considerable space, and searching large datasets consumes far more compute than traditional single-vector search. The problem can be partially solved by quantizing the embeddings into a smaller form (even down to binary). However, this loses information and slightly reduces search accuracy. Quantization has not been implemented in our demo yet, as optimization is not important for a demo. Note also that Qdrant does not yet directly support binary vectors. Quantization can be enabled in Qdrant, however, in which case Qdrant optimizes the vectors internally. MaxSim based on the Hamming distance is not yet supported.
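To illustrate what binary quantization and a Hamming-based MaxSim mean, here is a plain-Python sketch. It is not Qdrant's quantization and not part of the demo: sign-based binarization is one simple scheme chosen for illustration, the example values are invented, and `binarize`, `hamming_distance`, and `hamming_maxsim` are hypothetical helper names.

```python
def binarize(vector):
    """Keep only the sign of each component, packed into an int bitmask
    (first component becomes the most significant bit)."""
    bits = 0
    for value in vector:
        bits = (bits << 1) | (1 if value > 0 else 0)
    return bits


def hamming_distance(a, b):
    """Number of bit positions in which two bitmasks differ."""
    return bin(a ^ b).count("1")


def hamming_maxsim(query_codes, page_codes, dim):
    """MaxSim on binary codes, using (dim - Hamming distance) as similarity."""
    return sum(
        max(dim - hamming_distance(q, p) for p in page_codes)
        for q in query_codes
    )


vec_a = [0.12, -0.40, 0.33, -0.05]
vec_b = [0.90, -0.01, 0.02, 0.70]
code_a = binarize(vec_a)  # bit pattern 1010
code_b = binarize(vec_b)  # bit pattern 1011
distance = hamming_distance(code_a, code_b)
```

A 128-dimensional float32 vector takes 512 bytes, while its binary code takes 16 bytes. The example also shows the information loss: `vec_a` and `vec_b` differ considerably in magnitude, yet their codes differ in a single bit.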

Therefore, it is still recommended to perform initial filtering in conjunction with traditional keyword-based searches before using ColPali for final page retrieval.
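Such a two-stage setup could look roughly like the sketch below. It assumes that text has been extracted for each page alongside its image embedding; the page data and vectors are invented, a shared-word check stands in for a real keyword search engine, and `keyword_filter` and `two_stage_search` are hypothetical helper names.

```python
import re


def tokenize(text):
    """Lowercased set of word tokens."""
    return set(re.findall(r"\w+", text.lower()))


def keyword_filter(query, pages):
    """Stage 1: keep pages whose extracted text shares a word with the query."""
    terms = tokenize(query)
    return [page for page in pages if terms & tokenize(page["text"])]


def maxsim(query_vectors, page_vectors):
    """Sum over query vectors of the best dot product with any page vector."""
    return sum(
        max(sum(q * p for q, p in zip(qv, pv)) for pv in page_vectors)
        for qv in query_vectors
    )


def two_stage_search(query, query_vectors, pages, k=3):
    """Stage 2: rank only the keyword-filtered candidates by MaxSim."""
    candidates = keyword_filter(query, pages)
    ranked = sorted(
        candidates,
        key=lambda page: maxsim(query_vectors, page["vectors"]),
        reverse=True,
    )
    return [page["id"] for page in ranked[:k]]


pages = [
    {"id": "manual-p1", "text": "Pump maintenance schedule", "vectors": [[0.9, 0.1]]},
    {"id": "manual-p2", "text": "Pump wiring diagram", "vectors": [[0.2, 0.9]]},
    {"id": "hr-p1", "text": "Holiday policy", "vectors": [[0.5, 0.5]]},
]
hits = two_stage_search("pump wiring", [[0.0, 1.0]], pages)
```

The expensive multi-vector comparison runs only on the pages that survive the cheap keyword stage, at the cost of missing pages whose relevant content is purely visual.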

Multimodal search models will continue to evolve, as will embedding models that traditionally generate text embeddings. I am sure that OpenAI or a similar organization will soon release an embedding model similar to ColPali that will push search accuracy to a new level. However, this will upend all current systems built on chunking and traditional vector search methods.

Without a Flexible AI Architecture, You'll Fall Behind

Language models, search methods, and other innovations are being released at an accelerated rate in the AI space. More important than these innovations themselves is the ability to adopt them quickly, which provides a significant competitive advantage to companies that are faster than their competitors.

As a result, the AI architecture of your software, including the search function, must be flexible and scalable so that it can quickly adapt to the latest technological innovations. As the pace of development accelerates, it is critical that the core architecture of the system is not tied to a single solution but supports a diverse range of search methods, whether traditional text search, multimodal image search, or entirely new search models.
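One way to keep the search layer swappable is to have the rest of the system depend only on a small interface. The sketch below is one possible design, not a prescribed one: `Retriever`, `KeywordRetriever`, and `HybridRetriever` are hypothetical names, and a vision-based retriever would simply be another class with the same `search` method.

```python
from typing import Protocol


class Retriever(Protocol):
    def search(self, query: str, k: int) -> list[str]:
        """Return the ids of up to k best-matching documents."""
        ...


class KeywordRetriever:
    """Simple word-overlap search over document texts."""

    def __init__(self, docs: dict[str, str]) -> None:
        self.docs = docs

    def search(self, query: str, k: int) -> list[str]:
        terms = set(query.lower().split())
        scored = [
            (len(terms & set(text.lower().split())), doc_id)
            for doc_id, text in self.docs.items()
        ]
        matches = [pair for pair in scored if pair[0] > 0]
        matches.sort(key=lambda pair: pair[0], reverse=True)
        return [doc_id for _, doc_id in matches[:k]]


class HybridRetriever:
    """Merges results from any number of retrievers, preserving order."""

    def __init__(self, retrievers: list[Retriever]) -> None:
        self.retrievers = retrievers

    def search(self, query: str, k: int) -> list[str]:
        merged: list[str] = []
        for retriever in self.retrievers:
            for doc_id in retriever.search(query, k):
                if doc_id not in merged:
                    merged.append(doc_id)
        return merged[:k]


keyword = KeywordRetriever({"a": "vision rag demo", "b": "holiday policy"})
hybrid = HybridRetriever([keyword])
found = hybrid.search("vision demo", k=5)
```

Adding a new search method then means writing one more class and passing it to `HybridRetriever`, without touching the code that consumes the results.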

ColPali is just the tip of the iceberg for the future. Future RAG solutions will combine multiple data sources and search technologies, and only an agile and customizable architecture will allow for their seamless integration.

To solve this problem, we offer the following services:

- Assess the state of your existing AI architecture

  - We take a deep dive into AI technologies with your tech leads and developers, including code-level details

  - We examine search methods, scalability, architectural flexibility, security, and whether (generative) AI is being used according to best practices

  - We suggest improvements and list specific next steps for development

- Implement an AI capability or AI platform as part of your team

  - Dedicated applied AI engineers ensure your AI projects don't fall behind other development tasks

- Develop AI products as an outsourced product development team

  - We deliver complete AI-based solutions from start to finish

We help our customers gain a significant competitive advantage by accelerating AI adoption and ensuring its seamless integration. If you're interested in learning more, please contact us to discuss how we can help your company stay at the forefront of AI development.

