RunPod: A GPU Cloud Built for AI, with Fast Cold Starts for SD and Per-Second Billing

General Introduction

RunPod is a cloud computing platform built specifically for AI. It aims to give developers, researchers, and enterprises a one-stop solution for developing, training, and scaling AI models. The platform combines on-demand GPU resources, serverless inference, automatic scaling, and other features to support every phase of an AI project. RunPod's core philosophy is to simplify AI development so that users can focus on model innovation instead of infrastructure.

You pay only while requests are being processed, and cold starts are fast, which makes the service a good fit for low-traffic services that still need to run reliably. For large language models (natural language processing workloads), per-token billing is also available.
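To make the two billing models concrete, here is a minimal cost sketch. All rates are hypothetical placeholders, not RunPod's actual prices, and it assumes cold-start seconds are billed at the same rate as execution seconds, which is worth confirming against current pricing.

```python
from dataclasses import dataclass


@dataclass
class Request:
    """One serverless request: billed execution time plus any cold-start delay, in seconds."""
    exec_seconds: float
    cold_start_seconds: float = 0.0


def per_second_cost(requests, usd_per_second):
    """Total cost when every billed second is charged at a flat rate."""
    # ASSUMPTION: cold-start seconds are billed at the same rate as execution seconds.
    billed_seconds = sum(r.exec_seconds + r.cold_start_seconds for r in requests)
    return billed_seconds * usd_per_second


def per_token_cost(input_tokens, output_tokens, usd_per_m_input, usd_per_m_output):
    """Total cost when prices are quoted per million input and output tokens."""
    return (input_tokens * usd_per_m_input + output_tokens * usd_per_m_output) / 1_000_000


if __name__ == "__main__":
    # Hypothetical day of traffic: 100 requests of 2 s each, 5 of them after a 3 s cold start.
    day = [Request(2.0, 3.0 if i < 5 else 0.0) for i in range(100)]
    # Placeholder rates only, not real RunPod prices.
    print(f"per-second billing: ${per_second_cost(day, 0.0004):.4f}")  # 215 billed seconds
    print(f"per-token billing:  ${per_token_cost(1_000_000, 200_000, 0.50, 1.50):.4f}")
```

Under this model, idle time between requests adds nothing to the bill, which is why per-second billing suits low-frequency services; the cold-start term is what fast cold starts keep small.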

Feature List

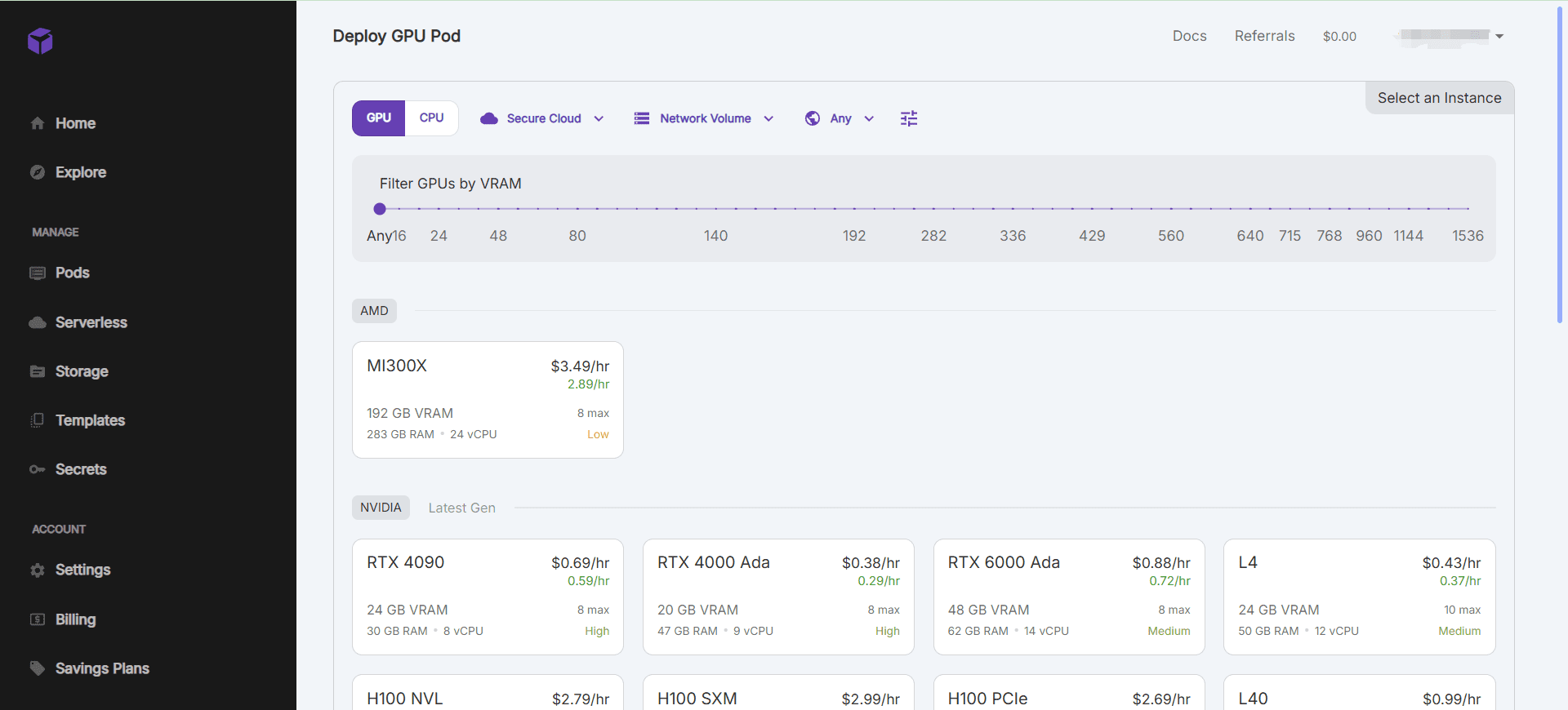

- On-demand GPU resources: GPU instances start quickly, and multiple GPU models are available to meet different compute requirements.

- Serverless inference: Inference capacity scales automatically to handle changing workloads efficiently.

- Development environment integration: Pre-configured AI development environments support commonly used deep learning frameworks and tools.

- Data management and storage: An integrated data storage solution provides efficient data transfer and access.

- Collaboration and version control: Supports collaborative team development and provides model versioning.
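The article does not describe how RunPod decides when to add or remove serverless workers. As an illustration only, the sketch below shows one common queue-based rule; the function name, parameters, and defaults are all hypothetical, not RunPod's algorithm.

```python
import math


def desired_workers(queued_requests: int, requests_per_worker: int,
                    min_workers: int = 0, max_workers: int = 5) -> int:
    """Hypothetical queue-based rule: enough workers to drain the queue,
    clamped to [min_workers, max_workers]. min_workers=0 allows scale-to-zero."""
    if requests_per_worker <= 0:
        raise ValueError("requests_per_worker must be positive")
    needed = math.ceil(queued_requests / requests_per_worker)
    return max(min_workers, min(max_workers, needed))


if __name__ == "__main__":
    for queued in (0, 3, 9, 100):
        print(queued, "->", desired_workers(queued, requests_per_worker=4))
```

With `min_workers=0` the pool can shrink to nothing when idle, so the next request pays a cold start; a nonzero minimum trades that latency for always-on cost.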

How to Use

Getting Started

- Creating a GPU instance:

  - After logging in, go to the console page and click "Create Pod".

  - Select the desired GPU model and configuration, then click "Create" to start the instance.

  - Once the instance is running, connect to it via SSH or the web terminal.
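Choosing a GPU mostly comes down to memory and price. The sketch below picks the cheapest option with enough VRAM for a model's weights; the catalog entries, names, and prices are hypothetical placeholders, not RunPod's GPU list, and the 2 bytes per parameter figure assumes fp16/bf16 weights.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class GpuOption:
    name: str             # illustrative label, not a real RunPod GPU type ID
    vram_gb: int          # memory per GPU, in GB
    usd_per_hour: float   # HYPOTHETICAL hourly price


def weights_gb(params_billion: float, bytes_per_param: float = 2.0) -> float:
    """Memory for model weights alone (2 bytes/param for fp16/bf16).
    Activations, KV cache, and framework overhead need extra headroom on top."""
    return params_billion * bytes_per_param


def cheapest_fit(options, min_vram_gb: float) -> Optional[GpuOption]:
    """Return the cheapest option with at least min_vram_gb of memory, or None."""
    candidates = [o for o in options if o.vram_gb >= min_vram_gb]
    return min(candidates, key=lambda o: o.usd_per_hour, default=None)


catalog = [
    GpuOption("GPU-A", 24, 0.40),
    GpuOption("GPU-B", 48, 0.80),
    GpuOption("GPU-C", 80, 2.00),
]

if __name__ == "__main__":
    need = weights_gb(13)  # 13B params in fp16 -> 26.0 GB of weights
    print(need, cheapest_fit(catalog, need))
```

Since `weights_gb` is a lower bound, leaving a safety margin when passing it to `cheapest_fit` is prudent.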

- Using preconfigured environments:

  - RunPod offers a wide range of pre-configured AI development environments such as PyTorch, TensorFlow, and more.

  - When creating an instance, you can select the desired environment template and quickly start project development.
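After connecting to a pod started from a template, a quick check confirms which frameworks the template actually includes. This is a generic Python check, not a RunPod tool, and the package names are only examples.

```python
import importlib.util


def available_frameworks(names=("torch", "tensorflow", "jax")):
    """Map each top-level package name to whether it can be imported here."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    found = available_frameworks()
    for name, ok in found.items():
        print(f"{name:<12}{'installed' if ok else 'missing'}")
    if found["torch"]:
        import torch
        # True only if PyTorch can see a CUDA-capable GPU from inside the pod
        print("CUDA available:", torch.cuda.is_available())
```

`find_spec` locates a package without importing it, so the check stays fast even when heavy frameworks are present.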

- Data management:

  - RunPod provides an integrated data storage solution for managing datasets from the console.

  - Data can be uploaded, downloaded, and shared, with safeguards for data security and privacy.
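When moving datasets in and out of cloud storage, comparing checksums is a simple way to confirm a file arrived intact. The article does not say whether RunPod does this for you; the sketch below is a generic check, and the helper names `sha256_of` and `verify` are hypothetical.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file, read in 1 MiB chunks so large datasets need not fit in memory."""
    digest = hashlib.sha256()
    with Path(path).open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(path, expected_hex: str) -> bool:
    """True if the file's SHA-256 matches a previously recorded hex digest."""
    return sha256_of(path) == expected_hex.strip().lower()


if __name__ == "__main__":
    import sys
    # Print one digest per file, e.g. locally before upload and again on the pod after download
    for arg in sys.argv[1:]:
        print(sha256_of(arg), arg)
```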

- Collaboration and version control:

  - Team development is supported: members can be invited to work on projects together.

  - Model version management makes it easy to track and manage different versions of a model.
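The article does not document how RunPod's model versioning works internally. To show what "tracking versions" typically means, here is a hypothetical in-memory registry that assigns a new version number only when the weights' content hash changes; none of these names are RunPod APIs.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelVersion:
    version: int
    digest: str       # SHA-256 of the model weights
    note: str = ""


@dataclass
class ModelRegistry:
    """Hypothetical registry: each distinct set of weights gets the next version number."""
    versions: list = field(default_factory=list)

    def register(self, weights: bytes, note: str = "") -> ModelVersion:
        digest = hashlib.sha256(weights).hexdigest()
        for v in self.versions:
            if v.digest == digest:  # identical weights: reuse the existing version
                return v
        v = ModelVersion(len(self.versions) + 1, digest, note)
        self.versions.append(v)
        return v

    def to_json(self) -> str:
        """Serialize the version history, e.g. to share with teammates."""
        return json.dumps([asdict(v) for v in self.versions], indent=2)


if __name__ == "__main__":
    reg = ModelRegistry()
    reg.register(b"weights-v1", "baseline")
    reg.register(b"weights-v2", "fine-tuned")
    print(reg.to_json())
```

Keying versions on a content hash means re-uploading the same weights does not create a spurious new version.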

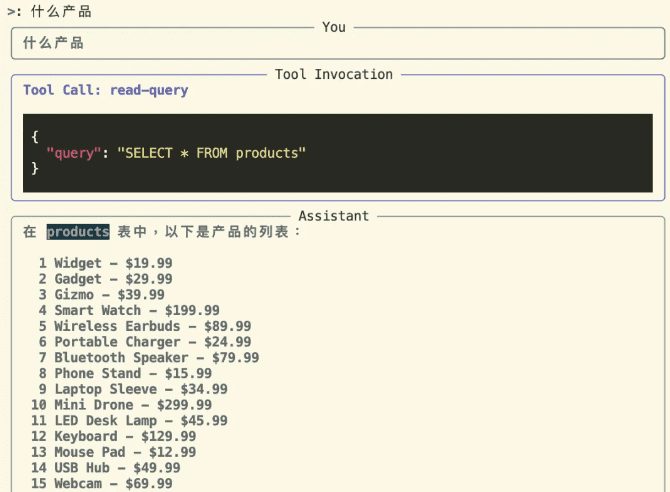

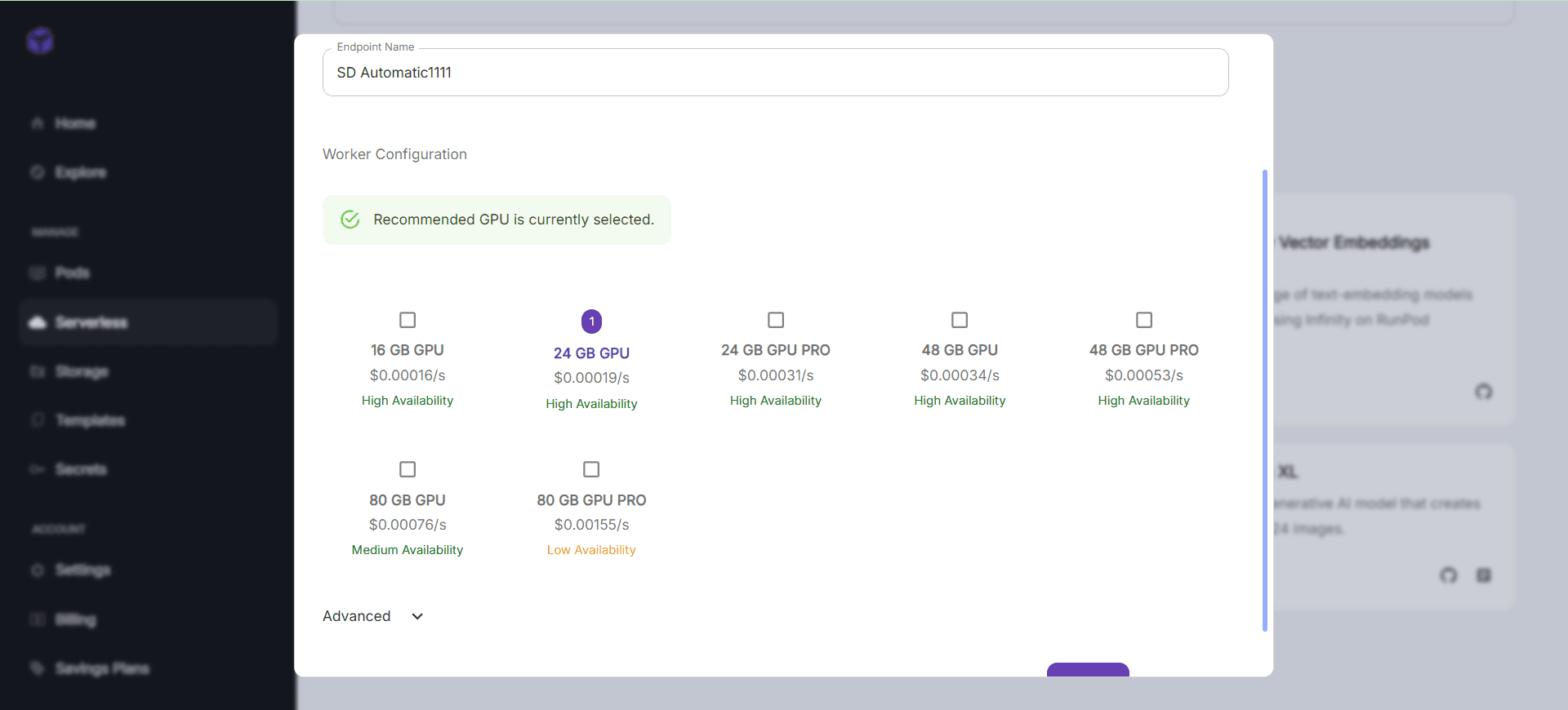

- Serverless inference:

  - RunPod supports a serverless architecture that scales inference capacity automatically.

  - API endpoints can be configured in the console to serve models as online inference services.
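Behind a serverless endpoint runs a worker that processes one job at a time. The sketch follows the handler pattern of RunPod's `runpod` Python SDK as we understand it (a function receiving a job dict whose `input` holds the request payload, registered with `runpod.serverless.start`). The `prompt` field and the uppercase "inference" are hypothetical placeholders for a real model call; check the SDK documentation before relying on details.

```python
def handler(job):
    """Process one serverless job; the request body's "input" object arrives as job["input"]."""
    job_input = job.get("input") or {}
    prompt = job_input.get("prompt")  # HYPOTHETICAL input field
    if not isinstance(prompt, str) or not prompt:
        # As we understand the SDK, a returned "error" key is reported as a failed job.
        return {"error": "missing 'prompt' string in input"}
    # Placeholder for real model inference (e.g. a Stable Diffusion or LLM call)
    return {"output": prompt.upper(), "chars": len(prompt)}


def start_worker():
    """Entry point for the worker container; requires the SDK (pip install runpod)."""
    import runpod
    runpod.serverless.start({"handler": handler})


if __name__ == "__main__":
    # Local smoke test of the handler logic; a deployed worker would call start_worker() instead.
    print(handler({"input": {"prompt": "hello"}}))
```

Keeping the SDK import inside `start_worker` lets the handler be unit-tested on a machine without the SDK installed.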

Workflow

- Quickly starting a GPU instance:

  - Log in to the console and select "Create Pod".

  - Select the desired GPU model and configuration and click "Create".

  - After the instance starts, connect to it via SSH or the web terminal.
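For SSH access over a pod's exposed TCP port, the command takes the usual `ssh user@host -p PORT -i KEY` form. The helper below only assembles that command; the host, port, `root` user, and key path are placeholders (assumptions), so copy the real connection details from the pod's connect information in the console.

```python
import shlex
from pathlib import Path


def ssh_command(host: str, port: int, user: str = "root",
                key_path: str = "~/.ssh/id_ed25519") -> list[str]:
    """Build an ssh argv for a pod's exposed TCP port (all defaults are assumptions)."""
    if not 1 <= port <= 65535:
        raise ValueError(f"invalid port: {port}")
    key = str(Path(key_path).expanduser())
    return ["ssh", f"{user}@{host}", "-p", str(port), "-i", key]


if __name__ == "__main__":
    # HYPOTHETICAL documentation address and port; substitute the values from the console.
    argv = ssh_command("203.0.113.10", 22022)
    print(shlex.join(argv))  # pass argv to subprocess.run(argv) to actually connect
```

Returning an argument list rather than a single string avoids shell-quoting problems when the command is later passed to `subprocess.run`.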

- Using preconfigured environments:

  - When creating an instance, select the desired environment template.

  - After connecting to the instance, you can use the preconfigured development environment directly for project development.

- Data management:

  - Manage datasets in the console, including uploading, downloading, and sharing data.

  - Ensure data security and privacy protection.

- Collaboration and version control:

  - Invite team members to work on projects together, and use the versioning features to track and manage model versions.

- Serverless inference:

  - Configure API endpoints in the console to serve models as online inference services.

  - Inference capacity scales automatically to handle changing workloads efficiently.
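Once an endpoint is configured, clients call it over HTTP. The sketch builds a synchronous request against the `https://api.runpod.ai/v2/{endpoint_id}/runsync` route with a Bearer API key and an `{"input": ...}` body, which matches RunPod's serverless API as we understand it; verify the route and headers against the current API reference. The endpoint ID, key, and payload fields are hypothetical, since the payload must match whatever the worker's handler expects.

```python
import json
import urllib.request

API_BASE = "https://api.runpod.ai/v2"  # serverless API base as we understand it; check current docs


def build_runsync_request(endpoint_id: str, api_key: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) a synchronous inference request for a serverless endpoint."""
    body = json.dumps({"input": payload}).encode("utf-8")
    return urllib.request.Request(
        f"{API_BASE}/{endpoint_id}/runsync",
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def call_endpoint(req: urllib.request.Request, timeout: float = 120.0) -> dict:
    """Send the request and decode the JSON response (needs network access and a valid key)."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # HYPOTHETICAL endpoint ID and key; substitute the values shown in the console.
    req = build_runsync_request("your-endpoint-id", "YOUR_API_KEY", {"prompt": "a lighthouse at dusk"})
    print(req.get_method(), req.full_url)
```

Separating request construction from sending keeps the example testable offline. For long-running jobs, RunPod also offers an asynchronous `/run` route whose job status is polled separately, which avoids holding a connection open during a cold start.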

© Copyright Notice

The copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
