OneFileLLM: Integrating Multiple Data Sources into a Single Text File

General Introduction

OneFileLLM is an open-source command-line tool that consolidates multiple data sources into a single text file for easy input into Large Language Models (LLMs). It processes GitHub repositories, ArXiv papers, YouTube video transcripts, web content, Sci-Hub papers, and local files, automatically generating structured text and copying it to the clipboard. Developer Jim McMillan designed the tool to simplify the creation of LLM prompts and reduce the tedious work of manually organizing data. Written in Python, it supports multiple file formats, text preprocessing, and XML wrapping, making it suitable for developers, researchers, and content creators. It is easy to install, flexible to configure, and can be operated from the command line or through a web interface.

Function List

- Automatic detection of input types (GitHub repositories, YouTube links, ArXiv papers, local files, etc.).

- Support for processing GitHub repositories, Pull Requests, and Issues into a single text.

- Extract and convert ArXiv and Sci-Hub paper PDF content to text.

- Get YouTube video transcripts.

- Crawls web page content, following links to a configurable depth.

- Handles a wide range of file formats, including .py, .ipynb, .txt, .md, .pdf, .csv, etc.

- Provides text preprocessing, such as removal of stop words and punctuation and conversion to lowercase.

- Supports file and directory exclusion, filtering out auto-generated files (e.g. *.pb.go) or test directories.

- Reports token counts for compressed and uncompressed text to help manage the LLM context window.

- The output text is encapsulated in XML format to improve the efficiency of LLM processing.

- Automatically copies uncompressed text to the clipboard for easy pasting into the LLM platform.

- Provides a Flask web interface to simplify URL or path entry.
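
The automatic input detection in the first item above can be pictured with a small classifier. This is a minimal sketch, not the tool's actual logic: the function name `detect_input_type` is hypothetical, and the labels `github_issue`, `scihub_paper`, and `local_path` are assumptions made for illustration (the other labels appear in the XML examples in this article).

```python
import re
from urllib.parse import urlparse


def _host_is(host: str, domain: str) -> bool:
    """True if host is the domain itself or one of its subdomains."""
    return host == domain or host.endswith("." + domain)


def detect_input_type(source: str) -> str:
    """Return a coarse label for a URL, a DOI/PMID, or a local path (hypothetical helper)."""
    parsed = urlparse(source)
    if parsed.scheme in ("http", "https"):
        host = parsed.netloc.lower()
        if _host_is(host, "github.com"):
            if "/pull/" in parsed.path:
                return "github_pull_request"
            if "/issues/" in parsed.path:
                return "github_issue"  # assumed label
            return "github_repository"
        if _host_is(host, "arxiv.org"):
            return "arxiv_paper"
        if _host_is(host, "youtube.com"):
            return "youtube_transcript"
        return "web_documentation"
    # A DOI such as 10.1053/j.ajkd.2017.08.002, or a purely numeric PMID.
    if re.fullmatch(r"10\.\d{4,9}/\S+", source) or source.isdigit():
        return "scihub_paper"  # assumed label
    return "local_path"  # assumed label: anything else is treated as a path


print(detect_input_type("https://arxiv.org/abs/2401.14295"))
```

Checking the most specific URL shapes first (pull request, issue) before falling back to the repository label keeps the order of the tests from changing the result.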

Usage Guide

Installation process

OneFileLLM requires a Python environment and related dependencies. Here are the detailed installation steps:

- Clone the repository

Run the following commands in a terminal to clone the OneFileLLM repository:

    git clone https://github.com/jimmc414/onefilellm.git
    cd onefilellm

- Create a virtual environment (recommended)

To avoid dependency conflicts, create a virtual environment:

    python -m venv .venv
    source .venv/bin/activate  # on Windows, use .venv\Scripts\activate

- Install dependencies

Install the dependencies listed in requirements.txt:

    pip install -U -r requirements.txt

Dependencies include PyPDF2 (PDF processing), BeautifulSoup (web scraping), tiktoken (token counting), pyperclip (clipboard operations), youtube-transcript-api (YouTube transcripts), etc.

- Configure a GitHub access token (optional)

To access private GitHub repositories, set up a personal access token:

  - Log in to GitHub and go to Settings > Developer settings > Personal access tokens.

  - Generate a new token with the repo (private repositories) or public_repo (public repositories) permission.

  - Set the token as an environment variable:

    export GITHUB_TOKEN=<your-token>  # on Windows, use set GITHUB_TOKEN=<your-token>

- Verify the installation

Run the following command to check that the installation was successful:

    python onefilellm.py --help

If a help message is displayed, the installation is correct.
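
For the optional token step above, the following sketch shows one way a Python script could read `GITHUB_TOKEN` from the environment and attach it to GitHub API requests. How OneFileLLM itself consumes the token is not described here, so the helper name `github_headers` and the header layout are assumptions for illustration.

```python
import os


def github_headers(env=os.environ) -> dict:
    """Build request headers, adding authorization only when GITHUB_TOKEN is set.

    Hypothetical helper: the article only states that the token is read from
    the GITHUB_TOKEN environment variable.
    """
    headers = {"Accept": "application/vnd.github+json"}
    token = env.get("GITHUB_TOKEN", "").strip()
    if token:
        headers["Authorization"] = f"token {token}"
    return headers


# Report whether a token was picked up, without printing the token itself.
print("Authorization" in github_headers())
```

Leaving the header out when no token is set lets the same code path serve public repositories without authentication.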

Running Modes

OneFileLLM supports both a command-line mode and a web interface:

- Command-line mode

Run the main script and enter the URL or path when prompted:

    python onefilellm.py

or specify the URL or path directly on the command line:

    python onefilellm.py https://github.com/jimmc414/onefilellm

- Web interface mode

Run the Flask web interface:

    python onefilellm.py --web

Open your browser, visit http://localhost:5000, enter the URL or path, and click "Process" to get the output.

Main Functions

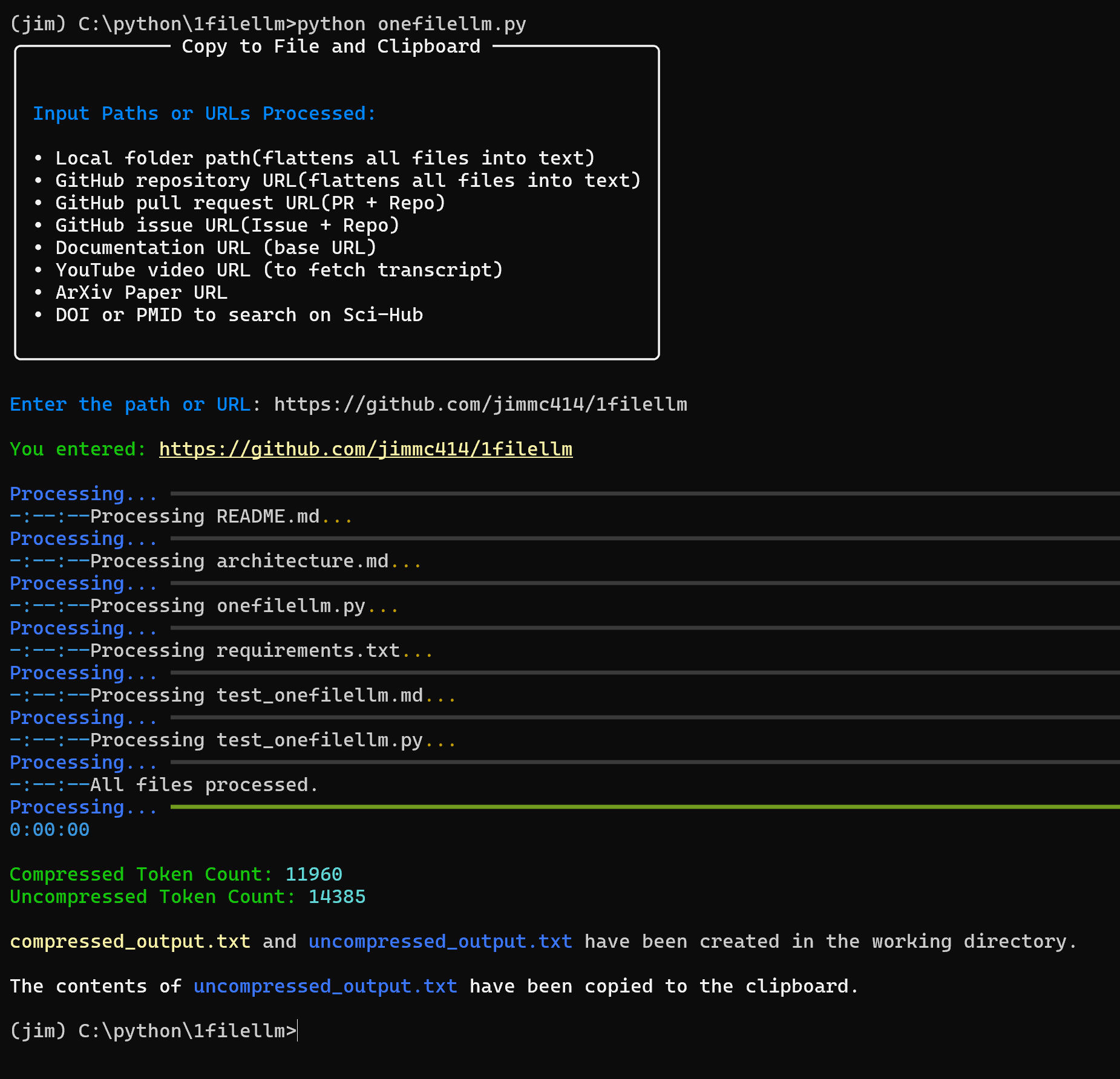

The core of OneFileLLM is the integration of multiple data sources into a single text, output as uncompressed_output.txt (uncompressed), compressed_output.txt (compressed), and processed_urls.txt (the list of crawled URLs). Here is how to use the main functions:

- Processing GitHub repositories

Enter the repository URL (e.g. https://github.com/jimmc414/onefilellm); the tool recursively fetches the supported file types (e.g. .py, .md) and consolidates them into a single text.

Example:

    python onefilellm.py
    Enter URL or path: https://github.com/jimmc414/onefilellm

The output file contains the contents of the repository files in the following XML wrapper format:

    <source type="github_repository">
    <content>
    [file contents]
    </content>
    </source>

The text is automatically copied to the clipboard.

- Handling GitHub pull requests or issues

Enter a pull request URL (e.g. https://github.com/dear-github/dear-github/pull/102) or an issue URL (e.g. https://github.com/isaacs/github/issues/1191); the tool extracts diff details, comments, and the entire repository content.

The output includes code changes, comments, and related documentation, encapsulated as:

    <source type="github_pull_request">
    <content>
    [diff details and comments]
    </content>
    </source>

- Extracting ArXiv or Sci-Hub papers

Enter the ArXiv URL (e.g. https://arxiv.org/abs/2401.14295) or a Sci-Hub DOI or PMID (e.g. 10.1053/j.ajkd.2017.08.002 or 29203127); the tool converts the PDF to text.

Example:

    Enter URL or path: https://arxiv.org/abs/2401.14295

The output is the XML-wrapped text of the paper:

    <source type="arxiv_paper">
    <content>
    [paper contents]
    </content>
    </source>

- Getting YouTube transcripts

Enter the YouTube video URL (e.g. https://www.youtube.com/watch?v=KZ_NlnmPQYk); the tool extracts the transcript text.

Example:

    Enter URL or path: https://www.youtube.com/watch?v=KZ_NlnmPQYk

The output is:

    <source type="youtube_transcript">
    <content>
    [transcript contents]
    </content>
    </source>

- Crawling web pages

Enter the web page URL (e.g. https://llm.datasette.io/en/stable/); the tool crawls the page and follows links to the specified depth (default max_depth=2).

Example:

    Enter URL or path: https://llm.datasette.io/en/stable/

The output is segmented web text, encapsulated as:

    <source type="web_documentation">
    <content>
    [web page contents]
    </content>
    </source>

- Handling local files or directories

Enter a local file path (e.g. C:\documents\report.pdf) or directory (e.g. C:\projects\research); the tool extracts the file's content or consolidates the supported file types in the directory.

Example:

    Enter URL or path: C:\projects\research

The output is the XML-wrapped contents of the directory.
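
The wrapper format shared by the examples above can be reproduced in a few lines. This is a minimal sketch under stated assumptions: `wrap_source` is a hypothetical name, and the `escape_content` flag is an illustrative addition, since the article does not say whether the tool escapes XML special characters inside the content.

```python
from xml.sax.saxutils import escape, quoteattr


def wrap_source(source_type: str, content: str, escape_content: bool = False) -> str:
    """Wrap text in the <source>/<content> layout shown in the examples above.

    Hypothetical helper; escape_content is an assumption, not a documented option.
    """
    body = escape(content) if escape_content else content
    return (
        f"<source type={quoteattr(source_type)}>\n"
        f"<content>\n{body}\n</content>\n"
        f"</source>"
    )


print(wrap_source("arxiv_paper", "[paper contents]"))
```

Escaping is off by default here so that source code passes through unchanged; turning it on yields strictly well-formed XML at the cost of rewriting characters such as `<` and `&`.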

Featured Function Operation

- XML output encapsulation

All output is wrapped in XML, which gives it a clear structure that is intended to make the content easier for an LLM to process. The format is as follows:

    <source type="[source_type]">
    <content>
    [content]
    </content>
    </source>

where source_type takes values such as github_repository, arxiv_paper, etc.

- File and directory exclusion

The tool supports excluding specific files (e.g. *.pb.go) and directories (e.g. tests). Modify the excluded_patterns and EXCLUDED_DIRS lists in onefilellm.py:

    excluded_patterns = ['*.pb.go', '*_test.go']
    EXCLUDED_DIRS = ['tests', 'mocks']

This reduces extraneous content and optimizes token usage.

- Token count

The tool uses tiktoken to calculate the number of tokens in the compressed and uncompressed text and displays them on the console:

    Uncompressed token count: 1234
    Compressed token count: 567

This helps the user ensure that the text fits in the LLM context window.

- Text preprocessing

The tool automatically removes stop words and punctuation, converts text to lowercase, and generates the compressed output. Users can modify the preprocess_text function to customize the processing logic.

- Clipboard integration

The uncompressed output is automatically copied to the clipboard so that it can be pasted directly into LLM platforms (e.g. ChatGPT, Claude).

- Web interface

The Flask interface simplifies operation: the user enters a URL or path, then downloads the output file or copies the text. It is suitable for non-technical users.
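
The preprocessing steps listed above (lowercasing, punctuation removal, stop-word removal) can be sketched with the standard library alone. This is an illustration, not the tool's `preprocess_text` function: the name `preprocess_text_sketch` and the tiny `STOP_WORDS` set are assumptions, and a real stop-word list would be much longer.

```python
import string

# Tiny illustrative stop-word set (assumption).
STOP_WORDS = {"a", "an", "and", "the", "of", "to", "in", "is", "it", "for"}

# Translation table that deletes every ASCII punctuation character.
_STRIP_PUNCT = str.maketrans("", "", string.punctuation)


def preprocess_text_sketch(text: str) -> str:
    """Lowercase, drop punctuation, then drop stop words (hypothetical helper)."""
    lowered = text.lower().translate(_STRIP_PUNCT)
    kept = [word for word in lowered.split() if word not in STOP_WORDS]
    return " ".join(kept)


print(preprocess_text_sketch("The Quick, brown fox is in the Box!"))
```

Lowercasing before the stop-word check matters: otherwise "The" at the start of a sentence would survive the filter.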

Customized Configuration

- File types

Modify the allowed_extensions list to add or remove supported file types:

    allowed_extensions = ['.py', '.txt', '.md', '.ipynb', '.csv']

- Web crawl depth

Modify the max_depth parameter; the default value is 2:

    max_depth = 2

- Sci-Hub domain name

If the Sci-Hub domain name is not available, modify the Sci-Hub URL in onefilellm.py.
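
To see what the max_depth setting bounds, here is a minimal sketch of a depth-limited breadth-first crawl over a toy in-memory link graph, with no network access. The names `crawl_sketch`, `get_links`, and `LINKS` are hypothetical, and the convention that the start page counts as depth 0 is an assumption; the tool may count depth differently.

```python
from collections import deque

# Toy link graph standing in for real pages (illustrative data only).
LINKS = {"a": ["b", "c"], "b": ["d"], "d": ["e"]}


def crawl_sketch(start, get_links, max_depth=2):
    """Return pages reachable from start within max_depth link hops, in visit order."""
    visited = [start]
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        url, depth = queue.popleft()
        if depth >= max_depth:
            continue  # do not follow links from pages at the depth limit
        for link in get_links(url):
            if link not in seen:
                seen.add(link)
                visited.append(link)
                queue.append((link, depth + 1))
    return visited


print(crawl_sketch("a", lambda url: LINKS.get(url, []), max_depth=2))
```

With this graph, page "e" sits three hops from the start and is never visited at max_depth=2, which is how a lower depth limit shrinks the output.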

Caveats

- Ensure a stable internet connection; YouTube transcription and Sci-Hub access rely on external APIs.

- Large repositories or web pages may generate large output; check the token count and adjust the exclusion rules if needed.

- Sci-Hub access may require a domain name change due to regional restrictions.

- Some file formats (e.g. encrypted PDF) may not be processed correctly.

Application Scenarios

- Code review

Developers enter a GitHub repository or pull request URL, generate text containing the code and comments, and feed it to an LLM to analyze code quality or get optimization suggestions.

- Paper summarization

Researchers enter the URL of an ArXiv or Sci-Hub paper, extract the text, and feed it to an LLM to generate a summary or answer a research question.

- Video content organization

Content creators enter a YouTube video URL to get the transcript, then feed it to an LLM to extract key points or generate scripts.

- Document integration

Technical writers enter web page URLs or local directory paths, consolidate the document content, and feed it to an LLM to rewrite it or generate reports.

Q&A

- What file formats does OneFileLLM support?

It supports .py, .ipynb, .txt, .md, .pdf, .csv, etc.; the set can be customized by modifying allowed_extensions.

- How do I access private GitHub repositories?

Set a GitHub personal access token as the GITHUB_TOKEN environment variable; the token requires the repo permission.

- How can I reduce the output text size?

Modify excluded_patterns and EXCLUDED_DIRS to exclude extraneous files, and adjust max_depth to limit the web crawling depth.

- What are the advantages of XML output?

XML is clearly structured and marks up content sources and types, which can help an LLM understand and process complex inputs.

- What should I do if I can't download a Sci-Hub paper?

Check the network connection, make sure the DOI or PMID is correct, or update the Sci-Hub domain name in onefilellm.py.
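
The answers above about allowed_extensions, excluded_patterns, and EXCLUDED_DIRS can be combined into one filtering rule. The list values below are copied from this article's examples; the function `should_include` and the order of the checks are assumptions for illustration, not the tool's actual code.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# Values taken from the configuration examples in this article.
allowed_extensions = [".py", ".txt", ".md", ".ipynb", ".csv"]
excluded_patterns = ["*.pb.go", "*_test.go"]
EXCLUDED_DIRS = ["tests", "mocks"]


def should_include(path: str) -> bool:
    """Decide whether a repository-relative path would be kept (hypothetical helper)."""
    p = PurePosixPath(path)
    # Skip anything that lives under an excluded directory.
    if any(part in EXCLUDED_DIRS for part in p.parts[:-1]):
        return False
    # Skip file names matching an excluded glob pattern.
    if any(fnmatchcase(p.name, pattern) for pattern in excluded_patterns):
        return False
    # Keep only files whose extension is on the allow list.
    return p.suffix.lower() in allowed_extensions


for candidate in ["src/main.py", "tests/test_main.py", "api/service.pb.go"]:
    print(candidate, should_include(candidate))
```

Running the exclusion checks before the extension check means an excluded directory wins even when the file type itself is allowed.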

© Copyright notes

This article is copyrighted by AI Sharing Circle; please do not reproduce it without permission.
