MobileCLIP2 - Apple's Open-Source Efficient On-Device Multimodal Model

What is MobileCLIP2

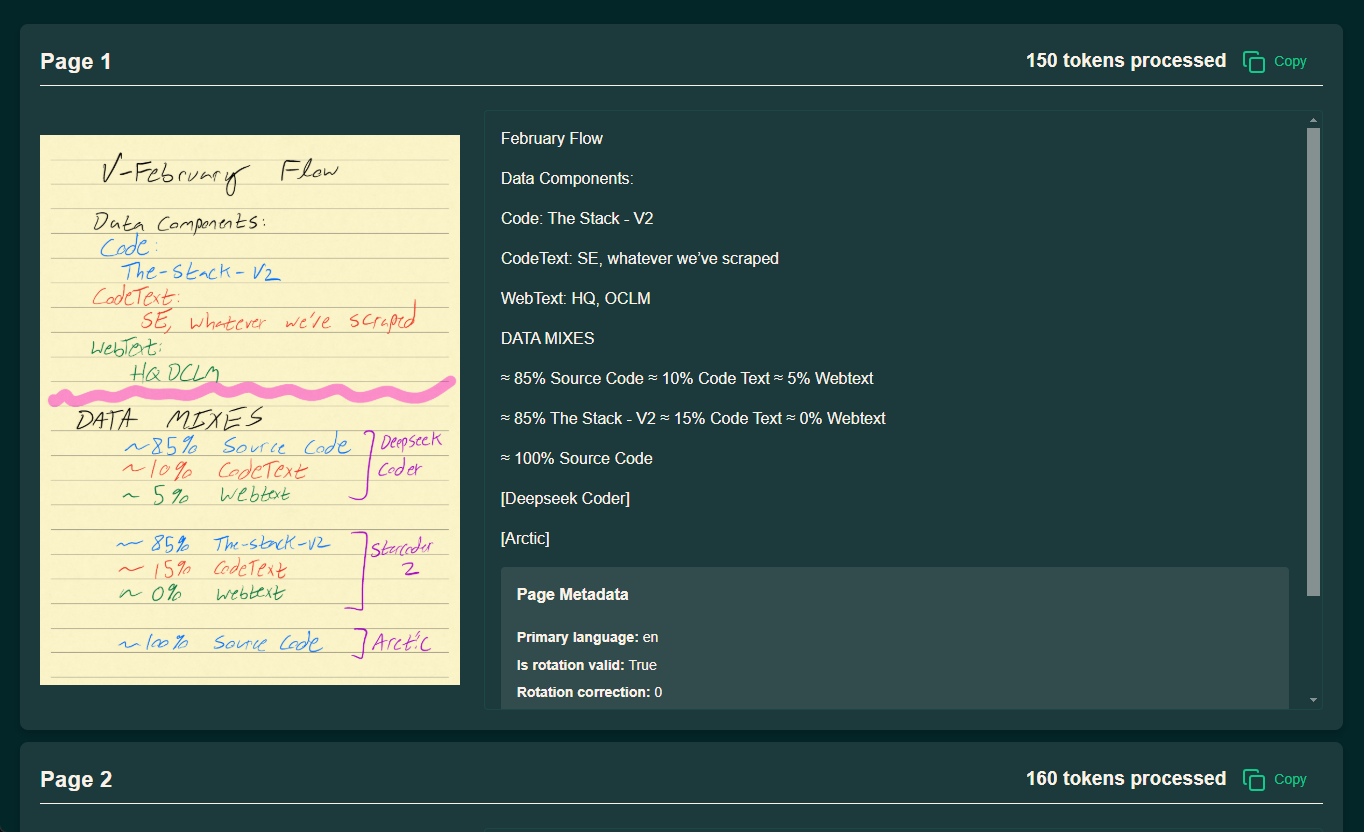

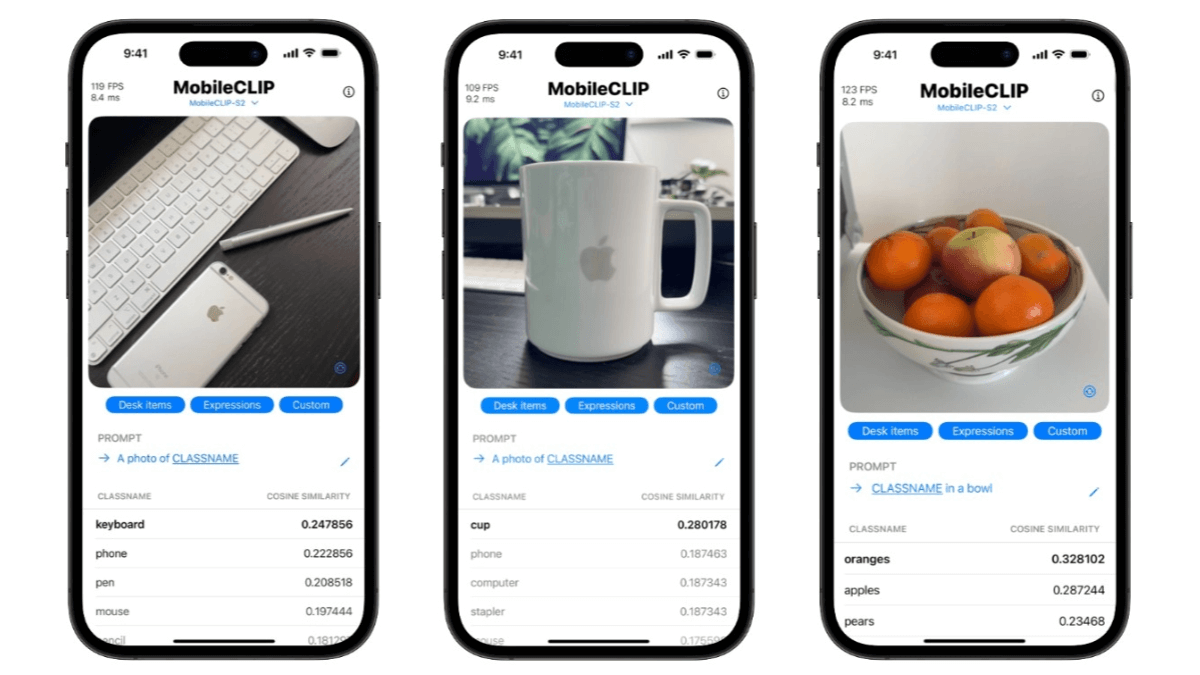

MobileCLIP2 is an upgraded version of MobileCLIP, an efficient on-device multimodal model introduced by Apple researchers. It improves MobileCLIP's multimodal reinforced training by using a better-performing ensemble of CLIP teacher models and an improved image-caption generator teacher, both trained on the DFN dataset. MobileCLIP2 performs well on zero-shot classification. For example, on ImageNet-1k zero-shot classification, MobileCLIP2-B improves accuracy by 2.2% over MobileCLIP-B. MobileCLIP2-S4 matches the performance of SigLIP-SO400M/14 while having a smaller model size and lower inference latency. The models also perform well on a variety of downstream tasks, including vision-language model (VLM) evaluations and dense prediction tasks.
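The reinforced training described above distills knowledge from an ensemble of large teachers into a small student. As a rough illustration, the sketch below computes a KL-divergence distillation term between a student's batch image-text similarity matrix and the matrices of several teachers, in both the image-to-text and text-to-image directions, loosely following the description in the original MobileCLIP paper. It assumes the teacher similarity logits have already been computed for the batch; the temperatures, the per-teacher averaging, and all function names are illustrative assumptions, not Apple's implementation.

```python
import math
from typing import List, Sequence

# A batch x batch matrix of image-text similarity logits: row i = image i, column j = text j.
Matrix = List[List[float]]


def softmax(row: Sequence[float], temperature: float) -> List[float]:
    """Numerically stable softmax of one row of logits divided by a temperature."""
    scaled = [x / temperature for x in row]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q); entries with p_i == 0 contribute nothing."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def row_kl(teacher: Matrix, student: Matrix, t_temp: float, s_temp: float) -> float:
    """Mean over rows of KL(teacher row distribution || student row distribution)."""
    terms = [kl(softmax(t, t_temp), softmax(s, s_temp)) for t, s in zip(teacher, student)]
    return sum(terms) / len(terms)


def distillation_loss(student: Matrix, teachers: List[Matrix],
                      s_temp: float = 1.0, t_temp: float = 1.0) -> float:
    """Average the image->text and text->image KL terms over every teacher (hypothetical helper)."""
    total = 0.0
    for teacher in teachers:
        total += row_kl(teacher, student, t_temp, s_temp)                        # image -> text
        total += row_kl(transpose(teacher), transpose(student), t_temp, s_temp)  # text -> image
    return total / (2 * len(teachers))


# Toy 2x2 batch: matching image-text pairs sit on the diagonal.
student = [[5.0, 1.0], [0.5, 4.0]]
teacher_a = [[6.0, 0.0], [0.0, 6.0]]
teacher_b = [[4.0, 2.0], [1.0, 5.0]]
print(distillation_loss(student, [teacher_a, teacher_b]))
```

In MobileCLIP-style training, a term like this is combined with the standard CLIP contrastive loss through a weighting coefficient; that mixing step and the caption-generator side of the recipe are left out of this sketch.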

Features of MobileCLIP2

- Efficient Multimodal Understanding: Processes images and text together, enabling accurate matching and understanding between them.

- Lightweight Model Architecture: The efficient model structure is designed for fast deployment and execution on mobile devices and in edge computing environments.

- Zero-Shot Classification: Classifies images directly without additional training data, so it adapts easily and can be applied quickly to new tasks.

- Low-Latency Inference: Optimized inference speed gives fast responses even on resource-constrained devices, improving the user experience.

- Privacy: Supports on-device processing, so data does not need to be uploaded to the cloud. This protects user privacy and suits application scenarios with strict privacy requirements.

- Powerful Feature Extraction: Extracts high-quality multimodal features from images and text that can be reused in many downstream tasks, such as image classification and object detection.

- Adaptability: Generalizes well and can be adapted to many different tasks and datasets through fine-tuning and optimization.
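Zero-shot classification with a CLIP-style model works by embedding a text prompt for each class name and picking the class whose prompt embedding is most similar to the image embedding. The sketch below shows that scoring step with prompt ensembling (averaging several prompt templates per class). The `toy_encode_text` function is a hypothetical stand-in for MobileCLIP2's text encoder, the image embedding is a made-up vector, and the default `logit_scale` of 100 is an assumption borrowed from common CLIP setups.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

Vector = List[float]


def normalize(v: Sequence[float]) -> Vector:
    """Scale a vector to unit L2 norm so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def build_class_embeddings(class_names: Sequence[str], templates: Sequence[str],
                           encode_text: Callable[[str], Vector]) -> Dict[str, Vector]:
    """Prompt ensembling: average the normalized embeddings of each class's prompts."""
    classes = {}
    for name in class_names:
        embs = [normalize(encode_text(t.format(name))) for t in templates]
        mean = [sum(col) / len(embs) for col in zip(*embs)]
        classes[name] = normalize(mean)
    return classes


def zero_shot_classify(image_embedding: Vector, class_embeddings: Dict[str, Vector],
                       logit_scale: float = 100.0) -> Tuple[str, Dict[str, float]]:
    """Softmax over scaled cosine similarities between the image and every class embedding."""
    img = normalize(image_embedding)
    names = list(class_embeddings)
    logits = [logit_scale * dot(img, class_embeddings[n]) for n in names]
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    probs = {n: e / total for n, e in zip(names, exps)}
    return max(probs, key=probs.get), probs


# Hypothetical stand-in for a real text encoder, for illustration only.
TOY_VOCAB = {"cat": [1.0, 0.0, 0.0], "dog": [0.0, 1.0, 0.0], "car": [0.0, 0.0, 1.0]}


def toy_encode_text(prompt: str) -> Vector:
    vec = [0.1, 0.1, 0.1]  # small bias so every prompt has a nonzero norm
    for word in prompt.lower().replace(".", "").split():
        if word in TOY_VOCAB:
            vec = [a + b for a, b in zip(vec, TOY_VOCAB[word])]
    return vec


templates = ["a photo of a {}.", "a blurry photo of a {}."]
classes = build_class_embeddings(["cat", "dog", "car"], templates, toy_encode_text)
label, probs = zero_shot_classify([0.9, 0.2, 0.1], classes)
print(label, round(probs[label], 3))
```

Adding a new class only requires writing a new prompt and embedding it; no image retraining is involved, which is what makes the approach "zero-shot".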

Core Benefits of MobileCLIP2

- High Efficiency: MobileCLIP2 substantially reduces computational cost and inference latency while maintaining strong accuracy, making it suitable for fast execution on resource-constrained devices.

- Lightweight Architecture: Lightweight variants such as MobileCLIP2-B and MobileCLIP2-S4 enable efficient deployment on mobile devices and in edge computing environments.

- Reinforced Training: Improved multimodal reinforced training strengthens the model's joint understanding of images and text and yields better multimodal feature representations.

- Privacy: Supports on-device processing, so data does not need to be uploaded to the cloud. This effectively protects user privacy and is especially suitable for application scenarios with strict privacy requirements.

- Zero-Shot Learning: With strong zero-shot classification capability, it can classify images from text descriptions even without class-specific training data.
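Because MobileCLIP2 places images and text in a shared embedding space, the image-text matching and on-device processing described above can be combined into a private local photo search: embed the photos once, then embed a text query and rank photos by cosine similarity. The sketch below assumes the embeddings were already produced by MobileCLIP2's image and text encoders; the `LocalImageIndex` class and the toy vectors are hypothetical.

```python
import heapq
import math
from typing import List, Sequence, Tuple


def normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


class LocalImageIndex:
    """Hypothetical in-memory index of image embeddings that never leaves the device."""

    def __init__(self) -> None:
        self._ids: List[str] = []
        self._vectors: List[List[float]] = []

    def add(self, image_id: str, embedding: Sequence[float]) -> None:
        # Store unit-normalized vectors so search is a plain dot product (cosine similarity).
        self._ids.append(image_id)
        self._vectors.append(normalize(embedding))

    def search(self, query_embedding: Sequence[float], k: int = 3) -> List[Tuple[float, str]]:
        """Return the k (score, image_id) pairs with the highest cosine similarity, best first."""
        q = normalize(query_embedding)
        scored = ((sum(a * b for a, b in zip(q, v)), image_id)
                  for image_id, v in zip(self._ids, self._vectors))
        return heapq.nlargest(k, scored)


# Toy vectors standing in for image embeddings and one text-query embedding.
index = LocalImageIndex()
index.add("beach.jpg", [0.9, 0.1, 0.0])
index.add("cat.jpg", [0.1, 0.9, 0.1])
index.add("city.jpg", [0.0, 0.2, 0.9])
results = index.search([0.05, 1.0, 0.0], k=2)
print(results)
```

This is a brute-force linear scan, which is adequate for a personal photo library; a much larger collection would typically call for an approximate nearest-neighbor index.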

Where to find MobileCLIP2

- GitHub repository: https://github.com/apple/ml-mobileclip

- Hugging Face model collection: https://huggingface.co/collections/apple/mobileclip2-68ac947dcb035c54bcd20c47

Who MobileCLIP2 is for

- IoT Developers: The model can be integrated into IoT devices such as smart home devices and security cameras for local intelligent decision-making.

- AI Researchers: They can use it to study the optimization and application of multimodal models and to explore new algorithms and techniques.

- Data Scientists: They can use MobileCLIP2's multimodal feature extraction to provide high-quality features for machine learning projects.

- Privacy Advocates: Suitable for application scenarios with strict data-privacy requirements, such as the medical and financial fields, where data must stay secure.

- Educators: It can be used to build educational tools, such as intelligent tutoring software, that improve teaching and learning by combining images and text.

- Content Creators: The model can match images with text descriptions or categorize images to assist with content creation and editing.
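For the data-scientist and IoT use cases above, a common way to reuse frozen image embeddings is to train a small classifier on top of them. The sketch below uses a nearest-centroid classifier, a deliberately simple stand-in for a linear probe, assuming each feature vector is an embedding already produced by a MobileCLIP2 image encoder. The function names, labels, and 2-D toy vectors are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence


def normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def fit_centroids(features: Sequence[Sequence[float]],
                  labels: Sequence[str]) -> Dict[str, List[float]]:
    """Average the normalized embeddings of each label into one unit-length centroid."""
    grouped: Dict[str, List[List[float]]] = defaultdict(list)
    for feature, label in zip(features, labels):
        grouped[label].append(normalize(feature))
    return {label: normalize([sum(col) / len(vecs) for col in zip(*vecs)])
            for label, vecs in grouped.items()}


def predict(centroids: Dict[str, List[float]], feature: Sequence[float]) -> str:
    """Pick the label whose centroid has the highest cosine similarity to the feature."""
    q = normalize(feature)
    return max(centroids, key=lambda label: sum(a * b for a, b in zip(q, centroids[label])))


# Toy 2-D vectors standing in for frozen image embeddings from a camera feed.
train_x = [[1.0, 0.1], [0.9, 0.2], [0.1, 1.0], [0.2, 0.8]]
train_y = ["person", "person", "package", "package"]
centroids = fit_centroids(train_x, train_y)
print(predict(centroids, [0.95, 0.15]), predict(centroids, [0.1, 0.9]))
```

Because the encoder stays frozen, "training" here is just averaging a handful of vectors, which is cheap enough to run on the device itself.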

© Copyright notes

Article copyright: AI Sharing Circle. Please do not reproduce without permission.
