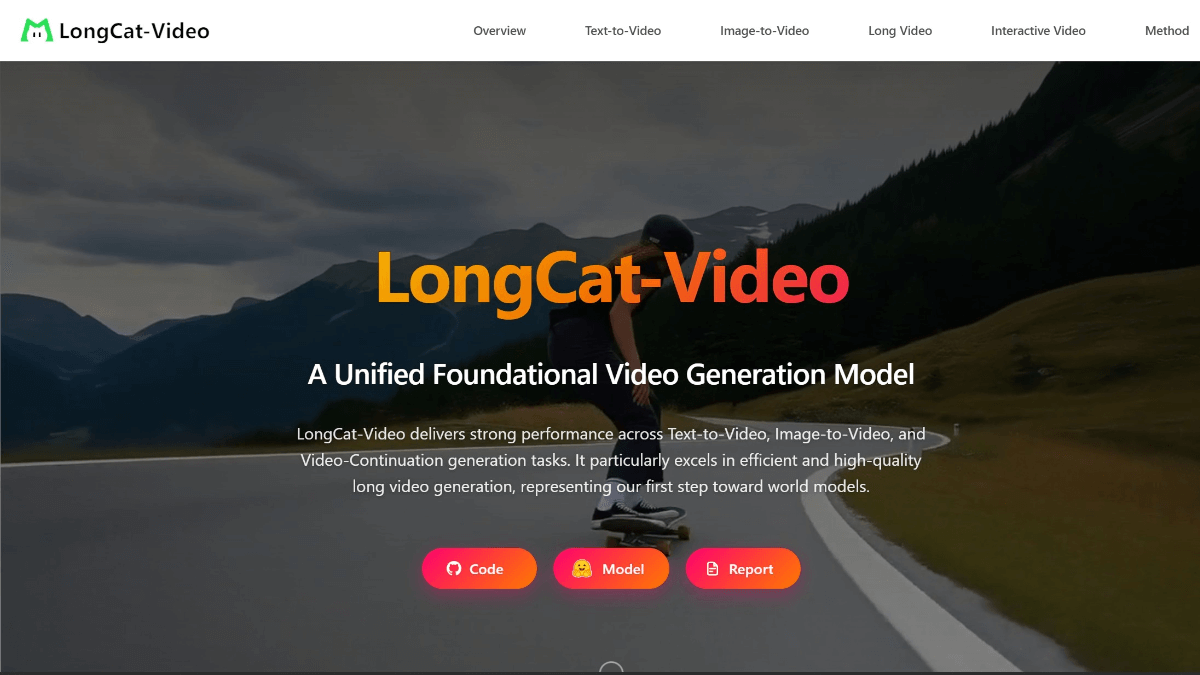

LongCat-Video - Meituan's open-source video generation model from the LongCat team

What is LongCat-Video

LongCat-Video is a 13.6-billion-parameter video generation model open-sourced by Meituan's LongCat team under the MIT License. A single model supports three tasks: text-to-video, image-to-video, and video continuation. Using a coarse-to-fine generation strategy and a block sparse attention mechanism, it can generate 720p long videos within minutes, without color drift or quality degradation. Technical highlights include multi-reward reinforcement learning optimization, performance close to commercial-grade SOTA models, and several metrics that outperform comparable open-source models in internal tests. The model is available on Hugging Face and GitHub, together with one-click deployment options for text input, image input, and video continuation.
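To make the block sparse attention idea more concrete, here is a minimal pure-Python sketch of one common way to pick which key blocks each query block attends to. It is an illustration under stated assumptions, not LongCat-Video's actual implementation: the mean-pooling score, the function names `mean_pool_blocks` and `select_key_blocks`, and the `block_size` and `top_k` parameters are all hypothetical.

```python
from typing import List

Vector = List[float]


def mean_pool_blocks(vectors: List[Vector], block_size: int) -> List[Vector]:
    """Average consecutive token vectors into one summary vector per block."""
    pooled = []
    for start in range(0, len(vectors), block_size):
        block = vectors[start:start + block_size]
        dim = len(block[0])
        pooled.append([sum(v[d] for v in block) / len(block) for d in range(dim)])
    return pooled


def select_key_blocks(
    queries: List[Vector], keys: List[Vector], block_size: int, top_k: int
) -> List[List[int]]:
    """For each query block, return the indices of the top_k key blocks.

    Assumption: block relevance is the dot product of mean-pooled blocks.
    Full attention would then be computed only inside the selected blocks.
    """
    q_blocks = mean_pool_blocks(queries, block_size)
    k_blocks = mean_pool_blocks(keys, block_size)
    selected = []
    for q in q_blocks:
        scores = [sum(a * b for a, b in zip(q, k)) for k in k_blocks]
        ranked = sorted(range(len(k_blocks)), key=lambda j: scores[j], reverse=True)
        selected.append(sorted(ranked[:top_k]))
    return selected


# Toy input: 4 tokens of dimension 2, grouped into 2 blocks of 2 tokens each.
queries = [[0.0, 1.0], [0.0, 1.0], [1.0, 0.0], [1.0, 0.0]]
keys = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
sparse_pattern = select_key_blocks(queries, keys, block_size=2, top_k=1)
```

With `top_k` well below the number of key blocks, most block pairs are skipped entirely, which is where the speedup of this family of techniques comes from.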

Features of LongCat-Video

- Multitasking capability: Handles multiple video generation tasks, including text-to-video, image-to-video, and video continuation.

- Long video generation: Specializes in generating high-quality videos that are several minutes long while maintaining content consistency and visual quality.

- Efficient inference: Uses a coarse-to-fine generation strategy and block sparse attention to generate high-resolution video quickly and significantly reduce generation time.

- Performance optimization: Optimized with multi-reward reinforcement learning so that generated videos perform well across multiple dimensions.

- Open source and easy to use: The model weights are open source, and detailed usage guidelines and code examples help developers get started quickly.

Core Advantages of LongCat-Video

- Multitasking: One model handles text-to-video, image-to-video, and video continuation, with no need to switch models between tasks.

- Long video generation capability: Generates videos that are several minutes long with no color drift or quality degradation during generation, keeping the video consistent and stable.

- Efficient inference performance: With the coarse-to-fine generation strategy and block sparse attention, it generates high-quality 720p, 30fps videos in a short time, which significantly improves inference efficiency.

- Multi-reward reinforcement learning optimization: Trained with multi-reward Group Relative Policy Optimization (GRPO), it performs well on text alignment, visual quality, and motion quality, producing video quality comparable to leading open-source and commercial solutions.
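The core idea behind GRPO is to score each sample relative to the other samples generated for the same prompt, rather than against a learned value function. The sketch below shows that group-relative normalization and one simple way to combine several rewards. The weighted-sum combination, the reward names, the weights, and the function names are assumptions for illustration only; the article does not describe how LongCat-Video combines its rewards.

```python
from statistics import mean, pstdev
from typing import Dict, List


def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Normalize rewards within one group of samples for the same prompt."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    # eps avoids division by zero when every sample gets the same reward
    return [(r - mu) / (sigma + eps) for r in rewards]


def combined_advantages(
    reward_table: Dict[str, List[float]], weights: Dict[str, float]
) -> List[float]:
    """Assumed combination rule: weighted sum of per-reward advantages."""
    n = len(next(iter(reward_table.values())))
    total = [0.0] * n
    for name, rewards in reward_table.items():
        advantages = group_relative_advantages(rewards)
        for i in range(n):
            total[i] += weights[name] * advantages[i]
    return total


# Hypothetical scores for three videos sampled from one prompt.
reward_table = {
    "text_alignment": [0.2, 0.5, 0.8],
    "visual_quality": [0.4, 0.6, 0.9],
    "motion_quality": [0.3, 0.3, 0.6],
}
weights = {"text_alignment": 1.0, "visual_quality": 1.0, "motion_quality": 1.0}
advantages = combined_advantages(reward_table, weights)
```

Samples with a positive combined advantage are reinforced and those with a negative one are discouraged, so a sample only benefits when it beats its own group on the weighted mix of rewards.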

Where to find LongCat-Video

- Project website: https://meituan-longcat.github.io/LongCat-Video/

- GitHub repository: https://github.com/meituan-longcat/LongCat-Video

- Hugging Face model repository: https://huggingface.co/meituan-longcat/LongCat-Video
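As a starting point, the weights can be fetched from the Hugging Face repository listed above with the `huggingface_hub` library's `snapshot_download` function. This is a minimal sketch: the `download_weights` helper and the `./weights/LongCat-Video` target directory are hypothetical choices, and the project's own README remains the authoritative guide for setup and inference.

```python
# Repository ID taken from the Hugging Face URL above.
REPO_ID = "meituan-longcat/LongCat-Video"


def download_weights(local_dir: str = "./weights/LongCat-Video") -> str:
    """Download the full model repository and return the local path.

    Requires `pip install huggingface_hub`. The default local_dir is a
    hypothetical location chosen for this example.
    """
    # Imported lazily so this file loads even if huggingface_hub is missing.
    from huggingface_hub import snapshot_download

    return snapshot_download(repo_id=REPO_ID, local_dir=local_dir)


# Usage (downloads a large set of files, so it is not run automatically):
#   path = download_weights()
```

The download is sizable for a model of this scale, so it is worth checking free disk space first.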

Who LongCat-Video is for

- Film and TV production teams: Can use it to assist creative work by generating video clips or continuing storylines, providing inspiration and preliminary material for production.

- Educators: Can generate teaching and demonstration videos to enrich teaching resources and improve learning outcomes.

- Game developers: Can generate dynamic scenes or character animations to improve a game's visuals and immersion.

- Researchers: Those interested in video generation techniques can build on the open-source model for research and development.

- Corporate marketers: Can produce product promotion and corporate videos to strengthen brand influence and product appeal.

- Social media operators: Can quickly generate engaging video content to increase user interaction and account activity.

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
