Groq: AI Large-Model Inference Acceleration Provider with a Fast, Free LLM API

Groq General Introduction

Groq is a company based in Mountain View, California, that developed the GroqChip™ and the Language Processing Unit™ (LPU). It is known for its tensor processing units, which were developed for low-latency AI applications.

Groq was founded in 2016, and its name was officially trademarked in the same year. Groq's main product is the Language Processing Unit (LPU), a new class of chip designed not to train AI models but to run them quickly. Groq positions its LPU systems as the next generation of AI acceleration: they are designed to process sequential data (e.g., DNA, music, code, natural language) and, according to Groq, outperform GPUs on these workloads.

Groq aims to provide solutions for real-time AI applications and claims leading AI performance in compute centers, characterized by speed and accuracy. Groq supports standard machine learning frameworks such as PyTorch, TensorFlow and ONNX. In addition, it offers the GroqWare™ suite, which includes tools such as the Groq Compiler for custom development and workload optimization.

Groq Feature List

- Real-time AI application processing

- Support for standard machine learning frameworks

- Support for SaaS and PaaS lightweight hardware

- Delivering fast and accurate AI performance

- GroqWare™ Suite for Custom Optimized Workloads

- Accurate, energy-efficient and repeatable large-scale inference performance

Groq Help

- Developers can get self-serve access through the Playground on GroqCloud

- If you are currently using the OpenAI API, you need only three things to switch to Groq: a Groq API key, an endpoint, and a model

- If you need the fastest inference at datacenter scale, contact Groq directly
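The "three things" in the list above can be sketched as a small settings object. This is a minimal illustration, not Groq's or OpenAI's SDK: the `ApiTarget` class and `to_groq` helper are hypothetical names, the key values are placeholders, and the Groq base URL is taken from the curl example in this article.

```python
import os
from dataclasses import dataclass, replace

# Base URL taken from the curl example in this article.
GROQ_BASE_URL = "https://api.groq.com/openai/v1"


@dataclass(frozen=True)
class ApiTarget:
    """Hypothetical container for the three values that differ between providers."""
    api_key: str
    base_url: str
    model: str

    def chat_completions_url(self) -> str:
        # OpenAI-style APIs expose chat completions under <base_url>/chat/completions.
        return self.base_url.rstrip("/") + "/chat/completions"


def to_groq(target: ApiTarget, groq_api_key: str,
            model: str = "llama-3.1-8b-instant") -> ApiTarget:
    """Return a copy of `target` with the key, endpoint and model swapped for Groq."""
    return replace(target, api_key=groq_api_key, base_url=GROQ_BASE_URL, model=model)


# Placeholder values standing in for an existing OpenAI configuration.
existing = ApiTarget(api_key="sk-placeholder",
                     base_url="https://api.openai.com/v1",
                     model="placeholder-openai-model")

groq = to_groq(existing, os.environ.get("GROQ_API_KEY", "gsk-placeholder"))
print(groq.chat_completions_url())
```

Everything else in an OpenAI-style client (message format, headers, request path) is assumed to stay the same, which is why only these three fields are modelled.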

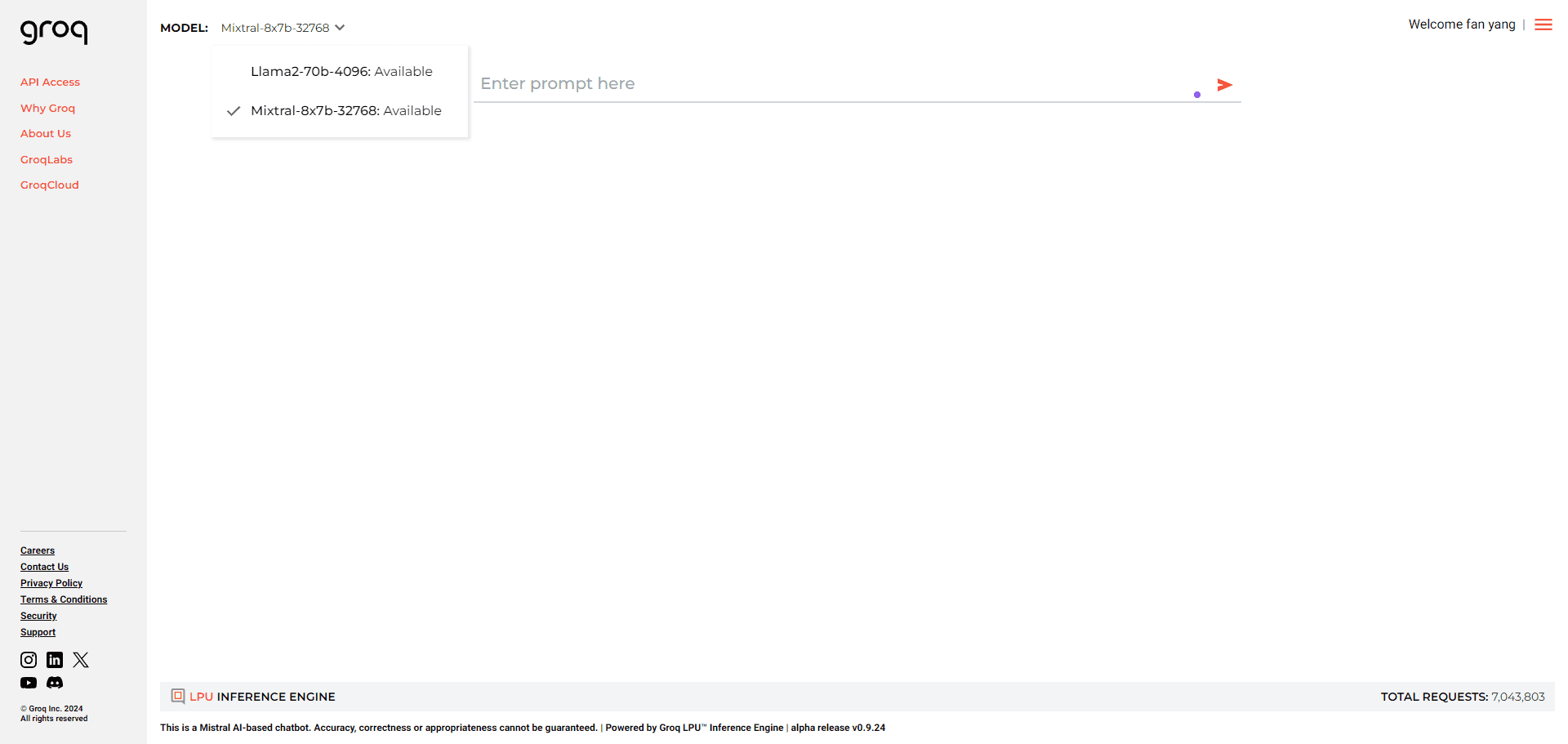

You can click here to apply for an API key free of charge. Once the application is complete, choose a model:

Chat Completion

| ID | Requests per Minute | Requests per Day | Tokens per Minute | Tokens per Day |
|---|---|---|---|---|
| gemma-7b-it | 30 | 14,400 | 15,000 | 500,000 |
| gemma2-9b-it | 30 | 14,400 | 15,000 | 500,000 |
| llama-3.1-70b-versatile | 30 | 14,400 | 20,000 | 500,000 |
| llama-3.1-8b-instant | 30 | 14,400 | 20,000 | 500,000 |
| llama-3.2-11b-text-preview | 30 | 7,000 | 7,000 | 500,000 |
| llama-3.2-1b-preview | 30 | 7,000 | 7,000 | 500,000 |
| llama-3.2-3b-preview | 30 | 7,000 | 7,000 | 500,000 |
| llama-3.2-90b-text-preview | 30 | 7,000 | 7,000 | 500,000 |
| llama-guard-3-8b | 30 | 14,400 | 15,000 | 500,000 |
| llama3-70b-8192 | 30 | 14,400 | 6,000 | 500,000 |
| llama3-8b-8192 | 30 | 14,400 | 30,000 | 500,000 |
| llama3-groq-70b-8192-tool-use-preview | 30 | 14,400 | 15,000 | 500,000 |
| llama3-groq-8b-8192-tool-use-preview | 30 | 14,400 | 15,000 | 500,000 |
| llava-v1.5-7b-4096-preview | 30 | 14,400 | 30,000 | (No limit) |
| mixtral-8x7b-32768 | 30 | 14,400 | 5,000 | 500,000 |
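Every chat model in the table is limited to 30 requests per minute, so a client that sends bursts of requests may want to pace itself. The following is a minimal client-side sketch of a sliding-window limiter. It is not part of any Groq SDK; the class name is hypothetical, and it assumes the server counts requests over a rolling 60-second window, which the table does not state.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Allow at most `max_calls` calls in any `period_s`-second window (client side only)."""

    def __init__(self, max_calls, period_s=60.0, clock=time.monotonic, sleep=time.sleep):
        self.max_calls = max_calls
        self.period_s = period_s
        self._clock = clock      # injectable so the limiter can be tested without waiting
        self._sleep = sleep
        self._stamps = deque()   # timestamps of recent calls, oldest first

    def _purge(self, now):
        # Drop timestamps that have left the window.
        while self._stamps and now - self._stamps[0] >= self.period_s:
            self._stamps.popleft()

    def wait_time(self):
        """Seconds to wait before the next call is allowed (0.0 if allowed now)."""
        now = self._clock()
        self._purge(now)
        if len(self._stamps) < self.max_calls:
            return 0.0
        return self.period_s - (now - self._stamps[0])

    def acquire(self):
        """Block until a call is allowed, then record it."""
        delay = self.wait_time()
        if delay > 0:
            self._sleep(delay)
        now = self._clock()
        self._purge(now)
        self._stamps.append(now)


# 30 requests per minute, as listed for every chat model in the table above.
limiter = SlidingWindowLimiter(max_calls=30, period_s=60.0)
limiter.acquire()  # call this before each API request
```

A limiter like this only covers the requests-per-minute column. The token and per-day limits would need separate bookkeeping.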

Speech To Text

| ID | Requests per Minute | Requests per Day | Audio Seconds per Hour | Audio Seconds per Day |
|---|---|---|---|---|
| distil-whisper-large-v3-en | 20 | 2,000 | 7,200 | 28,800 |
| whisper-large-v3 | 20 | 2,000 | 7,200 | 28,800 |
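In more familiar units, 7,200 audio seconds per hour is 2 hours of audio, and 28,800 audio seconds per day is 8 hours. The sketch below checks a planned batch of recordings against the limits in the table. The function name is hypothetical, and it assumes one recording per request and that the limits behave as simple budgets.

```python
import math

# Limits copied from the Speech To Text table above.
AUDIO_SECONDS_PER_HOUR = 7_200
AUDIO_SECONDS_PER_DAY = 28_800
REQUESTS_PER_DAY = 2_000


def plan_transcription(clip_seconds):
    """Summarize whether a list of clip durations (in seconds) fits the limits."""
    total = sum(clip_seconds)
    return {
        "total_audio_seconds": total,
        "fits_daily_audio_limit": total <= AUDIO_SECONDS_PER_DAY,
        "fits_daily_request_limit": len(clip_seconds) <= REQUESTS_PER_DAY,
        # Lower bound on how many hourly budgets the batch will consume.
        "hourly_windows_needed": math.ceil(total / AUDIO_SECONDS_PER_HOUR),
    }


# Ten 30-minute recordings: 18,000 seconds of audio in total.
plan = plan_transcription([1_800] * 10)
print(plan)
```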

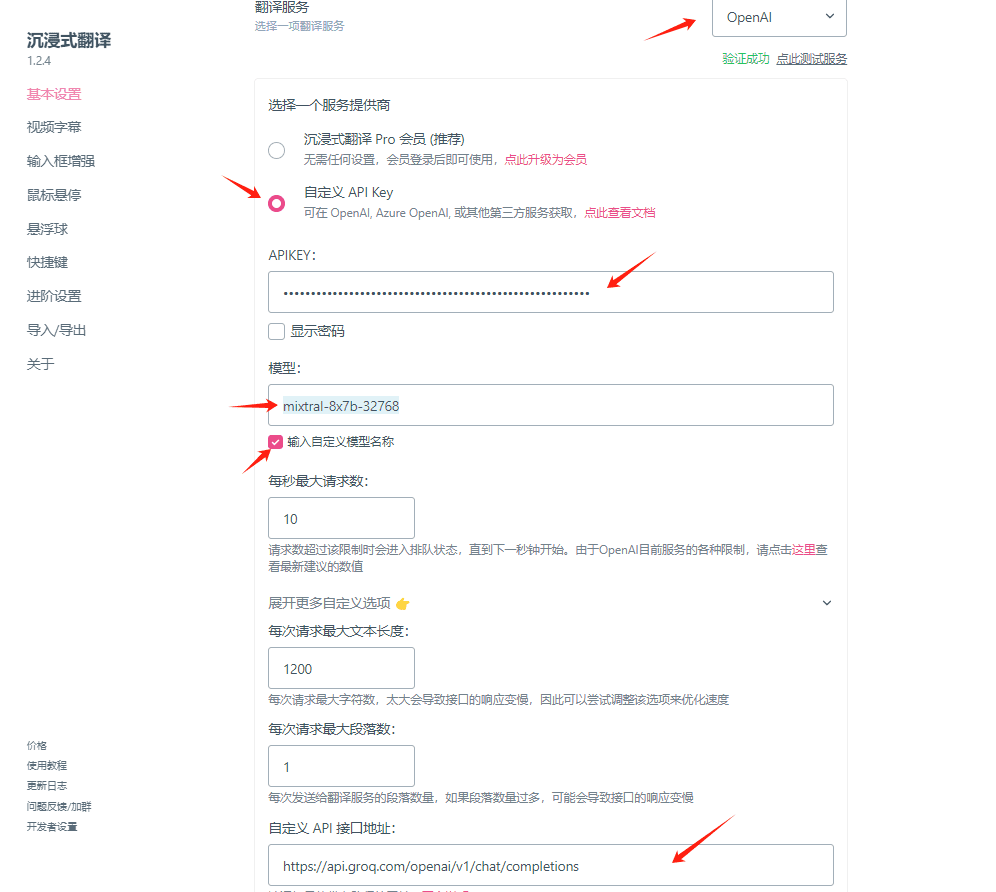

The following example uses curl. The interface is compatible with the OpenAI API format, so any tool that lets you customize the OpenAI API endpoint can be pointed at Groq in the same way.

    curl -X POST "https://api.groq.com/openai/v1/chat/completions" \
      -H "Authorization: Bearer $GROQ_API_KEY" \
      -H "Content-Type: application/json" \
      -d '{"messages": [{"role": "user", "content": "Explain the importance of low latency LLMs"}], "model": "mixtral-8x7b-32768"}'
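The same request can be sketched in Python using only the standard library. The URL, headers, body and model mirror the curl command above. The function names are hypothetical, the `User-Agent` value is arbitrary (some gateways reject the default one sent by `urllib`), and the response parsing assumes the OpenAI-style `choices[0].message.content` layout rather than anything confirmed in this article.

```python
import json
import os
import urllib.request

# Endpoint taken from the curl example above.
GROQ_CHAT_URL = "https://api.groq.com/openai/v1/chat/completions"


def build_chat_request(prompt, model="mixtral-8x7b-32768", api_key=None):
    """Build (but do not send) a POST request equivalent to the curl command."""
    api_key = api_key or os.environ.get("GROQ_API_KEY", "")
    body = {"messages": [{"role": "user", "content": prompt}], "model": model}
    return urllib.request.Request(
        GROQ_CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
            # Arbitrary value; an assumption, not a documented requirement.
            "User-Agent": "groq-article-example/0.1",
        },
        method="POST",
    )


def send_chat(request, timeout=30.0):
    """Send the request and return the assistant's reply text.

    Assumes an OpenAI-style response body with choices[0].message.content.
    """
    with urllib.request.urlopen(request, timeout=timeout) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]


# Building the request needs no network access; sending it does.
request = build_chat_request("Explain the importance of low latency LLMs")
print(request.full_url)
```

With a valid key in the `GROQ_API_KEY` environment variable, `send_chat(request)` would perform the actual call.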

Usage Example: Configuring Groq Keys for Use in the Immersive Translation Plugin

© AI Sharing Circle. Please do not reproduce this article without permission.
