Grok 2.5 - Musk's xAI open source AI model

What is Grok 2.5?

Grok 2.5 is an open-source AI model from Elon Musk's xAI. It has 269 billion parameters and is built on a Mixture-of-Experts (MoE) architecture, which gives it strong performance and reasoning ability. The model does well on benchmarks such as graduate-level science knowledge (GPQA), general knowledge (MMLU, MMLU-Pro), and competition mathematics (MATH), approaching current state-of-the-art results. The release consists of 42 weight files totaling about 500 GB. Running it requires at least 8 GPUs, each with more than 40 GB of memory. xAI recommends serving the model with the latest version of the SGLang inference engine. Grok 2.5 is strong at logical reasoning and code generation, which makes it suitable for academic research and complex problem solving.
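As a rough sanity check on these hardware figures, the sketch below estimates weight memory from the parameter count. It assumes 269 billion parameters and an even split of weights across GPUs. It ignores the KV cache and activations. The dtype table and function names are illustrative and are not part of any xAI tooling.

```python
# Back-of-the-envelope weight-memory estimate for Grok 2.5.
# Assumptions (illustrative, not from xAI): ~269e9 parameters, weights
# sharded evenly across GPUs, KV cache and activations ignored.

PARAMS = 269e9      # parameter count quoted in the article
GIB = 1024 ** 3     # bytes per GiB

# Bytes needed to store one parameter in each (hypothetical) storage format.
BYTES_PER_PARAM = {
    "bf16": 2,      # 16-bit brain floating point
    "fp8": 1,       # 8-bit floating point
}


def weight_gib(dtype: str, params: float = PARAMS) -> float:
    """Total size of the model weights in GiB for the given dtype."""
    return params * BYTES_PER_PARAM[dtype] / GIB


def per_gpu_gib(dtype: str, num_gpus: int = 8) -> float:
    """Weight memory per GPU when the weights are split evenly over num_gpus."""
    return weight_gib(dtype) / num_gpus


for dtype in ("bf16", "fp8"):
    print(f"{dtype}: total {weight_gib(dtype):.0f} GiB, "
          f"per GPU (8 GPUs) {per_gpu_gib(dtype):.1f} GiB")
```

Under these assumptions, 16-bit weights come to roughly 501 GiB, which is in line with the ~500 GB download. Across 8 GPUs that is about 63 GiB per GPU. With 8-bit weights, each GPU would need about 31 GiB. That gap is one plausible reason the requirement is more than 40 GB per GPU. Whether the recommended setup actually quantizes weights to 8 bits is an assumption here, so check the model card before sizing hardware.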

Features of Grok 2.5

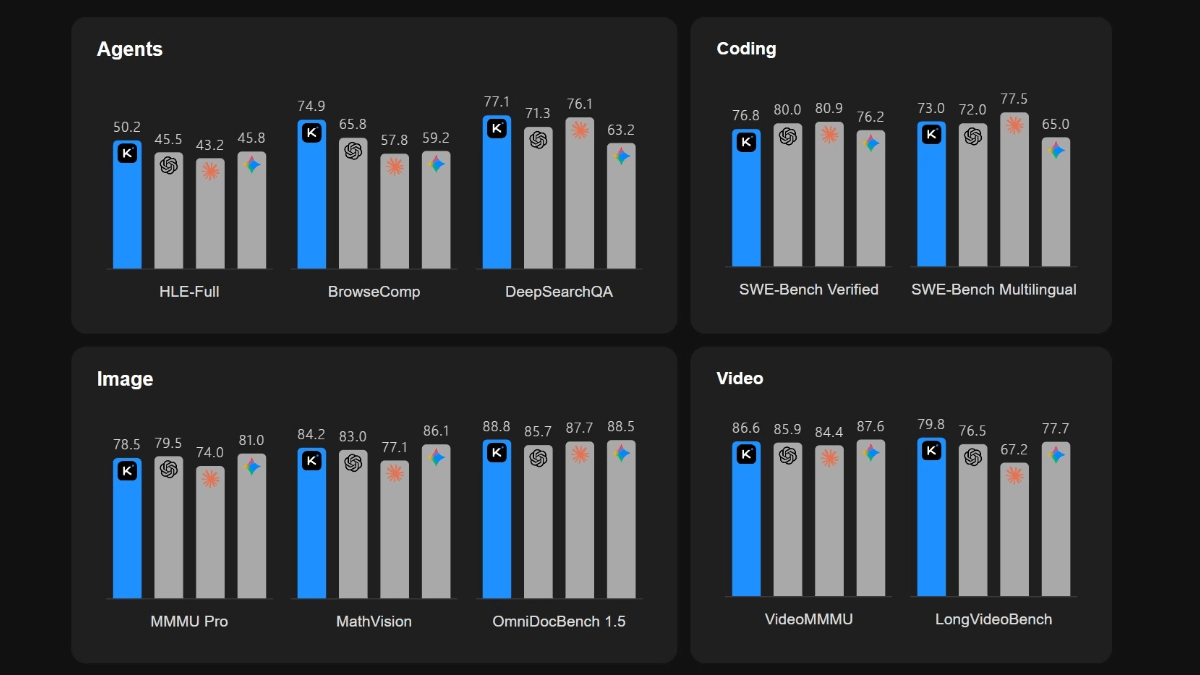

- Strong performance: Performs well on benchmarks such as graduate-level science knowledge (GPQA), general knowledge (MMLU, MMLU-Pro), and competition mathematics (MATH), approaching current state-of-the-art results.

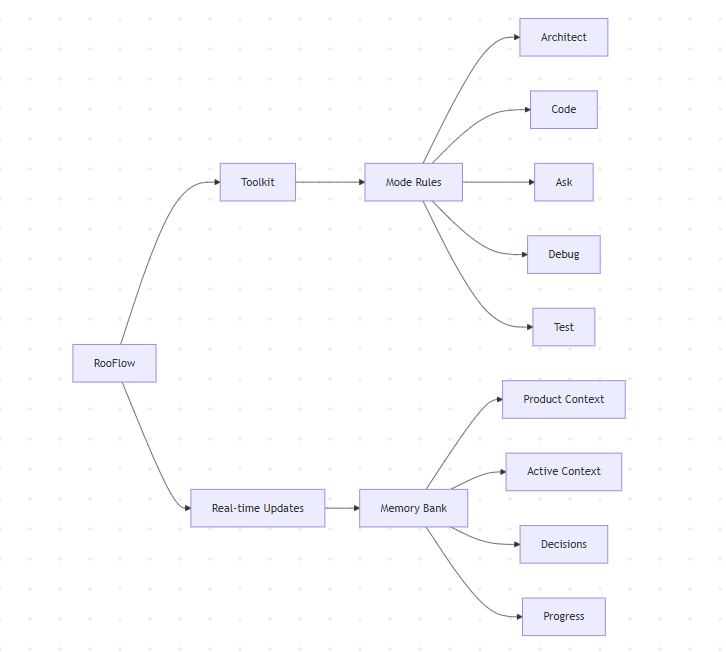

- Mixture-of-Experts architecture: The model is built on a Mixture-of-Experts (MoE) architecture. Only a subset of its expert sub-networks is activated for each token, which improves both performance and efficiency.

- Inference engine support: xAI recommends installing the latest version of the SGLang inference engine to run the model.

- High hardware requirements: Running the model requires at least 8 GPUs, each with more than 40 GB of memory.

- Open-source license restrictions: The model is released under the Grok 2 Community License for non-commercial and research use. Commercial use is permitted only in accordance with xAI's Acceptable Use Policy. The terms explicitly prohibit using the model to train, create, or improve other foundation models or large language models.
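After a Grok 2.5 server is running, you can query it over HTTP. The sketch below calls SGLang's native `/generate` endpoint and uses only the Python standard library. It assumes a server was started locally with `python -m sglang.launch_server`, for example with `--model-path` pointing at the downloaded weights and `--tp-size 8` to shard the model across eight GPUs. It also assumes the server listens on SGLang's default port, 30000. The helper names `build_generate_request` and `generate` are hypothetical.

```python
import json
import urllib.request

# Assumptions: an SGLang server is already serving Grok 2.5 locally on
# port 30000 (SGLang's default). The helper names here are hypothetical.


def build_generate_request(prompt: str,
                           base_url: str = "http://localhost:30000",
                           max_new_tokens: int = 128,
                           temperature: float = 0.0) -> urllib.request.Request:
    """Build a POST request for SGLang's native /generate endpoint."""
    payload = {
        "text": prompt,  # raw prompt text; no chat template is applied here
        "sampling_params": {
            "max_new_tokens": max_new_tokens,
            "temperature": temperature,  # 0.0 = greedy decoding
        },
    }
    return urllib.request.Request(
        url=f"{base_url}/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, **kwargs) -> str:
    """Send the request and return the generated text (needs a live server)."""
    req = build_generate_request(prompt, **kwargs)
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["text"]
```

For example, `generate("Explain mixture-of-experts in one sentence.", max_new_tokens=64)` returns the completion as a string. Sending raw text keeps the sketch independent of any client library. Because the prompt is passed through unformatted, the caller must apply whatever chat format Grok 2.5 expects, as documented on the model card.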

Core Benefits of Grok 2.5

- Academic excellence: On academic benchmarks such as graduate-level science knowledge (GPQA), general knowledge (MMLU, MMLU-Pro), and competition mathematics (MATH), Grok 2.5 performs close to the current state of the art. This makes it a strong tool for academic research.

- Efficient Mixture-of-Experts architecture: The Mixture-of-Experts (MoE) design improves model performance and efficiency and helps the model handle complex tasks.

- Strong logical reasoning and code generation: Grok 2.5 excels at logical reasoning and code generation. It can help solve complex programming problems and support technology development and scientific research.
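To make the Mixture-of-Experts idea concrete, here is a toy, pure-Python sketch of top-k routing. A router scores each expert for a token, only the k best-scoring experts run, and their outputs are mixed using softmax gate weights. The expert count (`NUM_EXPERTS = 8`), `TOP_K = 2`, and the tiny random linear "experts" are hypothetical illustration values, not Grok 2.5's actual configuration.

```python
import math
import random

# Toy MoE layer. NUM_EXPERTS, TOP_K and DIM are hypothetical values chosen
# for illustration; they are not Grok 2.5's real configuration.
NUM_EXPERTS = 8
TOP_K = 2
DIM = 4


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def make_expert(seed):
    """A stand-in 'expert': a fixed random DIM x DIM linear map."""
    rng = random.Random(seed)
    w = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(DIM)]
    return lambda x: [sum(w[i][j] * x[j] for j in range(DIM)) for i in range(DIM)]


def moe_forward(x, router_weights, experts, top_k=TOP_K):
    """Route one token vector x through its top_k experts.

    Returns (mixed output vector, list of chosen expert ids).
    """
    # Router logits: one score per expert.
    logits = [sum(r * xi for r, xi in zip(row, x)) for row in router_weights]
    # Keep only the top_k highest-scoring experts.
    chosen = sorted(range(len(experts)), key=lambda e: logits[e], reverse=True)[:top_k]
    # Gate weights: softmax renormalized over the chosen experts only.
    gates = softmax([logits[e] for e in chosen])
    out = [0.0] * len(x)
    for gate, e in zip(gates, chosen):
        y = experts[e](x)  # only the chosen experts are evaluated
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen


rng = random.Random(0)
router = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
experts = [make_expert(seed) for seed in range(NUM_EXPERTS)]
output, used = moe_forward([0.5, -1.0, 0.25, 2.0], router, experts)
print("experts used:", used, "of", NUM_EXPERTS)
```

Only `TOP_K` of the `NUM_EXPERTS` experts are evaluated per token. As a result, the compute spent in an MoE layer scales with k rather than with the total number of experts. With 8 equally sized experts and top-2 routing, each token touches a quarter of that layer's expert parameters. This sketch renormalizes the gates over just the selected experts, which is one common variant. Other designs apply the softmax over all experts before selecting.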

What is Grok 2.5's official website?

- Hugging Face model repository: https://huggingface.co/xai-org/grok-2

Who Grok 2.5 is for

- Researchers: Grok 2.5's strong academic performance and logical reasoning make it a powerful tool for in-depth research across many fields.

- Developers: Its strengths in code generation and complex problem solving can help developers tackle programming challenges and work more efficiently.

- Educators: Grok 2.5's academic knowledge and logical reasoning can help educators provide richer teaching resources and more in-depth academic instruction.

- Business users: In compliance with xAI's Acceptable Use Policy, organizations can explore new business applications built on Grok 2.5's performance and efficiency.

- Technology enthusiasts: For those interested in artificial intelligence and machine learning, Grok 2.5's open-source release provides a platform for learning and experimentation.

© Copyright notice

Article copyright: AI Sharing Circle. All rights reserved; please do not reproduce without permission.
