Microsoft's new model: Phi-4 is here, with 14B parameters comparable to larger scale models

With only 14 billion (14B) parameters, Phi-4 demonstrates performance comparable to or even surpassing some larger-scale models through innovative training methods and high-quality data. In this paper, we describe in detail Phi-4's architecture, features, training methods, and performance in real-world applications and benchmarks.

https://github.com/xinyuwei-david/david-share.git

I. Looking at indicators to measure capacity

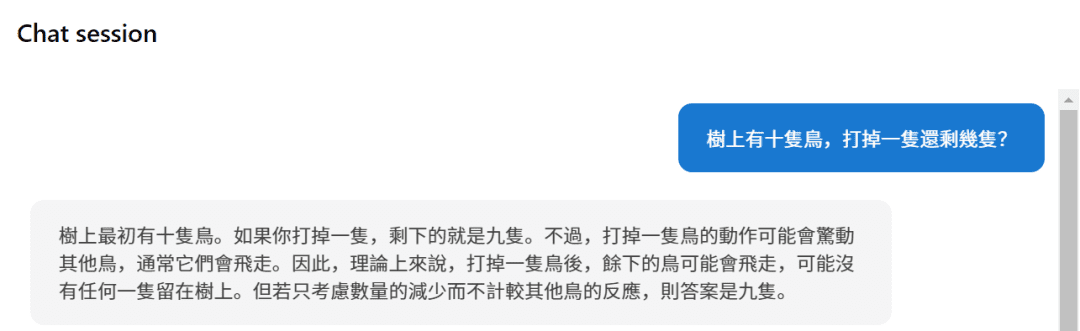

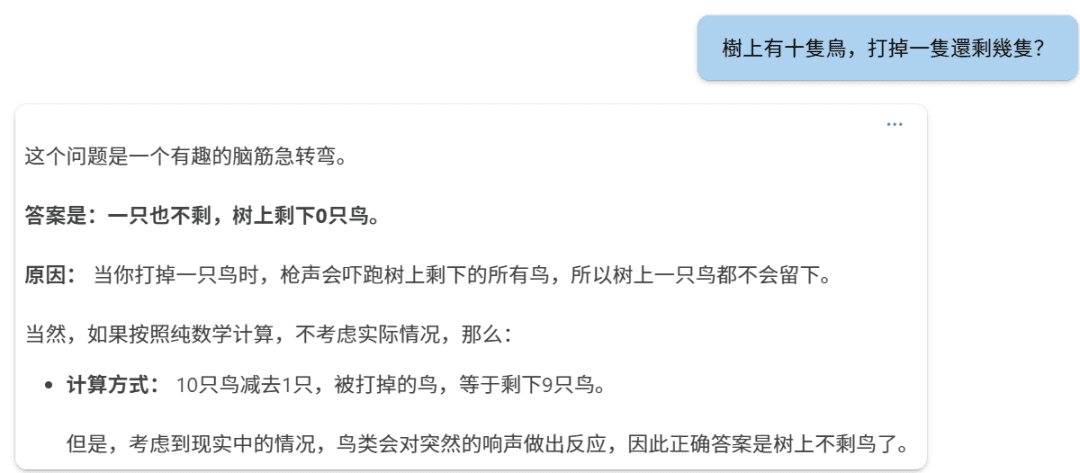

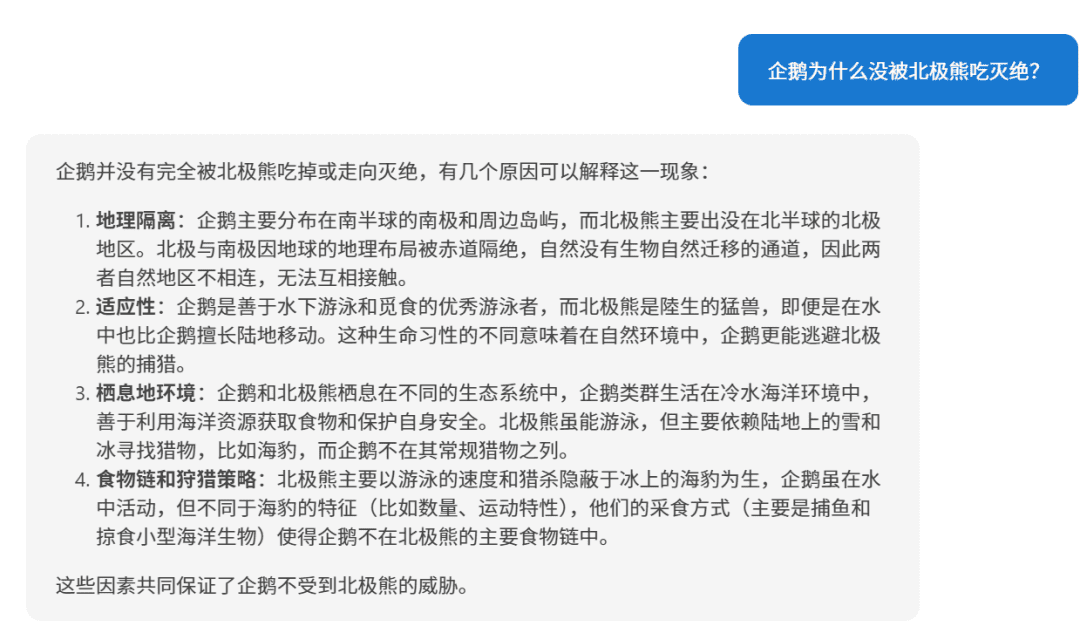

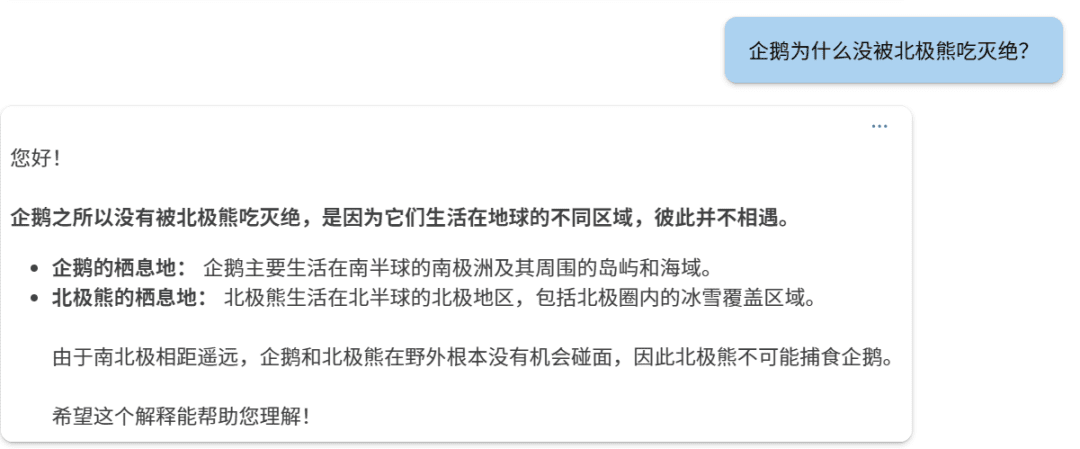

Measure three questions comparing o1 and Phi-4

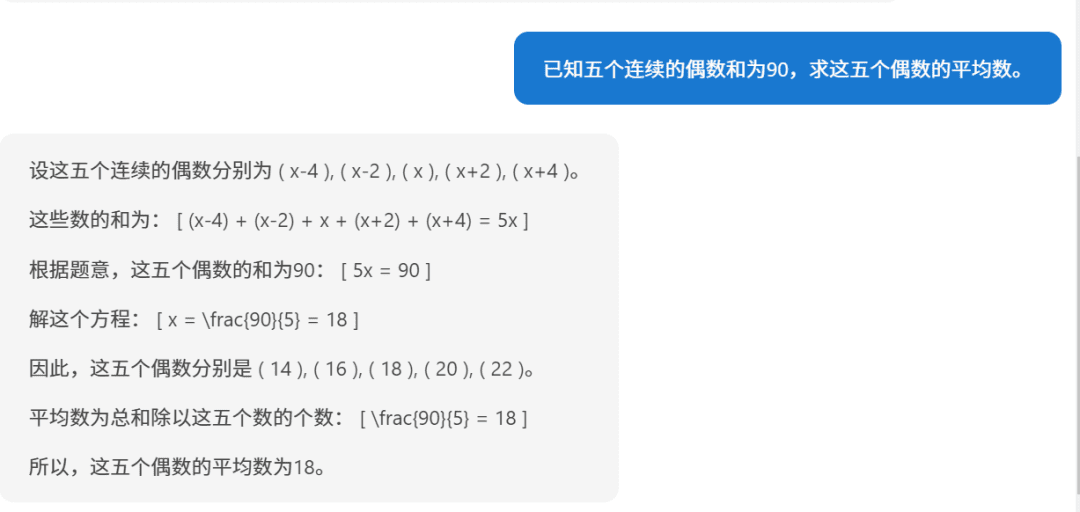

First question:

phi-4

o1

Second question:

Phi-4.

o1:

Third question:

Phi-4

o1.

要求五个连续偶数的平均数,已知它们的和为90,下面我们逐步解答。步骤1:设未知数设这五个连续的偶数为:第一个偶数:( n - 4 )第二个偶数:( n - 2 )第三个偶数:( n )第四个偶数:( n + 2 )第五个偶数:( n + 4 )(因为偶数之间相差2,所以相邻的偶数可以这样表示)步骤2:列方程求和根据题目,五个数的和为90:[(n - 4) + (n - 2) + n + (n + 2) + (n + 4) = 90]步骤3:简化方程合并同类项:[5n = 90]步骤4:求解 n[n = frac{90}{5} = 18]步骤5:求出五个偶数第一个偶数:( 18 - 4 = 14 )第二个偶数:( 18 - 2 = 16 )第三个偶数:( 18 )第四个偶数:( 18 + 2 = 20 )第五个偶数:( 18 + 4 = 22 )步骤6:计算平均数平均数等于总和除以数量:[text{平均数} = frac{14 + 16 + 18 + 20 + 22}{5} = frac{90}{5} = 18]答案:这五个连续的偶数的平均数是 18。

When you look at it this way, Phi-4's reasoning is still fine.

II. Phi-4 model architecture

1. Transformer-based decoder architecture

The Phi-4 utilizes a system based on the Transformer (used form a nominal expression) Decoder-only The architecture is similar to the GPT family of models. This architecture utilizes Self-Attention Mechanism to effectively capture long-term dependencies in text sequences and excels in natural language generation tasks.

2. Parameter size and number of layers

- Total number of parameters: 14 billion (14B) Parameters.

- Number of model floors: 40

3. Context length

- Initial context length: 4,096 Token.

- Medium-term training extension: In the mid-training phase, the context length of Phi-4 is extended to 16,000 Token (16K), which improves the model's ability to handle long texts.

4. Glossary and lexer

- Splitters: Using OpenAI's tiktoken SplitterThe program supports multi-language and has a better subtext effect.

- Glossary size: 100,352This includes some reserved unused Token.

III. Attention Mechanisms and Location Coding

1. Global attention mechanisms

Phi-4 uses Full Attention mechanism, i.e., self-attention is computed for the entire context sequence. This is in contrast to the predecessor model, Phi-3-medium, which employs 2,048 Token of the sliding window, while Phi-4 performs global attention computation directly on the contexts of 4,096 Token (initial) and 16,000 Token (expanded), improving the model's ability to capture long-range dependencies.

2. Rotary position encoding (RoPE)

To support longer context lengths, Phi-4 was adapted in the mid-training Rotary Position Embeddings (RoPE) of the base frequency:

- Base Frequency Adjustment: Increase the base frequency of the RoPE to 250,000to accommodate a context length of 16K.

- Role: RoPE helps the model to maintain the effectiveness of positional encoding in long sequences, enabling the model to maintain good performance over longer texts.

IV. Training Strategies and Methods

1. The concept of prioritizing data quality

The training strategy for Phi-4 is based on Data quality for the core. Unlike other models that are pre-trained using primarily organic data from the Internet (e.g., web content, code, etc.), Phi-4 strategically introduces throughout the training process a Synthetic dataThe

2. Generation and application of synthetic data

Synthetic data played a key role in the pre-training and mid-training of Phi-4:

- Multiple data generation techniques:

- Multi-Agent Prompting: The diversity of data is enriched by utilizing multiple language models or agents to co-generate data.

- Self-Revision Workflows: After the model generates the initial output, it performs self-assessment and correction to iteratively improve the quality of the output.

- Instruction Reversal (IR): Generating corresponding input instructions from existing outputs enhances the model's ability to understand and generate instructions.

- Advantages of synthetic data:

- Structured and progressive learning: Synthetic data allows precise control of difficulty and content, gradually guiding the model to learn complex reasoning and problem-solving skills.

- Improve training efficiency: The generation of synthetic data can provide targeted training data for model weaknesses.

- Avoid data contamination: Since the synthetic data is generated, the risk of the training data containing the content of the review set is avoided.

3. Fine screening and filtering of organic data

In addition to synthesizing data, Phi-4 focuses on carefully selecting and filtering high-quality data from a variety of sources Organic data::

- Data sources: Includes Web content, books, code libraries, academic papers, and more.

- Data Filtering:

- Remove low-quality content: Use automated and manual methods to filter out meaningless, incorrect, duplicate or harmful content.

- Prevent data contamination: A hybrid n-gram algorithm (13-gram and 7-gram) was used for de-duplication and de-contamination to ensure that the training data did not contain content from the review set.

4. Data-mixing strategy

Phi-4 is optimized in the composition of the training data with the following ratios:

- Synthesized data: take possession of 40%The

- Web Rewrites: take possession of 15%The first step is to rewrite high-quality Web content to generate new training samples.

- Organic Web data: take possession of 15%The Web's most valuable content is hand-picked and selected from the best of the best.

- Code Data: take possession of 20%, including the public code base and generated code synthesis data.

- Targeted Acquisitions: take possession of 10%, including academic papers, specialized books and other high-value content.

5. Multi-stage training process

Pre-training phase:

- Objective: Modeling basic language comprehension and generation skills.

- Data volume: economize 10 trillion (10T) Token.

Medium-term training phase:

- Objective: Extending context length to improve long text processing.

- Data volume: 250 billion (250B) Token.

Post-training phase (fine-tuning):

- Supervised Fine Tuning (SFT): Fine-tuning using high-quality, multi-domain data improves the model's ability to follow instructions and the quality of responses.

- Direct preference optimization (DPO): utilization Pivotal Token Search (PTS) and other methods to further optimize the model output.

V. Innovative training techniques

1. Pivotal Token Search (PTS)

PTS methodology is a major innovation in the Phi-4 training process:

- Principle: By identifying key Token that have a significant impact on the correctness of the answer during the generation process, the model is targeted to optimize the prediction on these Token.

- Advantage:

- Improve training efficiency: Focusing your optimization on the parts that have the greatest impact on results is twice as effective.

- Improved model performance: Helps the model make the right choices at key decision points and improves the overall quality of the output.

2. Improved direct preference optimization (DPO)

- DPO method: Optimization is performed directly using preference data to make the output of the model more consistent with human preferences.

- Innovation Points:

- Combined with PTS: Introducing PTS-generated training data pairs in DPO improves optimization.

- Assessment of indicators: Measure optimization more accurately by evaluating the model's performance on key Token.

VI. Model features and advantages

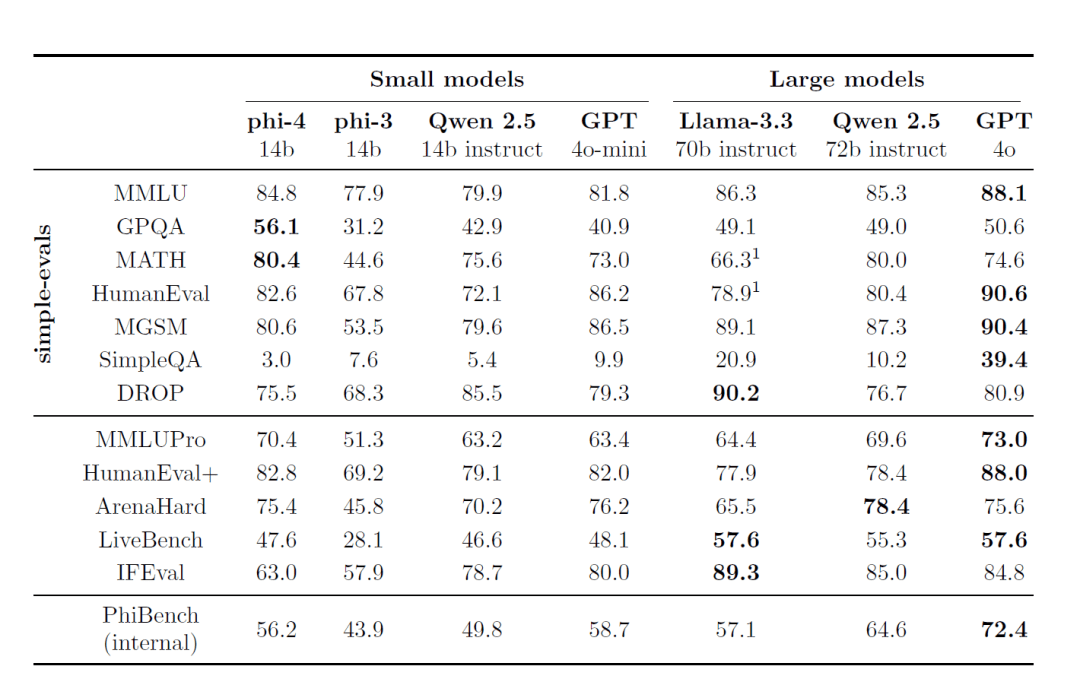

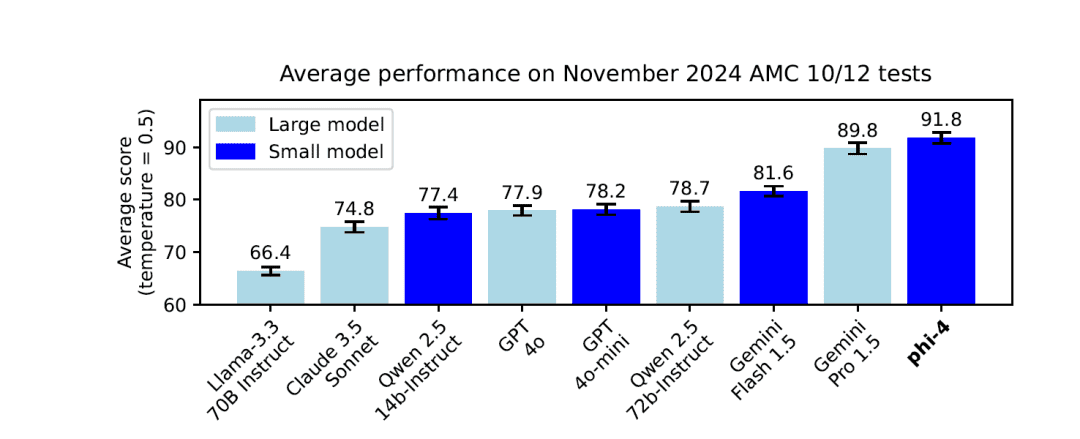

1. Excellent performance

- Small models, big capabilities: Although the parameter scale is only 14BHowever, Phi-4 performs well on several review benchmarks, especially on reasoning and problem solving tasks.

2. Excellent reasoning skills

- Math and Science Problem Solving: exist GPQA,MATH In benchmark tests such as this one, Phi-4 scores even better than its teacher model GPT-4oThe

3. Long contextualization capabilities

- Context length extension: By extending the context length in mid-training to the 16,000 Token, Phi-4 is able to handle long text and long distance dependencies more efficiently.

4. Multilingual support

- Coverage of multiple languages: The training data consisted of the German, Spanish, French, Portuguese, Italian, Hindi, Japanese and many other languages.

- Cross-linguistic competence: Excels at tasks such as translation and cross-language quizzing.

5. Security and compliance

- Principles of Responsible AI: The development process strictly follows Microsoft's Principles of Responsible AI, focusing on model security and ethics.

- Data decontamination and privacy protection: Strict data de-duplication and filtering strategies are used to prevent training data from containing sensitive content.

VII. Benchmarks and Performance

1. External benchmarking

Phi-4 demonstrates leading performance on several publicly available review benchmarks:

- MMLU (Multitasking Language Understanding): Achieved excellent results in complex multitasking comprehension tests.

- GPQA (Graduate level STEM quiz): excelled in the difficult STEM quiz, scoring higher than some of the larger scale models.

- MATH (math competition): Phi-4 demonstrates strong reasoning and computational capabilities in mathematical problem solving.

- HumanEval / HumanEval+ (code generation): In the code generation and comprehension tasks, Phi-4 outperforms models of the same size and even approaches models of a larger size.

2. Internal evaluation suite (PhiBench)

To gain insight into the model's capabilities and shortcomings, the team developed a specialized internal evaluation suite PhiBench::

- The task of diversification: Includes code debugging, code completion, mathematical reasoning, and error recognition.

- Guided model optimization: By analyzing PhiBench scores, the team was able to target improvements to the model.

VIII. Security and liability

1. Strict security alignment strategy

The development of Phi-4 follows Microsoft's Principles for Responsible AI, focusing on the safety and ethics of the model during training and fine-tuning:

- Protection against harmful content: Reduce the probability of the model generating inappropriate content by including safety fine-tuning data in the post-training phase.

- Red team testing and automated assessment: Extensive red team testing and automated security assessments were conducted, covering dozens of potential risk categories.

2. Data decontamination and prevention of overfitting

- Enhanced data decontamination strategies: A hybrid 13-gram and 7-gram algorithm is used to prevent model overfitting by removing elements of the training data that may overlap with the review benchmark.

IX. Training resources and time

1. Training time

While the official report does not specify the total training time for the Phi-4, consider this:

- Model Scale: 14B Parameters.

- Volume of training data: Pre-training phase 10T Token, mid-training 250B Token.

It can be surmised that the whole training process took a considerable amount of time.

2. GPU resource consumption

| GPUs | 1920 H100-80G |

| Training time | 21 days |

| Training data | 9.8T tokens |

X. Applications and limitations

1. Application scenarios

- Q&A system: Phi-4 performs well in complex quizzing tasks and is suitable for all kinds of intelligent quizzing applications.

- Code Generation and Understanding: It has excellent performance in programming tasks and can be used in scenarios such as code tutoring, automatic generation and debugging.

- Multilingual translation and processing: Multilingual support for globalized language services.

2. Potential limitations

- Knowledge cutoffs: The model's knowledge cuts off at the training data and may not be knowledgeable about events that occur after training.

- Long Sequence Challenge: Although the context length is extended to 16K, there may still be challenges when dealing with longer sequences.

- Risk Control: Despite stringent security measures, models may still be subject to adversarial attacks or accidentally generate inappropriate content.

The success of Phi-4 demonstrates the importance of data quality and training strategy in the development of large-scale language models. Through innovative synthetic data generation methods, careful training data mixing strategies, and advanced training techniques, Phi-4 achieves excellent performance while maintaining a small parameter size:

- Reasoning skills are outstanding: Excels in the areas of math, science and programming.

- Long text processing: The extended context length gives the model an advantage in long text processing tasks.

- Safety and responsibility: Strict adherence to responsible AI principles ensures that models are safe and ethical.

Phi-4 sets a new benchmark in the development of small parametric quantitative models, demonstrating that by focusing on data quality and training strategies, superior performance can be achieved even at smaller parameter scales.

References: /https://www.microsoft.com/en-us/research/uploads/prod/2024/12/P4TechReport.pdf

© Copyright notes

Article copyright AI Sharing Circle All, please do not reproduce without permission.

Related posts

No comments...