Weavel: An Intelligent Prompt Engineering Optimization Tool Based on Ape

General Introduction

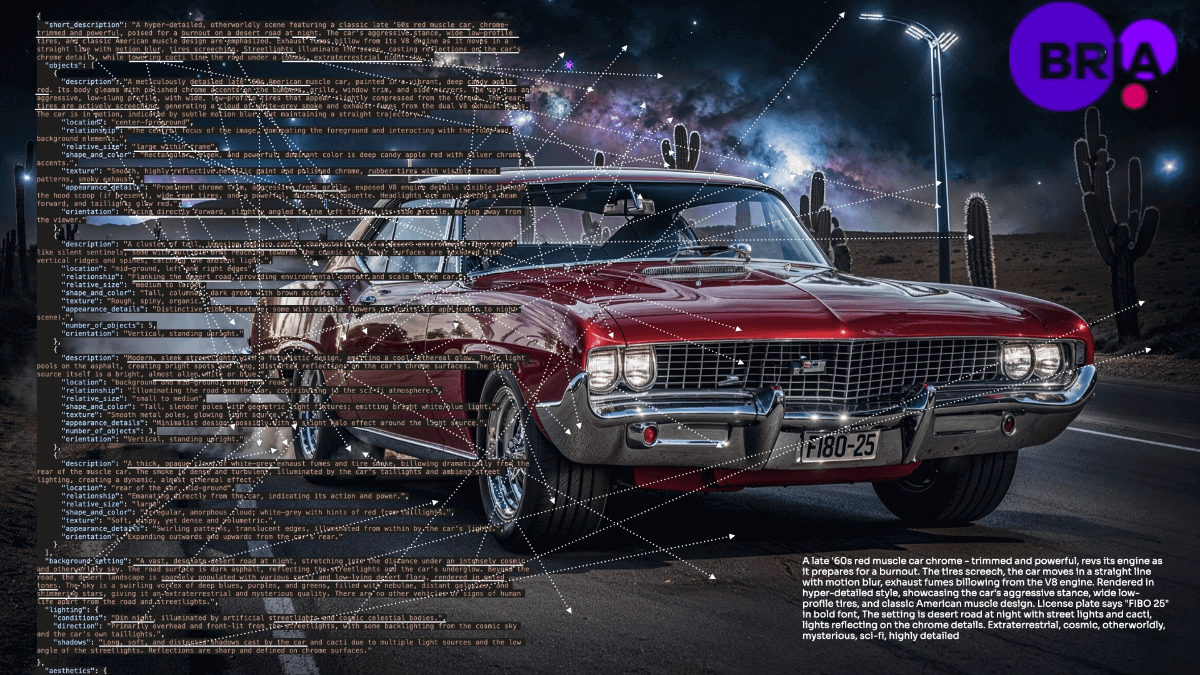

Weavel introduces Ape, an intelligent tool for optimizing AI prompt engineering. Ape helps users optimize prompts, improving performance while reducing cost and latency. According to Weavel, Ape scores 94.5% on the GSM8K benchmark, well ahead of methods such as Vanilla prompting, CoT (chain-of-thought), and DSPy. Users can set up and use Ape in a few simple steps, making prompt engineering considerably more efficient and effective.
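For context on what a GSM8K percentage measures: results are usually reported as exact-match accuracy on the final numeric answer. The sketch below shows that arithmetic, assuming reference solutions in GSM8K's `#### <answer>` format and taking the last number in the model's output as its prediction. The helper names are hypothetical and are not part of Weavel or Ape.

```python
import re
from typing import List, Optional

# Integers or decimals, optionally negative, allowing thousands separators like "1,200".
NUMBER = re.compile(r"-?\d[\d,]*(?:\.\d+)?")


def reference_answer(solution: str) -> str:
    """GSM8K reference solutions end with '#### <answer>'; return that answer without commas."""
    return solution.split("####")[-1].strip().replace(",", "")


def predicted_answer(output: str) -> Optional[str]:
    """Assumption: the model's final answer is the last number in its output."""
    matches = NUMBER.findall(output)
    return matches[-1].replace(",", "") if matches else None


def accuracy(outputs: List[str], solutions: List[str]) -> float:
    """Fraction of outputs whose final number exactly matches the reference answer."""
    if not solutions:
        return 0.0
    correct = sum(
        predicted_answer(out) == reference_answer(sol)
        for out, sol in zip(outputs, solutions)
    )
    return correct / len(solutions)
```

A score of 94.5% therefore means roughly 945 correct final answers per 1,000 test problems under this kind of exact-match rule; real evaluation harnesses may normalize answers differently (for example, treating "18.0" and "18" as equal).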

Feature List

- Prompt optimization: improves prompt performance while reducing cost and latency

- Data logging: records input and output data to build datasets

- Automated evaluation: generates evaluation code and uses an LLM as a judge

- Continuous improvement: keeps optimizing prompt performance as production data accumulates

User Guide

Installation and Integration

- Installing the SDK: Weavel provides a Python SDK, which users can install with `pip install weavel`.

- Data logging: Record data to the Weavel platform by importing the SDK with `import weavel` and then calling `weavel.log(input_data, output_data)`.

- Creating datasets: Users can import existing data or create datasets manually for prompt optimization.
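To illustrate what logging input/output pairs to build a dataset amounts to, here is a local stand-in that appends each pair to a JSON Lines file and reads the file back as a dataset. The `log_io` and `load_dataset` names and the JSONL format are assumptions for illustration only; they are not Weavel's API or storage format.

```python
import json
from pathlib import Path
from typing import Any, Dict, List


def log_io(path: Path, input_data: Dict[str, Any], output_data: Dict[str, Any]) -> None:
    """Append one input/output pair as a single JSON line (hypothetical local logger)."""
    with path.open("a", encoding="utf-8") as f:
        f.write(json.dumps({"input": input_data, "output": output_data}) + "\n")


def load_dataset(path: Path) -> List[Dict[str, Any]]:
    """Read all logged pairs back as records that a prompt optimizer could consume."""
    with path.open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]
```

Because each line is one self-contained record, the file can keep growing as production traffic arrives and be reloaded whenever the dataset needs refreshing.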

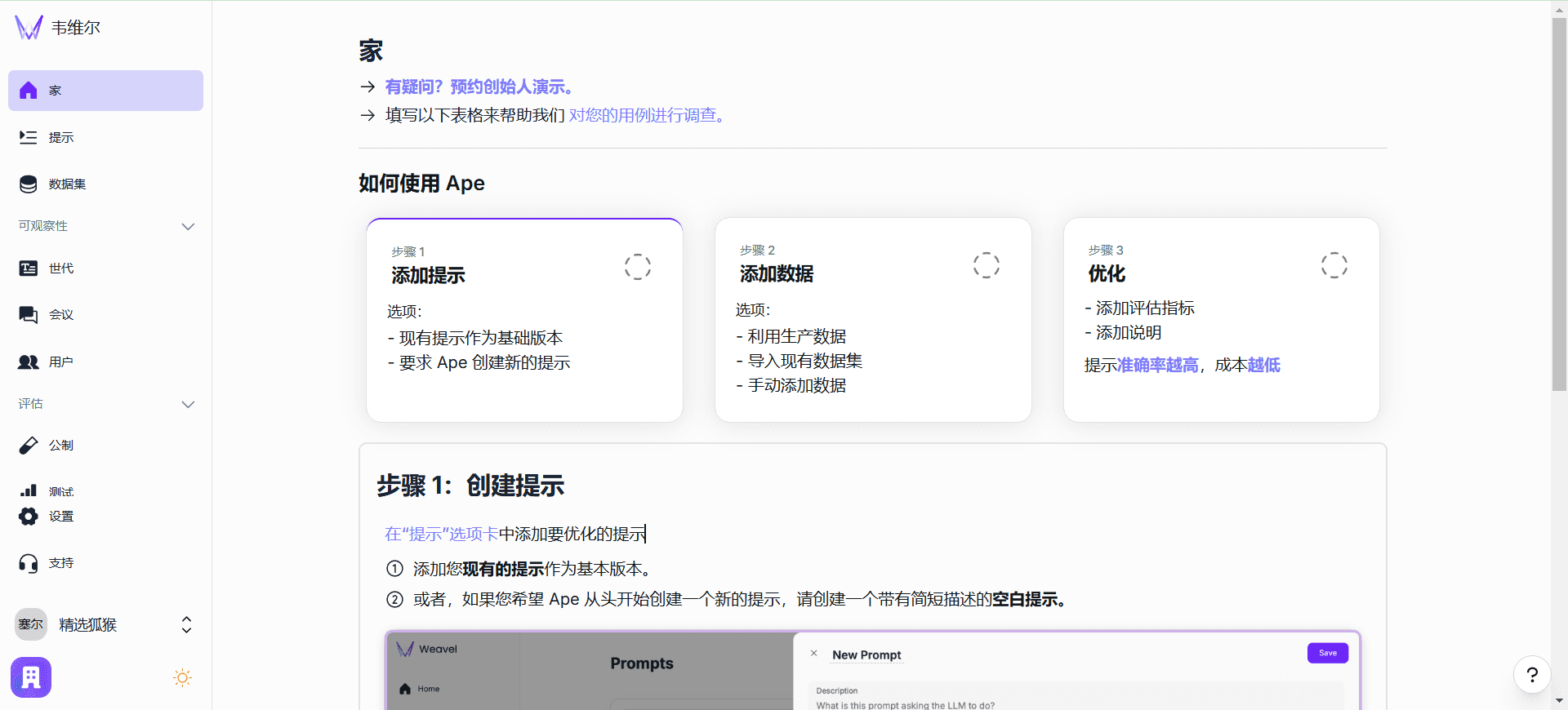

Operation Flow

- Prompt optimization: Users fill in the required information in Ape (e.g. a JSON schema) and run the optimization process; Ape then generates an optimized version of the prompt.

- Data logging: Once the Weavel SDK is integrated into an application, all input and output data is logged, and users can view the details on the Weavel platform.

- Automated evaluation: Ape generates evaluation code and uses an LLM as a judge to evaluate the prompt's effectiveness automatically.

- Continuous improvement: As production data accumulates, Ape keeps optimizing the prompt so that its performance stays high.
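The article does not describe Ape's optimization algorithm. As a rough picture of the loop in the list above (score candidate prompts on a dataset with an evaluator, keep the best, re-run as data grows), here is a plain best-of-N selection sketch. The `model` and `score` callables are hypothetical stand-ins for an LLM call and an evaluation function, and the toy versions exist only so the sketch runs; this is simple candidate selection, not Ape's method.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical interfaces: a model maps (prompt, input) to an output,
# and a scorer maps (output, expected) to a score in [0, 1].
Model = Callable[[str, str], str]
Scorer = Callable[[str, str], float]
Example = Tuple[str, str]  # (input, expected output)


def evaluate(prompt: str, dataset: Sequence[Example], model: Model, score: Scorer) -> float:
    """Mean score of one prompt across the dataset."""
    return sum(score(model(prompt, x), y) for x, y in dataset) / len(dataset)


def select_best(
    candidates: Sequence[str], dataset: Sequence[Example], model: Model, score: Scorer
) -> Tuple[str, float]:
    """Score every candidate prompt and return the best one with its score."""
    scored: List[Tuple[str, float]] = [
        (p, evaluate(p, dataset, model, score)) for p in candidates
    ]
    return max(scored, key=lambda pair: pair[1])


# Toy stand-ins so the sketch runs without an LLM.
def toy_model(prompt: str, text: str) -> str:
    return text.upper() if "uppercase" in prompt else text


def exact_match(output: str, expected: str) -> float:
    return 1.0 if output == expected else 0.0
```

For the continuous-improvement step, the same `select_best` call can simply be repeated over the enlarged dataset as new production records arrive. Generating the candidate prompts, which Ape does itself, is not sketched here.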

Usage Examples

- Prompt optimization: In an AI-powered chatbot, users can use Ape to optimize prompts for shorter response times and higher accuracy; Ape records all input and output data and generates optimized versions of the prompts.

- Data analysis: On the Weavel platform, users can view all recorded data, analyze how well the prompts perform, and make targeted optimizations.

- Automated evaluation: Ape automatically generates evaluation code and uses an LLM as a judge, helping users quickly assess the effectiveness of their prompts.
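To make "LLM as a judge" concrete: an evaluator sends the prompt's output together with a rubric to a grading model and parses a numeric score from the reply. In this sketch the grading model is a hypothetical `judge` callable (any function from prompt text to reply text), so it runs without an API key; the rubric wording, the 1 to 5 scale, and the `SCORE:` reply format are assumptions, not the code Ape generates.

```python
import re
from typing import Callable, Optional

# Assumed rubric and reply format; Ape's generated evaluators may differ.
RUBRIC = (
    "Rate the RESPONSE to the QUESTION from 1 (unusable) to 5 (fully correct and concise).\n"
    "Reply with a line of the form 'SCORE: <n>'."
)


def build_judge_prompt(question: str, response: str) -> str:
    """Combine the rubric, the question, and the response into one grading prompt."""
    return f"{RUBRIC}\n\nQUESTION:\n{question}\n\nRESPONSE:\n{response}"


def parse_score(reply: str) -> Optional[int]:
    """Extract the 1-5 score from the judge's reply, or None if it is missing."""
    match = re.search(r"SCORE:\s*([1-5])\b", reply)
    return int(match.group(1)) if match else None


def judge_response(question: str, response: str, judge: Callable[[str], str]) -> float:
    """Grade one response with the judge model; map 1-5 onto [0, 1], unparseable replies to 0."""
    score = parse_score(judge(build_judge_prompt(question, response)))
    return 0.0 if score is None else (score - 1) / 4
```

Passing the judge in as a callable keeps the evaluator testable with a stub function; in practice it would wrap a call to whichever LLM client is in use.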

© Copyright Notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
