voyage-3 and voyage-3-lite: a new generation of small but powerful general-purpose embedding models

TL;DR: We are excited to announce voyage-3 and voyage-3-lite, embedding models that push the frontier of retrieval quality, latency, and cost. voyage-3 outperforms OpenAI v3 large by 7.55% on average across all evaluated domains, including code, law, finance, multilingual, and long-context. It also costs 2.2x less and has a 3x smaller embedding dimension, which cuts vectorDB costs by 3x. voyage-3-lite offers 3.82% better retrieval accuracy than OpenAI v3 large while costing 6x less and having a 6x smaller embedding dimension. Both models support a 32K-token context length, 4x more than OpenAI.

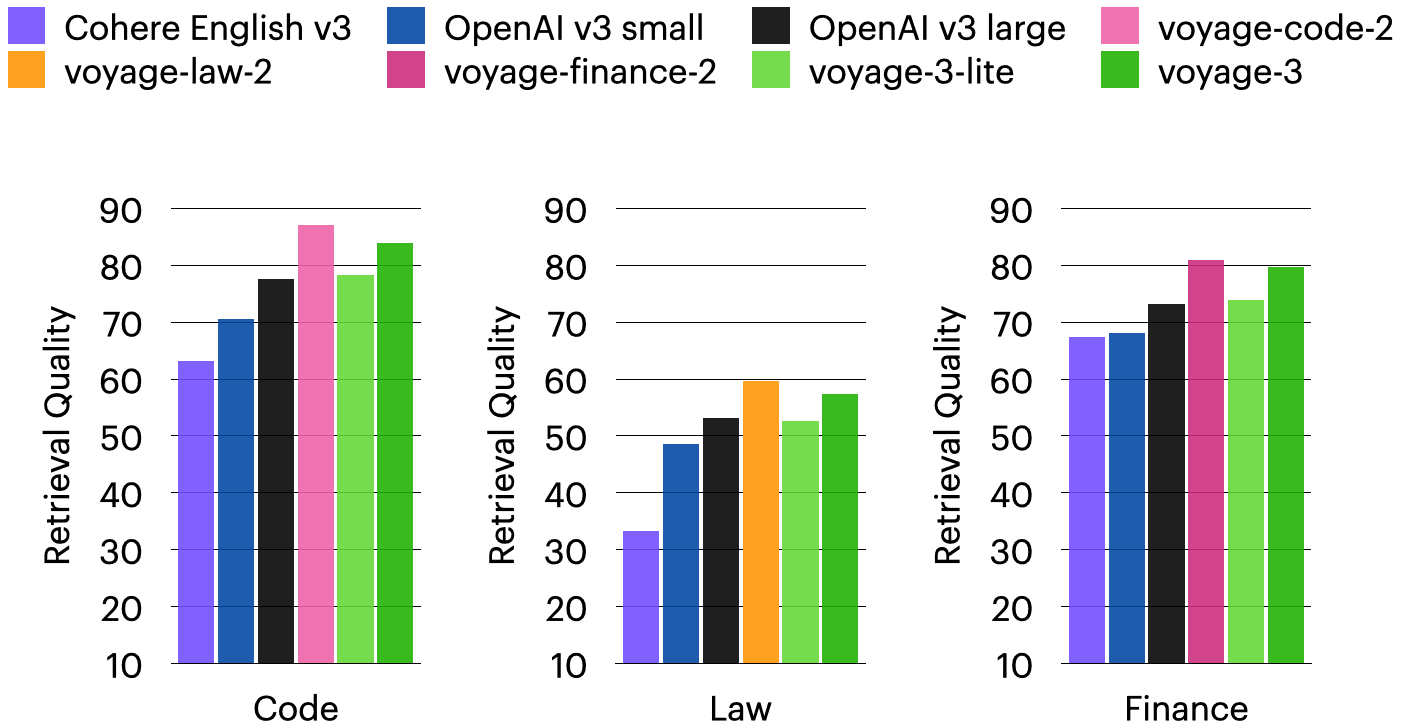

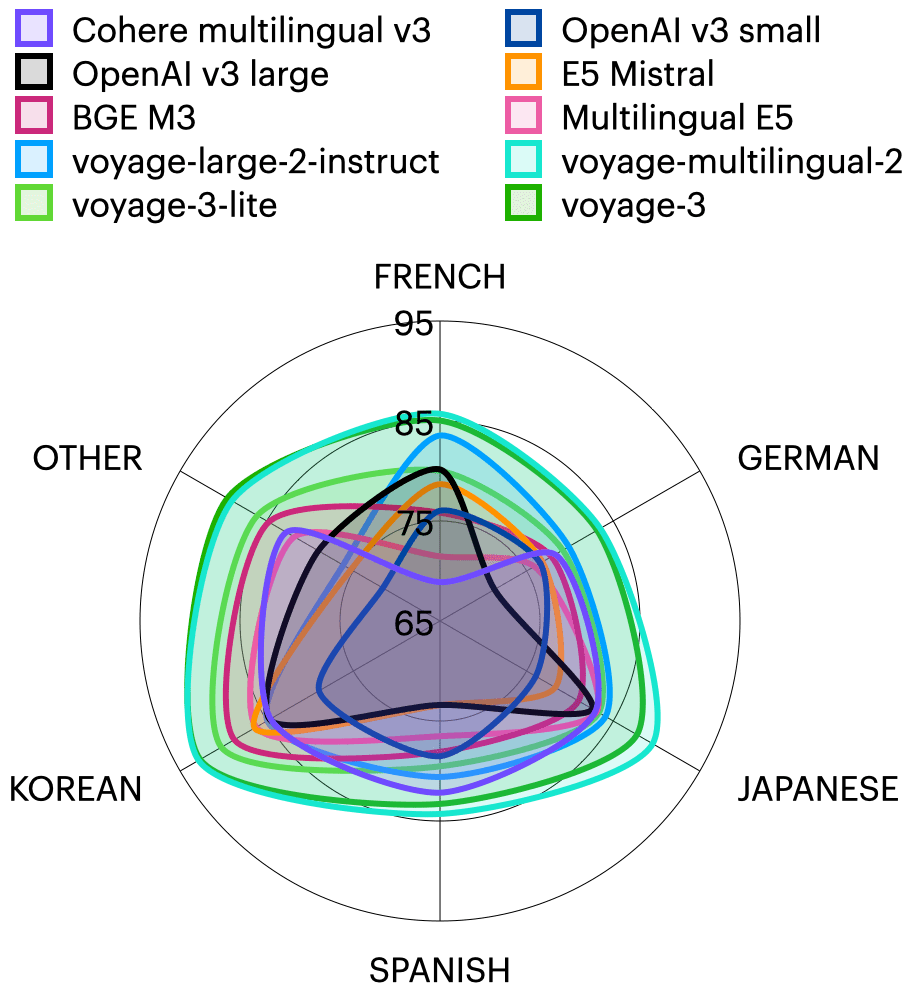

Over the past nine months, we have released the Voyage 2 series of embedding models. It includes state-of-the-art general-purpose models such as voyage-large-2, as well as domain-specific models such as voyage-code-2, voyage-law-2, voyage-finance-2, and voyage-multilingual-2, each extensively trained on data from its domain. For example, voyage-multilingual-2 excels in French, German, Japanese, Spanish, and Korean while also delivering best-in-class performance in English. We have also fine-tuned models for the use cases and data of specific organizations, such as a legal embedding model for Harvey.ai.

Now we are excited to introduce the Voyage 3 series of embedding models: voyage-3 and voyage-3-lite, with voyage-3-large coming in a few weeks. These models outperform competitors on retrieval quality while significantly reducing both price and downstream vectorDB costs. Specifically, voyage-3:

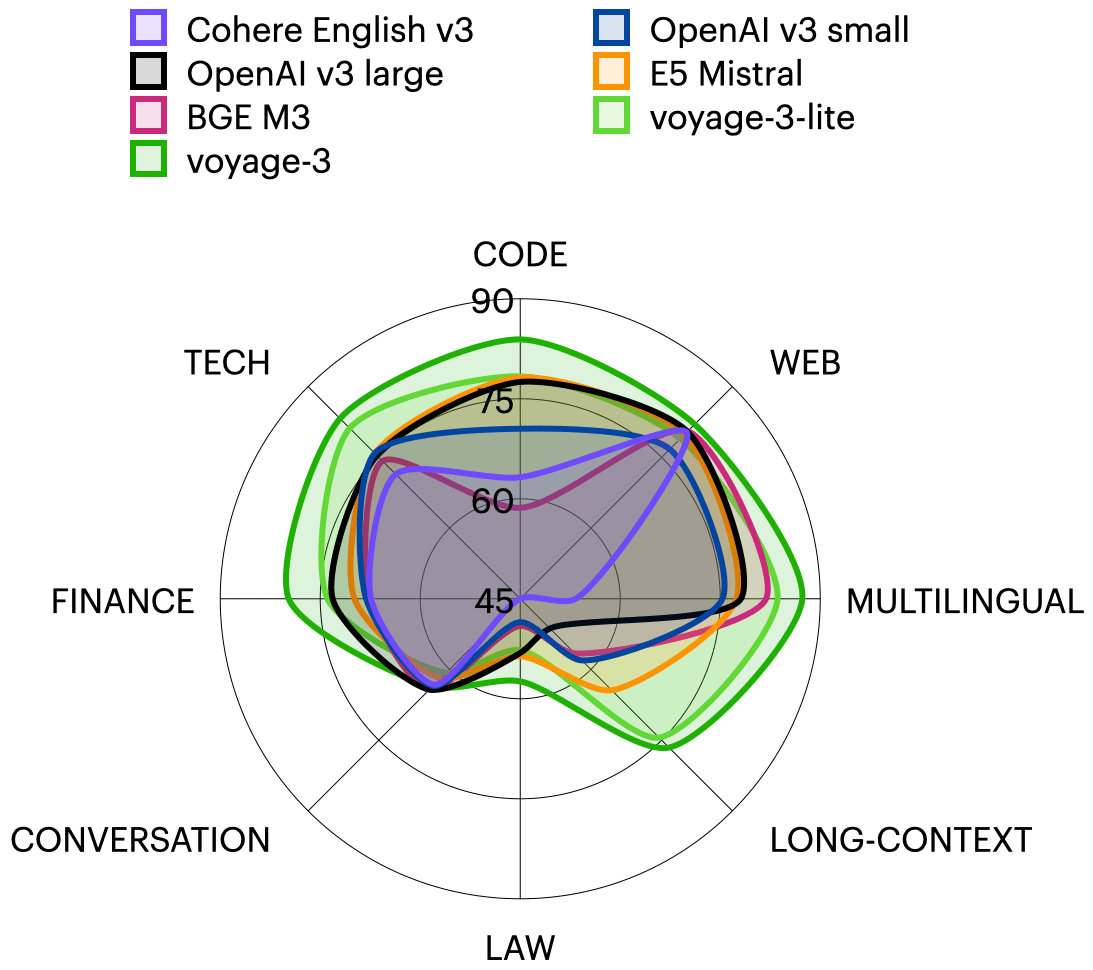

- Outperforms OpenAI v3 large by 7.55% on average across all eight evaluated domains (technical documentation, code, web, law, finance, multilingual, conversation, and long-context).

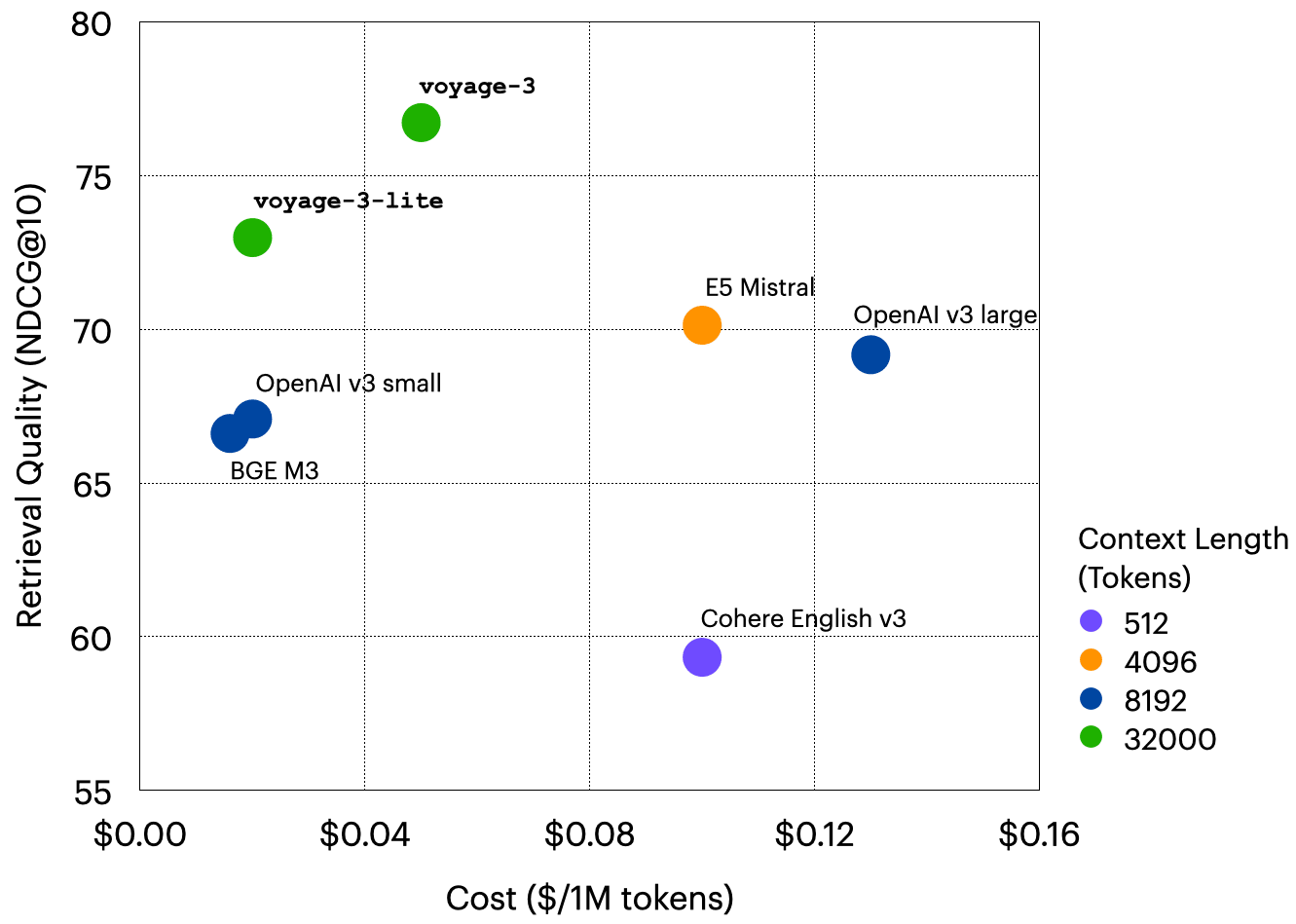

- Costs 2.2x less than OpenAI v3 large and 1.6x less than Cohere English v3, at $0.06 per 1M tokens.

- Has a 3-4x smaller embedding dimension (1024) than OpenAI (3072) and E5 Mistral (4096), cutting vectorDB costs by 3-4x.

- Supports 32K-token context lengths, compared to 8K for OpenAI and 512 for Cohere.

voyage-3-lite is a lightweight model optimized for latency and low cost, and its features include:

- The average performance across domains is 3.82% higher than OpenAI v3 large.

- The cost is 6.5 times lower than OpenAI v3 large, at $0.02 per 1 million tokens.

- It outperforms OpenAI v3 small by 7.58% at the same price.

- Embedding dimension is 6-8 times smaller (512) than OpenAI (3072) and E5 Mistral (4096), which reduces vectorDB cost by 6-8 times.

- Supports 32K-token context lengths, compared to 8K for OpenAI and 512 for Cohere.
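To see why a smaller embedding dimension translates directly into lower vectorDB cost, here is a back-of-the-envelope sketch. It assumes every vector is stored uncompressed as 32-bit floats with no index or replication overhead, and the 10M-chunk corpus is a hypothetical example; real vector databases add overhead on top, but raw storage still scales linearly with the dimension.

```python
# Back-of-the-envelope vector storage estimate.
# Assumption: embeddings stored uncompressed as float32 (4 bytes per dimension),
# ignoring index structures, metadata, and replication.
BYTES_PER_FLOAT32 = 4

# Embedding dimensions quoted in this post.
DIMENSIONS = {
    "voyage-3": 1024,
    "voyage-3-lite": 512,
    "OpenAI v3 large": 3072,
    "E5 Mistral": 4096,
}


def raw_storage_gb(dim: int, num_vectors: int) -> float:
    """Raw bytes needed for num_vectors float32 embeddings, in GB (10**9 bytes)."""
    return dim * BYTES_PER_FLOAT32 * num_vectors / 1e9


if __name__ == "__main__":
    n = 10_000_000  # hypothetical corpus of 10M chunks
    for name, dim in DIMENSIONS.items():
        print(f"{name:>16}: {raw_storage_gb(dim, n):6.1f} GB")
    # Storage ratio of each competitor relative to each Voyage model.
    for voyage in ("voyage-3", "voyage-3-lite"):
        for rival in ("OpenAI v3 large", "E5 Mistral"):
            ratio = DIMENSIONS[rival] / DIMENSIONS[voyage]
            print(f"{rival} / {voyage}: {ratio:.0f}x")
```

The ratios come out to 3x and 4x for voyage-3 and 6x and 8x for voyage-3-lite, the same factors quoted in the lists above.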

The table below summarizes the key attributes of these models alongside several competitors, accompanied by a plot of retrieval quality versus cost (see note 2 at the end of this post).

| Model | Dimensions | Context Length | Cost (per 1M tokens) | Retrieval Quality (NDCG@10) |
|---|---|---|---|---|
| voyage-3 | 1024 | 32K | $0.06 | 76.72 |
| voyage-3-lite | 512 | 32K | $0.02 | 72.98 |
| OpenAI v3 large | 3072 | 8K | $0.13 | 69.17 |
| OpenAI v3 small | 1536 | 8K | $0.02 | 67.08 |
| Cohere English v3 | 1024 | 512 | $0.10 | 59.33 |
| E5 Mistral | 4096 | 4K | $0.10 | 70.13 |
| BGE M3 | 1024 | 8K | $0.016 | 66.61 |
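The headline ratios can be recomputed from this table. The sketch below copies the table's values; the `compare` helper is hypothetical and only does arithmetic on them. The NDCG@10 gap between voyage-3 and OpenAI v3 large is 7.55 points, matching the "7.55%" figure, which suggests the headline percentages are differences in average NDCG@10 points rather than relative improvements (voyage-3-lite comes out at 3.81 points versus the quoted 3.82%, presumably due to rounding of the underlying averages).

```python
# Values copied from the summary table above:
# name -> (embedding dimension, USD per 1M tokens, average NDCG@10)
MODELS = {
    "voyage-3": (1024, 0.06, 76.72),
    "voyage-3-lite": (512, 0.02, 72.98),
    "OpenAI v3 large": (3072, 0.13, 69.17),
    "OpenAI v3 small": (1536, 0.02, 67.08),
    "Cohere English v3": (1024, 0.10, 59.33),
    "E5 Mistral": (4096, 0.10, 70.13),
    "BGE M3": (1024, 0.016, 66.61),
}


def compare(model: str, baseline: str) -> dict:
    """Hypothetical helper: compare a model against a baseline using the table columns."""
    dim, cost, ndcg = MODELS[model]
    b_dim, b_cost, b_ndcg = MODELS[baseline]
    return {
        "ndcg_gain_points": round(ndcg - b_ndcg, 2),  # absolute NDCG@10 difference
        "cost_reduction": round(b_cost / cost, 1),    # how many times cheaper
        "dim_reduction": round(b_dim / dim, 1),       # how many times smaller
    }


if __name__ == "__main__":
    print(compare("voyage-3", "OpenAI v3 large"))
    print(compare("voyage-3-lite", "OpenAI v3 large"))
```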

voyage-3 and voyage-3-lite are the result of several research innovations, including an improved architecture, distillation from larger models, pre-training on over 2 trillion high-quality tokens, and alignment of retrieval results via human feedback.

Recommendations. Any general-purpose embedding user can upgrade to voyage-3 for better retrieval quality at lower cost, or choose voyage-3-lite for further cost savings. If you are particularly interested in code, legal, finance, or multilingual retrieval, the Voyage 2 domain-specific models (voyage-code-2, voyage-law-2, voyage-finance-2, and voyage-multilingual-2) remain the best choices in their respective domains, although voyage-3 is also highly competitive (see the results section below). If you already use Voyage embeddings, simply set the `model` parameter in your Voyage API calls to "voyage-3" or "voyage-3-lite", for both the corpus and the queries.
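Because the model must be switched on both sides, a migration amounts to re-embedding the corpus as well as the queries. The sketch below assumes the official `voyageai` Python package, whose `Client.embed(texts, model=..., input_type=...)` returns an object with an `.embeddings` list; that shape is an assumption here, so the helper is typed against a small protocol and exercised with a hypothetical offline `FakeClient` instead of making network calls.

```python
from typing import Any, List, Optional, Protocol, Sequence, Tuple


class EmbeddingClient(Protocol):
    """Structural type for a client exposing embed(texts, model=..., input_type=...).

    Assumption: the official `voyageai.Client` matches this shape; it is not imported here.
    """

    def embed(self, texts: Sequence[str], model: str, input_type: Optional[str] = None) -> Any:
        ...


def embed_corpus_and_queries(
    client: EmbeddingClient,
    documents: Sequence[str],
    queries: Sequence[str],
    model: str = "voyage-3",
) -> Tuple[List[List[float]], List[List[float]]]:
    """Embed the corpus and the queries with the SAME model.

    Vectors from different models (e.g. voyage-large-2 vs voyage-3) live in
    different spaces, so switching models means re-embedding the corpus too.
    """
    doc_vecs = client.embed(list(documents), model=model, input_type="document").embeddings
    query_vecs = client.embed(list(queries), model=model, input_type="query").embeddings
    return doc_vecs, query_vecs


class _FakeResult:
    """Hypothetical stand-in for the SDK result object (only `.embeddings` is used)."""

    def __init__(self, embeddings: List[List[float]]) -> None:
        self.embeddings = embeddings


class FakeClient:
    """Hypothetical offline client: records calls, returns 1-d 'embeddings' (text length)."""

    def __init__(self) -> None:
        self.calls: List[Tuple[str, Optional[str], int]] = []

    def embed(self, texts: Sequence[str], model: str, input_type: Optional[str] = None) -> _FakeResult:
        self.calls.append((model, input_type, len(texts)))
        return _FakeResult([[float(len(t))] for t in texts])


if __name__ == "__main__":
    # With the real SDK this would roughly be (assumption, requires an API key):
    #   import voyageai
    #   client = voyageai.Client()
    client = FakeClient()
    docs, qs = embed_corpus_and_queries(
        client, ["first doc", "second doc"], ["a query"], model="voyage-3-lite"
    )
    print(client.calls)
```

Passing `input_type` distinguishes documents from queries; if your existing pipeline omits it, keep that choice consistent across both sides.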

Evaluation Details

Datasets. We evaluate on 40 domain-specific retrieval datasets spanning eight domains: technical documentation, code, law, finance, web reviews, multilingual, long documents, and conversations. Each dataset consists of a corpus to retrieve from and a set of queries. The corpus typically contains documents from a particular domain, such as StackExchange answers, court opinions, or technical documentation, while the queries can be questions, summaries of long documents, or individual documents. The following table lists the datasets in the seven categories other than multilingual. The multilingual domain covers 62 datasets in 26 languages, including French, German, Japanese, Spanish, Korean, Bengali, Portuguese, and Russian. Each of the first five languages has multiple datasets, while each remaining language has a single dataset and is grouped under OTHER in the multilingual radar chart below.

| Category | Description | Datasets |
|---|---|---|
| Technical | Technical documentation | Cohere, 5G, OneSignal, LangChain, PyTorch |
| Code | Code snippets, docstrings | LeetCodeCpp, LeetCodeJava, LeetCodePython, HumanEval, MBPP, DS1000-referenceonly, DS1000, apps_5doc |
| Law | Cases, court opinions, statutes, patents | LeCaRDv2, LegalQuAD, LegalSummarization, AILA casedocs, AILA statutes |
| Finance | SEC filings, financial QA | RAG benchmark (Apple-10K-2022), FinanceBench, TAT-QA, Finance Alpaca, FiQA Personal Finance, Stock News Sentiment, ConvFinQA, FinQA, HC3 Finance |
| Web | Reviews, forum posts, policy pages | Huffpostsports, Huffpostscience, Doordash, Health4CA |
| Long-context | Long documents such as government reports, academic papers, and dialogues | NarrativeQA, Needle, Passkey, QMSum, SummScreenFD, WikimQA |
| Conversation | Meeting transcripts, dialogues | Dialog Sum, QA Conv, HQA |

A list of all evaluation datasets is available in this spreadsheet.

Models. We evaluate voyage-3 and voyage-3-lite alongside several alternatives: OpenAI v3 small (text-embedding-3-small) and large (text-embedding-3-large), E5 Mistral (intfloat/e5-mistral-7b-instruct), BGE M3 (BAAI/bge-m3), Cohere English v3 (embed-english-v3.0), and voyage-large-2-instruct. For the domain-specific and multilingual datasets, we also evaluate voyage-law-2, voyage-finance-2, voyage-multilingual-2, Multilingual E5 (intfloat/multilingual-e5-large), and Cohere multilingual v3 (embed-multilingual-v3.0).

Metrics. Given a query, we retrieve the top 10 documents by cosine similarity and report the normalized discounted cumulative gain (NDCG@10), a standard metric of retrieval quality and a variant of recall.
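For readers unfamiliar with the metric, here is a minimal sketch of top-10 cosine retrieval followed by NDCG@10, using the textbook log2 discount. The post does not specify its evaluation harness (tie-breaking, graded versus binary relevance, vector normalization), so treat this as an illustration of the standard definition, not Voyage's evaluation code; the function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def top_k(query_vec: Sequence[float], doc_vecs: Dict[str, Sequence[float]], k: int = 10) -> List[str]:
    """Ids of the k documents most similar to the query (stable sort breaks ties)."""
    ranked = sorted(doc_vecs, key=lambda d: cosine(query_vec, doc_vecs[d]), reverse=True)
    return ranked[:k]


def ndcg_at_k(ranking: List[str], relevance: Dict[str, float], k: int = 10) -> float:
    """NDCG@k: DCG = sum over ranks i = 1..k of rel_i / log2(i + 1), divided by the ideal DCG."""
    dcg = sum(relevance.get(d, 0.0) / math.log2(i + 2) for i, d in enumerate(ranking[:k]))
    ideal = sorted(relevance.values(), reverse=True)[:k]
    idcg = sum(r / math.log2(i + 2) for i, r in enumerate(ideal))
    return dcg / idcg if idcg > 0 else 0.0


if __name__ == "__main__":
    docs = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
    ranking = top_k([1.0, 0.1], docs, k=10)
    # Only "c" is relevant; it is ranked second, so NDCG@10 = 1 / log2(3).
    print(ranking, ndcg_at_k(ranking, {"c": 1.0}, k=10))
```

A perfect ranking scores 1.0, and each relevant document pushed further down the list contributes less because of the logarithmic discount; a benchmark score is then the average over queries (and, in this post, over datasets and domains).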

Results

Cross-domain retrieval. As mentioned above and shown in the first radar chart of this post, voyage-3 outperforms OpenAI v3 large by 7.55% on average across domains. In addition, as shown in the bar chart below, voyage-3 trails Voyage's domain-specific models only slightly.

Multilingual retrieval. As shown in the radar chart below, voyage-3's multilingual retrieval quality is only slightly below that of voyage-multilingual-2, with lower latency and half the cost. voyage-3-lite outperforms all non-Voyage models, beating OpenAI v3 large, Cohere multilingual v3, and Multilingual E5 by 4.55%, 3.13%, and 3.89%, respectively.

All evaluation results are available in this spreadsheet.

Try the Voyage 3 Series!

Try voyage-3 and voyage-3-lite today! The first 200M tokens are free. Head over to our docs to learn more. If you are interested in fine-tuned embeddings, we would love to hear from you; please email us at contact@voyageai.com. Follow us on X (Twitter) and LinkedIn, and join our Discord for more updates.

- The average NDCG@10 scores of Cohere English v3 on the law and long-context datasets are 33.32% and 42.48%, respectively. In the radar chart visualization, we rounded these values up to 45%.

- E5 Mistral and BGE M3 are open-source models. We use $0.10 per 1M tokens as the cost of E5 Mistral, in line with industry-standard pricing for 7B-parameter models, and $0.016 for BGE M3, estimated from Fireworks.ai's pricing for 350M-parameter models.

© Copyright notice

All rights to this article are reserved by AI Sharing Circle; please do not reproduce without permission.
