Unsloth fixes the repetitive generation problem in quantized QwQ-32B

Recently, the Qwen team released QwQ-32B, a reasoning model whose performance on many benchmarks rivals DeepSeek-R1. However, many users have encountered infinite generation, excessive repetition, <think> token issues, and fine-tuning problems. This article provides a detailed guide to help you debug and solve these problems and unleash the full potential of QwQ-32B.

The models uploaded by the Unsloth team fix the above bugs and work better with fine-tuning and with tools and frameworks such as vLLM and Transformers. If you use llama.cpp, or another tool that uses llama.cpp as its backend engine, see the llama.cpp Recommended Settings section of this article for guidance on fixing infinite generation.

Unsloth QwQ-32B model (bug fixed):

- GGUF format model

- Dynamic 4-bit Quantization Model

- BnB 4-bit Quantization Model

- 16-bit full precision model

Official Recommended Settings


Based on Qwen's official recommendations, the following are the recommended parameter settings for model inference:

- Temperature: 0.6

- Top_K: 40 (recommended range 20-40)

- Min_P: 0.1 (optional, but works well)

- Top_P: 0.95

- Repetition Penalty: 1.0 (in llama.cpp and transformers, 1.0 means disabled)

- Chat template:

<|im_start|>user\nCreate a Flappy Bird game in Python.<|im_end|>\n<|im_start|>assistant\n<think>\n
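As a minimal sketch of the chat template above, the helper below wraps a single user message in the same markers and ends the prompt with the opening <think> tag. The function name build_qwq_prompt is an assumption made for illustration; it is not part of any library.

```python
def build_qwq_prompt(user_message: str) -> str:
    """Wrap one user turn in the chat template shown above.

    build_qwq_prompt is a hypothetical helper name, used only for this sketch.
    """
    return (
        "<|im_start|>user\n"
        f"{user_message}<|im_end|>\n"
        "<|im_start|>assistant\n"
        "<think>\n"
    )


prompt = build_qwq_prompt("Create a Flappy Bird game in Python.")
print(repr(prompt))
```

Ending the prompt with the <think> line means the model starts directly in its reasoning phase.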

llama.cpp Recommended Settings


The Unsloth team noticed that many users enable a repetition penalty (a value above 1.0). However, this actually interferes with the sampling mechanism of llama.cpp. The repetition penalty is intended to reduce repeated generation, but experiments showed that it does not have the desired effect.

Disabling the repetition penalty altogether (setting it to 1.0) is one option. However, the Unsloth team found that a suitable repetition penalty is still effective in suppressing infinite generation.

To use the repetition penalty effectively, the order of the samplers in llama.cpp must also be adjusted; otherwise the penalty leads to infinite generation. To do this, add the following parameter:

--samplers "top_k;top_p;min_p;temperature;dry;typ_p;xtc"

By default, llama.cpp uses the following order of samplers:

--samplers "dry;top_k;typ_p;top_p;min_p;xtc;temperature"

The Unsloth team's adjusted order essentially swaps the positions of temperature and dry, and moves min_p forward. This means the samplers are applied in the following order:

top_k=40

top_p=0.95

min_p=0.1

temperature=0.6

dry

typ_p

xtc
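To make the idea of a sampler chain more concrete, here is a toy sketch of the first four steps of the adjusted order (top_k, top_p, min_p, temperature) applied to a made-up set of logits. It is a simplified illustration and not llama.cpp's actual implementation: the function names, the toy vocabulary, and the logit values are all assumptions, and the dry, typ_p, and xtc steps are left out.

```python
import math


def softmax(logits):
    """Convert a {token: logit} dict into probabilities."""
    peak = max(logits.values())
    exps = {tok: math.exp(val - peak) for tok, val in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def top_k(logits, k):
    """Keep the k highest-scoring tokens."""
    keep = sorted(logits, key=logits.get, reverse=True)[:k]
    return {tok: logits[tok] for tok in keep}


def top_p(logits, p):
    """Keep the smallest set of top tokens whose cumulative probability reaches p."""
    probs = softmax(logits)
    kept, cumulative = {}, 0.0
    for tok in sorted(probs, key=probs.get, reverse=True):
        kept[tok] = logits[tok]
        cumulative += probs[tok]
        if cumulative >= p:
            break
    return kept


def min_p(logits, p):
    """Drop tokens whose probability is below p times the top probability."""
    probs = softmax(logits)
    threshold = p * max(probs.values())
    return {tok: logits[tok] for tok in logits if probs[tok] >= threshold}


def temperature(logits, t):
    """Divide logits by t; t < 1 sharpens the distribution."""
    return {tok: val / t for tok, val in logits.items()}


def run_chain(logits, chain):
    """Apply each (sampler, argument) pair in order, then normalize."""
    for step, arg in chain:
        logits = step(logits, arg)
    return softmax(logits)


# Toy logits, invented for this example.
toy_logits = {"the": 3.0, "a": 2.5, "bird": 1.0, "pipe": 0.2, "zzz": -4.0}
chain = [(top_k, 40), (top_p, 0.95), (min_p, 0.1), (temperature, 0.6)]
final = run_chain(toy_logits, chain)
print(sorted(final, key=final.get, reverse=True))
```

In this sketch temperature runs last, so it only reshapes the probabilities of the tokens that survived the filters; placing it earlier would change which tokens the probability-based filters keep.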

If the problem persists, try slightly increasing --repeat-penalty from 1.0 to 1.2 or 1.3.

Thanks to @krist486 for alerting us to the sampling direction issue with llama.cpp.

Dry Repetition Penalty


The Unsloth team looked into the suggested dry penalty usage and tried a value of 0.8. However, the experiments showed that the dry penalty is more likely to cause syntax errors, especially when generating code. If you still encounter problems, try increasing the dry penalty to 0.8.

If you choose to use the dry penalty, the adjusted sampling order can be equally helpful.

Tutorial: Running QwQ-32B in Ollama

- Install ollama first if you haven't already!

apt-get update

apt-get install pciutils -y

curl -fsSL https://ollama.com/install.sh | sh

- Run the model! If the run fails, try running ollama serve in another terminal. The Unsloth team has included all fixes and suggested parameters (temperature, etc.) in the param file of the Hugging Face upload!

ollama run hf.co/unsloth/QwQ-32B-GGUF:Q4_K_M

Tutorial: Running QwQ-32B in llama.cpp

- Get the latest version of llama.cpp from its GitHub repository. You can follow the build instructions below. If you don't have a GPU or just want CPU inference, replace -DGGML_CUDA=ON with -DGGML_CUDA=OFF.

apt-get update

apt-get install pciutils build-essential cmake curl libcurl4-openssl-dev -y

git clone https://github.com/ggerganov/llama.cpp

cmake llama.cpp -B llama.cpp/build \
    -DBUILD_SHARED_LIBS=ON -DGGML_CUDA=ON -DLLAMA_CURL=ON

cmake --build llama.cpp/build --config Release -j --clean-first --target llama-quantize llama-cli llama-gguf-split

cp llama.cpp/build/bin/llama-* llama.cpp

- Download the model (after installing the dependencies with pip install huggingface_hub hf_transfer). You can choose Q4_K_M or another version, such as BF16 full precision. More versions are available at: https://huggingface.co/unsloth/QwQ-32B-GGUF.

# !pip install huggingface_hub hf_transfer

import os

os.environ["HF_HUB_ENABLE_HF_TRANSFER"] = "1"

from huggingface_hub import snapshot_download

snapshot_download(
    repo_id="unsloth/QwQ-32B-GGUF",
    local_dir="unsloth-QwQ-32B-GGUF",
    allow_patterns=["*Q4_K_M*"],  # For Q4_K_M; the * wildcards are needed to match file names
)
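The allow_patterns argument takes glob-style patterns, so a quantization name only selects files when it is surrounded by wildcards. The sketch below approximates that filtering with fnmatch from the Python standard library; it is an illustration under that assumption, not the library's own code. Only QwQ-32B-Q4_K_M.gguf is a file name taken from this article; the other names are hypothetical.

```python
from fnmatch import fnmatch

files = [
    "QwQ-32B-Q4_K_M.gguf",  # file name used by the llama-cli command in this article
    "QwQ-32B-Q8_0.gguf",    # hypothetical sibling file, for illustration only
    "README.md",            # hypothetical
]


def select(names, patterns):
    """Return the names that match at least one glob pattern."""
    return [name for name in names if any(fnmatch(name, pat) for pat in patterns)]


with_wildcards = select(files, ["*Q4_K_M*"])
without_wildcards = select(files, ["Q4_K_M"])

print(with_wildcards)     # ['QwQ-32B-Q4_K_M.gguf']
print(without_wildcards)  # []
```

A pattern without wildcards must equal the whole file name, which is why the bare quantization name matches nothing.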

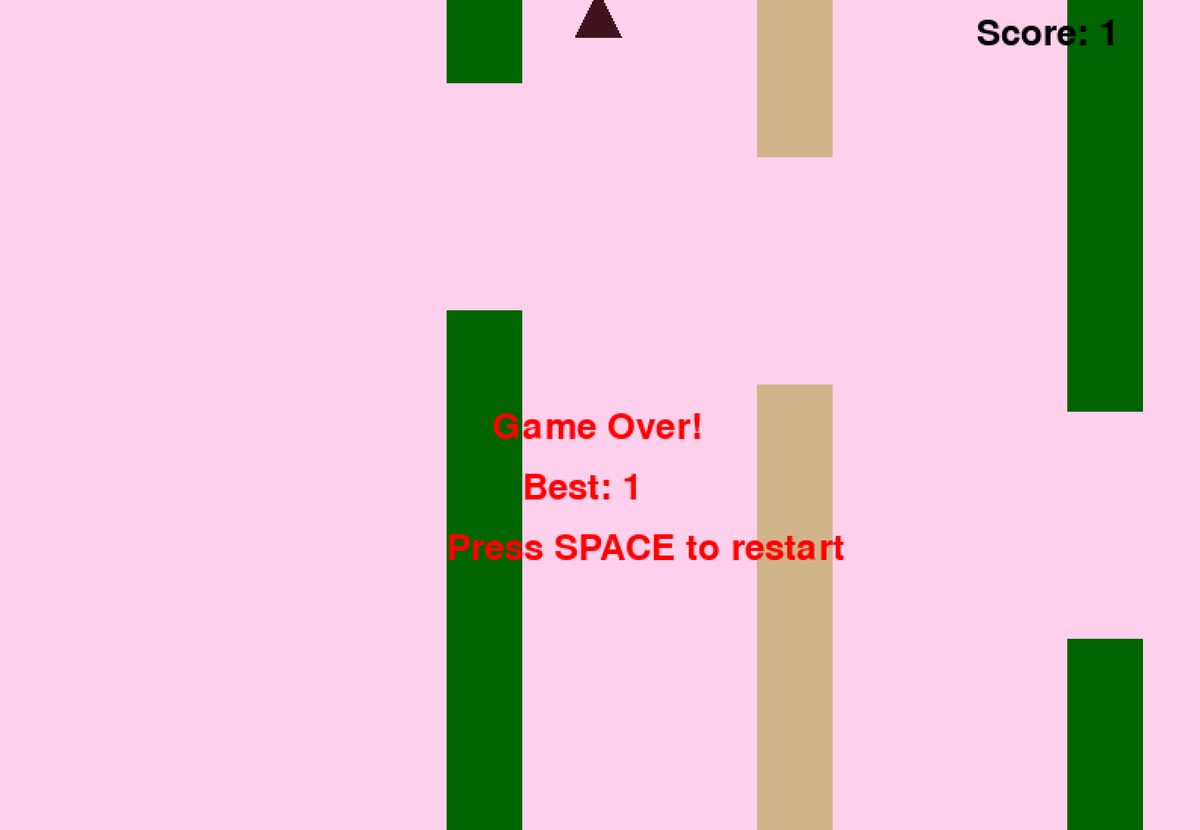

- Run the Flappy Bird test script provided by Unsloth; the output will be saved to the file Q4_K_M_yes_samplers.txt.

- Adjust the parameters to suit your setup. --threads 32 sets the number of CPU threads, --ctx-size 16384 sets the context length, and --n-gpu-layers 99 sets the number of layers offloaded to the GPU. If the GPU runs out of memory, try lowering the --n-gpu-layers value. Remove this parameter if you only use CPU inference.

- --repeat-penalty 1.1 and --dry-multiplier 0.5 are the repetition penalty and dry penalty parameters, which you can adjust as needed.

./llama.cpp/llama-cli \
    --model unsloth-QwQ-32B-GGUF/QwQ-32B-Q4_K_M.gguf \
    --threads 32 \
    --ctx-size 16384 \
    --n-gpu-layers 99 \
    --seed 3407 \
    --prio 2 \
    --temp 0.6 \
    --repeat-penalty 1.1 \
    --dry-multiplier 0.5 \
    --min-p 0.1 \
    --top-k 40 \
    --top-p 0.95 \
    -no-cnv \
    --samplers "top_k;top_p;min_p;temperature;dry;typ_p;xtc" \
    --prompt "<|im_start|>user\nCreate a Flappy Bird game in Python. You must include these things:\n1. You must use pygame.\n2. The background color should be randomly chosen and is a light shade. Start with a light blue color.\n3. Pressing SPACE multiple times will accelerate the bird.\n4. The bird's shape should be randomly chosen as a square, circle or triangle. The color should be randomly chosen as a dark color.\n5. Place on the bottom some land colored as dark brown or yellow chosen randomly.\n6. Make a score shown on the top right side. Increment if you pass pipes and don't hit them.\n7. Make randomly spaced pipes with enough space. Color them randomly as dark green or light brown or a dark gray shade.\n8. When you lose, show the best score. Make the text inside the screen. Pressing q or Esc will quit the game. Restarting is pressing SPACE again.\nThe final game should be inside a markdown section in Python. Check your code for errors and fix them before the final markdown section.<|im_end|>\n<|im_start|>assistant\n<think>\n" \
    2>&1 | tee Q4_K_M_yes_samplers.txt
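When the same settings are reused across several runs, it can help to assemble the llama-cli argument list programmatically. The following is a minimal sketch under that assumption: build_llama_cli_args and SETTINGS are hypothetical names introduced here, while the flags and values are the ones used in this article. The script only prints the command; it does not run it.

```python
import shlex

# Flags and values copied from the command in this article.
SETTINGS = {
    "--seed": 3407,
    "--prio": 2,
    "--temp": 0.6,
    "--repeat-penalty": 1.1,
    "--dry-multiplier": 0.5,
    "--min-p": 0.1,
    "--top-k": 40,
    "--top-p": 0.95,
    "--samplers": "top_k;top_p;min_p;temperature;dry;typ_p;xtc",
}


def build_llama_cli_args(model_path, prompt, settings,
                         threads=32, ctx_size=16384, gpu_layers=99):
    """Assemble the argument list; pass gpu_layers=None for CPU-only inference."""
    args = [
        "./llama.cpp/llama-cli",
        "--model", model_path,
        "--threads", str(threads),
        "--ctx-size", str(ctx_size),
    ]
    if gpu_layers is not None:
        args += ["--n-gpu-layers", str(gpu_layers)]
    for flag, value in settings.items():
        args += [flag, str(value)]
    args += ["-no-cnv", "--prompt", prompt]
    return args


# Raw string: the \n sequences stay literal, as in the shell command.
short_prompt = r"<|im_start|>user\nCreate a Flappy Bird game in Python.<|im_end|>\n<|im_start|>assistant\n<think>\n"
args = build_llama_cli_args(
    "unsloth-QwQ-32B-GGUF/QwQ-32B-Q4_K_M.gguf", short_prompt, SETTINGS
)
print(shlex.join(args))
```

Removing the "--samplers" entry from the dictionary reproduces the comparison run without the sampler fix.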

The Flappy Bird prompt above comes from Unsloth's DeepSeek-R1 Dynamic 1.58-bit blog post. The full prompt is shown below:

<|im_start|>user

Create a Flappy Bird game in Python. You must include these things:

1. You must use pygame.

2. The background color should be randomly chosen and is a light shade. Start with a light blue color.

3. Pressing SPACE multiple times will accelerate the bird.

4. The bird's shape should be randomly chosen as a square, circle or triangle. The color should be randomly chosen as a dark color.

5. Place on the bottom some land colored as dark brown or yellow chosen randomly.

6. Make a score shown on the top right side. Increment if you pass pipes and don't hit them.

7. Make randomly spaced pipes with enough space. Color them randomly as dark green or light brown or a dark gray shade.

8. When you lose, show the best score. Make the text inside the screen. Pressing q or Esc will quit the game. Restarting is pressing SPACE again.

The final game should be inside a markdown section in Python. Check your code for errors and fix them before the final markdown section.<|im_end|>

<|im_start|>assistant

<think>

Here are the beginning and ending parts of the Python code generated by the model (with the thought process removed):

import pygame
import random
import sys

pygame.init()
### Continues

class Bird:
    def __init__(self):
        ### Continues

def main():
    best_score = 0
    current_score = 0
    game_over = False
    pipes = []
    first_time = True  # Track first game play

    # Initial setup
    background_color = (173, 216, 230)  # Light blue initially
    land_color = random.choice(land_colors)
    bird = Bird()

    while True:
        for event in pygame.event.get():
            ### Continues

        if not game_over:
            # Update bird and pipes
            bird.update()
            ### Continues

        # Drawing
        ### Continues
        pygame.display.flip()
        clock.tick(60)

if __name__ == "__main__":
    main()

The model successfully generated a working Flappy Bird game!

Next, try removing the --samplers "top_k;top_p;min_p;temperature;dry;typ_p;xtc" parameter and run the same command without the Unsloth fix. The output will be saved to the file Q4_K_M_no_samplers.txt.

./llama.cpp/llama-cli \
    --model unsloth-QwQ-32B-GGUF/QwQ-32B-Q4_K_M.gguf \
    --threads 32 \
    --ctx-size 16384 \
    --n-gpu-layers 99 \
    --seed 3407 \
    --prio 2 \
    --temp 0.6 \
    --repeat-penalty 1.1 \
    --dry-multiplier 0.5 \
    --min-p 0.1 \
    --top-k 40 \
    --top-p 0.95 \
    -no-cnv \
    --prompt "<|im_start|>user\nCreate a Flappy Bird game in Python. You must include these things:\n1. You must use pygame.\n2. The background color should be randomly chosen and is a light shade. Start with a light blue color.\n3. Pressing SPACE multiple times will accelerate the bird.\n4. The bird's shape should be randomly chosen as a square, circle or triangle. The color should be randomly chosen as a dark color.\n5. Place on the bottom some land colored as dark brown or yellow chosen randomly.\n6. Make a score shown on the top right side. Increment if you pass pipes and don't hit them.\n7. Make randomly spaced pipes with enough space. Color them randomly as dark green or light brown or a dark gray shade.\n8. When you lose, show the best score. Make the text inside the screen. Pressing q or Esc will quit the game. Restarting is pressing SPACE again.\nThe final game should be inside a markdown section in Python. Check your code for errors and fix them before the final markdown section.<|im_end|>\n<|im_start|>assistant\n<think>\n" \
    2>&1 | tee Q4_K_M_no_samplers.txt

Without the fix, the model's output contains loops, a large number of Python syntax errors, and a variety of other problems. For example, the following code snippet looks correct but is not: line 39, pipes.clear(), raises NameError: name 'pipes' is not defined. Did you forget to import 'pipes'?

import pygame
import random

pygame.init()

# Constants
WIDTH, HEIGHT = 800, 600
GROUND_HEIGHT = 20
GRAVITY = 0.7
PIPE_SPEED = -3
BIRD_SIZE = 45
MIN_GAP = 130
MAX_GAP = 200
PIPE_COLORS = [(0, 96, 0), (205, 133, 63), (89, 97, 107)]
DARK_BROWN = (94, 72, 4)
YELLOW = (252, 228, 6)

screen = pygame.display.set_mode((WIDTH, HEIGHT))
clock = pygame.time.Clock()

def random_light_color():
    return (
        random.randint(180, 230),
        random.randint(190, 300),
        random.randint(250, 255)
    )

def reset_game():
    global bird_x, bird_y
    global pipes, score
    global background_color, land_color
    global bird_shape, bird_color

    # Bird properties
    bird_x = WIDTH * 0.3
    bird_y = HEIGHT // 2
    bird_vel = -5  # Initial upward thrust

    pipes.clear() ### <<< NameError: name 'pipes' is not defined. Did you forget to import 'pipes'?

If you further increase --repeat-penalty to 1.5, the situation gets worse, with syntax errors becoming more obvious and the code not working at all.

import pygame

from random import randint # For generating colors/shapes/positions randomly

pygame.init()

# Constants:

WIDTH, HEIGHT =456 ,702 #

BACKGROUND_COLOR_LIGHTS=['lightskyblue']

GAP_SIZE=189 #

BIRD_RADIUS=3.

PIPE_SPEED=- ( ) ?

class Game():

def __init__(self):

self.screen_size=( )

def reset_game_vars():

global current_scor e

# set to zero and other initial states.

# Main game loop:

while running :

for event in pygame.event.get() :

if quit ... etc

pygame.quit()

print("Code is simplified. Due time constraints, full working version requires further implementation.")
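One quick way to screen generated code for the kind of syntax errors shown above is to parse it without running it. The sketch below uses Python's built-in ast module; the helper name first_syntax_error and the sample strings are assumptions made for illustration. Parsing catches syntax errors only, so a runtime problem such as the NameError in the earlier snippet passes this check.

```python
import ast


def first_syntax_error(source: str):
    """Return a short description of the first syntax error, or None if the code parses."""
    try:
        ast.parse(source)  # parses only; the code is never executed
    except SyntaxError as err:
        return f"line {err.lineno}: {err.msg}"
    return None


well_formed = "import random\nvalue = random.randint(0, 255)\n"
broken = "PIPE_SPEED=- ( ) ?\n"  # taken from the failed output above
runtime_only = "def reset_game():\n    global pipes\n    pipes.clear()\n"

for label, sample in [("well_formed", well_formed),
                      ("broken", broken),
                      ("runtime_only", runtime_only)]:
    print(label, "->", first_syntax_error(sample))
```

A check like this can flag unusable outputs when comparing runs with and without the sampler fix, but it is not a substitute for actually running the game.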

One might think this is only a problem with the Q4_K_M quantized version, and that the BF16 full-precision version should be fine. That is not the case. Even with the BF16 full-precision model, if you use a repetition penalty without applying the Unsloth team's --samplers "top_k;top_p;min_p;temperature;dry;typ_p;xtc" fix, generation fails in the same way.

<think> token not displayed?

Some users report that, because the <think> token is added by default in the chat template, some systems do not output the thinking process correctly. In that case, you need to edit the Jinja template manually. The original template is:

{%- if tools %} {{- '<|im_start|>system\n' }} {%- if messages[0]['role'] == 'system' %} {{- messages[0]['content'] }} {%- else %} {{- '' }} {%- endif %} {{- "\n\n# Tools\n\nYou may call one or more functions to assist with the user query.\n\nYou are provided with function signatures within <tools></tools> XML tags:\n<tools>" }} {%- for tool in tools %} {{- "\n" }} {{- tool | tojson }} {%- endfor %} {{- "\n</tools>\n\nFor each function call, return a json object with function name and arguments within <tool_call></tool_call> XML tags:\n<tool_call>\n{\"name\": <function-name>, \"arguments\": <args-json-object>}\n</tool_call><|im_end|>\n" }} {%- else %} {%- if messages[0]['role'] == 'system' %} {{- '<|im_start|>system\n' + messages[0]['content'] + '<|im_end|>\n' }} {%- endif %} {%- endif %} {%- for message in messages %} {%- if (message.role == "user") or (message.role == "system" and not loop.first) %} {{- '<|im_start|>' + message.role + '\n' + message.content + '<|im_end|>' + '\n' }} {%- elif message.role == "assistant" and not message.tool_calls %} {%- set content = message.content.split('</think>')[-1].lstrip('\n') %} {{- '<|im_start|>' + message.role + '\n' + content + '<|im_end|>' + '\n' }} {%- elif message.role == "assistant" %} {%- set content = message.content.split('</think>')[-1].lstrip('\n') %} {{- '<|im_start|>' + message.role }} {%- if message.content %} {{- '\n' + content }} {%- endif %} {%- for tool_call in message.tool_calls %} {%- if tool_call.function is defined %} {%- set tool_call = tool_call.function %} {%- endif %} {{- '\n<tool_call>\n{"name": "' }} {{- tool_call.name }} {{- '", "arguments": ' }} {{- tool_call.arguments | tojson }} {{- '}\n</tool_call>' }} {%- endfor %} {{- '<|im_end|>\n' }} {%- elif message.role == "tool" %} {%- if (loop.index0 == 0) or (messages[loop.index0 - 1].role != "tool") %} {{- '<|im_start|>user' }} {%- endif %} {{- '\n<tool_response>\n' }} {{- message.content }} {{- '\n</tool_response>' }} {%- if loop.last or 
(messages[loop.index0 + 1].role != "tool") %} {{- '<|im_end|>\n' }} {%- endif %} {%- endif %} {%- endfor %} {%- if add_generation_prompt %} {{- '<|im_start|>assistant\n<think>\n' }} {%- endif %}

The modified template removes the final <think>\n. With this change, the model has to add <think>\n itself during inference, which may not always succeed. The DeepSeek team has also modified all of its models to add a <think> token by default, forcing the model into reasoning mode.

Therefore, change {%- if add_generation_prompt %} {{- '<|im_start|>assistant\n<think>\n' }} {%- endif %} to {%- if add_generation_prompt %} {{- '<|im_start|>assistant\n' }} {%- endif %}, i.e. remove <think>\n.

Full Jinja template with the <think>\n section removed:

{%- if tools %} {{- '<|im_start|>system\n' }} {%- if messages[0]['role'] == 'system' %} {{- messages[0]['content'] }} {%- else %} {{- '' }} {%- endif %} {{- "\n\n# Tools\n\nYou may call one or more functions to assist with the user query.\n\nYou are provided with function signatures within <tools></tools> XML tags:\n<tools>" }} {%- for tool in tools %} {{- "\n" }} {{- tool | tojson }} {%- endfor %} {{- "\n</tools>\n\nFor each function call, return a json object with function name and arguments within <tool_call></tool_call> XML tags:\n<tool_call>\n{\"name\": <function-name>, \"arguments\": <args-json-object>}\n</tool_call><|im_end|>\n" }} {%- else %} {%- if messages[0]['role'] == 'system' %} {{- '<|im_start|>system\n' + messages[0]['content'] + '<|im_end|>\n' }} {%- endif %} {%- endif %} {%- for message in messages %} {%- if (message.role == "user") or (message.role == "system" and not loop.first) %} {{- '<|im_start|>' + message.role + '\n' + message.content + '<|im_end|>' + '\n' }} {%- elif message.role == "assistant" and not message.tool_calls %} {%- set content = message.content.split('</think>')[-1].lstrip('\n') %} {{- '<|im_start|>' + message.role + '\n' + content + '<|im_end|>' + '\n' }} {%- elif message.role == "assistant" %} {%- set content = message.content.split('</think>')[-1].lstrip('\n') %} {{- '<|im_start|>' + message.role }} {%- if message.content %} {{- '\n' + content }} {%- endif %} {%- for tool_call in message.tool_calls %} {%- if tool_call.function is defined %} {%- set tool_call = tool_call.function %} {%- endif %} {{- '\n<tool_call>\n{"name": "' }} {{- tool_call.name }} {{- '", "arguments": ' }} {{- tool_call.arguments | tojson }} {{- '}\n</tool_call>' }} {%- endfor %} {{- '<|im_end|>\n' }} {%- elif message.role == "tool" %} {%- if (loop.index0 == 0) or (messages[loop.index0 - 1].role != "tool") %} {{- '<|im_start|>user' }} {%- endif %} {{- '\n<tool_response>\n' }} {{- message.content }} {{- '\n</tool_response>' }} {%- if loop.last or 
(messages[loop.index0 + 1].role != "tool") %} {{- '<|im_end|>\n' }} {%- endif %} {%- endif %} {%- endfor %} {%- if add_generation_prompt %} {{- '<|im_start|>assistant\n' }} {%- endif %}
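If the template is stored as a string, the edit can also be applied with a plain text replacement. The sketch below is one possible way to do it, not an official tool: the helper name and the shortened template_tail are assumptions, and the raw strings keep the \n sequences as the literal characters that appear in the template.

```python
# Raw strings keep \n as the two literal characters found in the template.
OLD_PROMPT = r"{{- '<|im_start|>assistant\n<think>\n' }}"
NEW_PROMPT = r"{{- '<|im_start|>assistant\n' }}"


def drop_think_from_generation_prompt(template: str) -> str:
    """Remove the default <think> line from the generation prompt."""
    if OLD_PROMPT not in template:
        raise ValueError("generation prompt with <think> not found")
    return template.replace(OLD_PROMPT, NEW_PROMPT)


# Only the last part of the template is used here to keep the example short.
template_tail = (
    r"{%- if add_generation_prompt %} "
    r"{{- '<|im_start|>assistant\n<think>\n' }} {%- endif %}"
)
edited = drop_think_from_generation_prompt(template_tail)
print(edited)
```

Raising an error when the expected text is missing avoids silently leaving a template unchanged.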

Additional Notes


The Unsloth team initially hypothesized that the problem might stem from the following:

- The context length of QwQ may not be the native 128K, but 32K plus the YaRN extension. See, for example, the readme file at https://huggingface.co/Qwen/QwQ-32B:

{

...,

"rope_scaling": {

"factor": 4.0,

"original_max_position_embeddings": 32768,

"type": "yarn"

}

}

The Unsloth team tried overriding the YaRN settings in llama.cpp, but the problem persisted.

--override-kv qwen2.context_length=int:131072 \
--override-kv qwen2.rope.scaling.type=str:yarn \
--override-kv qwen2.rope.scaling.factor=float:4 \
--override-kv qwen2.rope.scaling.original_context_length=int:32768 \
--override-kv qwen2.rope.scaling.attn_factor=float:1.13862943649292 \

- The Unsloth team also suspected that the RMS LayerNorm epsilon value might be incorrect, and should perhaps be 1e-6 instead of 1e-5. For example, one linked configuration uses rms_norm_eps=1e-06, while another uses rms_norm_eps=1e-05. The Unsloth team also tried overriding this value, but the problem was still not solved:

--override-kv qwen2.attention.layer_norm_rms_epsilon=float:0.000001 \

- Thanks to @kalomaze, the Unsloth team also tested the tokenizer IDs between llama.cpp and Transformers to see if they matched. The results show that they do match, so the mismatch in tokenizer IDs is not the source of the problem.
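For the YaRN hypothesis in the first item, the numbers in the rope_scaling block and the context_length override are related by simple arithmetic: the original context length multiplied by the scaling factor. A small sketch, where the function name extended_context_length is an assumption:

```python
# Values copied from the rope_scaling block quoted in this article.
rope_scaling = {
    "factor": 4.0,
    "original_max_position_embeddings": 32768,
    "type": "yarn",
}


def extended_context_length(scaling: dict) -> int:
    """Original context length multiplied by the YaRN scaling factor."""
    return int(scaling["original_max_position_embeddings"] * scaling["factor"])


extended = extended_context_length(rope_scaling)
print(extended)  # 131072, the value passed to qwen2.context_length
```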

Here are the results of the Unsloth team's experiment:

- file_BF16_no_samplers.txt (61KB): BF16 full precision, no sampling fix applied

- file_BF16_yes_samplers.txt (55KB): BF16 full precision, sampling fix applied

- final_Q4_K_M_no_samplers.txt (71KB): Q4_K_M precision, no sampling fix applied

- final_Q4_K_M_yes_samplers.txt (65KB): Q4_K_M precision, sampling fix applied

Tokenizer Bug Fixes


- The Unsloth team also found some issues that specifically affect fine-tuning. The EOS token is correct, but a more logical choice for the PAD token would be "". The Unsloth team has updated the configuration in https://huggingface.co/unsloth/QwQ-32B/blob/main/tokenizer_config.json.

"eos_token": "<|im_end|>",

"pad_token": "<|endoftext|>",

Dynamic 4-bit Quantization


The Unsloth team also uploaded a dynamic 4-bit quantization model, which significantly improves model accuracy compared to plain 4-bit quantization! The figure below shows the error analysis of the QwQ model activation values and weights during the quantization process:

The Unsloth team has uploaded the dynamic 4-bit quantization model to: https://huggingface.co/unsloth/QwQ-32B-unsloth-bnb-4bit.

Since version 0.7.3 (February 20, 2025) https://github.com/vllm-project/vllm/releases/tag/v0.7.3, vLLM supports loading Unsloth dynamic 4-bit quantized models!

All GGUF format models can be found at https://huggingface.co/unsloth/QwQ-32B-GGUF!

© Copyright notes

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
