Thera: an open source tool for aliasing-free image enlargement at any scale

General Introduction

Thera is an open source image super-resolution tool developed by a team at ETH Zurich and the University of Zurich. It can enlarge low-resolution images by arbitrary factors, including integers such as 2x and non-integers such as 3.14x, without jagged edges or blurring. At the heart of Thera are neural heat fields combined with a built-in physical observation model that mimics the real imaging process, producing natural-looking image detail. The tool is freely available on GitHub, where anyone can download the code or use the pre-trained models.
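Arbitrary scales are possible because a neural field is a continuous representation that can be sampled at any point, not just on the input pixel grid. The sketch below is an illustration under our own conventions, not Thera's code: it computes the output size for a non-integer factor and the output pixel centres (in input-pixel units) at which such a continuous representation would be queried. The rounding rule, the pixel-centre convention, and the names `output_shape` and `query_coords` are assumptions.

```python
import numpy as np


def output_shape(h: int, w: int, scale: float) -> tuple[int, int]:
    """Target size for a (possibly non-integer) scale factor.

    Assumption: sizes are rounded to the nearest integer; Thera's own rule may differ.
    """
    return round(h * scale), round(w * scale)


def query_coords(h: int, w: int, scale: float) -> np.ndarray:
    """Centres of the output pixels, expressed in input-pixel units.

    A continuous image representation can be evaluated at these points,
    whatever the scale factor is.
    """
    out_h, out_w = output_shape(h, w, scale)
    # Output pixel i covers [i, i + 1) * h / out_h in input units; take its centre.
    ys = (np.arange(out_h) + 0.5) * h / out_h
    xs = (np.arange(out_w) + 0.5) * w / out_w
    yy, xx = np.meshgrid(ys, xs, indexing="ij")
    return np.stack([yy, xx], axis=-1)  # shape (out_h, out_w, 2)
```

For a 100×100 input at 3.14x, `query_coords(100, 100, 3.14)` yields a 314×314 grid of sample positions strictly inside the input's extent. Using the actual ratio `h / out_h` rather than `1 / scale` keeps the grid aligned with the image borders after rounding.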

Demo address: https://huggingface.co/spaces/prs-eth/thera

Function List

- Support arbitrary scale magnification: you can freely set the magnification, not limited to integers.

- Aliasing-free results: neural heat fields suppress jagged edges (aliasing) and distortion during upscaling.

- Built-in physical observation model: simulates the real imaging process to make the image look more natural.

- Open source: users can modify the code and adapt it to their needs.

- Pre-trained models: includes multiple variants of both the EDSR and RDN backbone networks.

- Platform requirements: Python 3.10 on Linux with an NVIDIA GPU.

- Local demo support: upload images and process them in real time through the Gradio interface.

Using Help

Using Thera involves two parts: installation and running. The detailed steps below will help you get started quickly.

Installation process

Thera requires Linux, Python 3.10 and an NVIDIA GPU. The installation steps are as follows:

- Create the environment

Create a Python 3.10 environment with Conda and activate it:

conda create -n thera python=3.10

conda activate thera

- Download the code

Clone the Thera project from GitHub:

git clone https://github.com/prs-eth/thera.git

cd thera

- Install dependencies

Install the required libraries with pip:

pip install --upgrade pip

pip install -r requirements.txt

- Download a pre-trained model

Thera offers several pre-trained models, such as thera-rdn-pro.pkl, which you can download from Hugging Face or Google Drive. The links are below:

- EDSR Air: Hugging Face

- RDN Pro: Hugging Face

After downloading, place the model file in the thera folder.

Once installation is complete, Thera is ready to run. If you run into problems, run python run_eval.py -h to view the help.
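Before running anything, it can help to confirm the requirements stated above (Linux, Python 3.10, an NVIDIA GPU). The helper below is a hypothetical convenience script, not part of the Thera repository. It assumes Thera runs on JAX, as the JAX/XLA flags in the debugging section suggest, and treats any JAX device on the "gpu" platform as a visible NVIDIA GPU.

```python
import importlib.util
import platform
import sys


def check_environment() -> dict[str, bool]:
    """Report whether this machine meets Thera's stated requirements."""
    results = {
        "linux": platform.system() == "Linux",
        "python_3_10": sys.version_info[:2] == (3, 10),
        "jax_installed": importlib.util.find_spec("jax") is not None,
        "gpu_visible": False,
    }
    if results["jax_installed"]:
        try:
            import jax

            # CUDA devices report the platform name "gpu"; CPU-only setups report "cpu".
            results["gpu_visible"] = any(d.platform == "gpu" for d in jax.devices())
        except Exception:  # a broken install or failed backend start counts as "no GPU"
            pass
    return results


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name:15} {'OK' if ok else 'MISSING'}")
```

Run it inside the activated thera environment; any MISSING line points to the requirement to fix first.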

Workflow

Thera's main function is image enlargement. The steps are as follows:

- Prepare the input image

Place the image to be enlarged in a folder, for example data/test_images. PNG, JPEG and other formats are supported.

- Run super-resolution

Enter commands in the terminal to process the image:

./super_resolve.py input.png output.png --scale 3.14 --checkpoint thera-rdn-pro.pkl

Here input.png is the input image and output.png is the output image; --scale sets the magnification (e.g. 3.14), and --checkpoint specifies the path to the pre-trained model.

- Batch processing

To process multiple images, use run_eval.py:

python run_eval.py --checkpoint thera-rdn-pro.pkl --data-dir data --eval-sets test_images

The output is saved in the outputs folder.
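Alternatively, to enlarge every image in an arbitrary folder with the super_resolve.py command shown above, a small wrapper can loop over the files. This is a hypothetical sketch: it reuses only the arguments documented above (input path, output path, --scale, --checkpoint), calls the script through the current Python interpreter (equivalent to ./super_resolve.py, assuming it is a Python script), and must be run from the thera folder. The function names, the accepted extensions, and the output naming scheme are our own choices.

```python
import subprocess
import sys
from pathlib import Path

# Extensions to pick up; an assumption, adjust to the formats you use.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def build_command(src: Path, dst: Path, scale: float, checkpoint: str) -> list[str]:
    """Assemble the super_resolve.py call for a single image."""
    return [sys.executable, "super_resolve.py", str(src), str(dst),
            "--scale", str(scale), "--checkpoint", checkpoint]


def upscale_folder(in_dir: str, out_dir: str, scale: float,
                   checkpoint: str = "thera-rdn-pro.pkl",
                   dry_run: bool = False) -> list[list[str]]:
    """Run super_resolve.py on every image in in_dir (call from the thera folder)."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    commands = []
    for src in sorted(Path(in_dir).iterdir()):
        if src.suffix.lower() not in IMAGE_SUFFIXES:
            continue  # skip non-image files
        # Keep the input's extension and tag the scale, e.g. cat.png -> cat_3.14x.png
        dst = out / f"{src.stem}_{scale}x{src.suffix}"
        cmd = build_command(src, dst, scale, checkpoint)
        commands.append(cmd)
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop at the first failing image
    return commands
```

For example, `upscale_folder("data/test_images", "outputs/x3.14", 3.14)` enlarges each image in turn; with `dry_run=True` it only returns the commands it would run, which is a quick way to check paths first.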

Featured Function Operation

Thera's highlights are arbitrary-scale magnification and aliasing-free output. They are described in detail below:

- Zoom in at any scale

You can set any magnification, such as --scale 2.5 or --scale 3.14. This is more flexible than traditional tools, which only offer a fixed set of magnifications. For example, to zoom to 3.14x, the command is:

./super_resolve.py input.png output.png --scale 3.14 --checkpoint thera-rdn-pro.pkl

- No jagged edges

Thera handles image edges with neural heat fields. No additional settings are required: enlargement automatically preserves detail and avoids jagged edges. For example, when enlarging an image of text, the letter edges stay sharp.

- Physical observation model

This feature is built into the model and simulates the real imaging process. You don't need to adjust any parameters; Thera automatically takes it into account to make the output more realistic.
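The edge handling described above comes from neural heat fields. The 1-D sketch below illustrates the underlying idea as we understand it and is not Thera's code. If a signal is a sum of sinusoids, evolving it under the heat equation u_t = κ·u_xx for a time t damps each component by exp(-κω²t), which is the same as a Gaussian blur with variance 2κt. Choosing t from the output pixel size removes frequencies too high to be represented at that scale, which is what prevents aliasing. The function names, the diffusivity κ (`kappa`), and the rule σ = c / scale are hypothetical choices for illustration.

```python
import numpy as np


def heat_field_1d(x, amplitudes, freqs, phases, t, kappa=1.0):
    """Evaluate a sum of sinusoids after diffusing it for time t.

    Under u_t = kappa * u_xx, the component sin(w*x + p) decays by
    exp(-kappa * w**2 * t), so high frequencies vanish first.
    """
    damping = np.exp(-kappa * freqs**2 * t)  # one factor per component
    # np.outer gives shape (len(x), n_components); sum over components.
    return (amplitudes * damping * np.sin(np.outer(x, freqs) + phases)).sum(axis=1)


def time_for_scale(scale, kappa=1.0, c=0.5):
    """Pick a diffusion time from the output pixel size 1/scale.

    A Gaussian blur with std sigma equals heat diffusion for t = sigma**2 / (2*kappa).
    sigma = c / scale is an illustrative assumption, not Thera's setting.
    """
    sigma = c / scale
    return sigma**2 / (2 * kappa)
```

Larger scales mean smaller output pixels, so `time_for_scale` returns a shorter diffusion time and less detail is filtered out; smaller scales blur more strongly before sampling.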

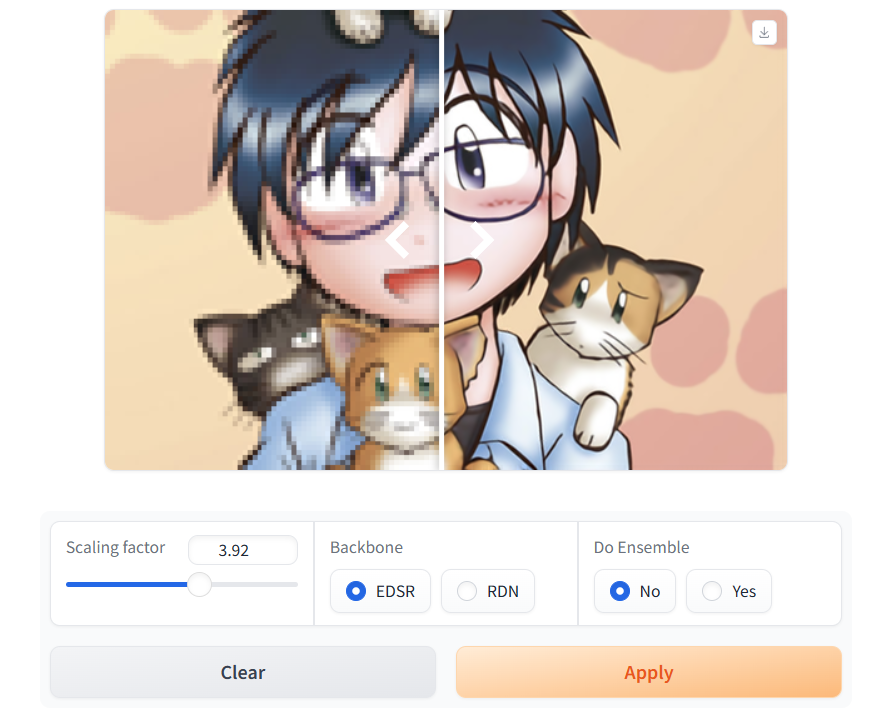

Local demo version

For a more visual experience with Thera, run the Gradio demo:

- Clone the demo code

git clone https://huggingface.co/spaces/prs-eth/thera thera-demo

cd thera-demo

- Install dependencies

pip install -r requirements.txt

- Start the demo

python app.py

Open your browser and visit http://localhost:7860. You can upload an image on the webpage, adjust the magnification and see the effect in real time.

Debugging Recommendations

If you run into memory or performance problems, or need to debug, the following JAX/XLA environment variables can help:

- Disable GPU memory preallocation:

XLA_PYTHON_CLIENT_PREALLOCATE=false

- Disable JIT compilation (useful for debugging, but slower):

JAX_DISABLE_JIT=1
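The same flags can be set from Python. According to the JAX documentation, JAX preallocates 75% of the total GPU memory when it runs its first operation; with preallocation disabled it allocates memory as needed instead. JAX reads both variables when it initialises, so a script should set them before importing jax. The helper name `configure_jax_env` is hypothetical.

```python
import os


def configure_jax_env(disable_preallocation: bool = True, debug: bool = False) -> None:
    """Set the debugging flags above; call this before the first `import jax`."""
    if disable_preallocation:
        # Allocate GPU memory on demand instead of reserving a large block up front.
        os.environ["XLA_PYTHON_CLIENT_PREALLOCATE"] = "false"
    if debug:
        # Execute operations eagerly without JIT compilation: much slower, easier to debug.
        os.environ["JAX_DISABLE_JIT"] = "1"


configure_jax_env(debug=True)
# import jax  # import JAX only after the environment is configured
```

On the command line, prefix the command instead, e.g. XLA_PYTHON_CLIENT_PREALLOCATE=false ./super_resolve.py input.png output.png --scale 3.14 --checkpoint thera-rdn-pro.pkl.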

With these steps, you can process any image with Thera. It's easy to use and gives excellent results.

Application Scenarios

- Remote Sensing Image Processing

Thera can enlarge images taken by satellites or drones. Researchers can use it to examine terrain details, for example when monitoring changes in forest cover.

- Medical Image Enhancement

Thera can increase the resolution of X-ray or MRI images, which may help doctors see subtle lesions more clearly and work more efficiently.

- Digital Art Restoration

Artists can use Thera to zoom in on low-resolution works or old photographs. Zooming in on a blurry sketch, for example, results in more detail.

QA

- Does Thera support Windows?

Currently only Linux is supported, because Thera relies on NVIDIA GPUs and a specific software environment. Windows users can run it in a virtual machine.

- Is there a limit to the magnification?

There is no fixed upper limit. However, at very high magnifications (e.g. 10x or more), quality may degrade because the original image contains too little information.

- When will the training code be released?

According to the authors, the training code will be released soon; no date has been set. You can follow the GitHub page for updates.
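The magnification limit in the answer above can be made concrete by counting pixels: an h×w input enlarged by a factor s has about s²·h·w output pixels, so the input supplies only about 1/s² as many samples as the output contains. The helper below is a hypothetical illustration that assumes output sizes are rounded to the nearest pixel.

```python
def observed_fraction(h: int, w: int, scale: float) -> float:
    """Ratio of input pixels to output pixels for a given enlargement factor."""
    out_h, out_w = round(h * scale), round(w * scale)  # assumed rounding rule
    return (h * w) / (out_h * out_w)


for s in (2, 4, 10):
    print(f"{s}x: {observed_fraction(100, 100, s):.2%}")
# 2x: 25.00%
# 4x: 6.25%
# 10x: 1.00%
```

At 10x there is one input pixel for every 100 output pixels, so almost all fine detail has to be inferred by the model rather than recovered from the input.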

© Copyright notes

Article copyright: AI Sharing Circle. All rights reserved; please do not reproduce without permission.
