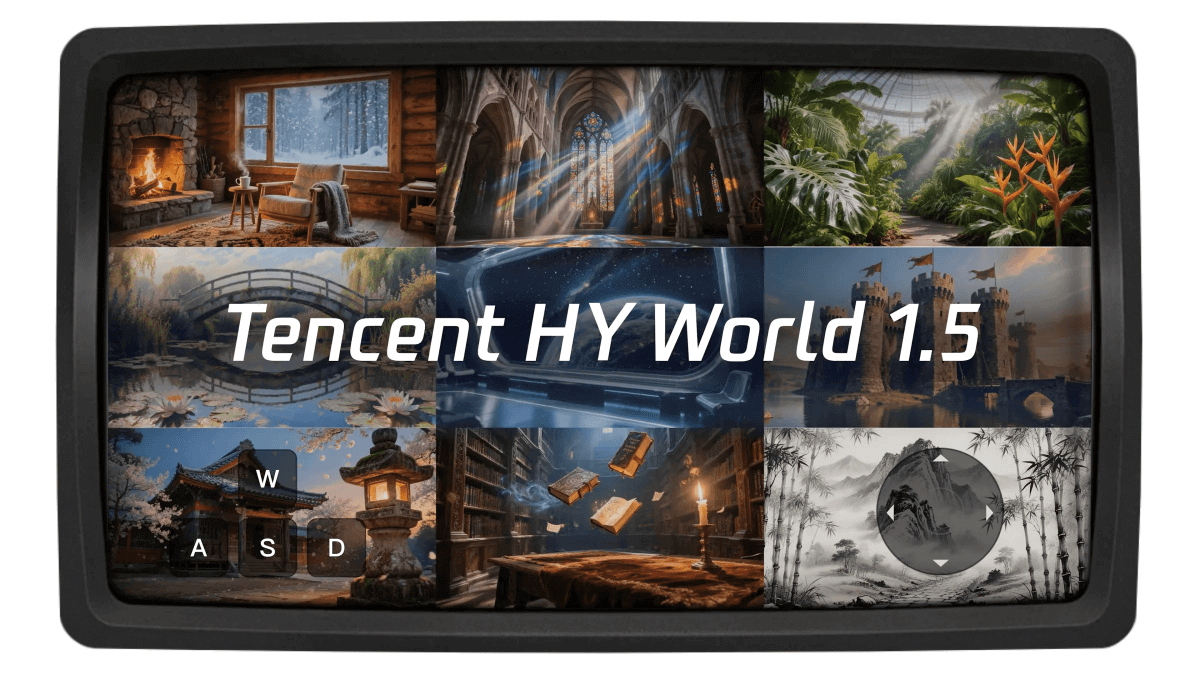

Hunyuan World Model 1.5: Tencent Hunyuan's Open-Source Real-Time World Model Framework

What is Hunyuan World Model 1.5?

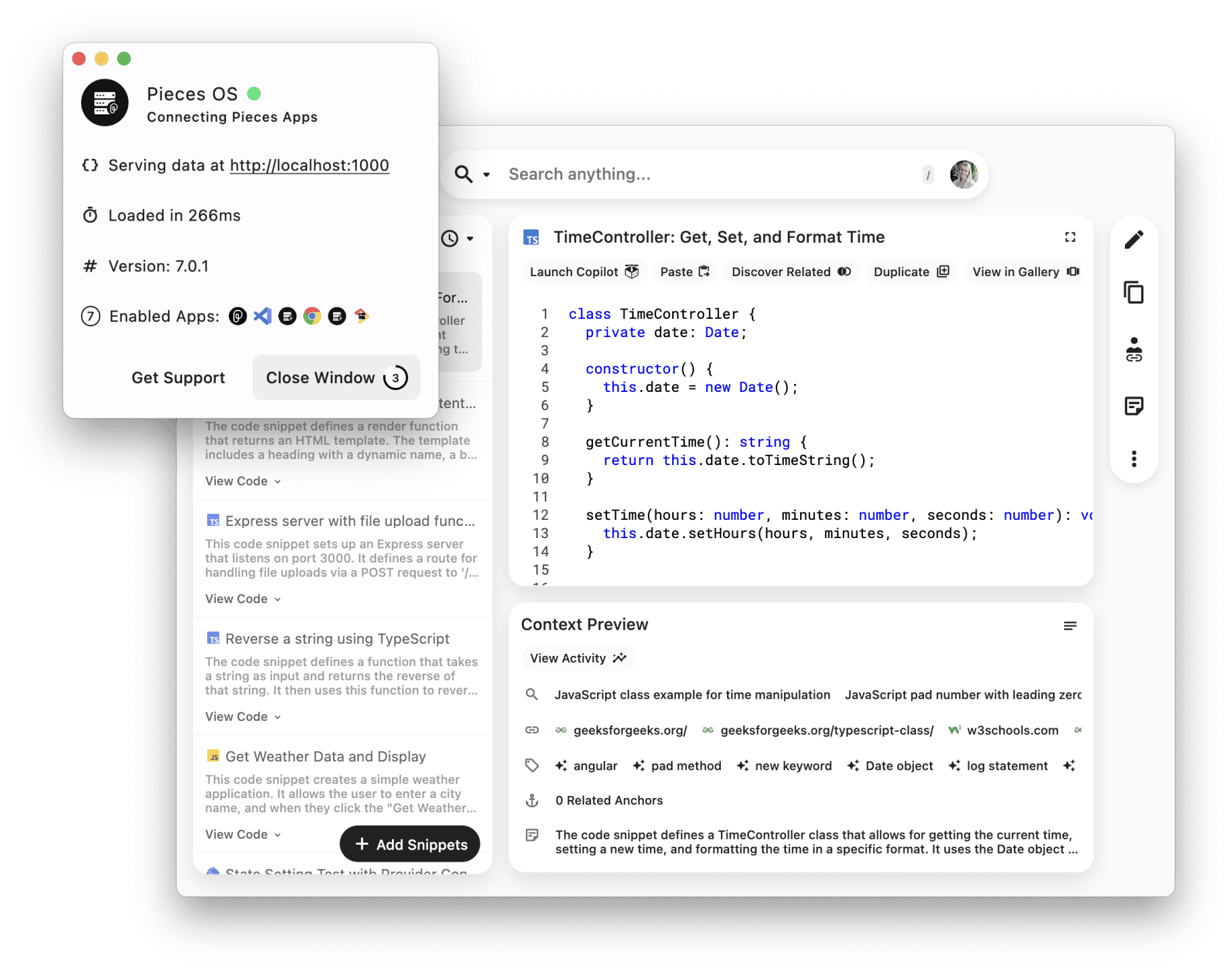

Hunyuan World Model 1.5 (Tencent HY-WorldPlay) is a real-time world model framework open-sourced by Tencent, which describes it as the industry's first of its kind. It covers the full pipeline of data, training, and streaming inference deployment. At its core is WorldPlay, an autoregressive diffusion model trained on a next-frames-prediction task to address the twin problems of real-time generation and geometric consistency. It has three headline capabilities. Real-time interactive generation: using Tencent's own Context Forcing distillation scheme together with streaming inference optimizations, it generates 720P HD video at 24 frames per second. Long-range 3D consistency: a reconstructed memory mechanism keeps minute-long content geometrically consistent. Diverse interactive experiences: it works across scenes of different styles and in both first- and third-person perspectives.
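To put the quoted figures in perspective, the arithmetic behind "720P at 24 frames per second" and "minute-level" generation can be worked out in a few lines. This is only a back-of-the-envelope sketch, not part of the HY-WorldPlay codebase: the `StreamBudget` class is a hypothetical helper, and the 1280×720 resolution is an assumption about what "720P" means here, since the article does not state the exact frame size.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StreamBudget:
    """Hypothetical helper for frame-rate arithmetic (not a HY-WorldPlay API)."""

    fps: int     # frames per second quoted in the article
    width: int   # assumed frame width in pixels
    height: int  # assumed frame height in pixels

    @property
    def frame_budget_ms(self) -> float:
        # Average wall-clock time available per frame to keep up with real time.
        return 1000.0 / self.fps

    @property
    def pixels_per_second(self) -> int:
        # Raw number of pixels the model must produce each second.
        return self.fps * self.width * self.height

    def frames_for(self, seconds: float) -> int:
        # Number of frames that must stay consistent over a clip of this length.
        return round(self.fps * seconds)


# Assumption: "720P" is taken to mean 1280x720.
budget = StreamBudget(fps=24, width=1280, height=720)

print(f"{budget.frame_budget_ms:.2f} ms per frame")      # 41.67 ms per frame
print(budget.frames_for(60), "frames in one minute")     # 1440 frames in one minute
print(budget.pixels_per_second, "pixels per second")     # 22118400 pixels per second
```

In other words, sustaining 24 frames per second leaves roughly 42 ms per frame on average, and a one-minute clip spans 1,440 frames over which geometry has to remain consistent.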

Key features of Hunyuan World Model 1.5

- Real-time interactive generation: Using Tencent's own Context Forcing (context-aligned) distillation scheme and streaming inference optimizations, the model generates 720P HD video streams in real time at 24 frames per second, so users can steer the viewpoint and change the environment without noticeable lag.

- Long-range 3D consistency: A reconstructed memory mechanism lets the model generate video up to minutes long while maintaining a high degree of geometric consistency, laying the groundwork for a high-quality 3D spatial simulator.

- Diverse interactive experiences: Users can generate their own interactive 3D world from a text description or a single image. Using a keyboard, mouse, or gamepad, they can move and turn the viewpoint as freely as a game character and explore the AI-generated environment immersively.

- Open-source full-pipeline framework: Described as the first open-source training system for real-time world models, it covers the full pipeline of data, training, and inference deployment, giving developers a complete development and deployment workflow.

- High-quality data acquisition: An automated 3D scene rendering pipeline built by the Hunyuan team produces large volumes of high-quality, realistic rendered data, helping to unlock the potential of the core algorithms.

Core advantages of Hunyuan World Model 1.5

- Real-time and smooth: Generates 720P HD video streams in real time at 24 frames per second, keeping user interaction smooth and responsive.

- Long-term consistency: Supports minute-long 3D content generation and maintains a high degree of geometric consistency, making it suitable for long-duration generation of complex scenes.

- Diverse interactions: Supports multiple input types (text, images) and perspectives (first-person, third-person) for an immersive 3D exploration experience.

- Open-source, full-pipeline support: Described as the first complete open-source training and deployment system of its kind, covering data, training, and inference, which gives developers a solid technical foundation.

- Driven by high-quality data: An automated 3D scene rendering pipeline supplies high-quality data, which improves the model's generation quality and generalization.

- Innovative training framework: Context Forcing (context-aligned) distillation and a reinforcement learning framework with 3D rewards improve the visual quality and geometric consistency of the generated content.

What is the official website for Hunyuan World Model 1.5?

- Project website: https://3d-models.hunyuan.tencent.com/world/

- GitHub repository: https://github.com/Tencent-Hunyuan/HY-WorldPlay

- Hugging Face model repository: https://huggingface.co/tencent/HY-WorldPlay

- Technical report: https://3d-models.hunyuan.tencent.com/world/world1_5/HYWorld_1.5_Tech_Report.pdf

Who is Hunyuan World Model 1.5 for?

- Game developers: Can use it to generate game scenes and content quickly, cutting development costs and improving efficiency. It is especially suited to 3D game development that needs real-time interaction and long-duration generation.

- VFX teams: Can generate 3D scenes and animations in real time, giving film and TV effects production a more efficient content-generation tool and speeding up the creative process.

- Virtual reality (VR) and augmented reality (AR) developers: Its immersive 3D interaction can support VR/AR application development, helping create more realistic and fluid virtual environments for users.

- AI researchers: The open-source full-pipeline framework and high-quality data give researchers rich material for further research and innovation in related fields.

- Content creators: Video bloggers, animators, and others can quickly generate creative content from simple text or image input, making content creation more varied and efficient.

- Educators and students: Can use it to create immersive teaching scenarios that make learning more engaging and interactive, and that give students a space to practice and explore.

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
