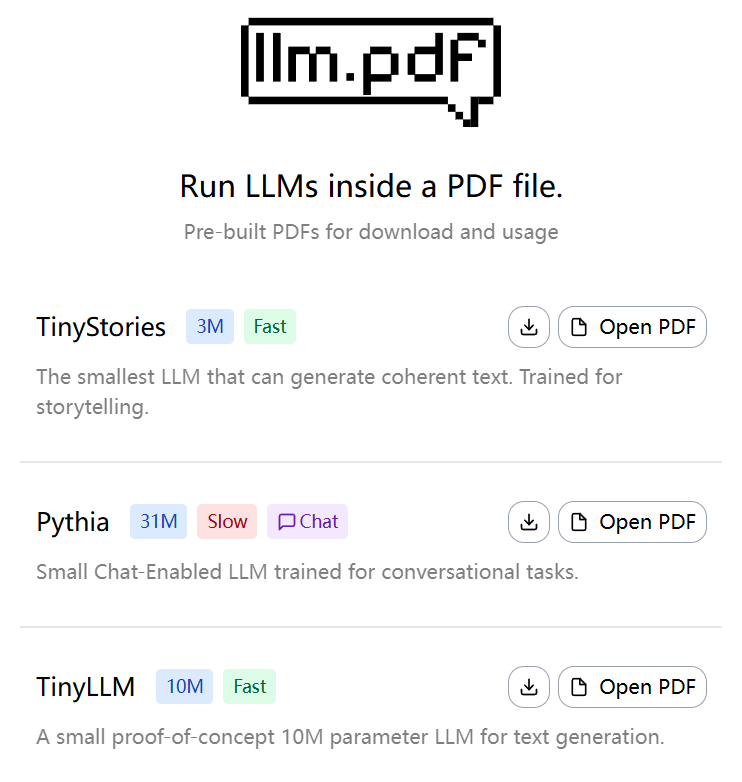

llm.pdf: an experimental project to run large language models inside a PDF file

General Introduction

llm.pdf is an open-source project that lets users run Large Language Models (LLMs) directly inside PDF files. Developed by EvanZhouDev and hosted on GitHub, the project demonstrates an innovative approach: by Em...
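The introduction is cut off before it describes the mechanism, so the following is background only. The PDF format allows a document to carry JavaScript: for example, a catalog `/OpenAction` can point to an action of type `/JavaScript`, and viewers that support PDF JavaScript may run it when the file opens. The minimal Python sketch below uses only the standard library to hand-assemble such a file. It illustrates that general PDF feature under our own assumptions. It is not llm.pdf's code, and the function names `escape_pdf_string` and `build_pdf_with_js` are hypothetical.

```python
def escape_pdf_string(text: str) -> str:
    """Escape backslashes and parentheses for a PDF literal string (...)."""
    return text.replace("\\", "\\\\").replace("(", "\\(").replace(")", "\\)")


def build_pdf_with_js(js_code: str) -> bytes:
    """Build a one-page PDF whose /OpenAction runs js_code.

    Illustrative sketch only (hypothetical helper, not llm.pdf's code):
    whether the script actually runs depends on the viewer's JavaScript support.
    """
    # Objects 1..4: catalog, page tree, a blank Letter-size page, JavaScript action.
    objects = [
        "<< /Type /Catalog /Pages 2 0 R /OpenAction 4 0 R >>",
        "<< /Type /Pages /Kids [3 0 R] /Count 1 >>",
        "<< /Type /Page /Parent 2 0 R /MediaBox [0 0 612 792] >>",
        f"<< /Type /Action /S /JavaScript /JS ({escape_pdf_string(js_code)}) >>",
    ]

    out = bytearray(b"%PDF-1.7\n")
    offsets = []  # byte offset of each object, needed for the xref table
    for number, body in enumerate(objects, start=1):
        offsets.append(len(out))
        out += f"{number} 0 obj\n{body}\nendobj\n".encode("latin-1")

    # Cross-reference table: every entry is exactly 20 bytes long.
    xref_start = len(out)
    out += f"xref\n0 {len(objects) + 1}\n".encode("ascii")
    out += b"0000000000 65535 f \n"
    for offset in offsets:
        out += f"{offset:010d} 00000 n \n".encode("ascii")

    # Trailer points at the catalog; startxref points at the xref table.
    out += (
        f"trailer\n<< /Size {len(objects) + 1} /Root 1 0 R >>\n"
        f"startxref\n{xref_start}\n%%EOF\n"
    ).encode("ascii")
    return bytes(out)
```

To try it, write the result of `build_pdf_with_js('app.alert("Hello from inside a PDF");')` to a `.pdf` file and open it in a viewer that executes PDF JavaScript. Many viewers disable or sandbox scripting, so nothing may happen. Building the file by hand keeps the example dependency-free, but it means the xref offsets must be computed exactly, which is why the code tracks them as bytes are appended.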