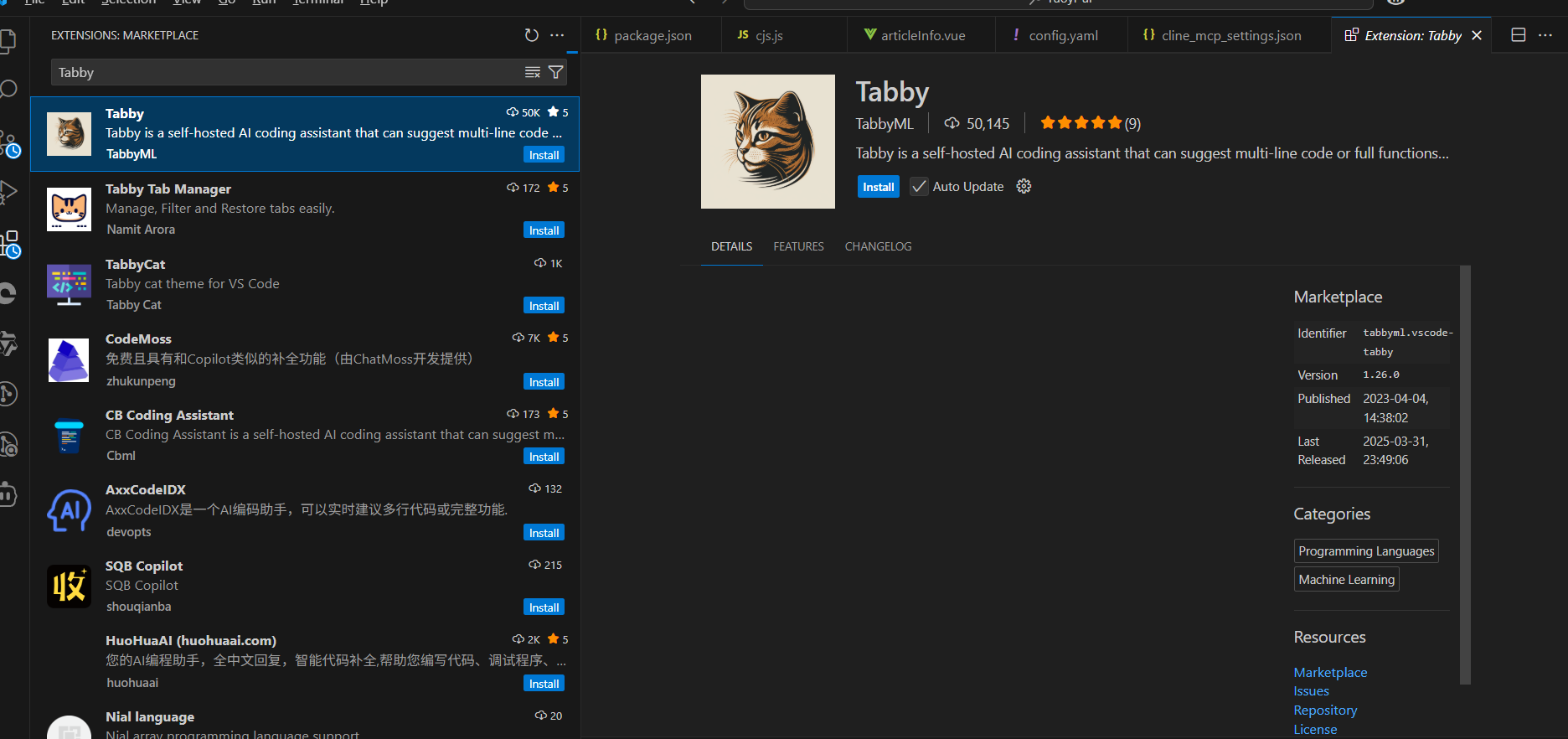

Tabby: a native self-hosted AI programming assistant that integrates into VSCode

General Introduction

Tabby is an open-source AI programming assistant developed by the TabbyML team that users can deploy themselves, locally or on a server. It provides functionality similar to GitHub Copilot, supports multiple programming languages, and can be integrated into development tools such as VSCode and Vim. According to official data, as of April 2025 the latest version, v0.24.0, supports LDAP authentication and background task notifications, and its features continue to be refined. It is well suited to privacy-conscious developers and teams.

Function List

- Code Completion: Suggest single or multiple lines of code in real time when entering code to improve efficiency.

- Intelligent Chat Assistant: Answer programming questions or generate code snippets through dialog.

- Self-hosted deployment: Runs locally or in the cloud, protects data privacy, supports customization.

- Multi-model support: Open source models such as StarCoder-1B, Qwen2-1.5B can be used.

- Context awareness: Understands the surrounding code and provides precise recommendations.

- IDE Integration: Supports VSCode, Vim, IntelliJ and other major editors.

- Code Browser: Browse project code with search and navigation support.

- Usage statistics: View usage data for Code Completion and Chat.

- LDAP Authentication: Enterprise users can manage permissions via LDAP (new in v0.24.0).

Usage Guide

Installation process

Tabby must be deployed by the user. The steps below are based on Docker.

- Preparing the environment

- Ensure that Docker is installed (version 20.10 or above).

- If you are using a GPU, you need to install the NVIDIA driver and CUDA Toolkit (11.8 or 12.x recommended).

- Reserve at least 10GB of storage space for models and data.
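The storage requirement above can be checked before pulling anything. The following is a minimal sketch: the helper name `has_enough_space` is hypothetical and not part of Tabby, and the 10GB default is simply the figure quoted in this article.

```python
import shutil
from pathlib import Path


def has_enough_space(path: Path, required_gb: float = 10.0) -> bool:
    """Return True if the filesystem holding `path` has at least `required_gb` GiB free.

    Hypothetical pre-flight helper; `path` must already exist.
    """
    free_bytes = shutil.disk_usage(path).free
    return free_bytes >= required_gb * 1024**3


if __name__ == "__main__":
    # Assumption: models and data end up on the same filesystem as the home directory.
    print(has_enough_space(Path.home()))
```

Checking the home directory matches the `$HOME/.tabby` data folder used later in the start command.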

- Pulling the image

Run the following command in the terminal to get the latest Tabby image:

docker pull tabbyml/tabby

- Start the server

Use the following command to start, listening on port 8080 by default:

docker run -it --gpus all -p 8080:8080 -v $HOME/.tabby:/data tabbyml/tabby serve --model TabbyML/StarCoder-1B --device cuda --chat-model Qwen2-1.5B-Instruct

--gpus all: Enables GPU acceleration; remove this flag if no GPU is available.

-p 8080:8080: Maps the container port to the local machine.

-v $HOME/.tabby:/data: Stores data in the local .tabby folder.

--model: Specifies the code-completion model, default StarCoder-1B.

--chat-model: Specifies the chat model, default Qwen2-1.5B-Instruct.

--device cuda: Uses the GPU; if there is no GPU, change it to cpu.
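The GPU and CPU variants of the start command differ only in two flags. The sketch below makes that difference explicit. The helper `build_tabby_command` is hypothetical and not part of Tabby; it only assembles the flags described in this section and does not run Docker.

```python
import shlex
from pathlib import Path
from typing import List


def build_tabby_command(use_gpu: bool = True) -> List[str]:
    """Assemble the `docker run` arguments described in this section.

    Hypothetical helper for illustration; it only builds the argument list.
    """
    data_dir = Path.home() / ".tabby"  # same folder as $HOME/.tabby
    cmd = ["docker", "run", "-it"]
    if use_gpu:
        # Expose all GPUs to the container; omitted on CPU-only machines.
        cmd += ["--gpus", "all"]
    cmd += [
        "-p", "8080:8080",            # map the container port to the host
        "-v", f"{data_dir}:/data",    # keep models and data on the host
        "tabbyml/tabby", "serve",
        "--model", "TabbyML/StarCoder-1B",
        "--device", "cuda" if use_gpu else "cpu",
        "--chat-model", "Qwen2-1.5B-Instruct",
    ]
    return cmd


if __name__ == "__main__":
    # Print the CPU-only variant as a copy-pasteable shell command.
    print(shlex.join(build_tabby_command(use_gpu=False)))
```

Building the command as a list rather than a string avoids quoting problems if it is later passed to `subprocess.run`.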

- Verifying the server

After startup, open http://localhost:8080. If the welcome page is displayed or the log shows "Listening at 0.0.0.0:8080", the server started successfully.

- Installing IDE extensions

- VSCode: Search for "Tabby" in the extension marketplace, install it, and set the server address to http://localhost:8080.

- Vim: Install TabbyML/vim-tabby via a plug-in manager, then run npx tabby-agent --stdio to connect.

- IntelliJ: Search for "Tabby" in the JetBrains Marketplace, install it, and configure the server address.
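Before pointing an IDE extension at the server, it can help to confirm that something is answering at the address. The sketch below only tests whether an HTTP response comes back from the URL given in this article. The function name `is_server_up` is hypothetical, and the check does not use any Tabby-specific endpoint.

```python
import urllib.error
import urllib.request


def is_server_up(url: str = "http://localhost:8080", timeout: float = 3.0) -> bool:
    """Return True if anything answers HTTP at `url`, False otherwise."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered, just not with a success status.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, and similar.
        return False
```

Calling `is_server_up()` with no arguments checks the default address used throughout this guide. A `True` result only shows that a web server is listening there, not that the models have finished loading.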

Main Functions

Code Completion

- Procedure: Enter code in the IDE and Tabby will pop up a suggestion. Press Tab to accept it or Esc to dismiss it.

- Featured functions: Context awareness is supported. For example, typing def sort_list may produce the suggestion:

def sort_list(lst):
    return sorted(lst)

- Adjusting parameters: In config.toml, modify max_input_length (default 1024) and max_output_tokens (default 512).

Intelligent Chat Assistant

- How to open: In VSCode, click the Tabby icon in the sidebar to open the chat panel.

- Usage: Enter a question such as "Write an array de-duplication function in JavaScript", and Tabby will return:

function uniqueArray(arr) {
  return [...new Set(arr)];
}

You can also @-mention a file to add it as context.

- Running commands: Enter a command such as dir to view the simulated results (enhanced in v0.23.0).

Code Browser

- Access path: Click "Code Browser" in the web interface.

- Workflow: Enter a keyword (e.g. class) to search the code. Results can be filtered by file type; click a result to jump to its location.

- Updated features: v0.23.0 optimized the browsing experience and added more navigation options.

Supplementary Notes

- Initialization: Downloading the model may take 5-10 minutes, depending on network speed.

- Performance optimization: Use --parallelism 4 to increase concurrent processing capacity (requires high-end hardware).

- Community support: Get help via Slack (links.tabbyml.com/join-slack).

Application Scenarios

- Personal development

A front-end developer deploys Tabby locally and uses it to complete React component code, saving time on repetitive typing.

- Enterprise collaboration

A company deploys Tabby on an internal server with LDAP authentication, and team members use the chat feature to resolve bugs quickly.

- Education and training

Students learning C++ ask Tabby "What's a pointer?" in chat and get code samples and explanations.

QA

- What models does Tabby support?

StarCoder-1B (code completion) and Qwen2-1.5B-Instruct (chat) are used by default, and users can substitute other open source models.

- What are the advantages of self-hosting?

Data is not uploaded to the cloud and stays entirely local, which suits privacy-sensitive scenarios, and the configuration can be adjusted freely.

- What are the minimum hardware requirements?

Running on CPU requires 8GB of RAM; running on GPU requires 16GB of RAM and 4GB of video memory.
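The minimums above can be turned into a small decision rule for choosing the --device value. This is a sketch under the figures quoted in this article only. The `Machine` type and `supported_device` function are hypothetical names, and actual requirements depend on the models chosen.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Machine:
    ram_gb: float
    vram_gb: float = 0.0  # 0 means no usable GPU


def supported_device(machine: Machine) -> Optional[str]:
    """Pick a --device value from the article's stated minimums.

    Returns "cuda", "cpu", or None when neither minimum is met.
    """
    if machine.ram_gb >= 16 and machine.vram_gb >= 4:
        return "cuda"
    if machine.ram_gb >= 8:
        return "cpu"
    return None


if __name__ == "__main__":
    print(supported_device(Machine(ram_gb=16, vram_gb=4)))
    print(supported_device(Machine(ram_gb=8)))
```

A machine with plenty of RAM but less than 4GB of video memory falls back to CPU mode under this rule.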

© Copyright notes

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
