Step-Audio: a multimodal voice interaction framework that recognizes speech and converses in cloned voices, among other features

General Introduction

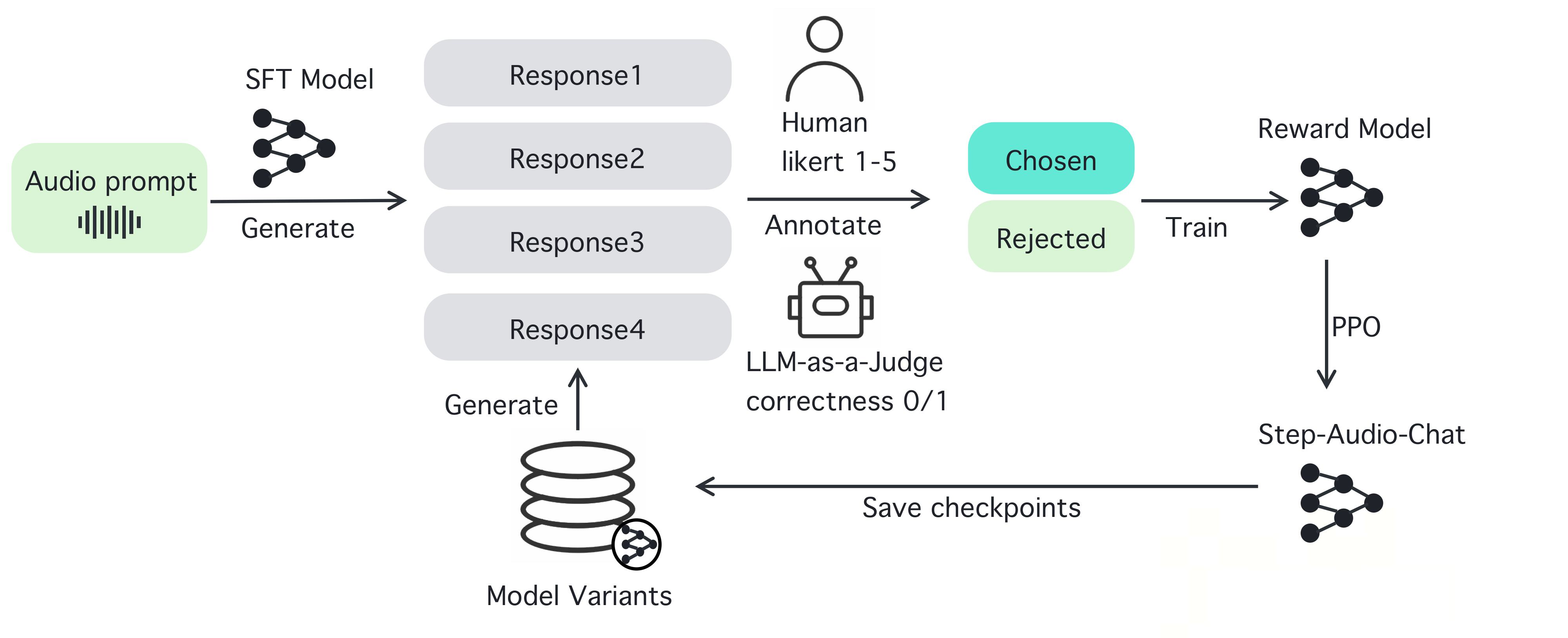

Step-Audio is an open-source intelligent voice interaction framework designed to provide out-of-the-box speech understanding and generation for production environments. It supports multilingual dialog (e.g., Chinese, English, Japanese), emotional speech (e.g., happy, sad), regional dialects (e.g., Cantonese, Sichuanese), and adjustable speech rate and prosodic styles (e.g., rap). Step-Audio performs speech recognition, semantic understanding, dialog, voice cloning, and speech synthesis through a 130B-parameter multimodal model. Its generative data engine removes traditional TTS's reliance on manually collected data: it generates high-quality audio, which was used to train the resource-efficient Step-Audio-TTS-3B model that is also released.

Function List

- Real-time speech recognition (ASR): Converts speech to text with high accuracy.

- Text-to-speech synthesis (TTS): Converts text to natural-sounding speech with a wide range of emotions and intonations.

- Multilingual support: Handles languages such as Chinese, English, and Japanese, and dialects such as Cantonese and Sichuanese.

- Emotion and intonation control: Adjusts the emotion (e.g., happy, sad) and prosodic style (e.g., rap, humming) of the output speech.

- Voice cloning: Generates a similar voice from an input voice sample, enabling personalized voice design.

- Conversation management: Maintains conversation continuity through a context manager, improving the user experience.

- Open-source toolchain: Provides complete code and model weights that developers can use directly or build upon.

Usage Guide

Step-Audio is an open-source multimodal voice interaction framework for developers building real-time voice applications. The following step-by-step guide covers installation and each of the main features so that you can get started quickly and make full use of the framework.

Installation process

Step-Audio must be installed in an environment with an NVIDIA GPU. The detailed steps are as follows:

- **Environment preparation:**

  - Make sure Python 3.10 is installed on your system.

  - Install Anaconda or Miniconda to manage virtual environments.

  - Verify that the NVIDIA GPU driver and CUDA are installed. Four A800/H800 GPUs with 80 GB of GPU memory each are recommended for the best generation quality.

- **Clone the repository:**

  - Open a terminal and run the following commands to clone the Step-Audio repository and enter it:

    ```bash
    git clone https://github.com/stepfun-ai/Step-Audio.git
    cd Step-Audio
    ```

- **Create a virtual environment:**

  - Create and activate a Python virtual environment:

    ```bash
    conda create -n stepaudio python=3.10
    conda activate stepaudio
    ```

- **Install dependencies:**

  - Install the required libraries and tools, and set up Git LFS, which is needed to download the large weight files:

    ```bash
    pip install -r requirements.txt
    git lfs install
    ```

  - Clone the additional model weights:

    ```bash
    git clone https://huggingface.co/stepfun-ai/Step-Audio-Tokenizer
    git clone https://huggingface.co/stepfun-ai/Step-Audio-Chat
    git clone https://huggingface.co/stepfun-ai/Step-Audio-TTS-3B
    ```

- **Verify the installation:**

  - Run a simple test script (such as `run_example.py` from the example code) to make sure all components work properly.

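Before running the example script, the requirements listed above can also be checked programmatically. The following is a minimal sketch, not part of Step-Audio: the helper names are hypothetical, it assumes the three model repositories were cloned into the current directory, and it reads GPU memory through `nvidia-smi`'s CSV query mode. The memory threshold is an assumption set slightly below 80 GiB, because different 80 GB cards report slightly different totals.

```python
"""Pre-flight check for a Step-Audio setup (hypothetical helper script)."""
from __future__ import annotations

import shutil
import subprocess
import sys
from pathlib import Path

# Model repositories cloned during installation.
MODEL_DIRS = ("Step-Audio-Tokenizer", "Step-Audio-Chat", "Step-Audio-TTS-3B")


def python_ok(version_info=sys.version_info) -> bool:
    """True if the interpreter is Python 3.10 or newer."""
    return tuple(version_info[:2]) >= (3, 10)


def gpu_memory_mib() -> list[int]:
    """Total memory of each GPU in MiB according to nvidia-smi; empty if unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.total", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=False,
    )
    if result.returncode != 0:
        return []
    return [int(tok) for tok in result.stdout.split() if tok.isdigit()]


def meets_gpu_recommendation(mem_mib: list[int], count: int = 4,
                             min_mib: int = 80_000) -> bool:
    """At least `count` GPUs with roughly 80 GB each (assumed threshold)."""
    return sum(1 for m in mem_mib if m >= min_mib) >= count


def missing_models(base: Path = Path(".")) -> list[str]:
    """Model directories under `base` that are absent or empty."""
    return [name for name in MODEL_DIRS
            if not (base / name).is_dir() or not any((base / name).iterdir())]


if __name__ == "__main__":
    mems = gpu_memory_mib()
    print("Python >= 3.10:", python_ok())
    print("GPU memory (MiB):", mems, "| meets 4x80GB:", meets_gpu_recommendation(mems))
    print("Missing model directories:", missing_models() or "none")
```

Run it from the directory that contains the cloned model folders; a `False` result or a non-empty list of missing directories points to the installation step to revisit.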

Once installation is complete, you can start using Step-Audio's features. The following sections describe how to use the main functions and their highlights.

Main Functions

#### 1. Real-time speech recognition (ASR)

Step-Audio's speech recognition feature converts user voice input into text, making it suitable for building voice assistants or real-time transcription systems.

- **Procedure:**

  - Make sure a microphone is connected and configured.

  - Start live audio streaming with the provided `stream_audio.py` script:

    ```bash
    python stream_audio.py --model Step-Audio-Chat
    ```

  - As you speak, the system converts your speech to text in real time and prints the result in the terminal. Check the log to confirm recognition accuracy.

- **Highlights:** Supports multilingual and dialect recognition, such as mixed Chinese and English input or regional speech such as Cantonese and Sichuanese.
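Streaming recognition generally consumes audio as a sequence of short chunks rather than as one finished recording. The sketch below is a generic illustration, not the internals of `stream_audio.py`: it uses Python's standard `wave` module to split a WAV file into fixed-duration chunks, which is a convenient way to simulate microphone input when testing a streaming pipeline offline. The `iter_chunks` helper and its 100 ms default are assumptions.

```python
from __future__ import annotations

import wave
from typing import Iterator


def iter_chunks(path: str, chunk_ms: int = 100) -> Iterator[bytes]:
    """Yield raw PCM data from a WAV file in chunks of `chunk_ms` milliseconds.

    The final chunk may be shorter. The chunk size is an illustrative
    assumption, not a Step-Audio setting.
    """
    with wave.open(path, "rb") as wav:
        frames_per_chunk = max(1, wav.getframerate() * chunk_ms // 1000)
        while True:
            data = wav.readframes(frames_per_chunk)
            if not data:
                break
            yield data


if __name__ == "__main__":
    import os
    import tempfile

    # Write one second of 16 kHz, 16-bit mono silence, then stream it back.
    path = os.path.join(tempfile.mkdtemp(), "silence.wav")
    with wave.open(path, "wb") as out:
        out.setnchannels(1)
        out.setsampwidth(2)
        out.setframerate(16000)
        out.writeframes(b"\x00\x00" * 16000)
    chunks = list(iter_chunks(path))
    print(len(chunks), len(chunks[0]))  # 10 3200
```

At 16 kHz with 2-byte samples, each 100 ms chunk holds 1,600 frames, or 3,200 bytes, so one second of audio yields ten chunks.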

#### 2. Text-to-speech synthesis (TTS)

With the TTS feature, you can convert any text into natural speech, supporting a wide range of emotions, speech rates and styles.

- **Procedure:**

  - Prepare the text to be synthesized, e.g., save it as `input.txt`.

  - Generate speech with the `text_to_speech.py` script:

    ```bash
    python text_to_speech.py --model Step-Audio-TTS-3B --input input.txt --output output.wav --emotion happy --speed 1.0
    ```

  - Parameter description:

    - `--emotion`: sets the emotion (e.g., `happy`, `sad`, `neutral`).

    - `--speed`: adjusts the speech rate (0.5 for slow, 1.0 for normal, 2.0 for fast).

    - `--output`: specifies the path of the output audio file.

- **Highlights:** Supports generating speech in rap and humming styles, for example:

  ```bash
  python text_to_speech.py --model Step-Audio-TTS-3B --input rap_lyrics.txt --style rap --output rap_output.wav
  ```

  This produces audio with a rap rhythm, well suited to music or entertainment applications.
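When the TTS step is driven from another program, validating arguments before launching the script avoids wasted runs. The sketch below only assembles the commands shown above: the flag names come from this article's examples, while the `build_tts_command` helper, the accepted emotion and style sets, and the 0.5 to 2.0 speed range are assumptions based on the parameter description, not a documented Step-Audio API.

```python
from __future__ import annotations

import sys

# Values mentioned in the parameter description; the script itself may accept more.
KNOWN_EMOTIONS = {"happy", "sad", "neutral"}
KNOWN_STYLES = {"rap", "humming"}


def build_tts_command(input_path: str, output_path: str,
                      emotion: str | None = None, speed: float | None = None,
                      style: str | None = None,
                      model: str = "Step-Audio-TTS-3B") -> list[str]:
    """Return an argv list for text_to_speech.py (hypothetical helper)."""
    cmd = [sys.executable, "text_to_speech.py", "--model", model,
           "--input", input_path, "--output", output_path]
    if emotion is not None:
        if emotion not in KNOWN_EMOTIONS:
            raise ValueError(f"unexpected emotion: {emotion!r}")
        cmd += ["--emotion", emotion]
    if speed is not None:
        if not 0.5 <= speed <= 2.0:
            raise ValueError("speed should be between 0.5 (slow) and 2.0 (fast)")
        cmd += ["--speed", str(speed)]
    if style is not None:
        if style not in KNOWN_STYLES:
            raise ValueError(f"unexpected style: {style!r}")
        cmd += ["--style", style]
    return cmd


if __name__ == "__main__":
    cmd = build_tts_command("input.txt", "output.wav", emotion="happy", speed=1.0)
    print(" ".join(cmd[1:]))
    # Inside the Step-Audio checkout, run it with: subprocess.run(cmd, check=True)
```

Using `sys.executable` instead of a bare `python` ensures the script runs in the same (activated) environment as the caller.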

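#### 3. Multilingual and emotion control

Step-Audio supports multiple languages and emotion control, which makes it suitable for developing international applications.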

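- **Procedure:**

  - Choose the target language and emotion. For example, to generate Japanese speech in a sad tone:

    ```bash
    python generate_speech.py --language japanese --emotion sad --text "私は悲しいです" --output sad_jp.wav
    ```

  - Dialect support: for Cantonese output, specify:

    ```bash
    python generate_speech.py --dialect cantonese --text "I'm so hung up on you" --output cantonese.wav
    ```

- **Highlights:** Languages and dialects can be switched seamlessly through instructions, which suits cross-cultural voice interaction systems.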
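Several language or dialect variants can be produced in one batch by generating the commands above from a small job list. This sketch assumes `generate_speech.py` accepts exactly the flags used in the two examples (`--language`, `--dialect`, `--emotion`, `--text`, `--output`); the `SpeechJob` class and `run_jobs` function are hypothetical. With the default `dry_run=True` it only returns the commands without running anything.

```python
from __future__ import annotations

import subprocess
import sys
from dataclasses import dataclass


@dataclass
class SpeechJob:
    """One synthesis request (hypothetical structure)."""
    text: str
    output: str
    language: str | None = None  # e.g. "japanese"
    dialect: str | None = None   # e.g. "cantonese"
    emotion: str | None = None   # e.g. "sad"

    def to_argv(self) -> list[str]:
        argv = [sys.executable, "generate_speech.py"]
        # Pass only the optional flags that were set, in the order used in the examples.
        for flag, value in (("--language", self.language),
                            ("--dialect", self.dialect),
                            ("--emotion", self.emotion)):
            if value is not None:
                argv += [flag, value]
        return argv + ["--text", self.text, "--output", self.output]


def run_jobs(jobs: list[SpeechJob], dry_run: bool = True) -> list[list[str]]:
    """Build one command per job and, unless dry_run is set, execute them in order."""
    commands = [job.to_argv() for job in jobs]
    if not dry_run:
        for cmd in commands:
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    jobs = [
        SpeechJob("私は悲しいです", "sad_jp.wav", language="japanese", emotion="sad"),
        SpeechJob("I'm so hung up on you", "cantonese.wav", dialect="cantonese"),
    ]
    for cmd in run_jobs(jobs):
        print(cmd[1:])
```

Passing the arguments as a list rather than a single shell string keeps non-ASCII text such as the Japanese sample intact without any shell quoting.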

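#### 4. Voice cloning

Voice cloning lets users upload a voice sample and generate a similar-sounding voice, which is useful for personalized voice design.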

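- **Procedure:**

  - Prepare an audio sample (e.g., `sample.wav`) and make sure the recording is clear.

  - Run `voice_clone.py` to clone the voice:

    ```bash
    python voice_clone.py --input sample.wav --output cloned_voice.wav --model Step-Audio-Chat
    ```

  - The generated `cloned_voice.wav` imitates the timbre and style of the input sample.

- **Highlights:** Supports high-fidelity cloning, suitable for virtual streamers or custom voice assistants.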
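The procedure only asks for a clear sample. One way to catch obvious problems before cloning is to inspect the WAV file itself. The sketch below uses the standard `wave` and `array` modules and assumes 16-bit PCM input; the `inspect_sample` helper, the 3-second minimum duration, and the clipping threshold are illustrative assumptions, not requirements published by Step-Audio.

```python
from __future__ import annotations

import array
import sys
import wave


def inspect_sample(path: str, min_seconds: float = 3.0,
                   max_clipped_ratio: float = 0.001) -> list[str]:
    """Return warnings for a 16-bit PCM WAV voice sample (empty list if none).

    Thresholds are illustrative assumptions, not Step-Audio requirements.
    """
    problems = []
    with wave.open(path, "rb") as wav:
        if wav.getsampwidth() != 2:
            return ["expected 16-bit PCM audio"]
        rate = wav.getframerate()
        frames = wav.getnframes()
        samples = array.array("h", wav.readframes(frames))
    if sys.byteorder == "big":
        samples.byteswap()  # WAV sample data is little-endian
    duration = frames / rate
    if duration < min_seconds:
        problems.append(f"sample is only {duration:.1f}s long")
    if not samples or max(abs(s) for s in samples) == 0:
        problems.append("sample is silent")
    else:
        # Samples at full scale usually indicate the recording level was too high.
        clipped = sum(1 for s in samples if abs(s) >= 32767)
        if clipped / len(samples) > max_clipped_ratio:
            problems.append(f"{clipped} samples are clipped")
    return problems


if __name__ == "__main__":
    # Usage: python check_sample.py sample.wav [more.wav ...]
    for path in sys.argv[1:]:
        found = inspect_sample(path)
        print(path, "looks usable" if not found else found)
```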

#### 5. Dialog management and context retention

Step-Audio has a built-in context manager that keeps conversations continuous and logically coherent.

- **Procedure:**

  - Start the dialog system:

    ```bash
    python chat_system.py --model Step-Audio-Chat
    ```

  - Enter text or speech, and the system generates a response based on the context. For example:

    - User: "How's the weather today?"

    - System: "Please tell me your location and I'll check it out."

    - User: "I'm in Beijing."

    - System: "Beijing is sunny today, with a temperature of 15°C."

- **Highlights:** Supports multi-turn dialog and retains contextual information, making it suitable for customer service bots or intelligent assistants.
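The example exchange works because each new request is answered together with the earlier turns. The sketch below shows that idea in its simplest form: a bounded history of (role, text) turns rendered as context for the next request. It is a generic illustration rather than Step-Audio's actual context manager; the `ConversationContext` class, the turn limit, and the rendering format are hypothetical.

```python
from __future__ import annotations

from collections import deque


class ConversationContext:
    """Bounded multi-turn history (hypothetical; not Step-Audio's implementation)."""

    def __init__(self, max_turns: int = 10) -> None:
        # A deque with maxlen silently drops the oldest turn once it is full.
        self.turns: deque[tuple[str, str]] = deque(maxlen=max_turns)

    def add(self, role: str, text: str) -> None:
        """Record one turn, e.g. ("User", "I'm in Beijing.")."""
        self.turns.append((role, text))

    def render(self) -> str:
        """Flatten the history into one text block for the next request."""
        return "\n".join(f"{role}: {text}" for role, text in self.turns)


if __name__ == "__main__":
    ctx = ConversationContext(max_turns=4)
    ctx.add("User", "How's the weather today?")
    ctx.add("System", "Please tell me your location and I'll check it out.")
    ctx.add("User", "I'm in Beijing.")
    # Sending this whole block lets the model connect "Beijing" to the weather question.
    print(ctx.render())
```

A fixed turn limit is the simplest retention policy; it keeps the context size bounded at the cost of forgetting the oldest turns.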

Notes

- **Hardware requirements:** Make sure there is sufficient GPU memory; 80 GB or more is recommended for optimal performance.

- **Network connection:** Some of the model weights are downloaded from Hugging Face, so make sure your network connection is stable.

- **Troubleshooting:** If you encounter installation or runtime errors, check the log files or search the project's GitHub Issues page for help.
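Because model weights are fetched over the network, a transient failure can interrupt a clone. The sketch below retries a command with exponential backoff. The `run_with_retry` and `clone_all` helpers, the retry count, and the delays are assumptions for illustration; `runner` and `sleep` are injectable so the logic can be exercised without network access.

```python
from __future__ import annotations

import subprocess
import time
from typing import Callable, Sequence

# Weight repositories from the installation steps.
REPOS = [
    "https://huggingface.co/stepfun-ai/Step-Audio-Tokenizer",
    "https://huggingface.co/stepfun-ai/Step-Audio-Chat",
    "https://huggingface.co/stepfun-ai/Step-Audio-TTS-3B",
]


def _subprocess_runner(cmd: Sequence[str]) -> int:
    """Run a command and return its exit status."""
    return subprocess.run(list(cmd)).returncode


def run_with_retry(cmd: Sequence[str], attempts: int = 3, base_delay: float = 5.0,
                   runner: Callable[[Sequence[str]], int] = _subprocess_runner,
                   sleep: Callable[[float], None] = time.sleep) -> bool:
    """Run `cmd` up to `attempts` times, doubling the wait after each failure.

    Returns True on the first zero exit status, False if every attempt fails.
    """
    for attempt in range(attempts):
        if runner(cmd) == 0:
            return True
        if attempt < attempts - 1:
            sleep(base_delay * 2 ** attempt)  # 5 s, 10 s, 20 s, ...
    return False


def clone_all(repos: Sequence[str] = REPOS, **retry_kwargs) -> list[str]:
    """Clone each repository with retries; return the URLs that still failed."""
    return [url for url in repos
            if not run_with_retry(["git", "clone", url], **retry_kwargs)]
```

Calling `clone_all()` from the directory where the weights should live returns an empty list when every clone succeeded. If a failed attempt leaves a partial directory behind, it may need to be removed before retrying, since `git clone` refuses to write into an existing non-empty directory.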

By following these steps, you can make full use of Step-Audio, whether you are developing real-time speech applications, creating personalized voice content, or building a multilingual dialog system. Because Step-Audio is open source, you can also modify the code and optimize the model to meet your project's specific requirements.

© Copyright notes

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
