An In-Depth Look at Titans: The Path Toward Converging Long-Term Memory and Efficient Sequence Modeling

Original paper, Titans: Learning to Memorize at Test Time: https://arxiv.org/pdf/2501.00663v1

Unofficial implementation of the Titans architecture: https://github.com/lucidrains/titans-pytorch

One, Background and Motivation: Limitations of the Transformer and the Inspiration of Human Memory

1. Limitations of the Transformer: A Bottleneck in Long-Sequence Processing

Since its introduction, the Transformer has been recognized for its powerful self-attention mechanism, which has driven revolutionary progress in areas such as natural language processing and computer vision. However, as task complexity increases, the Transformer gradually exposes some critical problems when dealing with long sequences:

- High computational complexity limits scalability:

- Self-attention computes the similarity between each token and every other token in the sequence, so its time and space complexity are O(N²), where N is the sequence length.

- As the sequence grows, computation and memory consumption therefore grow quadratically, which severely limits the model's ability to handle long sequences. For example, the Transformer is often overwhelmed by tasks such as processing long texts, video comprehension, or long-term time series forecasting.

Figure 1: The computational process of the self-attention mechanism.

- A limited context window makes long-distance dependencies hard to capture:

- To ease the computational burden, Transformers typically use a fixed-length context window (e.g., 512 or 1,024 tokens), so the model can only attend to information inside the current window.

- However, many real-world tasks require models that can capture dependencies over longer time scales, such as understanding contextual information in long texts or conversations, integrating information from different points in time in videos, and making predictions using long-term trends and patterns in historical data.
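To make the quadratic cost of self-attention described above concrete, here is a minimal pure-Python sketch of single-head softmax attention. It is illustrative only: there are no learned projections, masking, or batching, and `naive_attention` and `softmax` are hypothetical helpers rather than any library's API. The point is the N × N weight matrix it builds, which is exactly what grows quadratically with sequence length.

```python
import math


def softmax(xs):
    # numerically stable softmax over one row of scores
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def naive_attention(Q, K, V):
    """Single-head softmax attention with no projections or masking (illustrative).

    Builds the full N x N weight matrix, which is why time and memory
    grow quadratically with the sequence length N.
    """
    d = len(Q[0])
    weights = []
    for q in Q:  # N queries ...
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in K]  # ... each scored against N keys
        weights.append(softmax(scores))
    outputs = [
        [sum(w * v[c] for w, v in zip(row, V)) for c in range(len(V[0]))]
        for row in weights
    ]
    return outputs, weights


N, d = 6, 4
X = [[math.sin(i + c) for c in range(d)] for i in range(N)]
out, W = naive_attention(X, X, X)
n_scores = sum(len(row) for row in W)  # N * N = 36 entries
```

Doubling `N` doubles both the number of queries and the number of keys each query is scored against, so the number of scores, and the work to compute them, grows fourfold.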

2. The Linear Transformer Tradeoff: Efficiency vs. Performance

To address this computational bottleneck, researchers proposed the Linear Transformer. Its main improvements are:

- Replacing softmax with kernel functions. Replacing the softmax in self-attention with a kernel function reduces the computational complexity to O(N).

- Parallel training and efficient inference. The Linear Transformer can be trained in parallel, and its computation can also be written in a recurrent form, which makes inference more efficient.
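The recurrent form can be illustrated with a small sketch of causal linear attention, assuming the feature map φ(x) = elu(x) + 1 (one common choice in the linear-attention literature, not necessarily the one any particular model uses). Both functions are hypothetical and compute the same outputs: the first sums over all earlier positions, while the second carries a fixed-size state S = Σ φ(k_j) v_jᵀ and a normalizer z = Σ φ(k_j) forward one token at a time.

```python
import math


def phi(x):
    # elu(x) + 1, a positive feature map (assumed choice for this sketch)
    return [xi + 1.0 if xi > 0 else math.exp(xi) for xi in x]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def linear_attention_parallel(Q, K, V):
    """Causal linear attention computed by summing over all earlier positions."""
    out = []
    for i, q in enumerate(Q):
        fq = phi(q)
        num = [0.0] * len(V[0])
        den = 0.0
        for j in range(i + 1):
            w = dot(fq, phi(K[j]))
            den += w
            for c in range(len(num)):
                num[c] += w * V[j][c]
        out.append([n / den for n in num])
    return out


def linear_attention_recurrent(Q, K, V):
    """Same outputs from a fixed-size state: S (d_k x d_v) and normalizer z (d_k)."""
    dk, dv = len(K[0]), len(V[0])
    S = [[0.0] * dv for _ in range(dk)]
    z = [0.0] * dk
    out = []
    for q, k, v in zip(Q, K, V):
        fq, fk = phi(q), phi(k)
        for a in range(dk):  # S <- S + phi(k) v^T,  z <- z + phi(k)
            z[a] += fk[a]
            for c in range(dv):
                S[a][c] += fk[a] * v[c]
        num = [sum(fq[a] * S[a][c] for a in range(dk)) for c in range(dv)]
        out.append([n / dot(fq, z) for n in num])
    return out


N, d = 8, 3
Q = [[math.sin(0.3 * i + j) for j in range(d)] for i in range(N)]
K = [[math.cos(0.7 * i - j) for j in range(d)] for i in range(N)]
V = [[0.1 * i + j for j in range(d)] for i in range(N)]
par = linear_attention_parallel(Q, K, V)
rec = linear_attention_recurrent(Q, K, V)
max_err = max(abs(a - b) for ra, rb in zip(par, rec) for a, b in zip(ra, rb))
```

The recurrent version does O(d_k · d_v) work per token no matter how long the sequence is, which is where the linear-in-N cost comes from.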

However, the Linear Transformer also has some limitations:

- Performance degradation.

- The kernel trick turns the model into a linear recurrent network that compresses the data into a matrix-valued state, so its performance falls short of the standard Transformer.

- Such linear compression struggles to capture complex nonlinear dependencies.

- Memory management issues.

- The Linear Transformer compresses historical data into a fixed-size matrix, but with very long contexts this leads to memory overflow, which hurts model performance.

Figure 2: Memory update process for the Linear Transformer.
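Why a fixed-size matrix state eventually "overflows" can be seen with a toy linear associative memory that writes each pair additively as M += v kᵀ and reads with M k. This is a simplification under stated assumptions (identity feature map, no normalization, hypothetical helper names): with d orthonormal keys a d × d matrix recalls every value exactly, but once there are more pairs than dimensions the keys must overlap, and each read picks up crosstalk from the other stored values.

```python
import math
import random


def store(pairs, d):
    # additive write M += v k^T into a fixed d x d matrix (toy linear memory)
    M = [[0.0] * d for _ in range(d)]
    for k, v in pairs:
        for r in range(d):
            for c in range(d):
                M[r][c] += v[r] * k[c]
    return M


def recall(M, k):
    # read-out M k
    return [sum(M[r][c] * k[c] for c in range(len(k))) for r in range(len(M))]


def unit(x):
    n = math.sqrt(sum(xi * xi for xi in x))
    return [xi / n for xi in x]


def mean_recall_error(pairs, d):
    M = store(pairs, d)
    errs = [math.dist(recall(M, k), v) for k, v in pairs]
    return sum(errs) / len(errs)


d = 4
rng = random.Random(0)
# d orthonormal keys (the standard basis): every value is recalled exactly
basis = [[1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]
few = [(k, [rng.gauss(0, 1) for _ in range(d)]) for k in basis]
# 4 * d random unit keys: more pairs than dimensions, so reads interfere
many = [(unit([rng.gauss(0, 1) for _ in range(d)]), [rng.gauss(0, 1) for _ in range(d)])
        for _ in range(4 * d)]
err_few = mean_recall_error(few, d)
err_many = mean_recall_error(many, d)
```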

3. Inspiration of the human memory system: building stronger mechanisms for long-term memory

To overcome these challenges, the authors drew inspiration from the human memory system:

- The relationship between memory and learning:

- The paper draws on definitions of memory and learning from the neuropsychological literature, viewing memory as neural updating induced by input, and defining learning as the process of acquiring effective and useful memories given a goal.

- In other words, effective learning cannot be achieved without a strong memory mechanism.

- The multilevel nature of human memory:

- The human memory system is not a single structure but consists of multiple subsystems, such as short-term memory, working memory, and long-term memory, each of which has a different function and organization and is capable of operating independently.

- This multi-layered nature allows humans to store, retrieve and manage information efficiently.

- Shortcomings of existing models.

- Existing neural network architectures, from Hopfield networks to LSTMs and Transformers, struggle with generalization, length extrapolation, and/or reasoning, capabilities that are critical for many complex real-world tasks.

- Although these architectures draw inspiration from the human brain, they all lack effective modeling of long-term memory and fail to simulate the multi-level nature of the memory system.

Two, Core Innovation: Neural Long-Term Memory Module and Titans Architecture

Based on these reflections, the authors propose the following innovations:

1. Neural Long-term Memory Module

(1) Design Concept.

- Meta in-context learning.

- The module is designed as a meta-model that learns how to memorize/store data in its parameters at test time.

- This online-learning approach lets the model dynamically adjust its memory to the current input instead of relying on a memory fixed during pre-training.

- Surprise-based memory updates:

- Drawing on the observation from human memory that "surprising events are more likely to be remembered," the authors propose a memory-update mechanism driven by the degree of surprise.

- Surprise is measured by the gradient of the memory network's loss on the current input: the larger the gradient, the more the input differs from past data and the more it is worth remembering.

- This method is effective in capturing the key information in the data and storing it in the long term memory.

- In contrast, the Linear Transformer only applies a linear update driven by the current input, which makes it hard to capture long-range dependencies effectively.

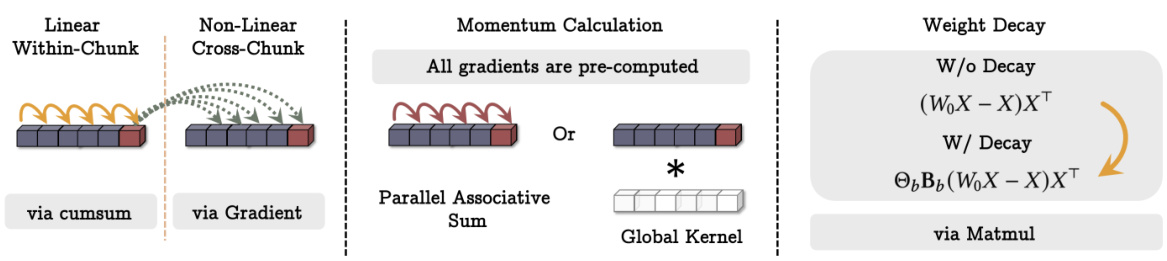

Figure 3: Surprise-based memory update mechanism.
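For a linear memory M and the associative loss ℓ(M) = ‖M k − v‖², the gradient is 2 (M k − v) kᵀ, so its size directly reflects how badly the memory currently predicts v from k. The sketch below is a hypothetical illustration that uses a fixed step size in place of the paper's data-dependent one and omits the key/value projections. It shows that an already-memorized pair produces zero surprise, while a novel pair produces a large gradient that one update step then reduces.

```python
import math


def surprise(M, k, v):
    """Gradient of l(M) = ||M k - v||^2 for a linear memory M, plus its norm.

    The gradient is 2 (M k - v) k^T; its Frobenius norm is used here as the 'surprise'.
    """
    pred = [sum(m * kc for m, kc in zip(row, k)) for row in M]
    err = [p - t for p, t in zip(pred, v)]
    grad = [[2.0 * e * kc for kc in k] for e in err]
    norm = math.sqrt(sum(g * g for row in grad for g in row))
    return grad, norm


k1, v1 = [1.0, 0.0], [0.5, -1.0]
M = [[v1[r] * k1[c] for c in range(2)] for r in range(2)]  # k1 -> v1 already memorized
_, s_old = surprise(M, k1, v1)  # familiar pair: zero gradient

k2, v2 = [0.0, 1.0], [2.0, 1.0]
g, s_new = surprise(M, k2, v2)  # novel pair: large gradient

theta = 0.25  # fixed step size (assumption; the paper's theta_t is data-dependent)
M = [[m - theta * gi for m, gi in zip(row, grow)] for row, grow in zip(M, g)]
_, s_after = surprise(M, k2, v2)  # smaller after one update
recalled_k1 = [sum(m * kc for m, kc in zip(row, k1)) for row in M]  # old memory intact
```

Because k1 and k2 are orthogonal here, the update only touches the column addressed by k2, so the earlier association is left untouched.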

(2) Key technologies.

- Momentum mechanism.

- To prevent the model from being over-influenced by a single surprising event, the authors introduce a momentum term that carries the surprise of past time steps forward.

- This means that the model takes into account the surprise of both current and historical inputs, leading to smoother memory updates.

- Decay (forgetting) mechanism.

- To prevent memory overflow, the authors also introduce a decay mechanism that gradually forgets unimportant information through weight decay.

- The mechanism can be viewed as a gating mechanism that selectively erases memories when needed.

- The authors note that this decay mechanism generalizes the forgetting mechanism of modern recurrent models, and that the overall update is equivalent to optimizing a meta neural network with mini-batch gradient descent, momentum, and weight decay.
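Putting surprise, momentum, and decay together, the paper's update rule can be written roughly as follows. Here x_t is the current input, W_K, W_V, and W_Q are learned projections, η_t controls how much past surprise carries over, θ_t scales the momentary surprise, α_t ∈ [0, 1] acts as the forgetting gate (all three are data-dependent), and M* denotes a forward pass through the memory without updating its weights.

```latex
\begin{aligned}
k_t &= x_t W_K, \qquad v_t = x_t W_V, \\
\ell(\mathcal{M}_{t-1}; x_t) &= \lVert \mathcal{M}_{t-1}(k_t) - v_t \rVert_2^2, \\
S_t &= \eta_t \, S_{t-1} - \theta_t \, \nabla \ell(\mathcal{M}_{t-1}; x_t), \\
\mathcal{M}_t &= (1 - \alpha_t)\, \mathcal{M}_{t-1} + S_t, \\
y_t &= \mathcal{M}^{*}(q_t), \qquad q_t = x_t W_Q .
\end{aligned}
```

Setting η_t = 0 recovers plain surprise-driven gradient descent, and setting α_t = 0 disables forgetting.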

(3) Memory structures.

- In contrast to traditional linear memory models, the authors use a multi-layer perceptron (MLP) as the memory module.

- An MLP has greater nonlinear expressive power and can store and retrieve complex information more effectively.

- In contrast, linear Transformers can only use matrix-valued states to store information, making it difficult to capture complex nonlinear relationships.
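To illustrate how a deeper memory can be trained at test time with the surprise, momentum, and decay ideas above, here is a hypothetical two-layer MLP memory M(k) = W2 tanh(W1 k) with hand-written gradients. The class name, layer sizes, tanh activation, and the fixed values of θ (theta), η (eta), and α (alpha) are all assumptions for this sketch; in the paper these coefficients are data-dependent.

```python
import math
import random


class MLPMemory:
    """Hypothetical 2-layer MLP memory M(k) = W2 tanh(W1 k), updated at test time."""

    def __init__(self, d_in, d_hidden, d_out, seed=0):
        rng = random.Random(seed)
        self.W1 = [[rng.gauss(0, 0.5) for _ in range(d_in)] for _ in range(d_hidden)]
        self.W2 = [[rng.gauss(0, 0.5) for _ in range(d_hidden)] for _ in range(d_out)]
        # momentum buffers ("past surprise"), one per weight matrix
        self.S1 = [[0.0] * d_in for _ in range(d_hidden)]
        self.S2 = [[0.0] * d_hidden for _ in range(d_out)]

    def _hidden(self, k):
        return [math.tanh(sum(w * x for w, x in zip(row, k))) for row in self.W1]

    def retrieve(self, k):
        # forward pass only (M* in the paper's notation): no weight update
        h = self._hidden(k)
        return [sum(w * hi for w, hi in zip(row, h)) for row in self.W2]

    def loss(self, k, v):
        return sum((p - t) ** 2 for p, t in zip(self.retrieve(k), v))

    def update(self, k, v, theta=0.1, eta=0.9, alpha=0.01):
        """One test-time step: gradient 'surprise', momentum, then weight decay."""
        h = self._hidden(k)
        e = [sum(w * hi for w, hi in zip(row, h)) - t for row, t in zip(self.W2, v)]
        g2 = [[2.0 * ei * hj for hj in h] for ei in e]  # dl/dW2
        dh = [2.0 * sum(self.W2[i][j] * e[i] for i in range(len(e))) for j in range(len(h))]
        da = [dh[j] * (1.0 - h[j] ** 2) for j in range(len(h))]  # back through tanh
        g1 = [[da[j] * kc for kc in k] for j in range(len(h))]  # dl/dW1
        for W, S, G in ((self.W1, self.S1, g1), (self.W2, self.S2, g2)):
            for r in range(len(W)):
                for c in range(len(W[r])):
                    S[r][c] = eta * S[r][c] - theta * G[r][c]  # momentum on surprise
                    W[r][c] = (1.0 - alpha) * W[r][c] + S[r][c]  # decay, then write


mem = MLPMemory(d_in=3, d_hidden=8, d_out=2)
k, v = [0.2, -0.4, 0.9], [0.5, -0.3]
before = mem.loss(k, v)
for _ in range(50):
    mem.update(k, v, theta=0.05, eta=0.5, alpha=0.001)
after = mem.loss(k, v)
```

All gradients are computed before any weight is modified, so each step is a proper gradient update on the current memory; the hidden layer is what gives this memory its nonlinear capacity compared with the single matrix of a linear model.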

2. Titans Architecture: Integrating Long-Term and Short-Term Memory

After designing the neural long-term memory module, the authors considered how to integrate it effectively into a deep learning architecture and proposed the Titans architecture, with the following key features:

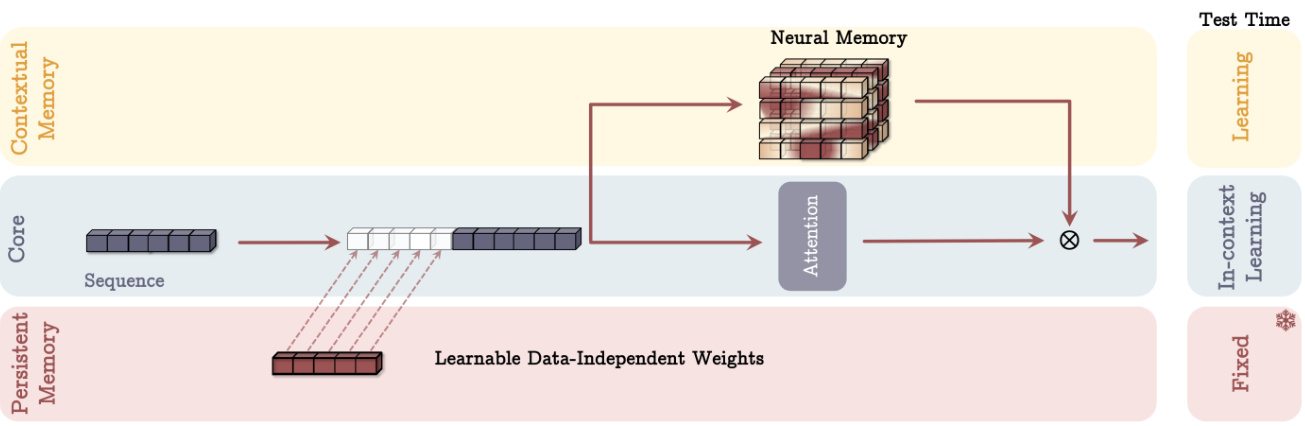

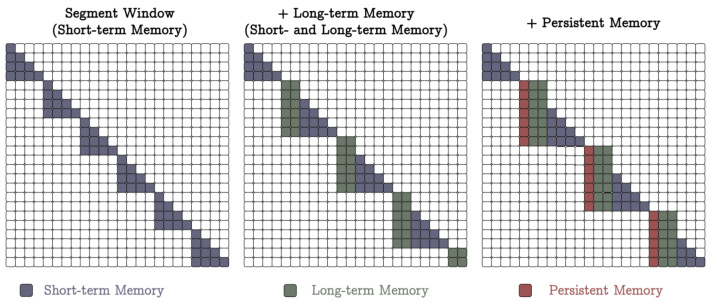

(1) Three hyper-heads working in tandem.

- Core.

- Consists of short-term memory and is responsible for the main flow of data processing.

- Uses attention with a limited window size, such as sliding window attention (SWA) or fully connected attention (FCA).

- The core can be viewed as short-term memory, which captures dependencies within the current context.

- Long-term Memory.

- Responsible for storing/remembering information from the long past.

- Implemented with the neural long-term memory module described above.

- Long-term memory acts as a longer-lasting, more persistent memory, used to store and retrieve information over a longer time frame.

- Persistent Memory.

- A set of learnable but input-independent parameters that encode prior knowledge about the task.

- Similar to the parameters of the fully connected layers in a Transformer, but serving a different function.

- Persistent memory can be viewed as meta-memory, which stores task-related knowledge such as grammar rules and commonsense knowledge.

Figure 4: Schematic diagram of the Titans architecture (MAC variant).
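How the three hyper-heads cooperate in the MAC variant can be sketched for a single head with toy components: for each segment, retrieve from long-term memory, attend over [persistent tokens, retrieved tokens, segment], write the attention outputs back into memory, and combine. Everything here is an assumption made for illustration: tokens double as queries, keys, and values (no learned projections), the long-term memory is a linear matrix updated by one fixed-rate gradient step per token, and the combination o = y ⊙ M*(y) uses an element-wise product. All function names are hypothetical.

```python
import math


def matvec(M, x):
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


def attend(query, tokens):
    # softmax attention of one query over tokens (tokens serve as keys and values)
    d = len(query)
    scores = [sum(a * b for a, b in zip(query, t)) / math.sqrt(d) for t in tokens]
    m = max(scores)
    w = [math.exp(s - m) for s in scores]
    z = sum(w)
    return [sum(wi * t[c] for wi, t in zip(w, tokens)) / z for c in range(d)]


def memory_step(M, k, v, theta):
    # one gradient step on ||M k - v||^2 for a linear long-term memory
    err = [p - t for p, t in zip(matvec(M, k), v)]
    return [[m - theta * 2.0 * e * kc for m, kc in zip(row, k)] for row, e in zip(M, err)]


def mac_forward(x, persistent, seg_len, theta=0.1):
    """Toy Memory-as-Context pass over a sequence split into segments (hypothetical)."""
    d = len(x[0])
    M = [[0.0] * d for _ in range(d)]  # long-term memory starts empty
    outputs = []
    for s in range(0, len(x), seg_len):
        seg = x[s:s + seg_len]
        # 1) long-term memory: retrieve h = M*(q) for each token (no weight update)
        h = [matvec(M, tok) for tok in seg]
        # 2) core: causal attention over [persistent || retrieved || segment so far]
        y = [attend(tok, persistent + h + seg[:i + 1]) for i, tok in enumerate(seg)]
        # 3) test-time learning: write attention outputs into memory (keys = values = y, an assumption)
        for yt in y:
            M = memory_step(M, yt, yt, theta)
        # 4) combine each output with a read-out of the updated memory: o = y * M*(y)
        outputs.extend([a * b for a, b in zip(yt, matvec(M, yt))] for yt in y)
    return outputs, M


x = [[math.sin(0.5 * i + c) for c in range(4)] for i in range(12)]
persistent = [[0.1, 0.0, 0.0, 0.0], [0.0, 0.1, 0.0, 0.0]]  # stand-ins for learned tokens
out, M = mac_forward(x, persistent, seg_len=4)
```

Attention cost here is bounded by the segment length plus the number of persistent and retrieved tokens, while information from earlier segments reaches later ones only through the memory matrix `M`.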

(2) Three ways of integrating memory.

- Memory as Context (MAC).

- Concatenates the retrieved long-term memory and the persistent memory with the input sequence, as additional context for the current segment.

- The attention mechanism decides which information needs to be stored in long-term memory.

- At test time, the persistent memory parameters stay fixed, the attention weights act as an in-context learner, and the long-term memory module keeps learning (memorizing) information.

- This design lets the model flexibly use long-term memory based on the current input.

- Memory as Gating (MAG).

- Uses sliding window attention as short-term memory and the neural memory module as long-term memory.

- The two branches are combined through a gating mechanism: their outputs are normalized using learnable vector-valued weights, and a nonlinear activation is then applied.

- This design can be viewed as a multi-head architecture in which different heads are structured differently.

Figure 5: Different variants of the Titans architecture (MAC and MAG).

- Memory as a Layer (MAL).

- Uses the neural memory module as a layer of the deep network, compressing past and current context before it reaches the attention module.

- This design is more common in the literature, for example in hybrid models such as H3.
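The MAG and MAL combinations can likewise be sketched for single token vectors. The exact normalization and nonlinearity are assumptions here: each branch is RMS-normalized and scaled by a learnable weight vector, and a sigmoid of the memory branch gates the attention branch element-wise. MAL is simply function composition with the memory layer applied before attention. All names are hypothetical, and the stand-in layers in the usage lines are toys.

```python
import math


def rms_norm(x, weight, eps=1e-6):
    # RMS-normalize x, then scale element-wise by a learnable weight vector
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [w * v / rms for w, v in zip(weight, x)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def mag_combine(y_attn, y_mem, w_attn, w_mem):
    """MAG-style gating for one token (assumed form): norm(y_attn) * sigmoid(norm(y_mem))."""
    a = rms_norm(y_attn, w_attn)
    g = [sigmoid(v) for v in rms_norm(y_mem, w_mem)]
    return [ai * gi for ai, gi in zip(a, g)]


def mal_forward(tokens, memory_layer, attention_layer):
    """MAL: the memory layer transforms every token first, then attention runs on the result."""
    return attention_layer([memory_layer(t) for t in tokens])


y_attn, y_mem = [1.0, -2.0, 0.5], [0.0, 3.0, -3.0]
ones = [1.0, 1.0, 1.0]
o = mag_combine(y_attn, y_mem, ones, ones)

# toy stand-ins: a scaling "memory" layer and a mean-pooling "attention" layer
pooled = mal_forward([[1.0, 2.0], [3.0, 4.0]],
                     memory_layer=lambda t: [2.0 * v for v in t],
                     attention_layer=lambda seq: [sum(col) / len(seq) for col in zip(*seq)])
```

In MAG both branches see the same input in parallel and only meet at the gate, whereas in MAL the attention layer can only see what the memory layer passes on, which is one way to read why MAC and MAG tend to outperform MAL.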

(3) Strengths.

- More flexible memory management.

- By using memory modules as context or as a gated branch, the Titans architecture can dynamically draw on long-term memory based on the current input.

- This provides more flexibility than the traditional approach of using memory modules as layers.

- Greater expressiveness.

- The synergy of the three hyper-heads lets the Titans architecture process long sequences more efficiently and combine the benefits of short-term, long-term, and persistent memory.

- Scalability.

- Compared with the Transformer, the Titans architecture scales better to long sequences and maintains high performance with larger context windows.

Three, Experimental Results and Analysis: Validating the Titans Architecture

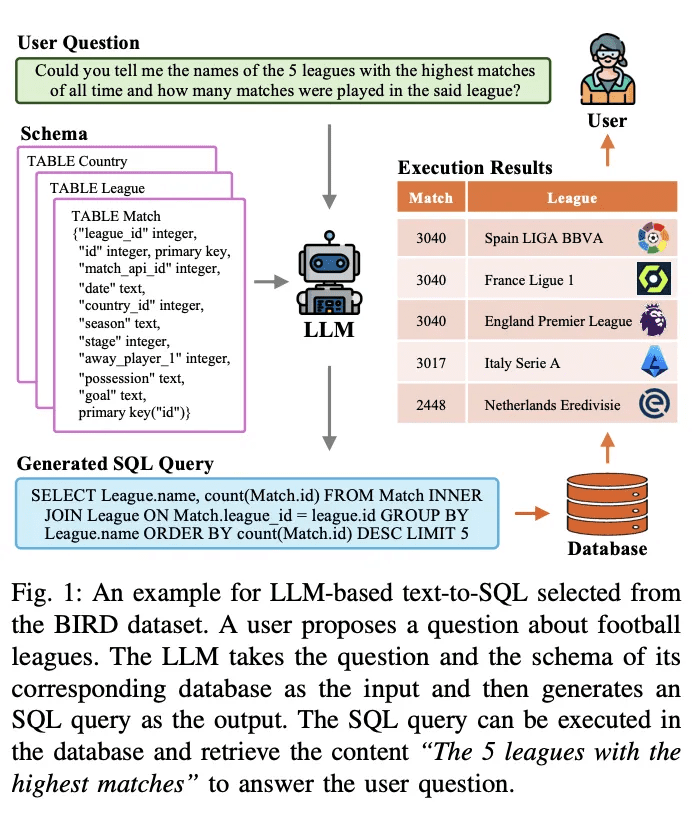

The authors conducted extensive experiments on several tasks to evaluate the performance of the Titans architecture and its variants:

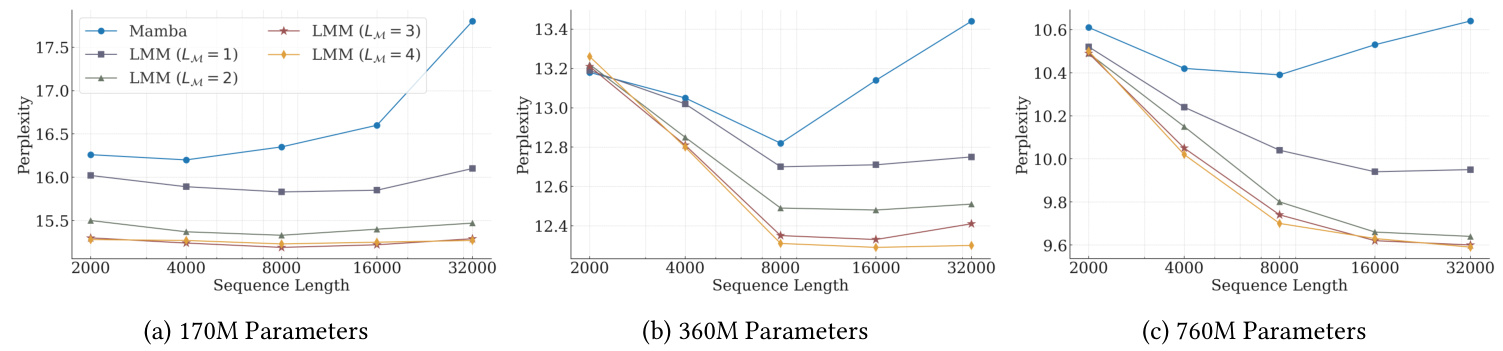

1. Language modeling and commonsense reasoning.

- Experimental setup.

- Titans models at three sizes (340M, 400M, and 760M parameters) were compared with several baselines, including Transformer++, RetNet, GLA, Mamba, Mamba2, DeltaNet, TTT, and Gated DeltaNet.

- The models were trained on the FineWeb-Edu dataset.

- Key findings.

- Among non-hybrid models, the neural long-term memory module achieved the best perplexity and accuracy.

- All three variants of Titans (MAC, MAG, MAL) outperformed Samba (Mamba + attention) and Gated DeltaNet-H2 (Gated DeltaNet + attention).

- MAC performs better in dealing with long-range dependencies, while both MAG and MAC outperform the MAL variant.

Figure 6: Comparison of Titans' performance with the baseline model on language modeling and commonsense reasoning tasks.

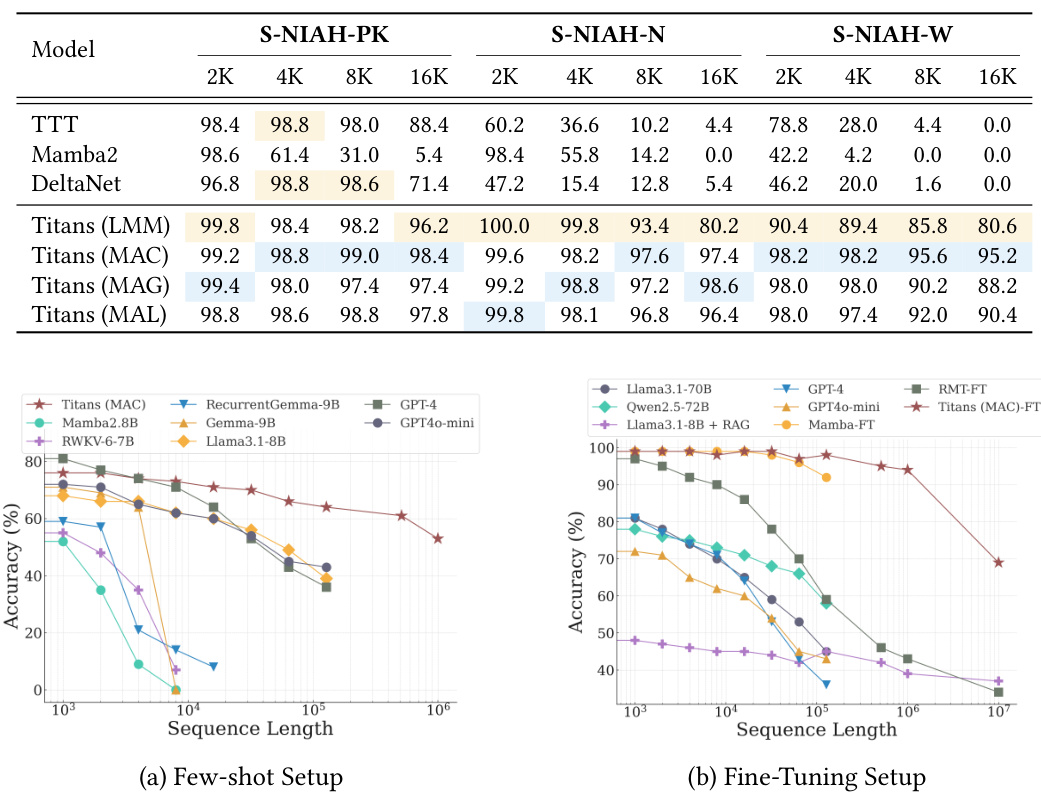

2. "Needle in a haystack" task.

- Experimental setup.

- Using the single-needle NIAH (S-NIAH) tasks from the RULER benchmark, the models' retrieval ability was evaluated on sequences of 2K, 4K, 8K, and 16K tokens.

- Key findings.

- The neural long-term memory module achieved the best results on all three S-NIAH tasks.

- The Titans variants also performed well, with the MAC variant performing the best.

3. BABILong benchmark.

- Experimental setup.

- The task requires the model to reason over facts distributed across extremely long documents.

- It is evaluated in both a few-shot setting and a fine-tuning setting.

- Key findings.

- In the few-shot setting, Titans outperforms all baselines, including much larger models such as GPT-4 and GPT-4o-mini.

- In the fine-tuning setting, Titans again outperforms all models, including very large ones such as GPT-4.

- Compared with the Recurrent Memory Transformer (RMT), Titans performs better, mainly thanks to its stronger memorization capability.

Figure 7: Comparison of the performance of Titans with the baseline model on the BABILong benchmark.

4. Time series forecasting.

- Experimental setup.

- Using the Simba framework, with its Mamba module replaced by the neural long-term memory module.

- Evaluated on ETT, ECL, Traffic and Weather benchmark datasets.

- Key findings.

- The neural long-term memory module outperforms all baselines, including Mamba-based, linear, and Transformer-based models.

5. DNA modeling.

- Experimental setup.

- The downstream performance of pre-trained models is evaluated on GenomicsBenchmarks.

- Key findings.

- Titans (LMM) is competitive in different downstream genomics tasks and is on par with state-of-the-art methods.

6. Efficiency analysis.

- Key findings.

- The neural long-term memory module is slightly slower to train than other recurrent models, mainly because of its deeper memory and more complex transition process, and because Mamba2 benefits from a highly optimized kernel implementation.

- Titans (MAL) is faster than the baselines and than the memory module alone, mainly because it uses FlashAttention's highly optimized kernel.

7. Ablation studies.

- Key findings.

- All components of the neural memory design contribute positively to performance, with weight decay, momentum, convolution and persistent memory contributing the most.

- Architecture design also has a significant impact on performance, with MAC and MAG performing close to each other in language modeling and commonsense reasoning tasks, while MAC performs better in long context tasks.

Four, Innovations and Strengths of the Paper

- A novel neural long-term memory module:

- It draws on key elements of human memory mechanisms, such as surprise, momentum, and forgetting, to achieve more efficient memory updating and storage.

- A deep neural network is used as a memory module to give the model more expressive capability.

- Designed the Titans architecture, which combines long-term and short-term memory.

- Three different integration approaches are proposed, providing flexible options for different application scenarios.

- The synergy of the three hyper-heads (Core, Long-term Memory, and Persistent Memory) lets the model process long sequences more efficiently.

- Excels in multiple tasks.

- Across language modeling, commonsense reasoning, time series forecasting, and DNA modeling, the Titans architecture shows strong performance, outperforming existing Transformer and linear recurrent models.

- Scalable.

- The ability to maintain high performance over a larger context window opens up the possibility of processing very long sequences.

Five, Future Outlook

While the Titans architecture has yielded impressive results on a number of fronts, there are still the following directions that deserve further exploration.

- Exploring more complex memory module architectures:

- For example, introducing hierarchical memory structures or combining memory modules with other models such as graph neural networks.

- Development of more efficient mechanisms for memory updating and storage.

- For example, using sparsification or quantization to reduce memory consumption and computational cost.

- Applying the Titans architecture to a wider range of applications.

- Examples include video understanding, robot control, recommendation systems, etc.

- Exploring More Effective Training Strategies.

- For example, introducing more advanced optimization algorithms or using meta-learning to accelerate model training.

- Investigating the Interpretability of the Titans Architecture.

- A deeper understanding of how Titans stores and utilizes long term memory information could provide new ideas for building more powerful AI systems.

Six, Summary

The central contribution of this paper is:

- A novel neural long-term memory module is proposed. Its design is inspired by the human memory system and incorporates key deep learning concepts such as gradient descent, momentum, and weight decay.

- Built the Titans architecture, which organically combines long-term and short-term memory, and explores three different integration methods, providing flexible options for different application scenarios.

- Extensive experiments verify Titans' superior performance on multiple tasks, especially long-context tasks, demonstrating strong scalability and higher accuracy.

© Copyright notes

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
