Mastering RAG Document Chunking: A Guide to Chunking Strategies for Building Efficient Retrieval Systems

If your RAG app is failing to deliver the results you want, it may be time to revisit your chunking strategy. Better chunking means more accurate retrieval, which ultimately leads to higher-quality responses.

However, chunking is not one-size-fits-all, and no single approach is optimal everywhere. Choose the strategy that best fits the needs of your project, the characteristics of your documents, and your budget.

Why does the quality of chunking directly affect the quality of RAG responses?

I assume that if you are reading this article you already understand the basic concepts of chunking and RAG. As a quick recap, the core idea of RAG is to have the LLM answer questions based on the contextual information it is given. This matters because, although an LLM has a rich knowledge base, its knowledge lags behind the present and it has no direct access to private data.

RAG compensates for these shortcomings by injecting relevant document fragments (the context) into the prompt and directing the LLM to generate answers based on those fragments. Context can be obtained in a variety of ways, such as database queries, Internet searches, or extraction from PDF documents.

There are two key challenges to building an efficient RAG application:

- Context Window Limits for LLM: Early LLMs, such as GPT-2 and GPT-3, had small context windows, limiting the amount of text that could be processed in a single pass. While there are now models that support larger context windows, this does not mean that we can just cram an entire document into an LLM.

- Context noise: Even if the LLM's context window is large enough, context that contains a lot of content irrelevant to the question (noise) can impair the LLM's understanding and judgment, degrading the quality of the response or even causing hallucinations.

Document chunking was developed to address these issues. The core idea is to split large documents into smaller, semantically coherent segments (chunks) and then, in the retrieval phase, select only the most relevant chunks as the context provided to the LLM.

There are various ways to chunk documents, from simple sentence and paragraph chunking to more complex semantic chunking and Agentic chunking. Choosing a suitable chunking strategy is crucial: it directly affects the retrieval efficiency of the RAG system and the quality of the final response.

In this article, we'll dive into several more advanced and practical document chunking strategies to help you build more robust RAG applications. We'll skip simple sentence and paragraph chunking and focus on techniques that are more valuable in real-world RAG applications.

Next, I'll detail a few chunking strategies that I've learned and practiced.

Recursive Character Segmentation: A Fast and Economical Basic Approach

Recursive character chunking, you might think, is the most basic method. Indeed, it is basic, but it is still one of the most commonly used and cost-effective chunking techniques in my opinion. It is easy to understand, simple to implement, fast and inexpensive, making it especially suitable for rapid prototyping and cost-sensitive projects.

The core idea of recursive character segmentation is to use a fixed-size sliding window and to allow overlap between windows. It generates blocks of text by sliding the window forward from the start of the document, using a preset block size and a preset number of overlapping characters.

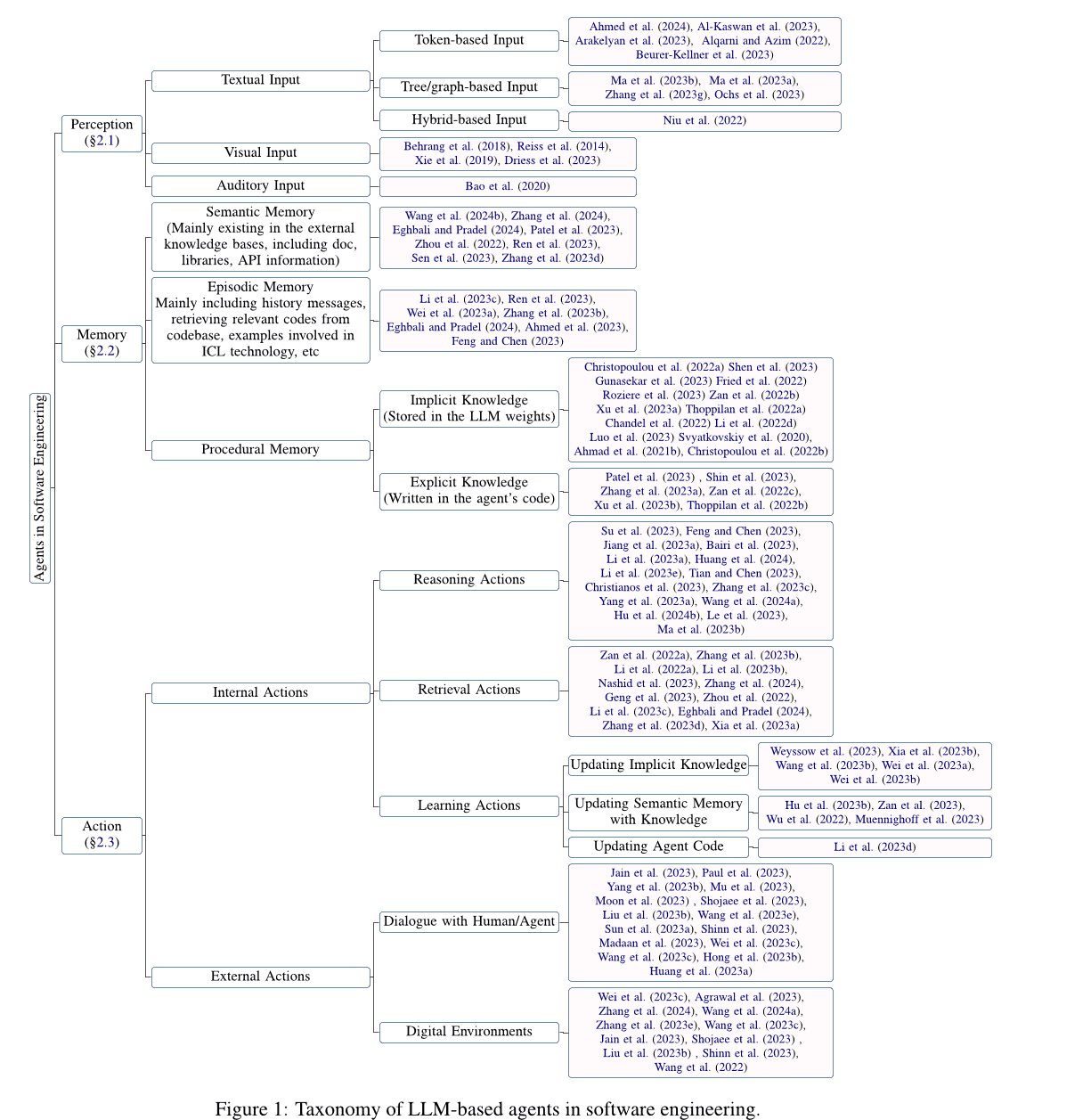

The following figure shows how recursive character segmentation works:

How recursive character segmentation works - drawn by Thuwarakesh

How recursive character segmentation works - drawn by ThuwarakeshThe advantage of recursive character segmentation is its simplicity and efficiency. It allows for fast processing of large documents and chunking of annual reports at the minute level. Implementing recursive character segmentation in Langchain is very simple:

    from langchain.text_splitter import RecursiveCharacterTextSplitter

    text = """
    Hydroponics is an intelligent way to grow veggies indoors or in small spaces. In hydroponics, plants are grown without soil, using only a substrate and nutrient solution. The global population is rising fast, and there needs to be more space to produce food for everyone. Besides, transporting food for long distances involves lots of issues. You can grow leafy greens, herbs, tomatoes, and cucumbers with hydroponics.
    """

    # from_tiktoken_encoder counts chunk_size and chunk_overlap in tokens.
    rc_splits = RecursiveCharacterTextSplitter.from_tiktoken_encoder(
        chunk_size=20, chunk_overlap=2
    ).split_text(text)
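
To make the sliding-window idea concrete without any dependency, here is a minimal sketch in plain Python. The function name `sliding_window_chunks` and the sample string are illustrative assumptions, not part of Langchain. Langchain's splitter additionally tries to break on separators such as paragraphs, lines, and spaces before falling back to raw characters, which this sketch does not attempt.

```python
def sliding_window_chunks(text: str, chunk_size: int, overlap: int) -> list[str]:
    """Split text into fixed-size character windows that overlap by `overlap` characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


sample = "abcdefghij"
windows = sliding_window_chunks(sample, chunk_size=4, overlap=1)
print(windows)  # ['abcd', 'defg', 'ghij']
```

Each window starts `chunk_size - overlap` characters after the previous one, so the last character of one chunk is repeated as the first character of the next.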

Sliding window variants

In practice, the size of the sliding window and the sliding step can be varied to suit different needs:

- Character-based vs. token-based sliding windows: The window can be measured in characters or in tokens. The example above uses from_tiktoken_encoder, so its chunk size and overlap are counted in tokens, which is closer to how an LLM measures its input. Langchain's RecursiveCharacterTextSplitter supports both character and token modes.

- Dynamic window size: While a fixed window size is characteristic of recursive character segmentation, the window can also be resized dynamically in certain scenarios, for example by adapting it to sentence length or paragraph structure so that each block stays semantically intact.
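
One possible reading of a dynamic window is to pack whole sentences into a chunk until a token budget is reached, so that no sentence is cut in half. The sketch below is an assumption-laden illustration: `pack_sentences` is a hypothetical helper, and whitespace-separated words stand in for real tokenizer tokens.

```python
import re


def pack_sentences(text: str, max_tokens: int) -> list[str]:
    """Greedily pack whole sentences into chunks of roughly max_tokens words.

    A single sentence longer than the budget becomes its own oversized chunk.
    """
    sentences = [s for s in re.split(r"(?<=[.?!])\s+", text.strip()) if s]
    chunks, current, current_len = [], [], 0
    for sentence in sentences:
        n_tokens = len(sentence.split())  # assumption: words approximate tokens
        # Close the current chunk when the next sentence would overflow the budget.
        if current and current_len + n_tokens > max_tokens:
            chunks.append(" ".join(current))
            current, current_len = [], 0
        current.append(sentence)
        current_len += n_tokens
    if current:
        chunks.append(" ".join(current))
    return chunks


demo = "Plants need light. They also need water and nutrients. Soil is optional."
packed = pack_sentences(demo, max_tokens=9)
print(packed)
```

With a budget of 9 words the first two sentences share a chunk and the third starts a new one; with a smaller budget each sentence ends up alone.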

Limitations

Recursive character segmentation is a position-based chunking approach. It simply assumes that pieces of text that sit next to each other in a document are also semantically related. However, this assumption does not hold in many cases.

Think: Why does position-based chunking lead to poor RAG performance? How can semantic chunking be implemented to get better results?

For example, in the same chapter, the author may first discuss several different concepts before finally relating them. If only recursive character segmentation is used, content that should belong to the same semantic unit may be split, or semantically unrelated content may be combined, affecting retrieval.

Despite its limitations, recursive character segmentation is ideal for getting started with RAG. It often provides satisfactory results during the prototyping phase, or for simply structured documents. Recursive character segmentation is also a worthwhile option if your project has high cost and speed requirements.

Semantic chunking: a chunking approach to understanding the meaning of text

Semantic chunking is a more advanced chunking strategy thatInstead of relying solely on the positional information of the text, a deeper understanding of the semantic meaning of the text is achieved. The core idea is to segment documents when their semantics change significantly, ensuring that each chunk is centered around a single topic as much as possible.

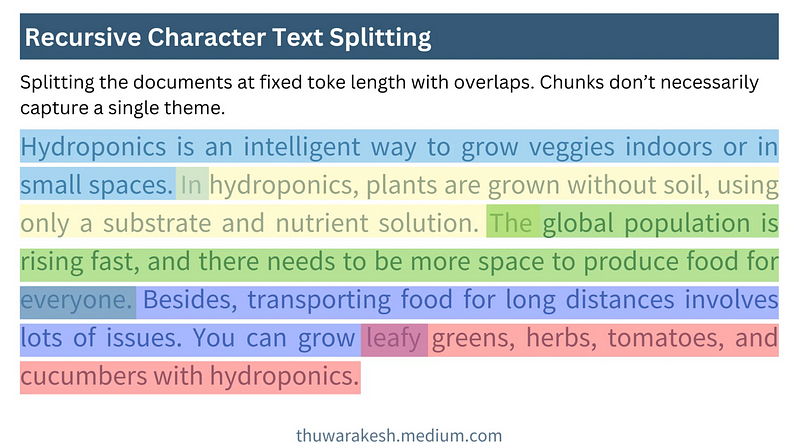

The following figure shows how semantic chunking works:

Semantic Chunking Works - drawn by Thuwarakesh

Semantic Chunking Works - drawn by ThuwarakeshUnlike recursive character segmentation, semantic chunking generates blocks of typically variable length. It will determine the boundaries of the block based on semantic integrity, rather than presetting a fixed number of characters or tokens.

Key steps in realizing semantic chunking

The difficulty with semantic chunking is how to make a program understand the semantics of sentences. This is usually done with the help of an embedding model. Embedding models such as OpenAI's text-embedding-3-large convert sentences into vector representations that capture their semantic information. Sentences that are semantically similar have vectors that lie closer together in the vector space.

A typical process for semantic chunking consists of the following five steps:

- Constructing the initial block: Initially splits the document into sentences or paragraphs and combines neighboring sentences or paragraphs into an initial block.

- Generate Block Embedding: Use the embedding model to generate vector embeddings for each initial block.

- Calculate the distance between blocks: Calculate the semantic distance between neighboring blocks. Commonly used distance metrics include cosine distance and so on. The larger the distance, the greater the semantic difference.

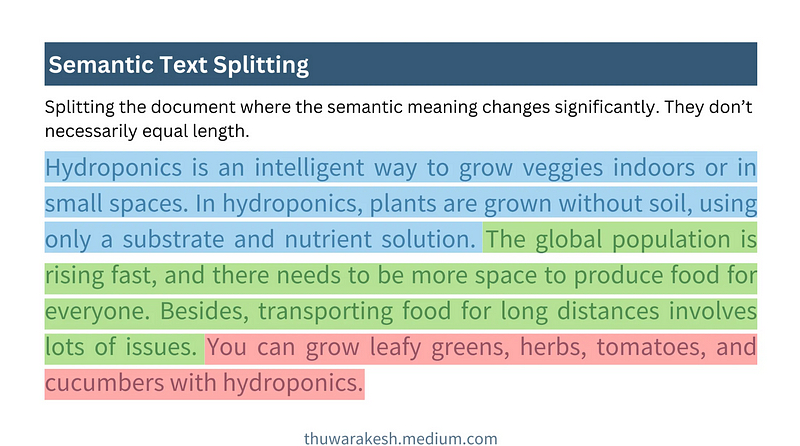

- Determine the split point: Set a distance threshold. When the distance between neighboring chunks exceeds the threshold, disconnect them and form new semantic chunks. The selection of the threshold needs to be adjusted according to the specific document and the experimental effect.

- Visualization (optional): Visualizing the distance between blocks helps to understand the chunking effect more intuitively and to adjust the threshold.

The following code shows how to implement semantic chunking:

    import re

    # Step 1: Create initial chunks by combining consecutive sentences.
    # ------------------------------------------------------------------
    # Split the text into individual sentences.
    sentences = re.split(r"(?<=[.?!])\s+", text)
    initial_chunks = [
        {"chunk": str(sentence), "index": i} for i, sentence in enumerate(sentences)
    ]


    # Combine each sentence with its neighbors so every embedding sees some context.
    def combine_chunks(chunks):
        for i in range(len(chunks)):
            combined_chunk = ""
            if i > 0:
                combined_chunk += chunks[i - 1]["chunk"] + " "
            combined_chunk += chunks[i]["chunk"]
            if i < len(chunks) - 1:
                combined_chunk += " " + chunks[i + 1]["chunk"]
            chunks[i]["combined_chunk"] = combined_chunk
        return chunks


    # Combine chunks
    combined_chunks = combine_chunks(initial_chunks)

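    # Step 2: Create embeddings for the initial chunks.
    # ------------------------------------------------------------------
    # Assumption: the listing never shows how `embeddings` is created. Any
    # Langchain embedding model works; text-embedding-3-large is one example.
    from langchain_openai import OpenAIEmbeddings

    embeddings = OpenAIEmbeddings(model="text-embedding-3-large")

    # Embed the combined chunks
    chunk_embeddings = embeddings.embed_documents(
        [chunk["combined_chunk"] for chunk in combined_chunks]
        # If you haven't created combined_chunk, use the following.
        # [chunk["chunk"] for chunk in combined_chunks]
    )

    # Add embeddings to chunks
    for i, chunk in enumerate(combined_chunks):
        chunk["embedding"] = chunk_embeddings[i]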

    # Step 3: Calculate the distance between neighboring chunks.
    # ------------------------------------------------------------------
    from sklearn.metrics.pairwise import cosine_similarity


    def calculate_cosine_distances(chunks):
        distances = []
        for i in range(len(chunks) - 1):
            current_embedding = chunks[i]["embedding"]
            next_embedding = chunks[i + 1]["embedding"]

            similarity = cosine_similarity([current_embedding], [next_embedding])[0][0]
            distance = 1 - similarity

            distances.append(distance)
            chunks[i]["distance_to_next"] = distance
        return distances


    # Calculate cosine distances
    distances = calculate_cosine_distances(combined_chunks)

    # Step 4: Find chunks that differ significantly from their predecessors.
    # ----------------------------------------------------------------------
    import numpy as np

    threshold_percentile = 90
    # Use the distances computed in Step 3.
    threshold_value = np.percentile(distances, threshold_percentile)

    crossing_points = [
        i for i, distance in enumerate(distances) if distance > threshold_value
    ]

    len(crossing_points)

    # Step 5 (Optional): Plot the chunk distances to get a better view.
    # -------------------------------------------------------------------------
    import seaborn as sns
    import matplotlib.pyplot as plt
    import numpy as np


    def visualize_cosine_distances_with_thresholds_multicolored(
        cosine_distances, threshold_percentile=90
    ):
        # Calculate the threshold value based on the percentile
        threshold_value = np.percentile(cosine_distances, threshold_percentile)

        # Identify the points where the cosine distance crosses the threshold
        crossing_points = [0]  # Start with the first segment beginning at index 0
        crossing_points += [
            i
            for i, distance in enumerate(cosine_distances)
            if distance > threshold_value
        ]
        crossing_points.append(
            len(cosine_distances)
        )  # Ensure the last segment goes to the end

        # Set up the plot
        plt.figure(figsize=(14, 6))
        sns.set(style="white")  # Change to white to turn off gridlines

        # Plot the cosine distances
        sns.lineplot(
            x=range(len(cosine_distances)),
            y=cosine_distances,
            color="blue",
            label="Cosine Distance",
        )

        # Plot the threshold line
        plt.axhline(
            y=threshold_value,
            color="red",
            linestyle="--",
            label=f"{threshold_percentile}th Percentile Threshold",
        )

        # Highlight segments between threshold crossings with different colors
        colors = sns.color_palette(
            "hsv", len(crossing_points) - 1
        )  # Use a color palette for segments
        for i in range(len(crossing_points) - 1):
            plt.axvspan(
                crossing_points[i], crossing_points[i + 1], color=colors[i], alpha=0.3
            )

        # Add labels and title
        plt.title(
            "Cosine Distances Between Segments with Multicolored Threshold Highlighting"
        )
        plt.xlabel("Segment Index")
        plt.ylabel("Cosine Distance")
        plt.legend()

        # Adjust the x-axis limits to remove extra space
        plt.xlim(0, len(cosine_distances) - 1)

        # Display the plot
        plt.show()

        return crossing_points


    # Example usage with the distances from Step 3 and the percentile from Step 4.
    # The returned list also contains 0 and the final index, so it is kept
    # separate from the crossing_points computed in Step 4.
    segment_boundaries = visualize_cosine_distances_with_thresholds_multicolored(
        distances, threshold_percentile=threshold_percentile
    )
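
The listing above stops once the split points are known. A minimal sketch of the remaining step, turning split points into final chunks, could look like the following. `group_sentences` and the toy data are illustrative assumptions. It relies on the convention used in Step 3, where index `i` in the distance list is the distance between sentence `i` and sentence `i + 1`, so a split point at `i` means "start a new chunk after sentence `i`".

```python
def group_sentences(sentences: list[str], breakpoints: list[int]) -> list[str]:
    """Join sentences into chunks, starting a new chunk after each breakpoint index."""
    chunks, start = [], 0
    for bp in sorted(breakpoints):
        chunks.append(" ".join(sentences[start:bp + 1]))
        start = bp + 1
    if start < len(sentences):
        chunks.append(" ".join(sentences[start:]))  # whatever follows the last split
    return chunks


toy_sentences = ["Cats purr.", "Cats nap a lot.", "Stocks fell today.", "Bonds rallied."]
toy_breakpoints = [1]  # a large distance between sentence 1 and sentence 2
semantic_chunks = group_sentences(toy_sentences, toy_breakpoints)
print(semantic_chunks)
```

In the real pipeline, `toy_sentences` would be the sentences from Step 1 and `toy_breakpoints` the indices found in Step 4.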

The Seaborn chart of the distances:

Seaborn chart used to illustrate semantic chunking - drawn by Thuwarakesh

Advantages of Semantic Chunking and Applicable Scenarios

The advantage of semantic chunking is that it better captures the semantic structure of documents and groups semantically related text fragments together, which improves the retrieval quality of the RAG system. It is best suited to the following types of documents:

- Documents with complex structures and diverse topics: e.g., lengthy reports, technical documents, books, etc. that contain multiple sub-topics.

- Documents with high semantic jumps: Authors may jump around in their thoughts when writing, and semantic chunking better accommodates this style of writing.

Compared to recursive character segmentation, semantic chunking is computationally more expensive and slower, mainly because it has to compute embedding vectors and distance metrics. In resource-limited scenarios this trade-off needs to be weighed.

Agentic chunking: chunking strategies that mimic human understanding

Agentic chunking is a further step towards intelligent chunking. Drawing on the way humans read and understand documents, it uses an LLM as an "intelligent agent" to assist in chunking.

Human reading habits are not completely linear. When we read, we jump from topic to topic or concept to concept and build the logical structure of the document in our minds. Agentic chunking attempts to mimic this human comprehension process.

Unlike the previous two methods, Agentic chunking does not assume that semantically similar content occurs consecutively in a document. It can gather scattered but semantically related pieces of a document into semantic chunks that are closer to how a human would group them.

Agentic chunking workflow

The core idea of Agentic chunking is to let the LLM "read" the document like a human, recognize its core concepts and topics, and chunk based on those concepts and topics. A typical Agentic chunking process includes:

- Propositioning: Convert each sentence in the document into a more self-contained proposition, for example by replacing ambiguous pronouns with the nouns they refer to, so that each sentence carries its full meaning on its own.

- Building chunk containers: Create one or more chunk containers for semantically related propositions. Each chunk container can have a title and a summary that describe its topic.

- Agent-driven proposition assignment: Using the LLM as an agent, "read" the propositions one by one and decide which chunk container each proposition belongs to.

  - If the agent considers the proposition relevant to the topic of an existing chunk container, the proposition is added to that container.

  - If the agent thinks the proposition introduces a new topic, it creates a new chunk container to hold the proposition.

- Chunk container post-processing: Post-process the chunk containers, e.g., generate more refined summaries and titles based on the propositions within each container.

The following code shows the implementation of Agentic chunking:

    from typing import List

    from langchain import hub
    from langchain_openai import ChatOpenAI
    from langchain_core.prompts import ChatPromptTemplate
    from langchain_core.pydantic_v1 import BaseModel, Field

    # Step 1: Convert paragraphs to propositions.
    # --------------------------------------------
    # Load the propositioning prompt from langchain hub
    obj = hub.pull("wfh/proposal-indexing")

    # Pick the LLM
    llm = ChatOpenAI(model="gpt-4o")


    # A Pydantic model to extract sentences from the passage
    class Sentences(BaseModel):
        sentences: List[str]


    extraction_llm = llm.with_structured_output(Sentences)

    # Create the sentence extraction chain
    extraction_chain = obj | extraction_llm

    # NOTE: text is your actual document
    paragraphs = text.split("\n\n")

    propositions = []
    for p in paragraphs:
        # The chain returns a Sentences object; collect its sentences.
        extracted = extraction_chain.invoke(p)
        propositions.extend(extracted.sentences)

    # Step 2: Create a placeholder to store chunks
    chunks = {}


    # Step 3: Define helper classes and functions for agentic chunking.
    class ChunkMeta(BaseModel):
        title: str = Field(description="The title of the chunk.")
        summary: str = Field(description="The summary of the chunk.")


    def create_new_chunk(chunk_id, proposition):
        summary_llm = llm.with_structured_output(ChunkMeta)

        summary_prompt_template = ChatPromptTemplate.from_messages(
            [
                (
                    "system",
                    "Generate a new summary and a title based on the propositions.",
                ),
                (
                    "user",
                    "propositions:{propositions}",
                ),
            ]
        )

        summary_chain = summary_prompt_template | summary_llm

        chunk_meta = summary_chain.invoke(
            {
                "propositions": [proposition],
            }
        )

        chunks[chunk_id] = {
            "summary": chunk_meta.summary,
            "title": chunk_meta.title,
            "propositions": [proposition],
        }

    def add_proposition(chunk_id, proposition):
        summary_llm = llm.with_structured_output(ChunkMeta)

        summary_prompt_template = ChatPromptTemplate.from_messages(
            [
                (
                    "system",
                    "If the current_summary and title are still valid for the propositions, return them. "
                    "If not, generate a new summary and a title based on the propositions.",
                ),
                (
                    "user",
                    "current_summary:{current_summary}\n\ncurrent_title:{current_title}\n\npropositions:{propositions}",
                ),
            ]
        )

        summary_chain = summary_prompt_template | summary_llm

        chunk = chunks[chunk_id]

        current_summary = chunk["summary"]
        current_title = chunk["title"]
        current_propositions = chunk["propositions"]

        all_propositions = current_propositions + [proposition]

        chunk_meta = summary_chain.invoke(
            {
                "current_summary": current_summary,
                "current_title": current_title,
                "propositions": all_propositions,
            }
        )

        # Store the refreshed summary and title together with the new proposition.
        chunk["summary"] = chunk_meta.summary
        chunk["title"] = chunk_meta.title
        chunk["propositions"] = all_propositions

    # Step 4: The main function that creates chunks from propositions.
    def find_chunk_and_push_proposition(proposition):

        class ChunkID(BaseModel):
            chunk_id: int = Field(description="The chunk id.")

        allocation_llm = llm.with_structured_output(ChunkID)

        allocation_prompt = ChatPromptTemplate.from_messages(
            [
                (
                    "system",
                    "You have the chunk ids and the summaries. "
                    "Find the chunk that best matches the proposition. "
                    "If no chunk matches, return a new chunk id. "
                    "Return only the chunk id.",
                ),
                (
                    "user",
                    "proposition:{proposition}\n\nchunks_summaries:{chunks_summaries}",
                ),
            ]
        )

        allocation_chain = allocation_prompt | allocation_llm

        chunks_summaries = {
            chunk_id: chunk["summary"] for chunk_id, chunk in chunks.items()
        }

        best_chunk_id = allocation_chain.invoke(
            {"proposition": proposition, "chunks_summaries": chunks_summaries}
        ).chunk_id

        # An unknown id means the agent asked for a new chunk.
        if best_chunk_id not in chunks:
            create_new_chunk(best_chunk_id, proposition)
            return

        add_proposition(best_chunk_id, proposition)


    # Route every proposition from Step 1 to a chunk.
    for proposition in propositions:
        find_chunk_and_push_proposition(proposition)
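
The routing logic in the listing above can be exercised without any LLM calls by swapping the agent for a deterministic stand-in. The sketch below is purely illustrative: `route_propositions` and `keyword_overlap_agent` are hypothetical names, and the keyword-overlap rule is a crude assumption that replaces the LLM's judgment so the control flow can be tested offline.

```python
from typing import Callable, Optional


def route_propositions(
    propositions: list[str],
    assign: Callable[[str, dict[int, list[str]]], Optional[int]],
) -> dict[int, list[str]]:
    """Place each proposition in the container chosen by `assign`, or open a new one."""
    containers: dict[int, list[str]] = {}
    for proposition in propositions:
        chunk_id = assign(proposition, containers)
        if chunk_id is None or chunk_id not in containers:
            chunk_id = len(containers)  # open a new container
            containers[chunk_id] = []
        containers[chunk_id].append(proposition)
    return containers


def keyword_overlap_agent(proposition: str, containers: dict[int, list[str]]) -> Optional[int]:
    """Hypothetical stand-in for the LLM: pick the container sharing the most long words."""
    words = {w.strip(".,").lower() for w in proposition.split() if len(w) > 4}
    best_id, best_score = None, 0
    for chunk_id, members in containers.items():
        seen = {w.strip(".,").lower() for m in members for w in m.split()}
        score = len(words & seen)
        if score > best_score:
            best_id, best_score = chunk_id, score
    return best_id  # None means "no container matches"


toy_propositions = [
    "Hydroponics grows plants without soil.",
    "Interest rates rose sharply in March.",
    "Hydroponics uses a nutrient solution.",
    "Central banks set interest rates.",
]
routed = route_propositions(toy_propositions, keyword_overlap_agent)
print(routed)
```

Note that the first and third propositions end up in the same container even though they are not adjacent, which is the behavior that distinguishes this approach from position-based chunking.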

Examples of propositionalization

Original text

===================

A crow sits near the pond. It's a white one.

Propositionalized text

==================

A crow sits near the pond. This crow is a white one.

Benefits and Challenges of Agentic Chunking

The biggest advantage of Agentic chunking is its flexibility and intelligence. It can better understand the deep semantic structure of documents and generate semantic chunks that are more in line with human cognition. It is particularly good at handling the following types of documents:

- Documents with a nonlinear structure: For example, documents that jump around in thought and contain a lot of background knowledge or implied information.

- Documents that need information integrated across paragraphs and sections: Agentic chunking can gather semantically related fragments that are scattered across different locations in a document.

However, Agentic chunking also faces some challenges:

- High cost: Agentic chunking requires frequent calls to the LLM, which is expensive in both compute and time.

- Dependence on prompt engineering: The effectiveness of Agentic chunking depends heavily on the design of the prompt. The prompt has to be carefully designed to guide the LLM to chunk effectively.

- Uncertain results: LLM output is not fully deterministic, which can make the chunking results unstable.

Agentic chunking application scenarios

Agentic chunking, while more costly, is still worth considering in scenarios where RAG effectiveness is critical. For example:

- Knowledge bases in specialized areas: Knowledge bases in fields such as law, medicine, and finance require extremely high retrieval precision and recall.

- Complex question-answering systems: Systems that have to handle complex questions requiring reasoning and the integration of information from several places.

Further reading: Agentic Chunking: AI Agent-Driven Semantic Text Chunking

Chunking strategies for different document formats

The previous discussion mainly focused on chunking plain text. However, in practical applications, we often encounter a variety of different document formats, such as Markdown, HTML, PDF, code, and so on. For different formats, a more refined chunking strategy is needed to fully utilize the structural information of the document.

Markdown and HTML document chunking

Markdown and HTML documents carry structural markup, such as headings, paragraphs, lists, and code blocks. We can use this markup as the basis for chunking to get more accurate chunks.

- Chunk by heading: Treat each heading and the content under it as a separate block. This suits clearly structured documents with distinct sections.

- Chunk by paragraph: Treat each paragraph as a chunk. Paragraphs are usually semantically complete units and work well as basic chunking units.

- Hybrid chunking: Combine headings and paragraphs. For example, first split the document by first-level headings, and then split the content under each first-level heading by paragraphs.
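
For Markdown, chunking by heading can be sketched with a regular expression and no third-party library. The helper name `split_markdown_by_heading` and its `max_level` parameter are assumptions made for this illustration. One known limitation: a line starting with `#` inside a fenced code block would be mistaken for a heading.

```python
import re


def split_markdown_by_heading(markdown: str, max_level: int = 2) -> list[dict]:
    """Split Markdown into sections that start at headings of level <= max_level."""
    heading = re.compile(r"^(#{1,6})\s+(.*)$")
    sections, current = [], {"title": None, "lines": []}

    def has_content(section):
        return section["title"] is not None or any(l.strip() for l in section["lines"])

    for line in markdown.splitlines():
        match = heading.match(line)
        if match and len(match.group(1)) <= max_level:
            if has_content(current):
                sections.append(current)
            current = {"title": match.group(2).strip(), "lines": []}
        else:
            # Deeper headings and ordinary text stay inside the current section.
            current["lines"].append(line)
    if has_content(current):
        sections.append(current)
    return [{"title": s["title"], "text": "\n".join(s["lines"]).strip()} for s in sections]


doc = "# Intro\nWelcome.\n\n## Setup\nInstall it.\n\n### Details\nMore.\n\n## Usage\nRun it."
sections = split_markdown_by_heading(doc)
print([s["title"] for s in sections])  # ['Intro', 'Setup', 'Usage']
```

Each section could then be split further by paragraph (`text.split("\n\n")`) to obtain the hybrid strategy described above.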

Example: HTML tag-based chunking (Python)

    from bs4 import BeautifulSoup

    html_text = """
    <h1>Section 1</h1>
    <p>This is the first paragraph of section 1.</p>
    <p>This is the second paragraph of section 1.</p>
    <h2>Subsection 1.1</h2>
    <ul>
    <li>List item 1</li>
    <li>List item 2</li>
    </ul>
    """

    soup = BeautifulSoup(html_text, 'html.parser')
    chunks = []

    # Chunk by h1 heading
    for h1_tag in soup.find_all('h1'):
        chunk_text = h1_tag.text + "\n"
        next_sibling = h1_tag.find_next_sibling()
        # Stop at the next h1 or h2 (assumes the document is sectioned by h1 and h2)
        while next_sibling and next_sibling.name not in ['h1', 'h2']:
            # Keep the HTML tags; use next_sibling.text to keep only the text
            chunk_text += str(next_sibling) + "\n"
            next_sibling = next_sibling.find_next_sibling()
        chunks.append(chunk_text)

    # h2, p, ul, ol and other tags can be handled in a similar way

    print(chunks)

PDF document chunking

Chunking PDF documents is relatively complex because PDF is essentially a typeset format where textual content is mixed with typeset information. Splitting PDFs directly by character or line may destroy semantic integrity.

The key steps in PDF chunking usually include:

- PDF text extraction: Use a PDF parsing library (e.g. PyPDF2, pdfminer, or the more specialized unstructured.io) to extract the text content from PDF files.

- Text cleaning and pre-processing: Removing noisy characters, handling line breaks, fixing OCR errors, and more.

- Structured Information Extraction: Try to extract structured information from PDFs, such as titles, headers and footers, tables, lists, etc. Some advanced PDF parsing libraries (such as unstructured.io) can assist in structured information extraction.

- Chunking Strategy Options: Select appropriate chunking strategies (e.g., semantic chunking, recursive character segmentation, etc.) based on the extracted text content and structural information.
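
The cleaning step is the easiest one to illustrate in isolation. The sketch below assumes the text has already been extracted by one of the libraries above; `clean_pdf_text` is a hypothetical helper and the rules are a reasonable starting set rather than a complete solution. In particular, the de-hyphenation rule will also merge genuine compounds that happen to break at a hyphen.

```python
import re


def clean_pdf_text(raw: str) -> str:
    """Undo common layout artifacts in text extracted from a PDF."""
    text = raw.replace("\r\n", "\n")
    # Rejoin words hyphenated across a line break: "meth-\nod" -> "method".
    text = re.sub(r"(\w)-\n(\w)", r"\1\2", text)
    # Treat a single line break inside a paragraph as a space; keep blank lines.
    text = re.sub(r"(?<!\n)\n(?!\n)", " ", text)
    # Collapse runs of spaces and tabs, then runs of three or more newlines.
    text = re.sub(r"[ \t]+", " ", text)
    text = re.sub(r"\n{3,}", "\n\n", text)
    return text.strip()


raw_page = "Hydroponics is a meth-\nod of growing plants\nwithout soil.\n\n\nIt saves   water."
cleaned = clean_pdf_text(raw_page)
print(cleaned)
```

After cleaning, paragraphs are separated by exactly one blank line, which makes paragraph-based or semantic chunking much more reliable.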

Note: unstructured.io is a powerful tool that handles a wide range of document formats (including PDF) and attempts to extract structured information from documents, which simplifies PDF chunking.

Code Documentation Chunking

Chunking of code documents (e.g. Python, Java, C++ code files) requires consideration of the syntactic structure and logical units of the code. Simply splitting the code by lines or characters is likely to destroy the integrity and executability of the code.

Common strategies for chunking code documentation include:

- Chunking by function/class: Treat each function or class as a separate block. Functions and classes are usually logical units of code.

- Chunking by Code Block: Identifies blocks of logic (e.g., loops, conditional statements, try-except blocks, etc.) in the code, treating each block as a chunk.

- Chunking with code comments: Code comments usually explain the functionality and logic of the code. A comment and the code block it describes can be chunked together as a whole.

Tooling: Structured analysis and chunking of code can be aided by syntax parsing tools such as tree-sitter, which parses code in a variety of programming languages and generates Abstract Syntax Trees (ASTs) that make it easy to chunk code along its syntactic structure.
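
For Python sources specifically, chunking by function or class can be sketched with the standard library's `ast` module, used here as a stand-in for tree-sitter so the example has no dependencies. `chunk_python_source` is a hypothetical helper; it only looks at top-level definitions and does not attach comments that precede a definition.

```python
import ast


def chunk_python_source(source: str) -> list[dict]:
    """Return one chunk per top-level function or class, including decorators."""
    tree = ast.parse(source)
    lines = source.splitlines()
    chunks = []
    for node in tree.body:
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)):
            # Decorators sit above node.lineno, so start from the first decorator if any.
            first_line = min([node.lineno] + [d.lineno for d in node.decorator_list])
            chunks.append(
                {
                    "name": node.name,
                    "kind": type(node).__name__,
                    "code": "\n".join(lines[first_line - 1:node.end_lineno]),
                }
            )
    return chunks


example_source = '''
import math

def area(r):
    """Area of a circle."""
    return math.pi * r ** 2

class Shape:
    def name(self):
        return "shape"
'''
code_chunks = chunk_python_source(example_source)
print([(c["kind"], c["name"]) for c in code_chunks])
```

Each chunk keeps the full source of one definition, so a retrieved chunk is always a syntactically complete unit.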

Choosing the right chunk size and overlap

Chunk size and chunk overlap are two important parameters of a chunking strategy, and they directly affect the performance of the RAG system.

- Chunk size: The amount of text contained in each chunk. Chunks that are too small may carry incomplete semantic information; chunks that are too large may introduce noise and reduce retrieval accuracy.

- Overlap size: The amount of text shared between neighboring chunks. The purpose of overlap is to preserve contextual continuity and avoid losing information at chunk boundaries.

How do I choose the right chunk size and overlap?

There is no single best answer for chunk size and overlap; they usually have to be determined from the document characteristics and from experimental results. Here are some rules of thumb and suggestions:

- Heuristic methods:

  - Based on sentence/paragraph length: Analyze the average sentence or paragraph length of the document and use it as a reference for the chunk size. For example, if the average paragraph length is 150 tokens, you can try a chunk size of 150-200 tokens.

  - Consider the LLM context window: The chunk size should not be so large that the retrieved chunks exceed the context window of the LLM, and not so small that a chunk lacks enough semantic information.

- Experimentation and evaluation:

  - Iterative tuning: Start with an initial chunk size and overlap (e.g., 500 tokens for chunk size and 50 tokens for overlap), build the RAG system, and evaluate it. Then adjust the parameters step by step, observe how retrieval and answer quality change, and keep the best combination.

  - Evaluation metrics: Quantify the performance of the RAG system with suitable metrics, such as Recall@k, Precision@k, and NDCG (Normalized Discounted Cumulative Gain) for retrieval. These metrics help you compare chunking strategies objectively.
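
The paragraph-length heuristic can be turned into a few lines of code. This is only a sketch under stated assumptions: `suggest_chunk_size` is a hypothetical helper, whitespace-separated words stand in for tokens, and the default `headroom` of 4/3 simply mirrors the "150 to 200" example above.

```python
def suggest_chunk_size(text: str, headroom: float = 4 / 3) -> tuple[int, int]:
    """Return (mean paragraph length, upper bound) in whitespace-separated words."""
    paragraphs = [p for p in text.split("\n\n") if p.strip()]
    if not paragraphs:
        raise ValueError("text contains no paragraphs")
    mean_len = round(sum(len(p.split()) for p in paragraphs) / len(paragraphs))
    return mean_len, round(mean_len * headroom)


# Two synthetic paragraphs of 100 and 200 words.
demo_text = " ".join(["word"] * 100) + "\n\n" + " ".join(["word"] * 200)
suggested = suggest_chunk_size(demo_text)
print(suggested)  # (150, 200)
```

The returned range is only a starting point for the iterative tuning described above, not a substitute for it.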

Assessment tools in Langchain

Langchain provides a number of evaluation tools that can assist in the evaluation and parameter tuning of RAG systems. For example, tools such as DatasetEvaluator and RetrievalQAChain can help you automate the evaluation of the effects of combinations of different chunking strategies, retrieval models, and LLM models.

Evaluating the effectiveness of the chunking strategy

After choosing the right chunking strategy, how do you evaluate its effectiveness? "Better chunking means better retrieval", but how to quantify "better"? We need some metrics to evaluate the merits of a chunking strategy.

The following are some common evaluation metrics that can help you assess the impact of a chunking strategy on the performance of a RAG system:

- Retrieval metrics:

  - Recall (Recall@k): the proportion of all relevant documents (or chunks) that appear among the top-k retrieved results. The higher the recall, the more of the relevant information the retriever finds under that chunking strategy.

  - Precision (Precision@k): the proportion of the top-k retrieved results that are truly relevant. The higher the precision, the higher the quality of the search results.

  - NDCG (Normalized Discounted Cumulative Gain): a more fine-grained ranking quality metric that takes into account both the relevance level and the position of each retrieved result. A higher NDCG indicates a better retrieval ranking.

- Q&A metrics:

  - Answer Relevance: Evaluate how relevant the answers generated by the LLM are to the question. The higher the answer relevance, the better the RAG system is at generating meaningful answers based on the information retrieved.

  - Answer Accuracy/Faithfulness: Evaluate whether the answers generated by the LLM are faithful to the retrieved context, avoiding hallucinations and inaccurate information. Higher faithfulness indicates that the chunking strategy provides more reliable context and guides the LLM towards more credible answers.

  - Answer Fluency and Coherence: Although mainly determined by the LLM's own abilities, a good chunking strategy can also indirectly improve the fluency and coherence of answers. For example, semantic chunking provides a more coherent context and helps the LLM produce more natural language.
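
The three retrieval metrics are simple enough to compute by hand. The following is a minimal sketch using the standard definitions; the function names and the toy chunk ids are illustrative assumptions. `gains` maps each relevant chunk id to a graded relevance score, and anything missing from it counts as 0.

```python
import math


def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Share of all relevant items that appear in the top-k results."""
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / len(relevant)


def precision_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Share of the top-k results that are relevant."""
    if k <= 0:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / k


def ndcg_at_k(retrieved: list[str], gains: dict[str, float], k: int) -> float:
    """DCG of the top-k results divided by the DCG of an ideal ordering."""
    def dcg(values):
        # Rank 0 is discounted by log2(2) = 1, rank 1 by log2(3), and so on.
        return sum(v / math.log2(rank + 2) for rank, v in enumerate(values))

    actual = dcg(gains.get(doc, 0.0) for doc in retrieved[:k])
    ideal = dcg(sorted(gains.values(), reverse=True)[:k])
    return actual / ideal if ideal > 0 else 0.0


retrieved_ids = ["c3", "c7", "c1", "c9"]
relevant_ids = {"c1", "c3", "c5"}
graded = {"c1": 3.0, "c3": 1.0, "c5": 2.0}

print(recall_at_k(retrieved_ids, relevant_ids, k=3))
print(precision_at_k(retrieved_ids, relevant_ids, k=4))
print(ndcg_at_k(retrieved_ids, graded, k=3))
```

In this toy example two of the three relevant chunks are retrieved, so recall at 3 is 2/3, while precision at 4 is 2/4 because half of the four returned chunks are noise.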

Assessment tools and methodologies

- manual assessment: The most direct and reliable method. A human evaluator is invited to rate the search results and Q&A answers of the RAG system based on preset evaluation criteria. The disadvantages of manual evaluation are that it is costly, time-consuming, and highly subjective.

- Automated assessment: Use automated metrics and tools, for example:

- Retrieval metrics: Recall, precision, NDCG, etc., as mentioned above, can be calculated automatically using standard information retrieval evaluation tools (e.g. rank_bm25, sentence-transformers, etc.).

- Q&A metrics: Some NLP evaluation metrics (e.g. BLEU, ROUGE, METEOR, BERTScore, etc.) can assist in assessing answer quality. Note, however, that automated Q&A metrics still have limitations and cannot completely replace manual assessment.

- LangChain evaluation tools: LangChain offers a number of integrated evaluation tools, such as DatasetEvaluator and RetrievalQAChain, which simplify the automated evaluation process of the RAG system.
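
To illustrate what an overlap-based Q&A metric measures, here is a minimal sketch of a unigram-overlap F1 score in the spirit of ROUGE-1. It is not the official ROUGE implementation: the function name `unigram_f1`, the whitespace tokenization, and the example sentences are all assumptions made for illustration.

```python
from collections import Counter


def unigram_f1(candidate: str, reference: str) -> float:
    """Unigram-overlap F1 between a generated answer and a reference answer."""
    cand_tokens = candidate.lower().split()
    ref_tokens = reference.lower().split()
    if not cand_tokens or not ref_tokens:
        return 0.0
    # Multiset intersection counts each shared token at most as often
    # as it appears in both texts
    overlap = sum((Counter(cand_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(cand_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


# Hypothetical generated answer and reference answer
generated = "chunking splits documents into smaller pieces"
reference = "chunking splits long documents into pieces"
print(round(unigram_f1(generated, reference), 4))
```

A score like this only measures surface word overlap, so a correct answer phrased differently from the reference scores low. This is one reason automated Q&A metrics cannot fully replace manual assessment.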

Recommendations for the assessment process

- Constructing the evaluation dataset: Prepare an evaluation dataset containing questions and their corresponding reference answers. The dataset should cover, as far as possible, the typical application scenarios and question types of the RAG system.

- Selecting evaluation metrics: Choose appropriate retrieval and Q&A metrics based on the purpose of the evaluation. Manual and automated assessment can be combined.

- Running the RAG system: Run the RAG system on the evaluation dataset using different combinations of chunking strategies, retrieval models, and LLM models to record evaluation results.

- Analysis and comparison: Compare the evaluation metrics of different strategies, analyze their strengths and weaknesses, and select the optimal combination of strategies.

- Iterative optimization: Based on the evaluation results, the parameters of the chunking strategy, retrieval model and LLM model are continuously adjusted for iterative optimization to improve the performance of the RAG system.
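
The process above can be sketched as a small comparison loop. This is a toy illustration under stated assumptions, not a production harness: the document, the evaluation set, the word-based chunker `chunk_words`, and the word-overlap retriever are all hypothetical stand-ins for a real splitter, embedding model, and vector store.

```python
from typing import Dict, List, Tuple


def chunk_words(text: str, chunk_size: int, overlap: int) -> List[str]:
    """Split text into chunks of `chunk_size` words that share `overlap` words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), step)]


def retrieve(query: str, chunks: List[str], k: int) -> List[str]:
    """Toy retriever: rank chunks by the number of query words they contain."""
    query_words = set(query.lower().split())
    ranked = sorted(
        chunks,
        key=lambda c: len(query_words & set(c.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def hit_rate(chunks: List[str], eval_set: List[Dict[str, str]], k: int) -> float:
    """Share of questions whose reference answer appears in a top-k chunk."""
    hits = sum(
        any(item["answer"].lower() in c.lower()
            for c in retrieve(item["question"], chunks, k))
        for item in eval_set
    )
    return hits / len(eval_set)


# Hypothetical document and evaluation set
document = (
    "Recursive character splitting is fast and cheap. "
    "Semantic chunking groups sentences by embedding similarity. "
    "Agentic chunking asks an LLM to decide chunk boundaries. "
    "Overlap between chunks preserves context across boundaries."
)
eval_set = [
    {"question": "Which chunking groups sentences by similarity?",
     "answer": "semantic chunking"},
    {"question": "What does overlap between chunks preserve?",
     "answer": "preserves context"},
]

# Run every chunking configuration on the same evaluation set and record results
configs = [{"chunk_size": 8, "overlap": 0}, {"chunk_size": 12, "overlap": 4}]
results: Dict[Tuple[int, int], float] = {}
for cfg in configs:
    chunks = chunk_words(document, **cfg)
    results[(cfg["chunk_size"], cfg["overlap"])] = hit_rate(chunks, eval_set, k=2)

for (size, overlap), score in results.items():
    print(f"chunk_size={size}, overlap={overlap}: hit rate {score:.2f}")
best = max(results, key=results.get)
print("Best configuration (chunk_size, overlap):", best)
```

In a real project the same loop structure applies, with the toy pieces swapped for the actual chunking strategy, retrieval model, and metrics, and with the evaluation set held fixed across runs so that results stay comparable.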

To summarize: choose the chunking strategy that works best for you

This article has discussed the document chunking techniques that are crucial for RAG applications: basic recursive character segmentation, smarter semantic chunking and Agentic chunking, refined chunking strategies for different document formats, and methods for selecting and evaluating chunk size and overlap parameters.

Review of core points

- Chunk quality determines RAG quality: A good chunking strategy is the cornerstone of building a high-performance RAG system.

- There is no one-size-fits-all chunking strategy: Different chunking strategies have their own advantages and disadvantages and are suitable for different scenarios. You need to choose the most appropriate strategy based on the specific requirements of your project, document characteristics and resource constraints.

- Recursive character segmentation: Simple, fast and economical for prototyping and cost-sensitive projects.

- Semantic chunking: Understands text semantics and generates semantically coherent chunks, which improves retrieval quality but is computationally expensive.

- Agentic chunking: Simulates human understanding; it is smarter and more flexible and can handle complex documents, but it is costly and requires complex prompt engineering.

- Chunking for different formats: For different formats such as Markdown, HTML, PDF, code, etc., a fine-grained chunking strategy is required to utilize the structural information of the document.

- Chunk size and overlap: These need to be tuned according to document characteristics and experimental results; there is no absolute optimal value.

- Assessment is key: Quantify the effectiveness of the chunking strategy through evaluation metrics and manual evaluation, and optimize iteratively.

How to choose? My advice.

- Rapid prototyping: Try recursive character segmentation first. It is a fast way to build a RAG prototype and verify the feasibility of the system.

- The quest for higher quality: If you have high demands on RAG quality and computational resources allow, you can try semantic chunking.

- Handling complex documents: For documents with complex structures and semantic jumps, Agentic chunking may be the preferred option, but its cost and effectiveness need to be carefully weighed.

- Format-specific: If you are working with documents in a specific format such as Markdown, HTML, PDF, or code, be sure to use a targeted chunking strategy that makes full use of the structural information of the document.

- Continuous iterative optimization: Selecting a chunking strategy is not a one-off decision. In real projects, you need to keep experimenting, evaluating, and iterating in order to find the chunking approach that works best for you.

I hope this article can help you understand RAG chunking technology more deeply, and select and apply appropriate chunking strategies in real projects to build more powerful RAG applications!

© Copyright notes

The copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
