PearAI Tutorial: Practical Shortcuts and Commands Explained

⚙️ PearAI Core Features

- CMD+I: Inline code editing. PearAI makes changes to your current file and shows the diff.
- CMD+L: New chat (if you have code selected, it is also added to the chat).
- CMD+SHIFT+L: Add to the current chat.
- At-sign (@) commands:

  - @filename / @foldername or @docs: Add files, folders, or documentation. You can also add links to your own documentation by scrolling to the bottom of the page and clicking "Add Docs".

  - @codebase: Automatically retrieves the most relevant snippets from your codebase (see the codebase context section below for how indexing and retrieval work). You can also press CMD/CTRL+ENTER to use codebase context directly.

  - @code: References specific functions or classes anywhere in the project.

  - @terminal: References the contents of the IDE terminal.

  - @diff: References all changes made in your current branch. This is useful for summarizing your work before committing or for requesting a general review.

  - @problems: References the problems reported in the current file.

- Slash commands:

  - /commit: Generate a commit message for all of your current changes.
  - /cmd: Generate a CLI command and paste it directly into the terminal.
  - /edit: Use CMD+L or CMD+SHIFT+L (CTRL on Windows) to bring code into the chat, then add /edit to generate code changes shown as diffs.
  - /comment: Works like /edit, but adds comments to the code.
  - /test: Works like /edit, but generates unit tests for the highlighted or supplied code.

- Fast terminal debugging: Press CMD+SHIFT+R to add the most recent terminal output to the chat.

- Custom slash commands: There are two main ways to add custom slash commands:

  - Natural language prompts: Add entries to the customCommands property in config.json. Each entry has:
    - name: the command's name, invoked as /name.
    - description: a short description shown in the dropdown menu.
    - prompt: a templated prompt sent to the large language model (LLM).

    This is ideal for prompts you reuse often. For example, you could create a command that checks your code for errors using a predefined prompt.

  - Custom functions: To back a slash command with your own code, use config.ts rather than config.json. Push a new SlashCommand object onto the slashCommands list, specifying "name" (used to invoke the command), "description" (shown in the dropdown menu), and "run" (an async generator function that yields the strings to display in the UI). More advanced features, such as generating commit messages based on code changes, can be built by writing custom TypeScript code, for example in ~/.pearai/config.ts:

```typescript
// ~/.pearai/config.ts
export function modifyConfig(config: Config): Config {
  // Register a /commit command that streams a short commit message.
  config.slashCommands?.push({
    name: "commit",
    description: "Write a commit message",
    run: async function* (sdk) {
      // Current uncommitted changes in the workspace
      const diff = await sdk.ide.getDiff();
      // Stream the model's answer into the chat as it arrives
      for await (const message of sdk.llm.streamComplete(
        `${diff}\n\nWrite a commit message for the above changes. Use no more than 20 tokens to give a brief description in the imperative mood (e.g. 'Add feature' not 'Added feature'):`,
        {
          maxTokens: 20,
        },
      )) {
        yield message;
      }
    },
  });
  return config;
}
```

This flexibility lets you create powerful, reusable custom commands that fit your specific workflow.
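To see how a `run` generator like the commit example is consumed, the sketch below drives the same logic with a stand-in SDK. The `MockSdk` type, its canned diff, and its token stream are hypothetical; they only mirror the two calls the example uses (`sdk.ide.getDiff()` and `sdk.llm.streamComplete()`), and the `collect` loop is an assumption about how a chat UI might gather the yielded strings, not PearAI's actual code.

```typescript
// Hypothetical stand-in for the SDK passed to run(); only the calls used
// by the commit example are modeled.
interface MockSdk {
  ide: { getDiff(): Promise<string> };
  llm: {
    streamComplete(prompt: string, opts: { maxTokens: number }): AsyncIterable<string>;
  };
}

const mockSdk: MockSdk = {
  ide: { getDiff: async () => "diff --git a/app.ts b/app.ts\n+export const retries = 3;" },
  llm: {
    // Pretend model output, streamed in chunks.
    async *streamComplete(_prompt: string, _opts: { maxTokens: number }) {
      for (const chunk of ["Add ", "retry ", "setting"]) yield chunk;
    },
  },
};

// Same structure as the commit command's run function.
async function* run(sdk: MockSdk): AsyncGenerator<string> {
  const diff = await sdk.ide.getDiff();
  for await (const message of sdk.llm.streamComplete(
    `${diff}\n\nWrite a commit message for the above changes.`,
    { maxTokens: 20 },
  )) {
    yield message;
  }
}

// Concatenate everything the generator yields, as a chat UI might display it.
async function collect(gen: AsyncGenerator<string>): Promise<string> {
  let text = "";
  for await (const piece of gen) text += piece;
  return text;
}

collect(run(mockSdk)).then((msg) => console.log(msg)); // prints: Add retry setting
```

Because `run` yields each chunk as soon as it arrives, the UI can show the message while the model is still generating instead of waiting for the full answer.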

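For the first approach (customCommands in config.json), here is a rough sketch of what one entry might look like, modeled as a TypeScript object and serialized to the JSON you would add to config.json. The `check` command and its prompt text are illustrative, the `{{{ input }}}` placeholder is assumed to stand for the highlighted code plus anything typed after the command, and `renderPrompt` is a hypothetical helper that only imitates the substitution PearAI performs.

```typescript
// Shape of one entry in the customCommands array of config.json
// (field names from the description above).
interface CustomCommand {
  name: string;        // invoked as /name
  description: string; // shown in the dropdown menu
  prompt: string;      // template sent to the LLM
}

// Hypothetical example command; {{{ input }}} is an assumed placeholder.
const checkCommand: CustomCommand = {
  name: "check",
  description: "Check my code for mistakes",
  prompt:
    "{{{ input }}}\n\nRead the code above and list any bugs, syntax errors, or unhandled edge cases.",
};

// Hypothetical helper: fill every placeholder with the user's input.
function renderPrompt(cmd: CustomCommand, input: string): string {
  return cmd.prompt.split("{{{ input }}}").join(input);
}

// The fragment you would merge into config.json.
const configFragment = { customCommands: [checkCommand] };
console.log(JSON.stringify(configFragment, null, 2));
console.log(renderPrompt(checkCommand, "function add(a, b) { return a - b; }"));
```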
If you would also like Tab auto-completion in PearAI, see the Tab auto-completion section below.


🗃️ "@" Command

How to use

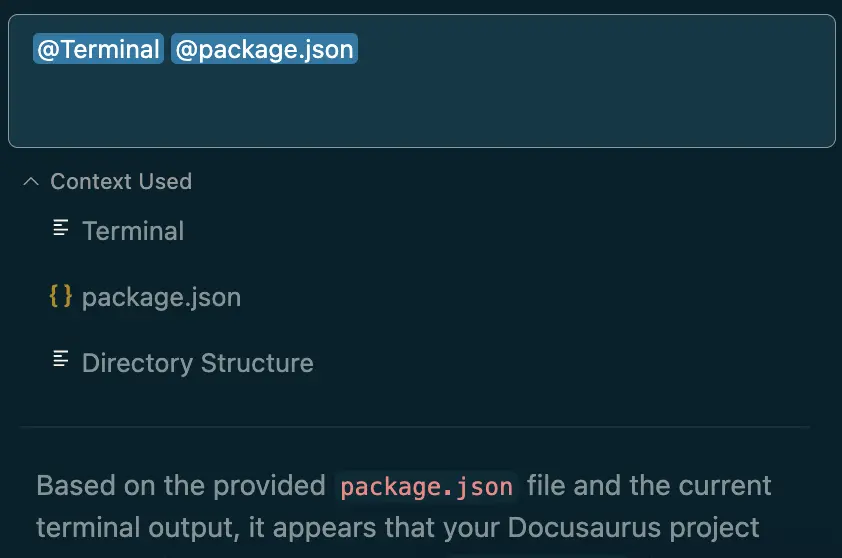

The @ command lets you include additional context in your prompts so that the large language model (LLM) better understands what you are working on. To use it, type @ in PearAI Chat and a dropdown list of context options appears. Each context provider is a plugin that lets you reference an additional piece of information.

For example, suppose you are having trouble running an application locally and the terminal is full of errors. You can use @terminal to include the error logs and @files to reference package.json. PearAI simplifies debugging by giving you the complete context in one place.
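The sketch below illustrates the idea of context providers as named plugins: it scans a chat message for `@name` mentions and keeps only those that match a registered provider. The provider list mirrors the built-in names in this tutorial, and `extractMentions` is a hypothetical helper; PearAI's real mention handling (driven by the dropdown) is not shown here.

```typescript
// Hypothetical list of registered context provider names
// (matching the built-in providers described in this tutorial).
const providers: string[] = [
  "files", "codebase", "code", "docs", "diff", "terminal", "problems", "folder", "directory",
];

// Return the known providers mentioned as @name, in order of first appearance.
function extractMentions(message: string, known: string[]): string[] {
  const found: string[] = [];
  const pattern = /@([a-z]+)/gi;
  let match: RegExpExecArray | null;
  while ((match = pattern.exec(message)) !== null) {
    const name = match[1].toLowerCase();
    if (known.includes(name) && !found.includes(name)) found.push(name);
  }
  return found;
}

// For this message, extractMentions returns ["terminal", "files"].
console.log(extractMentions("Why does npm start fail? @terminal @files package.json", providers));
```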

Built-in context providers

PearAI comes with some useful context providers out of the box. If you want to add or remove context providers, edit the contextProviders list in config.json.

@Files

Lets you reference a file as context.

{ "contextProviders": [ { "name": "files" } ]}

@Codebase

Lets you use the codebase as context. Note that, depending on the size of the codebase, this can consume a significant number of credits.

{ "contextProviders": [ { "name": "codebase" } ]}

@Code

Lets you reference specific functions or classes.

{ "contextProviders": [ { "name": "code" } ]}

@Docs

Lets you reference a documentation site as context.

{ "contextProviders": [ { "name": "docs" } ]}

@Git Diff

Provides all changes made in your current branch relative to the master branch as context. Use this feature to get a summary of your current work or for code review.

{ "contextProviders": [ { "name": "diff" } ]}

@Terminal

Adds the contents of your current terminal as context.

{ "contextProviders": [ { "name": "terminal" } ]}

@Problems

Adds the problems from your current file as context.

{ "contextProviders": [ { "name": "problems" } ]}

@Folder

References everything in the specified folder as context.

{ "contextProviders": [ { "name": "folder" } ]}

@Directory Structure

Provides the project's directory structure as context. You can use it to let the LLM know about any changes you make to the directory structure.

{ "contextProviders": [ { "name": "directory" } ]}
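Putting the fragments above together, the sketch below builds a contextProviders list that enables every built-in provider except @Codebase (for example, to limit credit usage) and prints the resulting JSON for config.json. Dropping codebase is only an example choice, and `buildContextProviders` is a hypothetical helper; editing config.json by hand has the same effect.

```typescript
// Entry shape used in the contextProviders list of config.json.
interface ContextProvider {
  name: string;
}

// Built-in provider names, as listed in this section.
const BUILT_INS: string[] = [
  "files", "codebase", "code", "docs", "diff", "terminal", "problems", "folder", "directory",
];

// Hypothetical helper: enable all built-ins except the ones in `exclude`.
function buildContextProviders(exclude: string[]): ContextProvider[] {
  return BUILT_INS.filter((name) => !exclude.includes(name)).map((name) => ({ name }));
}

const providerConfig = { contextProviders: buildContextProviders(["codebase"]) };
console.log(JSON.stringify(providerConfig, null, 2));
```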

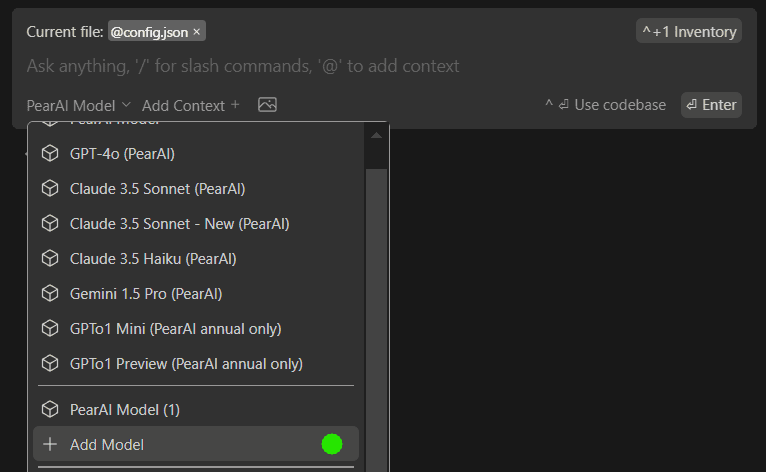

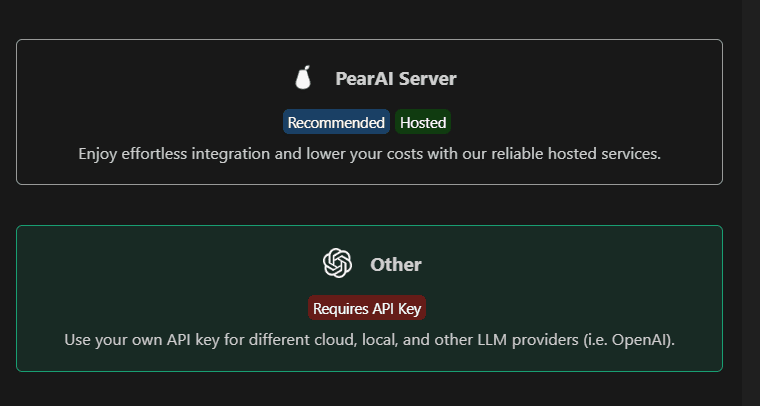

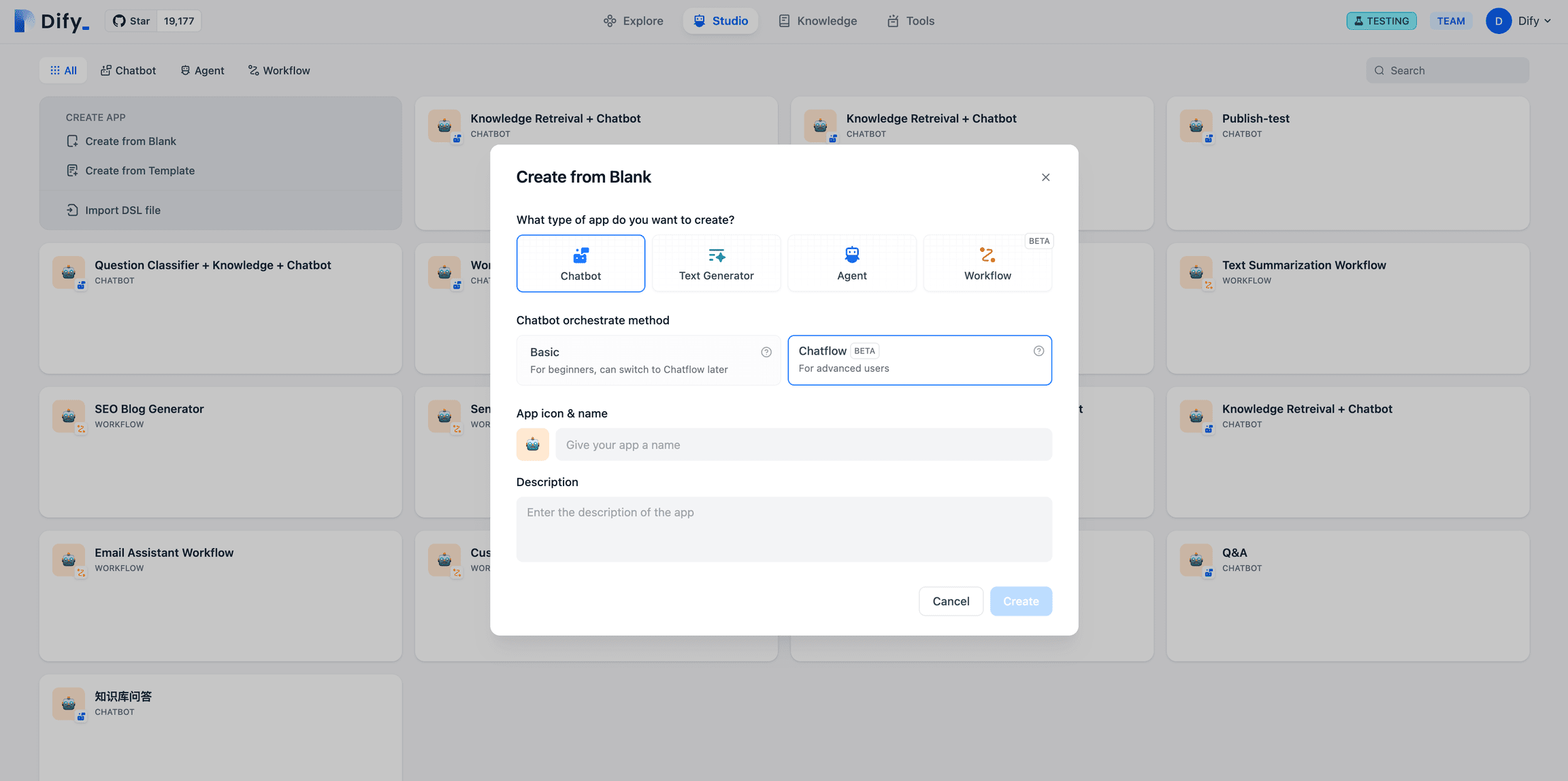

🆕 Adding a new model

In addition to PearAI's built-in models, you can add your own models/API keys by following these steps.

- Open the Add Model section in PearAI.

- Select "Other".

- Then select any models you wish to add.

- Follow the on-screen instructions to complete the setup.

Note:

- Added model configurations can be found in PearAI's config.json file (CMD/CTRL+SHIFT+P > Open config.json).

- For Azure OpenAI, the "engine" field is the name of your deployment.
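As an illustration of the Azure note, the sketch models an entry in config.json's models list and checks that Azure OpenAI entries carry an engine (deployment) name. Apart from `engine` and the title/provider/model/apiKey fields that appear in this tutorial's Codestral example, the entry shape and the provider string "azure" are assumptions; copy the exact values from the entry PearAI writes for you. `validateModel` and the deployment name are hypothetical.

```typescript
// Assumed shape of a model entry in config.json's models list.
interface ModelEntry {
  title: string;
  provider: string; // "azure" for Azure OpenAI is an assumption
  model: string;
  apiKey?: string;
  engine?: string;  // Azure OpenAI: the name of your deployment
}

// Hypothetical check: list configuration problems found in an entry.
function validateModel(entry: ModelEntry): string[] {
  const problems: string[] = [];
  if (entry.provider === "azure" && !entry.engine) {
    problems.push(`${entry.title}: Azure OpenAI entries need "engine" set to the deployment name`);
  }
  return problems;
}

const azureModel: ModelEntry = {
  title: "GPT-4o (Azure)",
  provider: "azure",
  model: "gpt-4o",
  apiKey: "YOUR_API_KEY",
  engine: "my-gpt4o-deployment", // hypothetical deployment name
};

console.log(validateModel(azureModel)); // []
```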

⏩ Important Shortcuts

| Action | Mac | Windows |
|---|---|---|
| New chat (with selected code) | CMD+L | CTRL+L |
| Add selected code to current chat | CMD+SHIFT+L | CTRL+SHIFT+L |
| Open/close chat panel | CMD+; | CTRL+; |
| Switch models | CMD+' | CTRL+' |
| Enlarge/shrink chat window | CMD+\ | CTRL+\ |
| Last opened chat | CMD+0 | CTRL+0 |
| History | CMD+H | CTRL+H |
| Bring the latest terminal output into the chat | CMD+SHIFT+R | CTRL+SHIFT+R |

Pressing one of these shortcuts again switches back to the previous state.

You can view all shortcuts in PearAI by opening the Command Palette (CMD/CTRL+SHIFT+P) → Keyboard Shortcuts.

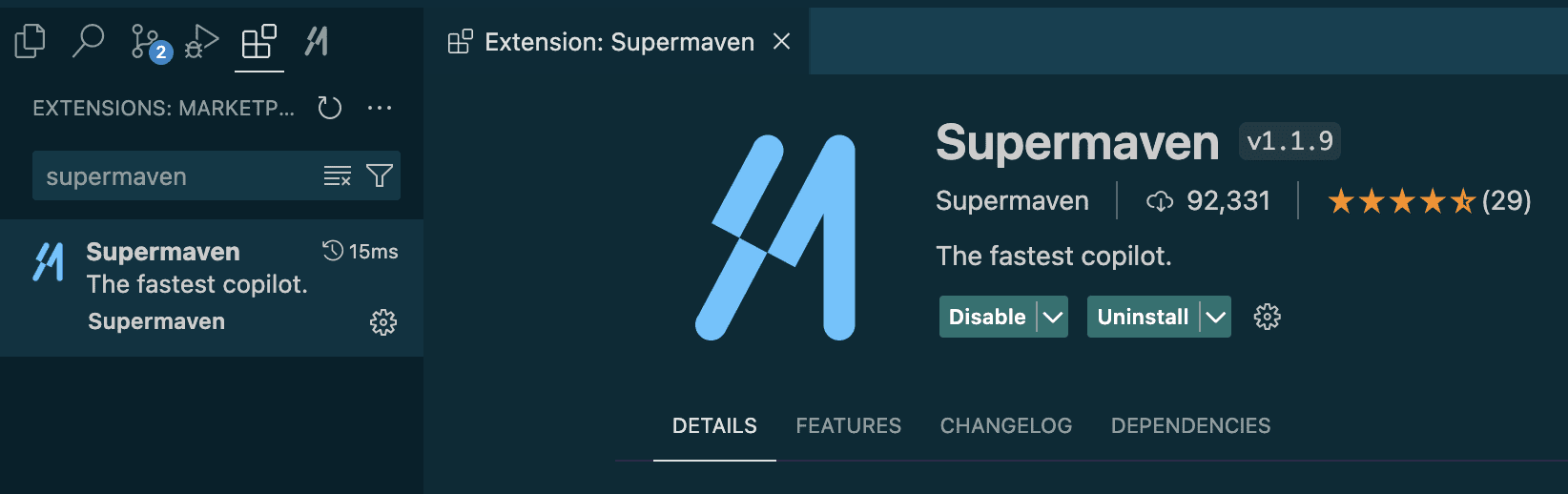

✅ Tab auto-completion

PearAI supports Tab key auto-completion, which predicts and suggests what to type next as you write code. Here's how to set it up:

Setup Guide

Supermaven is currently one of the fastest and best code auto-completion AIs on the market, and it comes with a generous free usage allowance. Simply install Supermaven as an extension in PearAI.

We are currently developing our own code auto-completion model, so stay tuned!

Alternative

- Set up Codestral: We recommend Codestral, the leading code completion (FIM, fill-in-the-middle) model available, and it's open source! First, get a Codestral API key from the Mistral API.

- Add it to PearAI's config.json file: Add the following to your config.json file (replace "YOUR_API_KEY" with your actual API key):

"tabAutocompleteModel": { "title": "Codestral", "provider": "mistral", "model": "codestral-latest", "apiKey": "YOUR_API_KEY" }

- Enjoy faster development with auto-completion!

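To make "fill in the middle" concrete: instead of only continuing text, a FIM model receives the code before the cursor (the prefix) and the code after it (the suffix) and predicts what belongs between them. The sketch below shows only that split; PearAI and the Mistral API handle the actual request, and the `splitAtCursor` helper and sample file are hypothetical.

```typescript
// A FIM request is built from the text on both sides of the cursor.
interface FimInput {
  prefix: string; // everything before the cursor
  suffix: string; // everything after the cursor
}

// Hypothetical helper: split a file's text at a cursor offset.
function splitAtCursor(text: string, cursor: number): FimInput {
  const at = Math.max(0, Math.min(cursor, text.length)); // clamp to a valid offset
  return { prefix: text.slice(0, at), suffix: text.slice(at) };
}

const file = "function area(r: number): number {\n  return \n}\n";
const cursor = file.indexOf("return ") + "return ".length;
const { prefix, suffix } = splitAtCursor(file, cursor);
console.log(JSON.stringify({ prefix, suffix }));
// A FIM model would then be asked for the missing middle, such as "Math.PI * r * r;".
```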
🚀 Usage costs and available models

How much does it cost to use? (PearAI servers only)

The cost of using PearAI is measured in credits. The number of credits consumed depends on the size of your input prompts, the size of the output responses, the model used, and the AI tool (e.g., PearAI Chat, PearAI Search, PearAI Creator).

As an early-adopter benefit, current subscribers get early-bird pricing, which means you keep these special rates forever. For a subscription of just $15 per month, you get more usage and better value than if you bought the equivalent amount of API credits directly from a large language model provider.

Note that longer messages and larger files consume more credits. Likewise, long conversations use up credits faster, because every previous message is sent as context. We recommend starting new conversations frequently. Being specific in your prompts not only saves credits but also gives more accurate results, because the AI processes less irrelevant data.
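The effect of long conversations can be shown with a little arithmetic. If every turn resends all earlier messages as context, the input sent on each turn keeps growing, so the total grows roughly quadratically with conversation length. The sketch counts input tokens only, and the message sizes are made-up numbers; real credit costs also depend on output size, model, and tool, as described above.

```typescript
// Total input tokens sent over a conversation, assuming every turn resends
// all earlier messages as context. messageTokens[i] is the size of message i.
function totalInputTokens(messageTokens: number[]): number {
  let history = 0; // tokens of context accumulated so far
  let total = 0;   // tokens sent across all turns
  for (const tokens of messageTokens) {
    history += tokens; // the new message joins the context...
    total += history;  // ...and the whole context is sent on this turn
  }
  return total;
}

// Hypothetical: 10 messages of 500 tokens each.
const tenTurns: number[] = Array.from({ length: 10 }, () => 500);
console.log(totalInputTokens(tenTurns));                 // 27500 (one long conversation)
console.log(2 * totalInputTokens(tenTurns.slice(0, 5))); // 15000 (two fresh 5-message conversations)
```

Splitting the same ten messages into two conversations sends roughly half the input tokens, which is why starting new conversations for new topics saves credits.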

If you are a subscriber and have reached your monthly credit limit, you can easily top up credits from your dashboard, and top-up credits do not expire.

How can I maximize my use of PearAI?

Here are some tips to help you use PearAI more efficiently:

- Start a new conversation: Open a new conversation when you switch to a new topic or have an unrelated question. This keeps your conversations organized and makes more efficient use of credits.

- Avoid duplicate uploads: Files already uploaded in a conversation don't need to be uploaded again; PearAI remembers your previous uploads.

- Provide relevant context: Although PearAI has access to your entire codebase, you get the best results by providing only the files that are directly relevant to the request. This helps PearAI focus on the most relevant information and provide more accurate and useful responses.

We understand that usage limits can sometimes be confusing. We are always working to improve PearAI and provide the best possible AI experience. If you have any questions or issues, feel free to contact us by email or join our Discord.

Available models

PearAI servers

- Claude 3.5 Sonnet latest

- Claude 3.5 Haiku (unlimited, switches automatically when user reaches monthly credit limit)

- GPT-4o latest

- OpenAI o1-mini

- OpenAI o1-preview

- Gemini 1.5 Pro

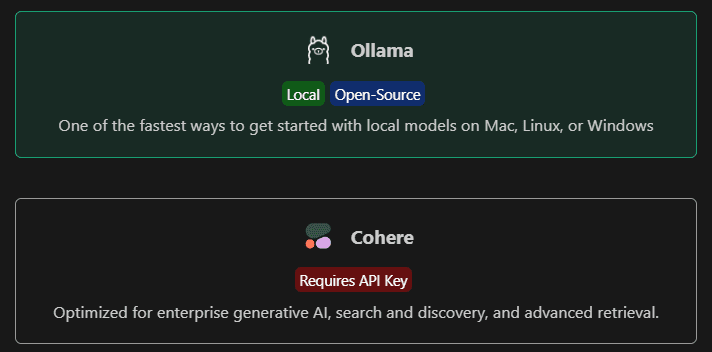

Bring your own API key or run locally

- Claude

- OpenAI

- Google Gemini

- Azure OpenAI

- Mistral

- Ollama

- Cohere

- Groq

- DeepSeek

- TogetherAI

- LM Studio

- llamafile

- Replicate

- llama.cpp

- WatsonX

- Other OpenAI Compatible APIs

💻 Codebase context

PearAI indexes your codebase so that it can later automatically retrieve the most relevant context from the entire workspace. This is done through a combination of embedding search and keyword search. By default, all embeddings are computed locally using the all-MiniLM-L6-v2 model and stored in ~/.pearai/index.

Currently, codebase search is available through the "codebase" and "folder" context providers. Type @codebase or @folder, then ask your question. The contents of the input box are compared against the embeddings of the rest of the codebase (or folder) to identify the relevant files.
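The sketch below illustrates the general idea of ranking snippets by combining embedding similarity with keyword matching. The tiny hand-written vectors stand in for all-MiniLM-L6-v2 embeddings (which have 384 dimensions), and the blend in `hybridScore`, with its `alpha = 0.7` weight, is an arbitrary assumption; PearAI's actual scoring and ranking are not described in this tutorial.

```typescript
interface Snippet {
  path: string;
  text: string;
  embedding: number[]; // toy vectors; real ones come from the embedding model
}

// Cosine similarity between two equal-length vectors.
function cosine(a: number[], b: number[]): number {
  let dot = 0, na = 0, nb = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    na += a[i] * a[i];
    nb += b[i] * b[i];
  }
  return na === 0 || nb === 0 ? 0 : dot / Math.sqrt(na * nb);
}

// Fraction of query words that appear in the snippet text (a crude keyword score).
function keywordScore(query: string, text: string): number {
  const words = query.toLowerCase().split(/\W+/).filter((w) => w.length > 0);
  if (words.length === 0) return 0;
  const haystack = text.toLowerCase();
  return words.filter((w) => haystack.includes(w)).length / words.length;
}

// Hypothetical blend of the two signals; alpha weights the embedding score.
function hybridScore(query: string, queryVec: number[], s: Snippet, alpha = 0.7): number {
  return alpha * cosine(queryVec, s.embedding) + (1 - alpha) * keywordScore(query, s.text);
}

const snippets: Snippet[] = [
  { path: "ui/DatePicker.tsx", text: "export function DatePicker() {}", embedding: [0.0, 0.2, 0.9] },
  { path: "server/routes.ts", text: "router.get('/health', handler) // add endpoint here", embedding: [0.9, 0.1, 0.0] },
];

const query = "add endpoint";
const queryVec = [1.0, 0.0, 0.1]; // toy embedding of the query

const ranked = [...snippets].sort(
  (x, y) => hybridScore(query, queryVec, y) - hybridScore(query, queryVec, x),
);
console.log(ranked.map((s) => s.path)); // server/routes.ts ranks first
```

Blending the two signals lets an exact identifier match still surface a file whose embedding is only moderately similar, and vice versa.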

Here are some common usage scenarios:

- Ask high-level questions about the codebase
  - "How do I add a new endpoint to the server?"
  - "Are we using VS Code's CodeLens feature anywhere?"
  - "Is there already code to convert HTML to markdown?"

- Generate code using existing examples as a reference
  - "Generate a new React date picker component, following the same patterns as the existing components"
  - "Write a draft CLI application for this project using Python's argparse"
  - "Implement the foo method in the bar class, following the patterns in other subclasses of baz"

- Use @folder to ask questions about a specific folder, which increases the likelihood of relevant results
  - "What is the main purpose of this folder?"
  - "How are we using VS Code's CodeLens API?"
  - Or any of the examples above, with @codebase replaced by @folder

Here are some scenarios that are not a good fit:

- Tasks that require the LLM to look at every file in the codebase
  - "Find all the places where the foo function is called"
  - "Check our codebase and find all spelling errors"

- Refactoring
  - "Add a new argument to the bar function and update its usages"

Ignoring files during indexing

PearAI follows your .gitignore file to determine which files should not be indexed.

If you want to see which files PearAI has indexed, the metadata is stored in ~/.pearai/index/index.sqlite. You can use a tool such as DB Browser for SQLite to view the tag_catalog table in this file.

If you need to force a refresh of the index, open the command palette (cmd/ctrl + shift + p) and run "Reload Window" to reload the VS Code window, or press the index button in the lower-left corner of the chat panel.

📋 Common Usage Scenarios

| Scenario | macOS | Windows |
|---|---|---|
| Quickly understand code snippets | cmd+L | ctrl+L |
| Use the Tab key to accept auto-complete suggestions | tab | tab |
| Refactor functions while writing code | cmd+I | ctrl+I |
| Ask a question about the codebase | @codebase | @codebase |
| Quickly use documentation as context | @docs | @docs |
| Start an operation with a slash command | /edit | /edit |
| Add classes, files, etc. to the context | @files | @files |
| Understand terminal errors immediately | cmd+shift+R | ctrl+shift+R |

Mac users: Download the development version of PearAI.

To run PearAI in development mode, you need to download an unsigned build of the app, because the signed version will not run in debug mode.

© Copyright notice

Article copyright AI Sharing Circle. All rights reserved; please do not reproduce without permission.
