Observers: lightweight library for AI observability that tracks OpenAI-compatible API request data

General Introduction

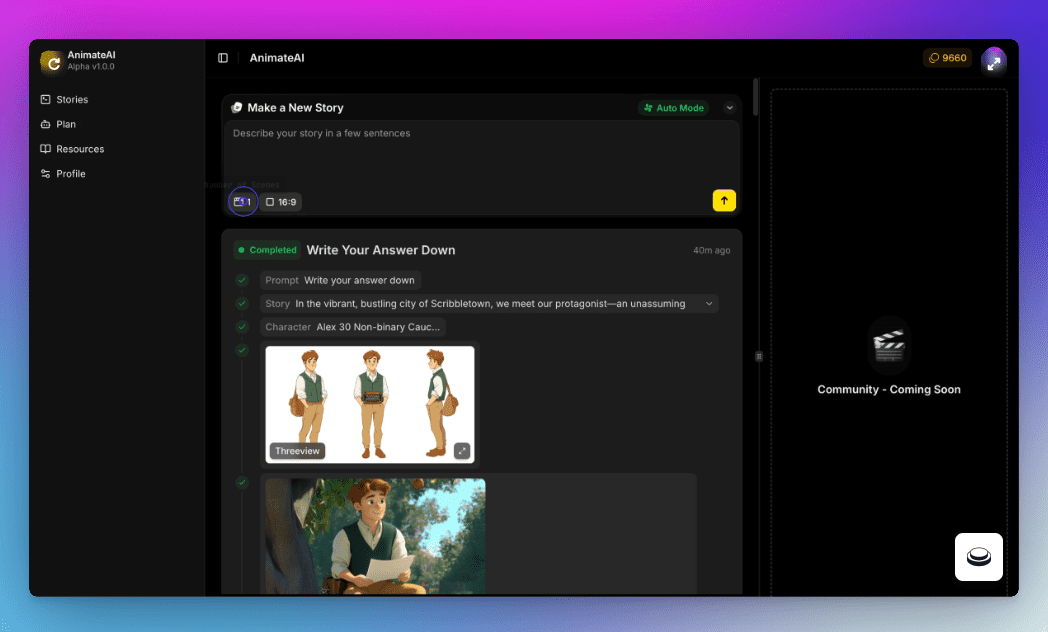

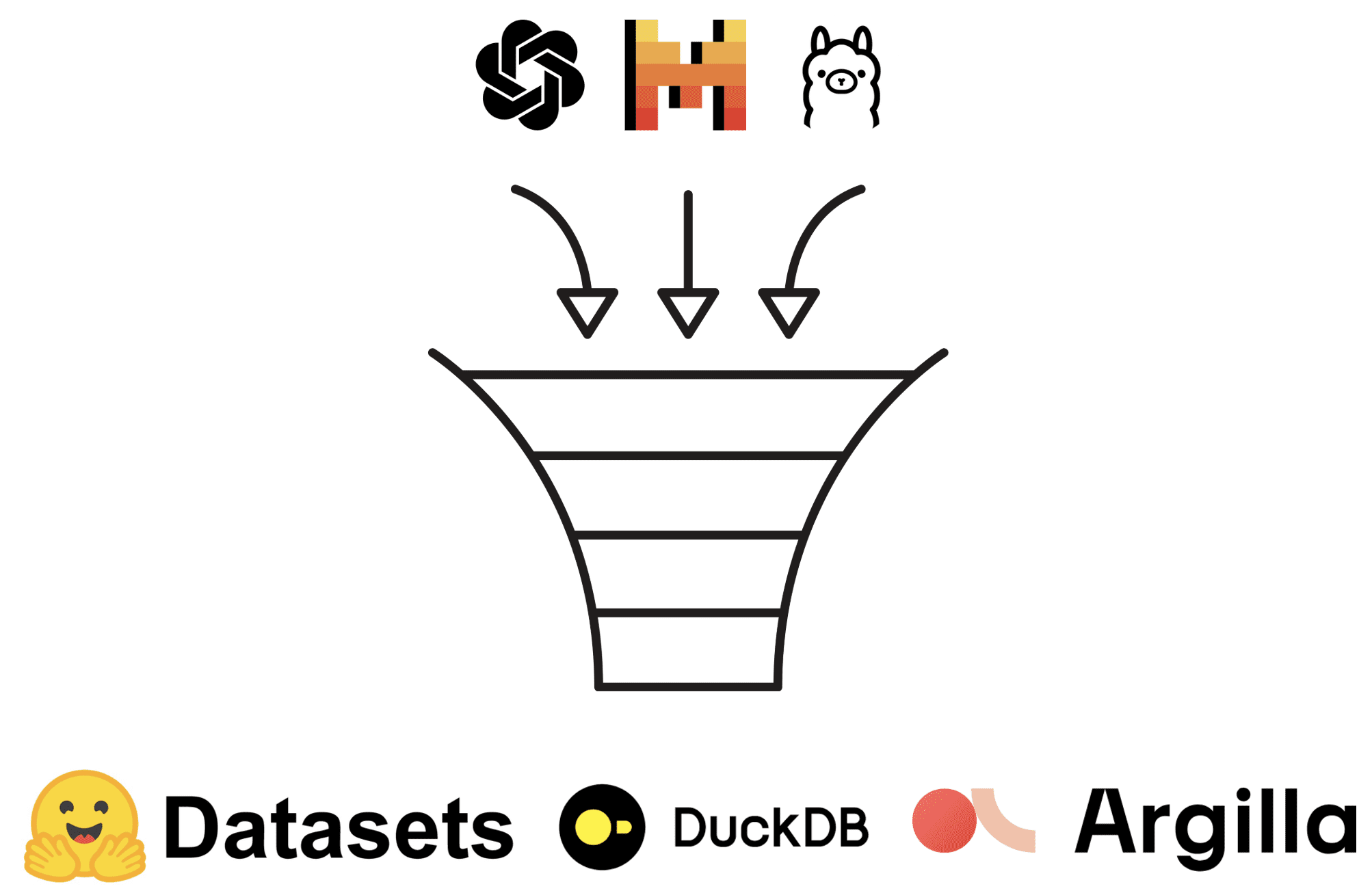

Observers is an open-source Python SDK that provides observability for generative AI APIs. It lets users track and record interactions with AI models and store these observations in multiple backends. Interactions with OpenAI, or with any other LLM provider that implements the OpenAI API message format, can be monitored and logged. Because observations are stored in backends such as DuckDB and Hugging Face datasets, users can later query and analyze their AI interaction data.

Function List

- Generative AI API monitoring: support for OpenAI and any other LLM provider that implements the OpenAI API message format.

- Multiple storage backends: support for DuckDB, Hugging Face datasets, Argilla, and OpenTelemetry.

- Document processing observation: support for document formats such as PDF, DOCX, PPTX, XLSX, images, HTML, AsciiDoc, and Markdown through the Docling integration.

- OpenTelemetry support: send observations to any telemetry provider that supports OpenTelemetry.

- Unified interface: a single LLM API interface across providers through AISuite and LiteLLM.
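The "OpenAI API message format" mentioned above is a list of dictionaries, each with a role and content. As a rough illustration, here is a hypothetical helper (not part of Observers) that checks whether a request body has that basic shape. It deliberately simplifies: real APIs also accept non-string content and assistant messages without content.

```python
from typing import Any

# Roles commonly used in OpenAI-style chat requests (assumed subset).
VALID_ROLES = {"system", "developer", "user", "assistant", "tool"}


def is_openai_style_messages(messages: Any) -> bool:
    """Return True if `messages` looks like an OpenAI chat message list.

    Hypothetical helper: only checks that every item is a dict with a
    known role and string content.
    """
    if not isinstance(messages, list) or not messages:
        return False
    for message in messages:
        if not isinstance(message, dict):
            return False
        if message.get("role") not in VALID_ROLES:
            return False
        if not isinstance(message.get("content"), str):
            return False
    return True


print(is_openai_style_messages([{"role": "user", "content": "Tell me a joke."}]))  # True
print(is_openai_style_messages([{"role": "robot", "content": 42}]))  # False
```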

How to Use

Installation

First, install the Observers SDK with pip:

pip install observers

To use other LLM providers through AISuite or LiteLLM, install the corresponding extra:

pip install observers[aisuite]  # or observers[litellm]

To observe document processing, install the Docling integration:

pip install observers[docling]

For OpenTelemetry support, install:

pip install observers[opentelemetry]
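After installing, you may want to confirm which optional extras are actually available. A minimal sketch using only the standard library; it assumes each extra is importable under the top-level module name shown (for example docling or litellm), which you should adjust if your environment differs.

```python
import importlib.util

# Top-level module names assumed for the base package and each extra.
EXTRA_MODULES = {
    "observers": "observers",
    "aisuite": "aisuite",
    "litellm": "litellm",
    "docling": "docling",
    "opentelemetry": "opentelemetry",
}


def installed_extras() -> dict[str, bool]:
    """Map each extra to whether its module can be found (without importing it)."""
    return {
        extra: importlib.util.find_spec(module) is not None
        for extra, module in EXTRA_MODULES.items()
    }


for extra, available in installed_extras().items():
    print(f"{extra:15} {'installed' if available else 'missing'}")
```

Using find_spec rather than a bare import avoids running each package's import-time code just to check that it is present.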

Usage

The Observers library separates observers from stores. Observers wrap generative AI clients (e.g. OpenAI or llama-index) and track their interactions. Store classes then sync these observations to different storage backends (e.g. DuckDB or Hugging Face datasets).
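To make the observer/store split concrete, here is a simplified sketch of the pattern in plain Python. It is not Observers' implementation: observe, InMemoryStore, and fake_create are hypothetical names. The wrapper forwards each request unchanged, then hands a record of the call to whatever store it was given.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class InMemoryStore:
    """Hypothetical store: keeps records in a list instead of a real backend."""
    records: list[dict[str, Any]] = field(default_factory=list)

    def add(self, record: dict[str, Any]) -> None:
        self.records.append(record)


def observe(create: Callable[..., Any], store: InMemoryStore) -> Callable[..., Any]:
    """Wrap a completion function so each call is recorded in `store`."""
    def wrapped(**kwargs: Any) -> Any:
        start = time.perf_counter()
        response = create(**kwargs)  # forward the request unchanged
        store.add({
            "model": kwargs.get("model"),
            "messages": kwargs.get("messages"),
            "response": response,
            "latency_s": time.perf_counter() - start,
        })
        return response
    return wrapped


# Stand-in for client.chat.completions.create, so no network access is needed.
def fake_create(**kwargs: Any) -> str:
    return "Why did the model cross the road?"


store = InMemoryStore()
create = observe(fake_create, store)
print(create(model="gpt-4o", messages=[{"role": "user", "content": "Tell me a joke."}]))
print(len(store.records))  # 1
```

The caller still gets the original response back, which is why wrapping a client this way does not change application code beyond the one wrapping call.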

Sample Code

The following example shows how to send a request and log the interaction with the Observers library:

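from observers.observers import wrap_openai
from observers.stores import DuckDBStore
from openai import OpenAI

# Store that receives every logged interaction
store = DuckDBStore()

# Standard OpenAI client (reads OPENAI_API_KEY from the environment)
openai_client = OpenAI()

# Wrap the client so its requests and responses are recorded in the store
client = wrap_openai(openai_client, store=store)

response = client.chat.completions.create(
    model="gpt-4o",
    messages=[{"role": "user", "content": "Tell me a joke."}],
)

print(response.choices[0].message.content)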

The code sends the request to OpenAI's gpt-4o model through the wrapped client and logs the interaction to the DuckDB store passed in as store. To log to a Hugging Face Hub dataset instead, use the datasets store (DatasetsStore); the dataset is pushed to your personal workspace (e.g. http://hf.co/{your_username}).

Configuring Storage

The following storage backends are available:

- DuckDB Storage: The default storage is DuckDB, which can be viewed and queried using the DuckDB CLI.

- Hugging Face dataset storage: Datasets can be viewed and queried using the Hugging Face Datasets Viewer.

- Argilla Storage: Allows synchronization of observations to Argilla.

- OpenTelemetry Storage: Allows synchronization of observations to any provider that supports OpenTelemetry.
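These backends are interchangeable because the wrapped client only needs somewhere to hand each observation. A minimal sketch of that idea, assuming a hypothetical Store protocol with a single add method (not Observers' actual base class) and using the standard-library sqlite3 module as a stand-in for a local DuckDB file:

```python
import json
import sqlite3
from typing import Any, Protocol


class Store(Protocol):
    """Hypothetical interface that every backend implements."""
    def add(self, record: dict[str, Any]) -> None: ...


class ListStore:
    """Keeps records in memory (useful for tests)."""
    def __init__(self) -> None:
        self.records: list[dict[str, Any]] = []

    def add(self, record: dict[str, Any]) -> None:
        self.records.append(record)


class SQLiteStore:
    """Persists records in SQLite, standing in for a local DuckDB file."""
    def __init__(self, path: str = ":memory:") -> None:
        self.conn = sqlite3.connect(path)
        self.conn.execute("CREATE TABLE IF NOT EXISTS records (model TEXT, payload TEXT)")

    def add(self, record: dict[str, Any]) -> None:
        self.conn.execute(
            "INSERT INTO records VALUES (?, ?)",
            (record.get("model"), json.dumps(record)),
        )
        self.conn.commit()

    def count_by_model(self) -> dict[str, int]:
        """Example query: number of logged interactions per model."""
        rows = self.conn.execute(
            "SELECT model, COUNT(*) FROM records GROUP BY model ORDER BY model"
        ).fetchall()
        return dict(rows)


def log_interaction(store: Store, model: str, prompt: str, reply: str) -> None:
    """Depends only on `add`, so any backend can be swapped in."""
    store.add({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "reply": reply,
    })


list_store = ListStore()
sqlite_store = SQLiteStore()
for backend in (list_store, sqlite_store):
    log_interaction(backend, "gpt-4o", "Tell me a joke.", "...")
log_interaction(sqlite_store, "gpt-4o-mini", "Hi", "Hello!")
print(sqlite_store.count_by_model())  # {'gpt-4o': 1, 'gpt-4o-mini': 1}
```

Switching backends means passing a different object, much as the sample code passes store=store to wrap_openai.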

© Copyright notes

Article copyright: AI Sharing Circle. All rights reserved; please do not reproduce without permission.
