MuseV + MuseTalk: A Complete Digital Human Video Generation Framework | Portrait to Video | Pose to Video | Lip Synchronization

General Introduction

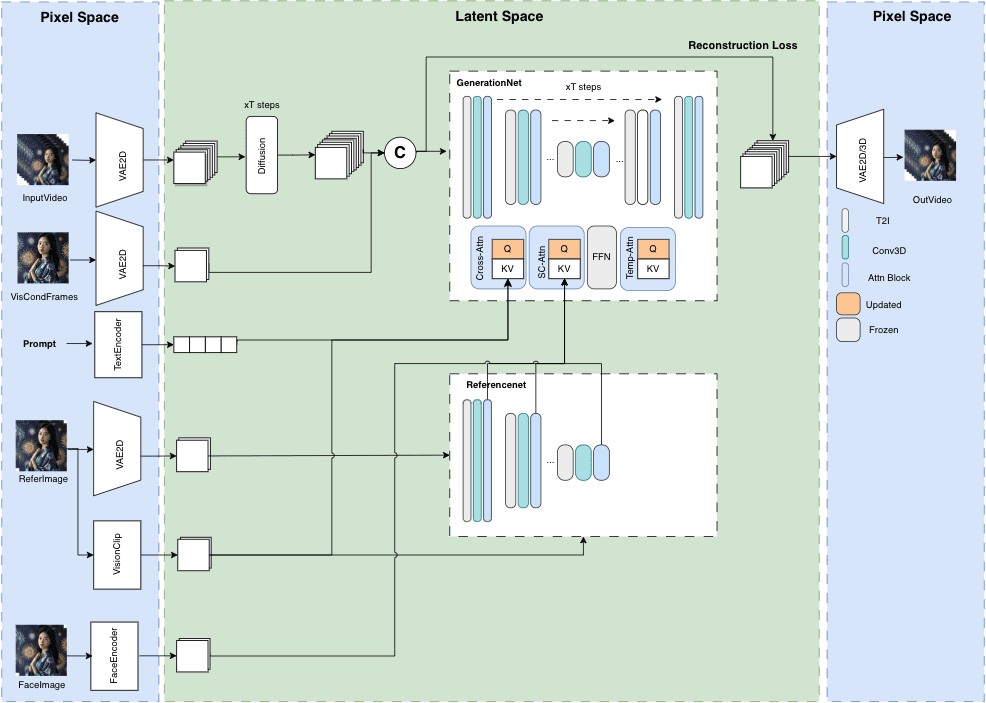

MuseV is an open-source project on GitHub for generating high-fidelity virtual human (avatar) videos of unlimited length. It is built on diffusion models and supports Image2Video, Text2Image2Video, Video2Video, and more. The repository documents the model structure, use cases, a quick start guide, inference scripts, and acknowledgements.

MuseV is a virtual human video generation framework based on diffusion models, with the following features:

Supports infinite-length generation through a novel visual-conditioned parallel denoising scheme that avoids error accumulation, especially in scenes with a fixed camera position.

Provides a pretrained virtual human video generation model trained on a dataset of human characters.

Supports image-to-video, text-to-image-to-video, and video-to-video generation.

Compatible with the Stable Diffusion text-to-image ecosystem, including base_model, lora, controlnet, and more.

Supports multiple reference-image techniques, including IPAdapter, ReferenceOnly, ReferenceNet, and IPAdapterFaceID.

The team plans to release the training code later as well.
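The infinite-length feature hinges on one idea: if every segment of a long video is conditioned on the same visual reference rather than on the previous segment's output, segments can be denoised independently (and in parallel), so errors do not compound the way they do in autoregressive chaining. The sketch below illustrates that idea with overlapping windows that are blended where they overlap. It is a generic toy under stated assumptions, not MuseV's code: `overlapping_windows`, `fake_denoise`, and `generate_long_video` are hypothetical names, the "denoiser" is a placeholder, and real diffusion schemes typically fuse overlapping latents at every denoising step rather than only blending final outputs.

```python
from concurrent.futures import ThreadPoolExecutor

import numpy as np


def overlapping_windows(num_frames: int, window: int, overlap: int) -> list[tuple[int, int]]:
    """Split frame indices [0, num_frames) into windows that share `overlap` frames."""
    if num_frames <= 0 or not 0 <= overlap < window:
        raise ValueError("need num_frames > 0 and 0 <= overlap < window")
    stride = window - overlap
    windows, start = [], 0
    while True:
        end = min(start + window, num_frames)
        windows.append((start, end))
        if end == num_frames:
            return windows
        start += stride


def fake_denoise(reference: np.ndarray, start: int, end: int) -> np.ndarray:
    """Hypothetical stand-in for one diffusion pass over frames [start, end).

    Each window is conditioned only on the shared reference image, never on
    another window's output, so no window depends on any other.
    """
    return np.stack([reference + t for t in range(start, end)])


def generate_long_video(reference: np.ndarray, num_frames: int,
                        window: int = 4, overlap: int = 1) -> np.ndarray:
    windows = overlapping_windows(num_frames, window, overlap)
    # Independent windows can be processed concurrently (here: a thread pool).
    with ThreadPoolExecutor() as pool:
        chunks = list(pool.map(lambda w: fake_denoise(reference, *w), windows))
    # Average frames that are covered by more than one window.
    video = np.zeros((num_frames, *reference.shape))
    counts = np.zeros(num_frames)
    for chunk, (start, end) in zip(chunks, windows):
        video[start:end] += chunk
        counts[start:end] += 1
    return video / counts.reshape(-1, *([1] * reference.ndim))


reference = np.zeros((2, 2))  # toy 2x2 "image" used as the visual condition
video = generate_long_video(reference, num_frames=10)
print(video.shape)  # (10, 2, 2)
```

Because no window waits on another, the number of frames is bounded only by memory and compute, not by drift from chaining; the window size and overlap here are arbitrary illustrative values.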

Function List

Unlimited-length video generation

High-fidelity virtual human imagery

Versatile modes: Image2Video, Text2Image2Video, Video2Video

Clearly documented model structure and use cases

Quick start guide and inference scripts

How to Use

Visit the GitHub repository for updates and downloadable resources

Follow the quick start guide to set up the environment

Use the provided inference scripts to generate video content

Ways to combine the tools:

Method 1: real-person video + MuseTalk

Method 2: image + MuseV + MuseTalk

Finished product

This example turns a static image into a looping video and makes the anime character speak. Because the cartoon character has no drawn lips, the speech looks odd; next time, use an image with visible lips, preferably of a real person. In the official test environment, a 45-second video took about 15 minutes to generate.

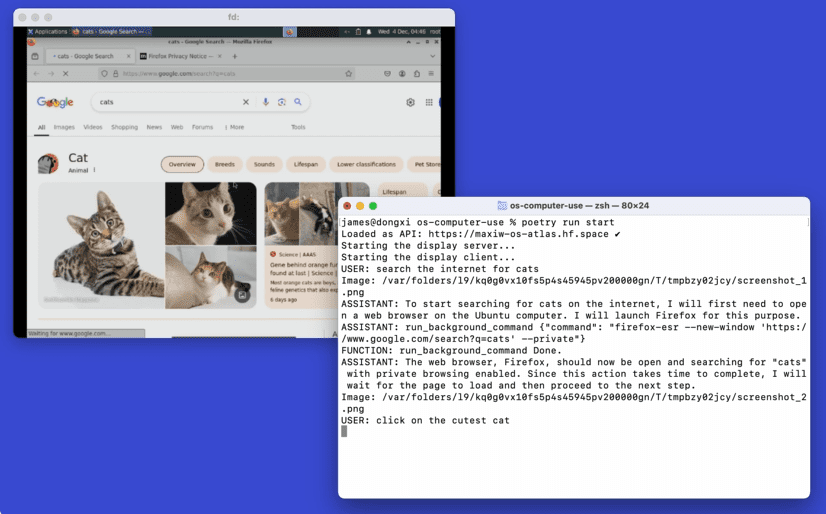

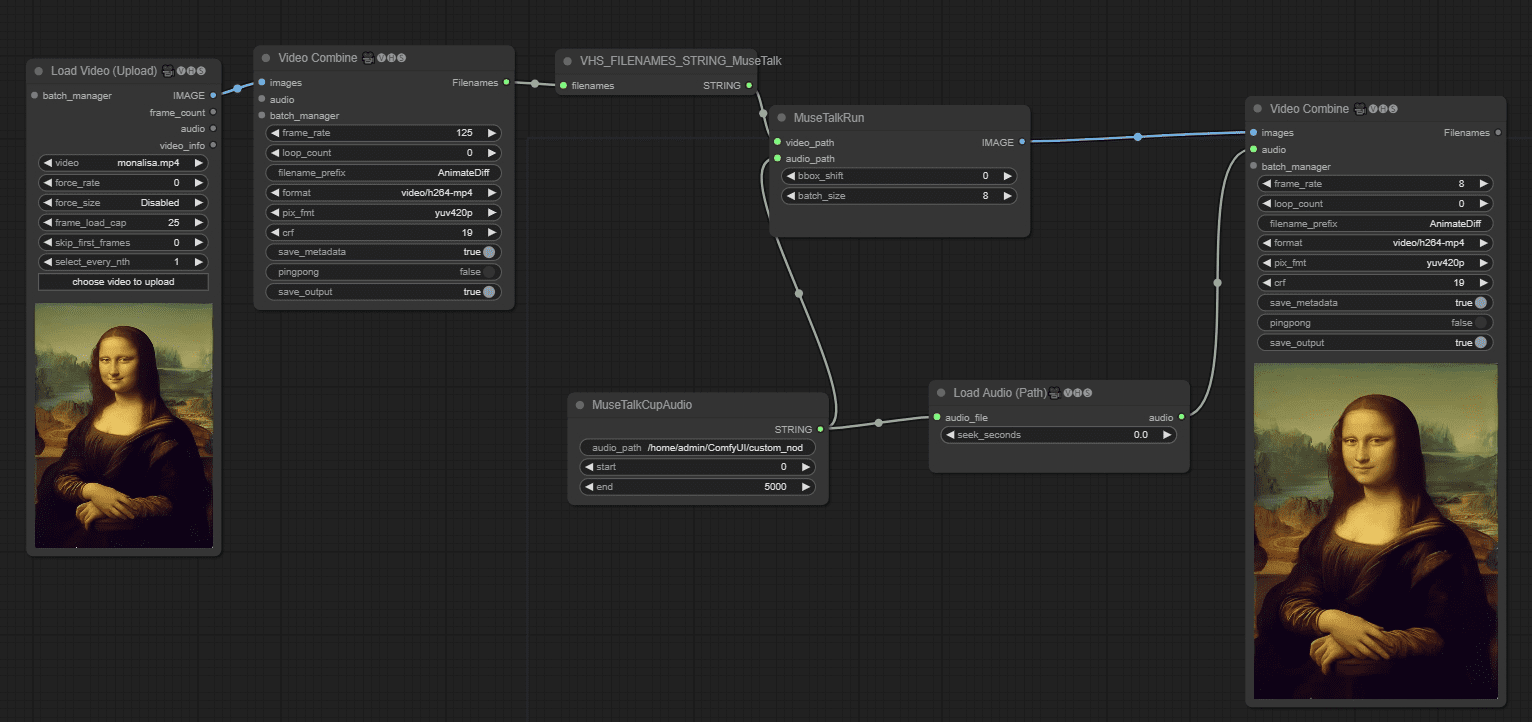
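The timing reported above (about 15 minutes for a 45-second clip) works out to roughly 20 seconds of waiting per second of output video. The snippet below makes that arithmetic explicit. The helper `wait_minutes` is hypothetical and assumes wait time scales linearly with video length; actual times will depend on hardware, settings, and queueing in the test environment.

```python
def wait_minutes(video_seconds: float,
                 ref_video_seconds: float = 45.0,
                 ref_wait_minutes: float = 15.0) -> float:
    """Estimate wait time, ASSUMING it scales linearly with video length."""
    return video_seconds * ref_wait_minutes / ref_video_seconds


# Seconds of waiting per second of generated video in the reported test.
slowdown = 15.0 * 60 / 45.0
print(slowdown)          # 20.0
print(wait_minutes(60))  # 20.0 (minutes, under the linear assumption)
```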

Making Videos Speak in ComfyUI Workflows

The team has also released MuseTalk, a real-time, high-quality lip-synchronization model (30 fps+ on an NVIDIA Tesla V100). MuseTalk can be applied to input videos, such as those generated by MuseV, to form a complete virtual human solution.

MuseV online demo / Windows one-click installation package

MuseTalk, MuseV's companion lip-synchronization model

Link: https://pan.quark.cn/s/ed896ceda5c8

Extract code: JygA

© Copyright notice

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
