MiMo-V2-Flash - an open-source MoE large language model released by Xiaomi

What is MiMo-V2-Flash?

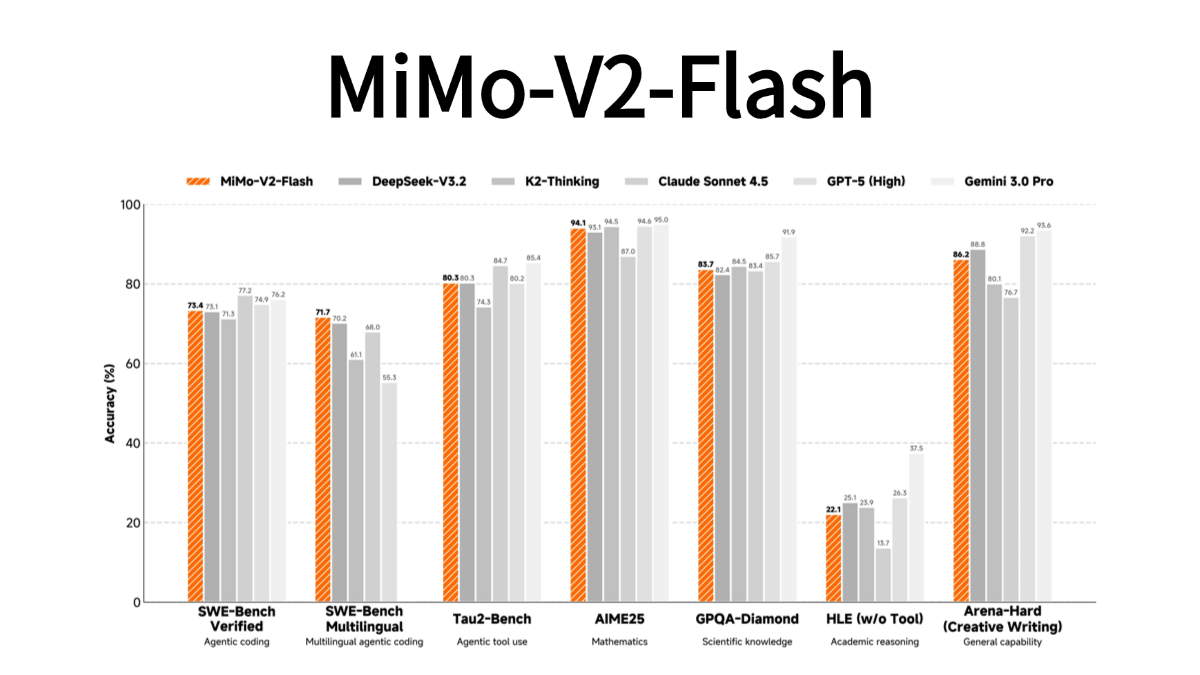

MiMo-V2-Flash is an open-source Mixture-of-Experts (MoE) large language model released by Xiaomi, with 309 billion total parameters and 15 billion active parameters, focused on efficient inference and agent applications. The model uses a hybrid attention architecture and multi-token prediction (MTP), reaches an inference speed of 150 tokens/second at about 2.5% of the cost of comparable models, and performs well on code generation, mathematical reasoning, and other tasks. Its innovations include three-layer MTP parallel prediction (a 2-2.6x speedup), multi-teacher on-policy distillation training (saving 98% of compute), a 256K ultra-long context window, and web search support. The model is open-sourced on Hugging Face under the MIT license, and API pricing is $0.1 per million input tokens and $0.3 per million output tokens.
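
To make the pricing and parameter figures above concrete, the following minimal sketch estimates the API cost of a workload and the share of parameters active per token. Only the prices and parameter counts come from this article; the function names and the sample token counts are illustrative assumptions.

```python
# Prices quoted in the article, in US dollars per one million tokens.
INPUT_PRICE_PER_MILLION = 0.1
OUTPUT_PRICE_PER_MILLION = 0.3

# Parameter counts quoted in the article, in billions.
TOTAL_PARAMS_B = 309
ACTIVE_PARAMS_B = 15


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the API cost in US dollars for a given token volume."""
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_MILLION
    return input_cost + output_cost


def active_fraction() -> float:
    """Share of the total parameters that is active for each token."""
    return ACTIVE_PARAMS_B / TOTAL_PARAMS_B


if __name__ == "__main__":
    # Hypothetical workload: 2M input tokens and 500K output tokens.
    print(f"Estimated cost: ${estimate_cost_usd(2_000_000, 500_000):.2f}")
    print(f"Active parameters: {active_fraction():.1%} of the total")
```

Under these figures, only about one parameter in twenty takes part in each forward pass, which is consistent with the low inference cost the article describes.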

Features of MiMo-V2-Flash

- High-performance inference: A hybrid attention architecture and lightweight multi-token prediction significantly improve inference efficiency, speeding up generation and substantially reducing inference cost.

- Long-text processing: Supports context lengths of up to 256K tokens, making it suitable for long-text generation and comprehension tasks such as long-form content creation and document processing.

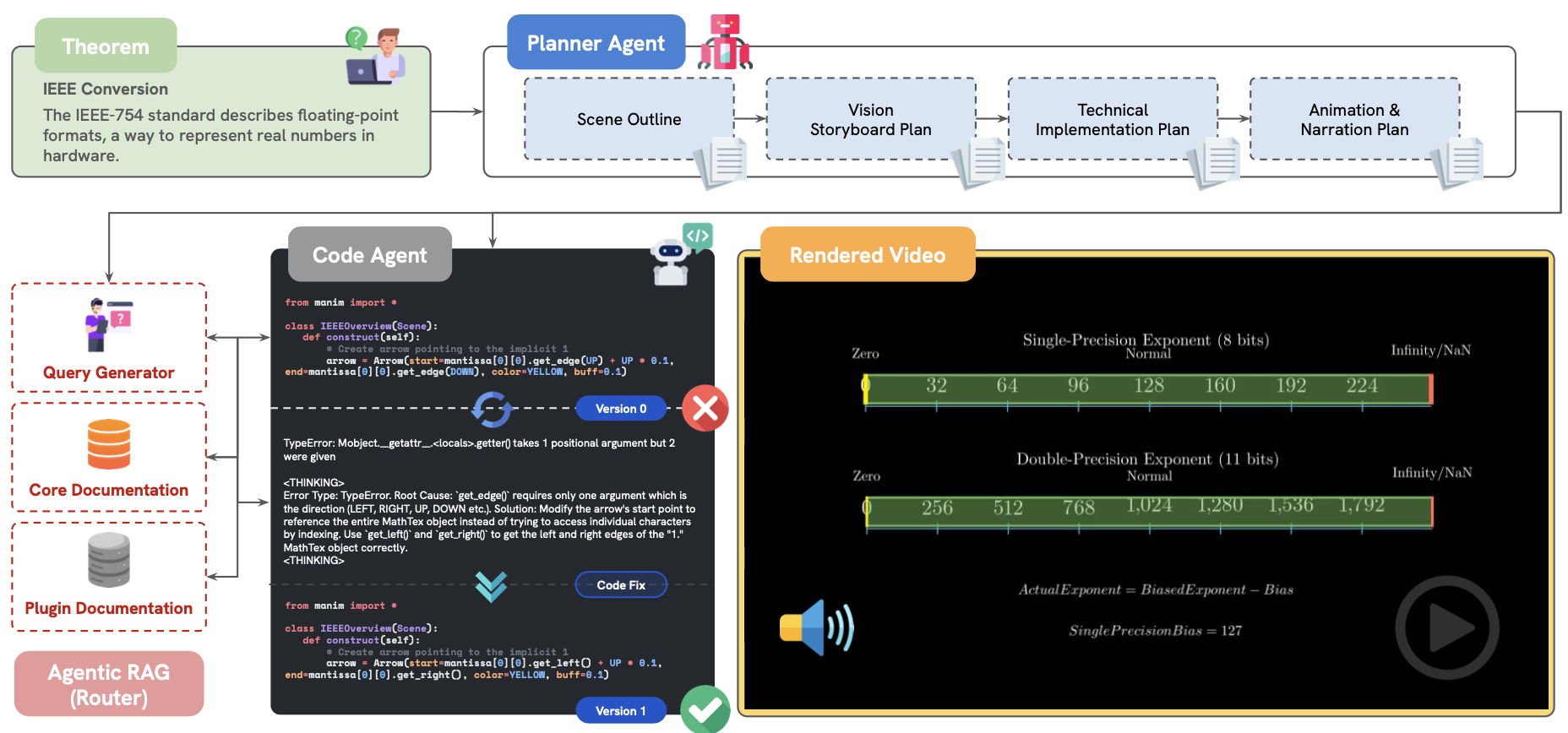

- Agent optimization: Designed for agentic AI, it improves complex task handling through large-scale agent reinforcement learning and multi-teacher on-policy distillation.

- Coding skills: Strong at code generation, completion, and comprehension; supports multiple programming languages and is suitable for integration into developer tools.

- Multi-language support: Handles text generation, translation, and comprehension tasks in multiple languages, making it suitable for internationalized applications.

- Open source and easy to use: The model weights and inference code are fully open source under the MIT license, so developers can use the model, build on it, and deploy it quickly.

- Inference optimization: Supports FP8 mixed-precision inference and works with the SGLang framework to deliver efficient inference for large-scale applications.
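
As a rough illustration of the SGLang deployment mentioned above, the sketch below builds and sends a chat request to a locally served model. It assumes an SGLang server is already running and exposing an OpenAI-compatible endpoint; the address, the port, and the helper names are assumptions for illustration and do not come from this article.

```python
import json
import urllib.request

# Assumptions (not from the article): an SGLang server is already running
# locally and exposes an OpenAI-compatible API at this address.
BASE_URL = "http://localhost:30000/v1"
# Hugging Face repository id listed in this article.
MODEL_ID = "XiaomiMiMo/MiMo-V2-Flash"


def build_chat_request(prompt: str, max_tokens: int = 256) -> dict:
    """Build an OpenAI-style chat completion payload for a single user turn."""
    return {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def send_chat_request(payload: dict, base_url: str = BASE_URL) -> str:
    """POST the payload to the server and return the generated text."""
    request = urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    payload = build_chat_request("Write a Python function that reverses a string.")
    # Printing the payload needs no server; call send_chat_request(payload)
    # only once a server is reachable at BASE_URL.
    print(json.dumps(payload, indent=2))
```

Keeping payload construction separate from the network call makes the request easy to inspect or test before any server is available.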

Core Advantages of MiMo-V2-Flash

- Extreme inference efficiency: Inference cost is only 2.5% of that of the benchmark closed-source model, and generation speed is doubled, making it suitable for tasks that demand high efficiency.

- Powerful long-text capability: Supports a very long context of 256K tokens, well beyond most other open-source models, making it suitable for long-text generation and comprehension.

- Excellent coding skills: Outperforms most open-source models and approaches the level of benchmark closed-source models in code generation, completion, and comprehension tasks.

- Agent task expertise: Trained with large-scale agent reinforcement learning, it specializes in complex reasoning and multi-turn conversation tasks, making it suitable for agentic AI scenarios.
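
The generation speedups quoted in this article (about 2x, or 2-2.6x with three-layer MTP) can be related to a simplified estimate of how many tokens each decoding step yields. The sketch below is not Xiaomi's published method: it assumes three drafted tokens per step and that each drafted token is accepted independently with a fixed probability, and the acceptance rates shown are hypothetical.

```python
def expected_tokens_per_step(acceptance_rate: float, draft_tokens: int = 3) -> float:
    """Expected tokens emitted per decoding step under a simplified model.

    Assumption: each drafted token is accepted independently with probability
    `acceptance_rate`, and drafting stops at the first rejection. The main
    model always contributes one token, so the expectation is the sum of
    p**i for i = 0..draft_tokens.
    """
    if not 0.0 <= acceptance_rate <= 1.0:
        raise ValueError("acceptance_rate must be between 0 and 1")
    return sum(acceptance_rate ** i for i in range(draft_tokens + 1))


if __name__ == "__main__":
    # Hypothetical acceptance rates, chosen only for illustration.
    for rate in (0.5, 0.6, 0.7):
        tokens = expected_tokens_per_step(rate)
        print(f"acceptance rate {rate:.1f}: {tokens:.2f} tokens per step")
```

Tokens per step is an upper bound on the real speedup, because it ignores the extra compute spent on drafting and verification.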

What are the official links for MiMo-V2-Flash?

- GitHub repository: https://github.com/xiaomimimo/MiMo-V2-Flash

- Hugging Face model page: https://huggingface.co/XiaomiMiMo/MiMo-V2-Flash

- Technical paper: https://github.com/XiaomiMiMo/MiMo-V2-Flash/blob/main/paper.pdf

Who MiMo-V2-Flash is suitable for

- Developers: Software engineers who need a high-performance AI model for application development, for example to build intelligent assistants and automation tools.

- Researchers: Scholars in natural language processing and artificial intelligence who want to study the model and improve algorithms.

- Business users: Enterprises looking to improve business efficiency through customer service automation, data analytics, and intelligent decision support.

- Educators: Teachers and trainers who can use the model to assist teaching and learning, generate teaching materials, and provide intelligent tutoring.

- Content creators: Writers, editors, and copywriters who use it for content creation, copy generation, and creative inspiration.

- Technology enthusiasts: Individual users interested in AI who want to learn, experiment, and explore AI applications.

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
