MiMo-Embodied - Xiaomi's Open-Source Cross-Domain Embodied Intelligence Foundation Model

What is MiMo-Embodied?

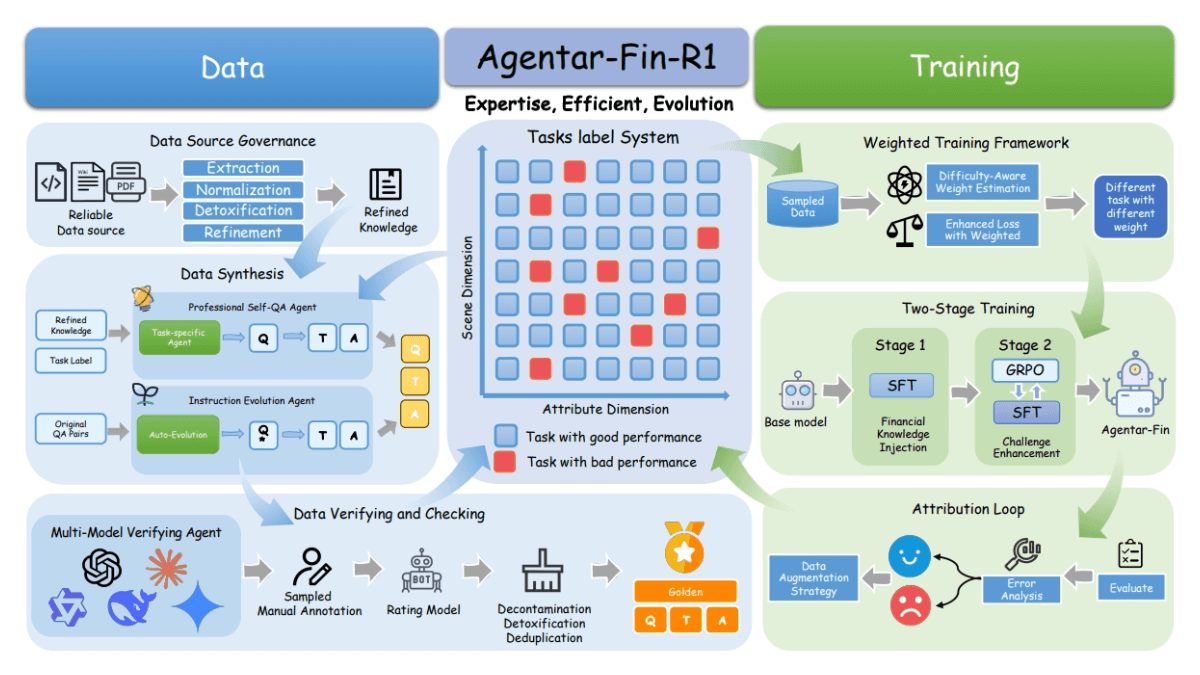

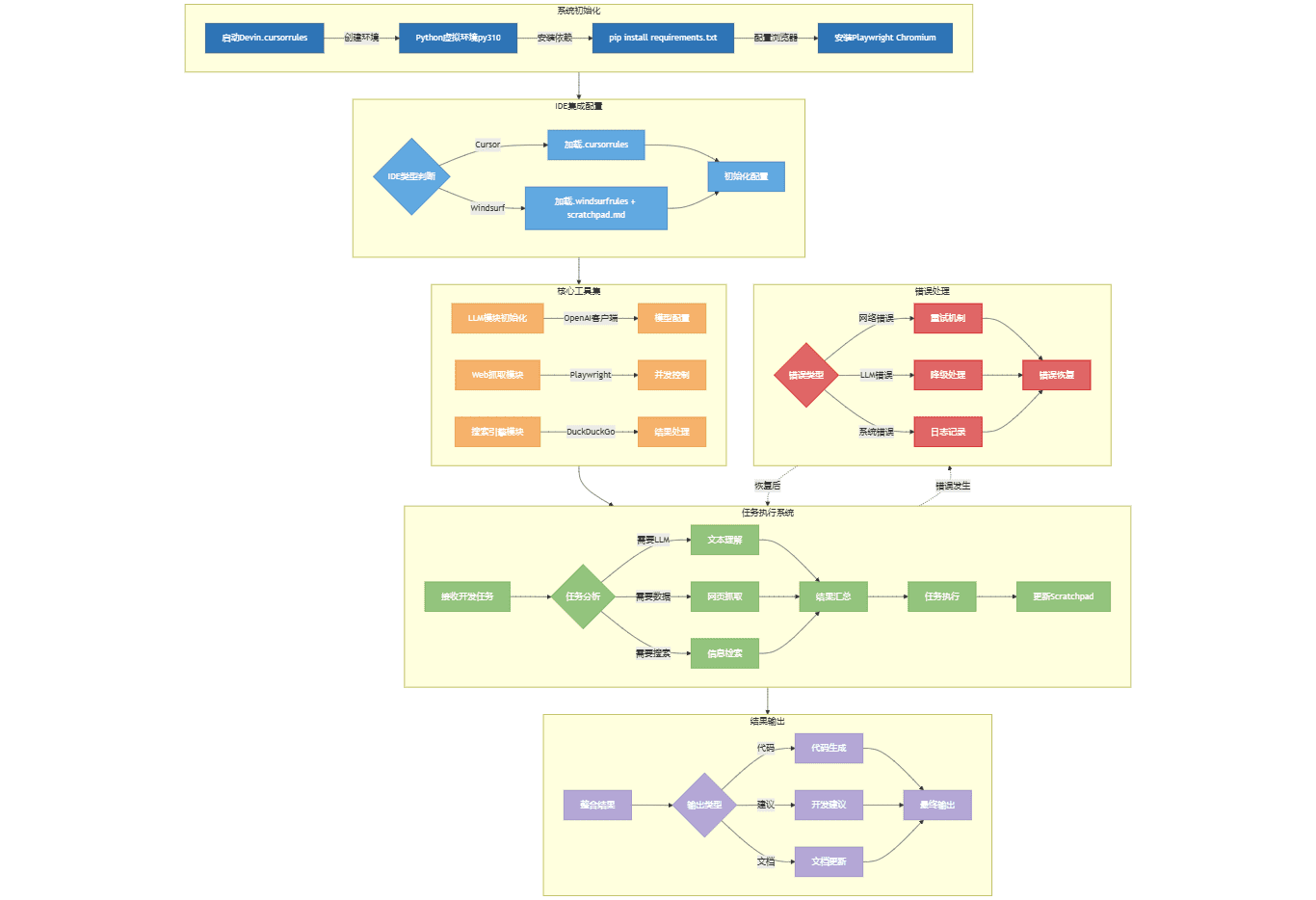

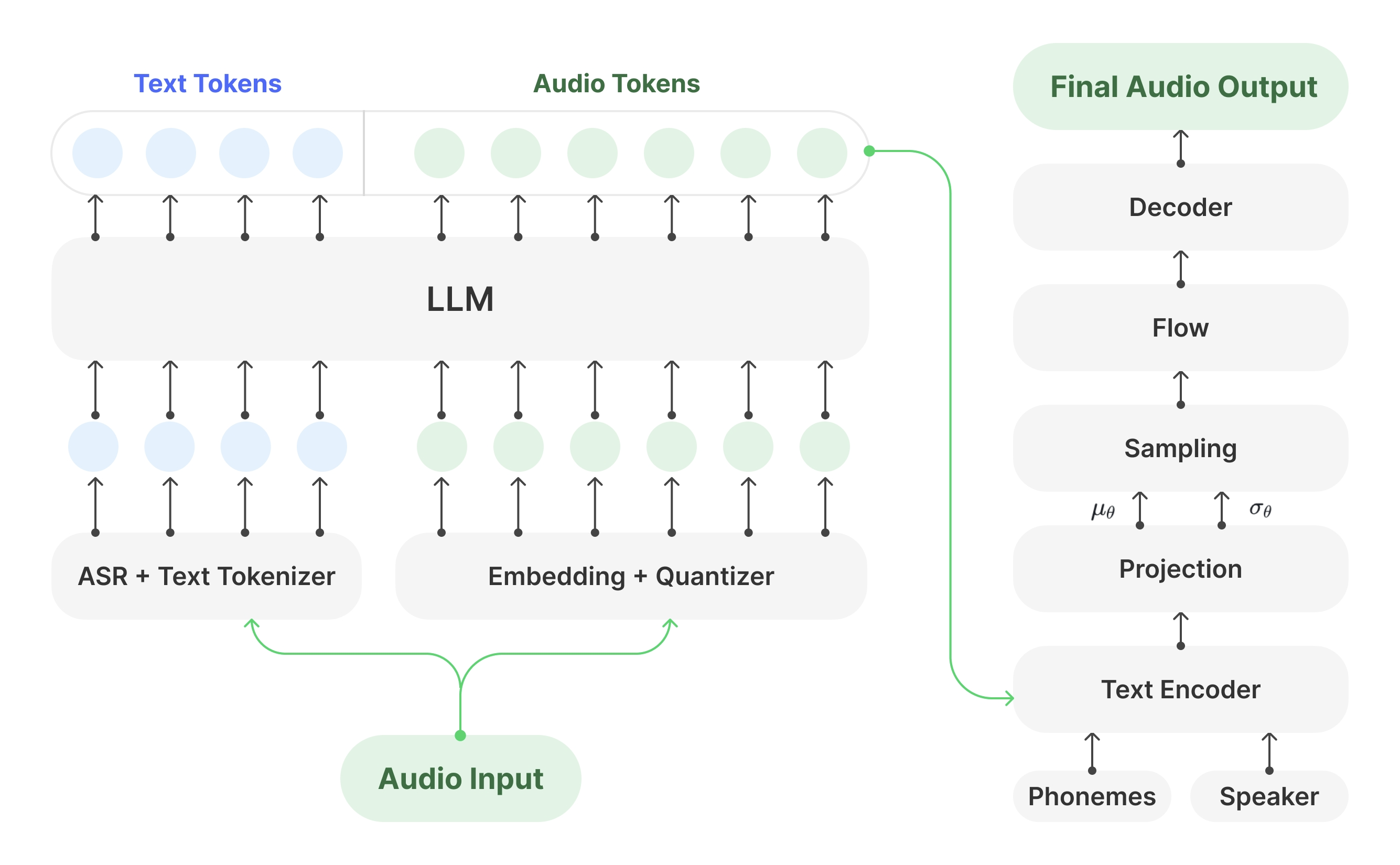

MiMo-Embodied is a cross-embodied foundation model open-sourced by Xiaomi Group, described as the world's first to successfully integrate embodied AI and autonomous driving. It addresses the problem of knowledge transfer between the two fields and models their tasks in a unified way. The model simultaneously supports the three core tasks of embodied AI (affordance prediction, task planning, and spatial understanding) and the three key tasks of autonomous driving (environmental perception, state prediction, and driving planning), providing intelligence support across the full range of scenarios. Its unified architecture brings together indoor operation tasks (e.g., robot navigation, object interaction) and outdoor driving tasks (e.g., environment perception, path planning), overcoming the restriction of traditional vision-language models (VLMs) to a single domain.

Features of MiMo-Embodied

- Cross-domain generalization: As the first model to integrate autonomous driving and embodied intelligence, MiMo-Embodied performs multimodal perception, reasoning, and decision-making in dynamic environments across a wide range of complex scenarios.

- Multimodal interaction: It accepts image, video, and text inputs and handles multimodal tasks such as visual question answering and instruction following, making human-computer interaction more natural.

- Strong reasoning: Fine-tuned with chain-of-thought (CoT) reasoning, the model can carry out complex logical reasoning and multi-step task planning, which suits both task execution in embodied intelligence and path planning in autonomous driving.

- High-precision environment perception: In autonomous driving scenarios, MiMo-Embodied perceives the traffic scene, identifies key elements, and predicts dynamic behavior to support driving safety.

- Spatial understanding and navigation: The model shows strong spatial understanding in indoor navigation, object localization, and spatial relationship reasoning, which makes it suitable for path planning in both robot operation and autonomous driving.

- Reinforcement learning optimization: Reinforcement learning fine-tuning improves the quality and reliability of the model's decisions in complex tasks, supporting efficient deployment in real-world environments.

- Open source and extensibility: MiMo-Embodied is fully open source, with code and models available on Hugging Face, giving researchers and developers a base for further customization and extension.
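The mixed image, video, and text inputs described above can be pictured as a single chat-style request. The following is a minimal sketch only: the message layout mirrors the role/content format commonly used by vision-language chat models, and it is an assumption that MiMo-Embodied accepts this exact structure. The function name `build_messages` and the file name `front_camera.jpg` are hypothetical.

```python
from typing import Any, Dict, List, Optional


def build_messages(
    question: str,
    image_path: Optional[str] = None,
    video_path: Optional[str] = None,
) -> List[Dict[str, Any]]:
    """Build a single-turn request that mixes optional visual inputs with text.

    The dictionary keys below are an assumed, illustrative layout,
    not a confirmed MiMo-Embodied API.
    """
    content: List[Dict[str, Any]] = []
    if image_path is not None:
        content.append({"type": "image", "image": image_path})
    if video_path is not None:
        content.append({"type": "video", "video": video_path})
    # The text instruction always comes last, after any visual inputs.
    content.append({"type": "text", "text": question})
    return [{"role": "user", "content": content}]


# Hypothetical driving question about a single camera frame.
messages = build_messages(
    "Which lane should the ego vehicle take to turn left?",
    image_path="front_camera.jpg",
)
```

The same helper covers an embodied query (for example, asking where to grasp an object in an image) by changing only the question and the file path, which is the practical meaning of a unified input interface across both domains.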

MiMo-Embodied's Core Advantages

- Cross-domain capability coverage: A unified architecture integrates indoor manipulation tasks (e.g., robot navigation, object interaction) and outdoor driving tasks (e.g., environment perception, path planning), overcoming the restriction of traditional vision-language models (VLMs) to a single domain.

- Bidirectional synergy: The model verifies that knowledge transfers in both directions between indoor interaction capability and road decision-making capability, offering new ideas for cross-scene intelligent integration.

- Reliable full-chain optimization: A four-stage progressive training strategy, covering embodied capability learning, autonomous driving capability learning, chain-of-thought (CoT) reasoning enhancement, and reinforcement learning (RL) fine-tuning, improves the reliability of the model when deployed in real-world environments.

- Multimodal interaction: The model supports multiple input types, such as visual and language inputs, and handles complex multimodal tasks such as visual question answering, instruction following, and scene understanding.

- Reinforcement learning optimization: Fine-tuning through reinforcement learning improves the model's decision-making ability and the reliability of task execution in complex environments.

- Efficient reasoning: Strong logical reasoning and multi-step task planning support complex task execution and decision-making in dynamic environments.

- Spatial understanding: The model excels at spatial relationship understanding, object localization, and navigation tasks, supporting precise operation in robotics and autonomous driving systems.
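The four-stage progressive training strategy mentioned in the list above can be sketched as a pipeline in which each stage starts from the checkpoint produced by the previous one. This is an illustrative sketch, not Xiaomi's training code: the stage names paraphrase the article, the order of the first two stages is an assumption, and `run_progressive_training` and `fake_train_stage` are hypothetical stand-ins for a real training loop.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Stage:
    name: str
    objective: str


# Stage names paraphrase the article; their exact order is an assumption.
STAGES: List[Stage] = [
    Stage("embodied", "learn embodied AI capabilities"),
    Stage("driving", "learn autonomous driving capabilities"),
    Stage("cot", "enhance chain-of-thought reasoning"),
    Stage("rl", "fine-tune with reinforcement learning"),
]


def run_progressive_training(
    initial_checkpoint: str,
    train_stage: Callable[[str, Stage], str],
) -> List[str]:
    """Run the stages in order, feeding each stage the previous checkpoint."""
    checkpoints = [initial_checkpoint]
    for stage in STAGES:
        checkpoints.append(train_stage(checkpoints[-1], stage))
    return checkpoints


def fake_train_stage(checkpoint: str, stage: Stage) -> str:
    """Hypothetical trainer: only records which stages a checkpoint has seen."""
    return f"{checkpoint}+{stage.name}"


history = run_progressive_training("base", fake_train_stage)
print(history[-1])
```

The point of the progressive design is that later stages, such as reasoning enhancement and RL fine-tuning, build on capabilities from both domains rather than being trained in isolation.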

Where are MiMo-Embodied's official resources?

- GitHub repository: https://github.com/XiaomiMiMo/MiMo-Embodied

- Hugging Face model library: https://huggingface.co/XiaomiMiMo/MiMo-Embodied-7B

- arXiv technical paper: https://arxiv.org/pdf/2511.16518

Who is MiMo-Embodied for?

- Autonomous driving developers: The model can be used to develop and optimize autonomous driving systems, supporting core functions such as environment perception and decision planning.

- Robotics engineers: It suits robot navigation, manipulation, and interaction tasks, helping robots act autonomously in complex environments.

- Artificial intelligence researchers: As an open-source model, it provides an experimental platform for studying multimodal interaction, embodied intelligence, and autonomous driving.

- Intelligent transportation system developers: It can be applied to traffic monitoring, intelligent dispatching, and similar scenarios to make transportation systems more intelligent.

- Smart home and industrial automation developers: It supports complex task planning and human-machine collaboration in smart home and industrial automation scenarios.

- Universities and research institutions: It provides open-source resources for teaching and research in related fields and promotes academic exchange and technology development.

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
