Memary: an open-source project that uses knowledge graphs to enhance long-term memory for agents

General Introduction

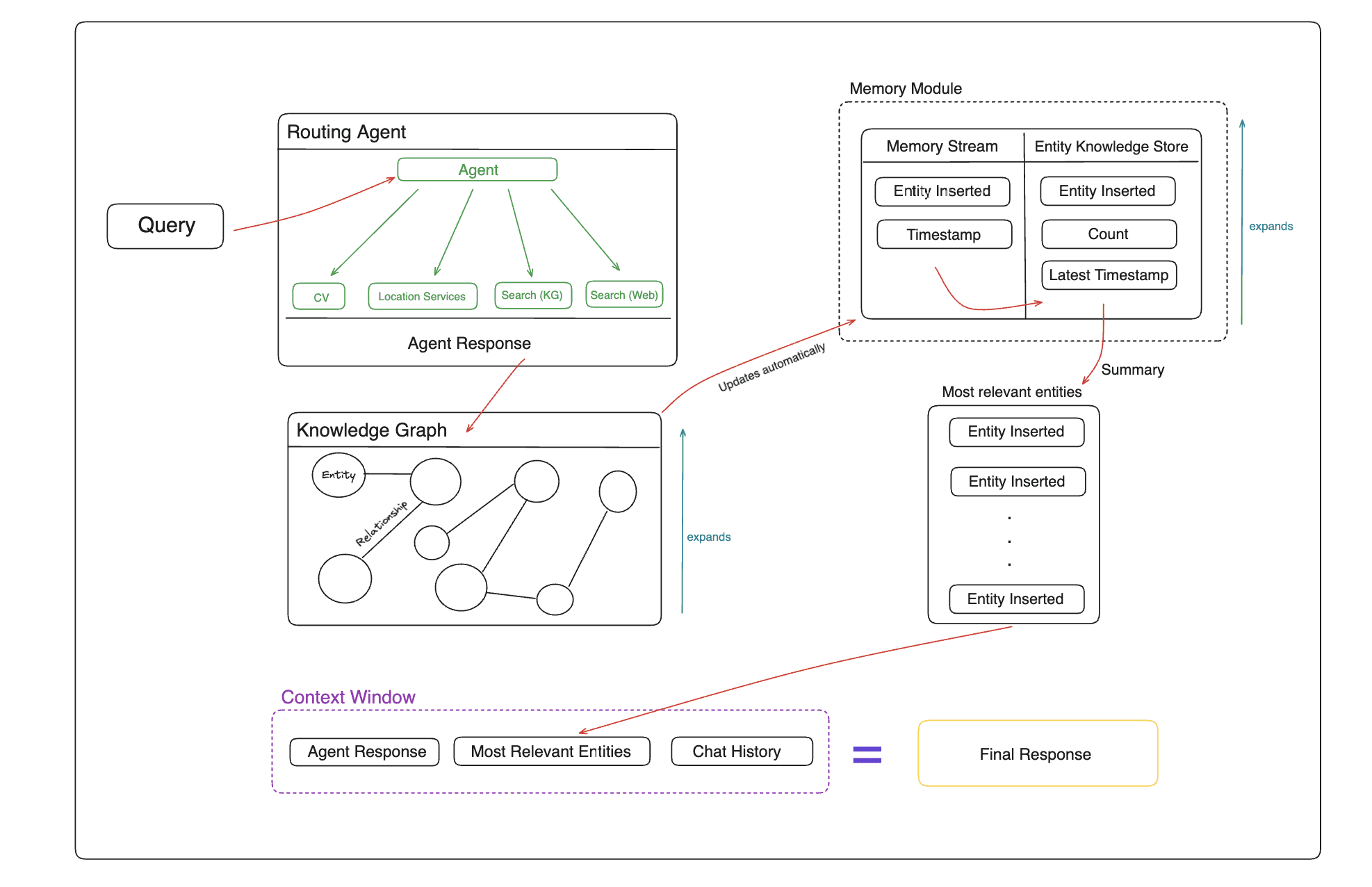

Memary is an open-source project that provides long-term memory management for autonomous agents. It uses knowledge graphs and dedicated memory modules to help agents work beyond the limits of a traditional context window. Memary generates memories automatically: it updates them as the agent interacts and displays them in a unified dashboard. The system supports multiple model configurations, including locally run Llama and LLaVA models as well as cloud-hosted GPT models. Memary also supports multiple graphs, so developers can create a separate agent instance for each user and manage memory per user.

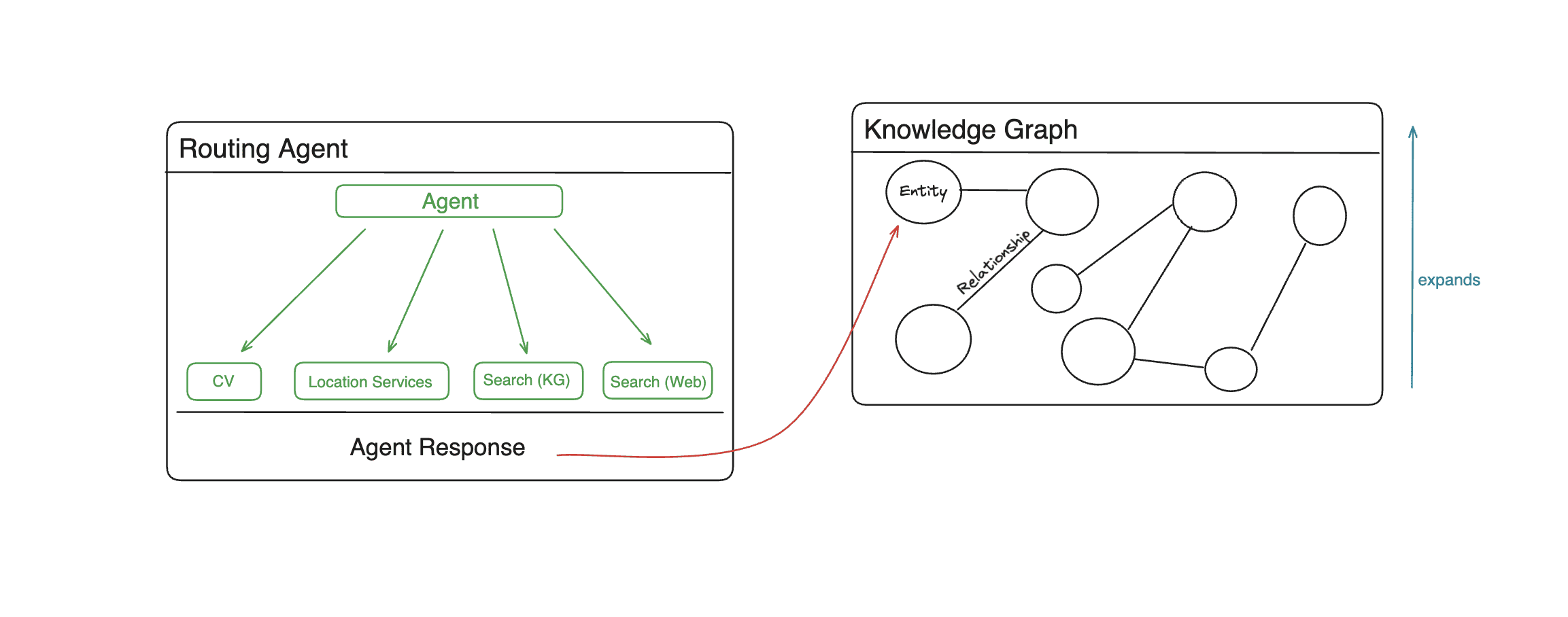

Memary Overall Architecture

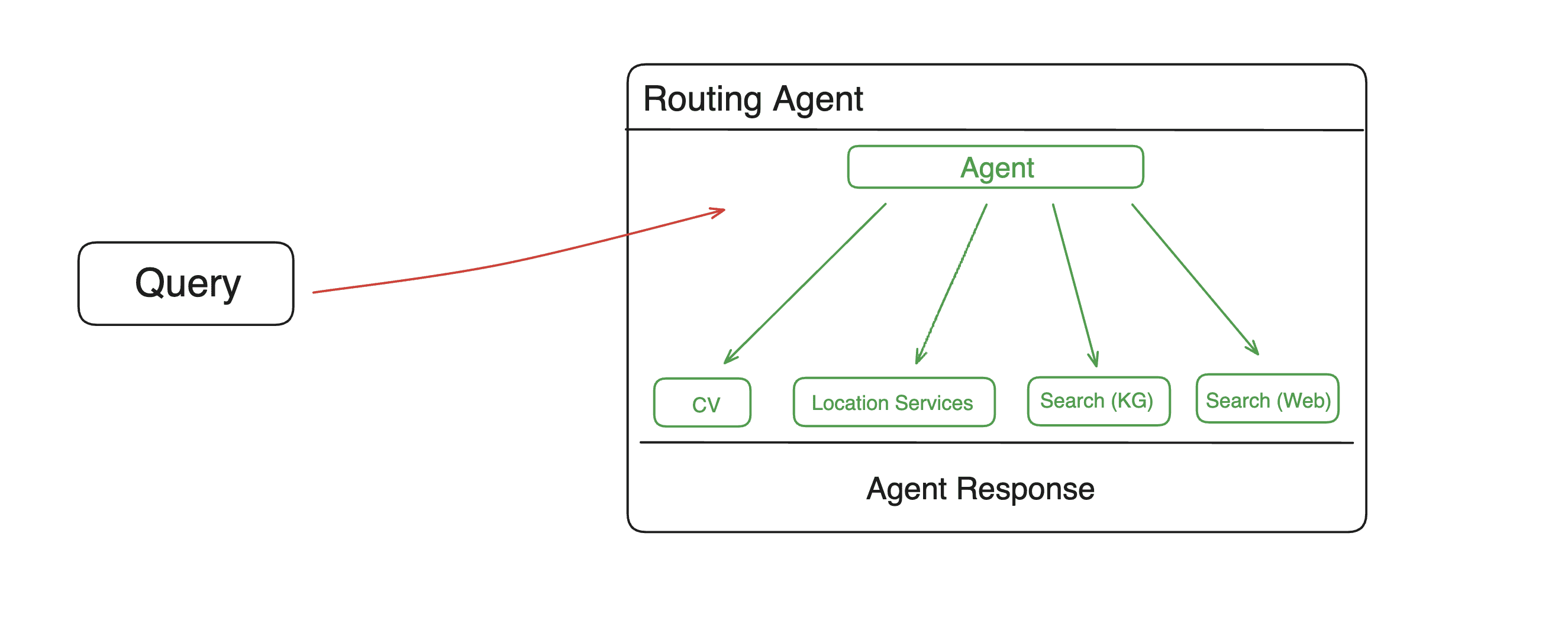

Memary Agent

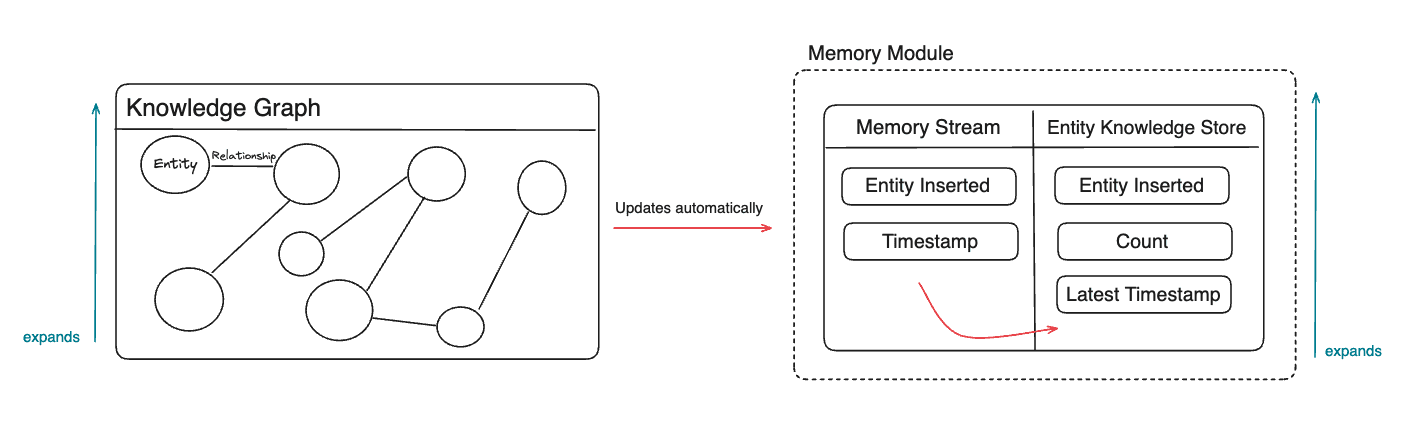

Memary Knowledge Graphs

Memory Modules

Feature List

- Automated memory generation and updating

- Knowledge graph storage and retrieval

- Memory Stream that tracks entities and their timestamps

- Entity Knowledge Store (EKS) that tracks how often and how recently each entity is referenced

- Recursive retrieval to optimize knowledge graph search

- Multi-hop reasoning for complex queries

- Custom tool extensions

- Multi-graph management for multiple agents

- Memory compression and context window optimization

- Topic extraction and entity categorization

- Timeline analysis

Usage Guide

1. Installation and configuration

1.1 Basic requirements

- Python version: <= 3.11.9

- A virtual environment is recommended for installation
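The version ceiling above can be checked before installing. The following is a minimal sketch, assuming only the `<= 3.11.9` bound stated in this article; the helper name `is_supported` is made up for illustration and is not part of Memary.

```python
import sys
from typing import Optional, Tuple

# Upper bound taken from the requirement stated in this article.
MAX_SUPPORTED = (3, 11, 9)


def is_supported(version: Optional[Tuple[int, int, int]] = None) -> bool:
    """Return True if (major, minor, micro) is at or below 3.11.9.

    Hypothetical helper for illustration; defaults to the running interpreter.
    """
    if version is None:
        version = tuple(sys.version_info[:3])
    return tuple(version) <= MAX_SUPPORTED


if __name__ == "__main__":
    current = ".".join(str(part) for part in sys.version_info[:3])
    status = "supported" if is_supported() else "newer than 3.11.9"
    print(f"Python {current}: {status}")
```

Running the script inside the virtual environment confirms that the environment was created with a suitable interpreter.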

1.2 Installation method

a) Install with pip:

    pip install memary

b) Install locally: create and activate a virtual environment, then install the dependencies:

    pip install -r requirements.txt

1.3 Model configuration

Memary supports two modes of operation:

- Local mode (default): models run locally through Ollama

  - LLM: Llama 3 8B/40B (recommended)

  - Vision model: LLaVA (recommended)

- Cloud mode:

  - LLM: gpt-3.5-turbo

  - Vision model: gpt-4-vision-preview
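The two modes can be pictured as a small lookup table. This is only an illustrative sketch: the `ModelChoice` class, the `select_models` function, and the `"local"`/`"cloud"` keys are assumptions made here, and the local tags `llama3` and `llava` are assumed Ollama model names rather than values confirmed by Memary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelChoice:
    """Pair of model names for one mode (hypothetical structure)."""
    llm: str
    vision: str


# Cloud names come from the list above; the local tags are assumed Ollama names.
MODEL_DEFAULTS = {
    "local": ModelChoice(llm="llama3", vision="llava"),
    "cloud": ModelChoice(llm="gpt-3.5-turbo", vision="gpt-4-vision-preview"),
}


def select_models(mode: str = "local") -> ModelChoice:
    """Return the model pair for a mode; local is the default, as in the article."""
    try:
        return MODEL_DEFAULTS[mode]
    except KeyError:
        raise ValueError(f"Unknown mode {mode!r}; expected one of {sorted(MODEL_DEFAULTS)}")


choice = select_models("cloud")
print(choice.llm, choice.vision)
```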

2. Environment setup

2.1 Configuring the .env file

    OPENAI_API_KEY="YOUR_API_KEY"
    PERPLEXITY_API_KEY="YOUR_API_KEY"
    GOOGLEMAPS_API_KEY="YOUR_API_KEY"
    ALPHA_VANTAGE_API_KEY="YOUR_API_KEY"

    # Database configuration (choose one of the two):
    FALKORDB_URL="falkor://[[username]:[password]]@[falkor_host_url]:port"
    # or
    NEO4J_PW="YOUR_NEO4J_PW"
    NEO4J_URL="YOUR_NEO4J_URL"
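Because the database settings are an either/or choice, it can help to check them before launching anything. The sketch below reads the variable names shown above from the environment. The function `pick_graph_backend` is hypothetical, and treating "both configured" as an error is a choice made for this sketch, not documented Memary behavior.

```python
import os
from typing import Mapping, Optional


def pick_graph_backend(env: Optional[Mapping[str, str]] = None) -> str:
    """Return "falkordb" or "neo4j" based on which variables are set.

    Hypothetical helper; Memary itself may resolve this differently.
    """
    env = os.environ if env is None else env
    has_falkor = bool(env.get("FALKORDB_URL"))
    # Neo4j needs both the URL and the password to count as configured.
    has_neo4j = bool(env.get("NEO4J_URL")) and bool(env.get("NEO4J_PW"))
    if has_falkor and has_neo4j:
        raise ValueError("Set either FALKORDB_URL or the NEO4J_* pair, not both")
    if has_falkor:
        return "falkordb"
    if has_neo4j:
        return "neo4j"
    raise ValueError("No graph database configured: set FALKORDB_URL, or NEO4J_URL and NEO4J_PW")


# Example with an explicit mapping instead of the real environment.
print(pick_graph_backend({"FALKORDB_URL": "falkor://localhost:6379"}))
```

Passing a plain dictionary keeps the check easy to test; calling it with no argument inspects the real process environment.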

2.2 Updating the user configuration

- Edit streamlit_app/data/user_persona.txt to set the user's characteristics

- Optional: modify streamlit_app/data/system_persona.txt to adjust the system's characteristics

3. Basic usage

3.1 Launching the application

    cd streamlit_app
    streamlit run app.py

3.2 Code Examples

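    from memary.agent.chat_agent import ChatAgent

    # Initialize the chat agent. The *_json and *_txt arguments are not defined
    # in this snippet; they are presumably paths to the corresponding data
    # files and must be set beforehand.
    chat_agent = ChatAgent(
        "Personal Agent",
        memory_stream_json,
        entity_knowledge_store_json,
        system_persona_txt,
        user_persona_txt,
        past_chat_json,
    )

    # Add a custom tool
    def multiply(a: int, b: int) -> int:
        """Multiplication tool."""
        return a * b

    chat_agent.add_tool({"multiply": multiply})

    # Remove the tool
    chat_agent.remove_tool("multiply")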
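To make the add/remove pattern concrete without a running agent, here is a toy registry that mirrors the two call shapes used above: a dictionary of name to function for adding, and a name for removing. The `ToolRegistry` class, its `call` and `names` methods, and its behavior are assumptions for illustration; this is not how Memary implements `ChatAgent`.

```python
from typing import Any, Callable, Dict, List


class ToolRegistry:
    """Hypothetical stand-in mirroring the add_tool / remove_tool call shapes."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[..., Any]] = {}

    def add_tool(self, tools: Dict[str, Callable[..., Any]]) -> None:
        # Accept a {name: function} mapping, as in the example above.
        for name, fn in tools.items():
            if not callable(fn):
                raise TypeError(f"Tool {name!r} is not callable")
            self._tools[name] = fn

    def remove_tool(self, name: str) -> None:
        # Removing an unknown name is a no-op in this sketch.
        self._tools.pop(name, None)

    def call(self, name: str, *args: Any, **kwargs: Any) -> Any:
        return self._tools[name](*args, **kwargs)

    def names(self) -> List[str]:
        return sorted(self._tools)


def multiply(a: int, b: int) -> int:
    """Multiplication tool."""
    return a * b


registry = ToolRegistry()
registry.add_tool({"multiply": multiply})
print(registry.call("multiply", 3, 4))
registry.remove_tool("multiply")
print(registry.names())
```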

4. Multi-agent configuration

Applies when using the FalkorDB database:

    from memary.agent.chat_agent import ChatAgent

    # Personal agent for user A
    chat_agent_user_a = ChatAgent(
        "Personal Agent",
        memory_stream_json_user_a,
        entity_knowledge_store_json_user_a,
        system_persona_txt_user_a,
        user_persona_txt_user_a,
        past_chat_json_user_a,
        user_id='user_a_id'
    )

    # Personal agent for user B
    chat_agent_user_b = ChatAgent(
        "Personal Agent",
        memory_stream_json_user_b,
        entity_knowledge_store_json_user_b,
        system_persona_txt_user_b,
        user_persona_txt_user_b,
        past_chat_json_user_b,
        user_id='user_b_id'
    )
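Each user in the example above has a separate set of data files. One way to keep them apart is to derive the paths from the user ID. The sketch below assumes a `data/<user_id>/` directory layout and the file names shown; both are illustrative choices made here, not something Memary prescribes.

```python
from pathlib import PurePosixPath
from typing import Dict


def agent_args_for(user_id: str, data_root: str = "data") -> Dict[str, str]:
    """Build per-user file paths (hypothetical layout: <data_root>/<user_id>/...)."""
    base = PurePosixPath(data_root) / user_id
    return {
        "memory_stream_json": str(base / "memory_stream.json"),
        "entity_knowledge_store_json": str(base / "entity_knowledge_store.json"),
        "system_persona_txt": str(base / "system_persona.txt"),
        "user_persona_txt": str(base / "user_persona.txt"),
        "past_chat_json": str(base / "past_chat.json"),
        "user_id": user_id,
    }


args_a = agent_args_for("user_a_id")
args_b = agent_args_for("user_b_id")
print(args_a["memory_stream_json"])
print(args_b["memory_stream_json"])

# With Memary installed, the values could then be passed in the order shown above:
# ChatAgent("Personal Agent", args_a["memory_stream_json"],
#           args_a["entity_knowledge_store_json"], args_a["system_persona_txt"],
#           args_a["user_persona_txt"], args_a["past_chat_json"],
#           user_id=args_a["user_id"])
```

Keeping one directory per user means no two agents ever share a memory file.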

5. Memory management features

5.1 Memory Stream

- Automatically captures all entities and their timestamps

- Supports timeline analysis

- Supports topic extraction
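The idea behind the Memory Stream can be sketched as an append-only list of (entity, timestamp) records with a time-range query on top. The classes `MemoryItem` and `ToyMemoryStream` below are simplified stand-ins written for this article; they do not reproduce Memary's own classes or storage format.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass(frozen=True)
class MemoryItem:
    """One mention of an entity at a point in time (illustrative structure)."""
    entity: str
    date: datetime


class ToyMemoryStream:
    """Hypothetical, in-memory version of an entity/timestamp stream."""

    def __init__(self) -> None:
        self.items: List[MemoryItem] = []

    def add(self, entity: str, date: datetime) -> None:
        self.items.append(MemoryItem(entity, date))

    def between(self, start: datetime, end: datetime) -> List[MemoryItem]:
        """Timeline query: items with start <= date <= end, oldest first."""
        selected = [item for item in self.items if start <= item.date <= end]
        return sorted(selected, key=lambda item: item.date)


stream = ToyMemoryStream()
stream.add("Tokyo", datetime(2024, 1, 3))
stream.add("sushi", datetime(2024, 1, 1))
stream.add("hiking", datetime(2024, 2, 10))

for item in stream.between(datetime(2024, 1, 1), datetime(2024, 1, 31)):
    print(item.date.date(), item.entity)
```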

5.2 Entity Knowledge Store

- Tracks how often and how recently each entity is referenced

- Ranks entities by relevance

- Categorizes entities

- Analyzes how entities change over time
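Frequency and recency tracking can be illustrated by folding a list of (entity, timestamp) events into one record per entity and then sorting. The function names, the record fields `count` and `last_seen`, and the ranking rule (frequency first, recency as tie-breaker) are assumptions made for this sketch; Memary's actual ranking may differ.

```python
from datetime import datetime
from typing import Dict, Iterable, List, Tuple


def build_entity_store(events: Iterable[Tuple[str, datetime]]) -> Dict[str, dict]:
    """Aggregate events into {entity: {"count": n, "last_seen": latest date}}."""
    store: Dict[str, dict] = {}
    for entity, date in events:
        entry = store.setdefault(entity, {"count": 0, "last_seen": date})
        entry["count"] += 1
        entry["last_seen"] = max(entry["last_seen"], date)
    return store


def rank_entities(store: Dict[str, dict]) -> List[str]:
    """Order entities by count, then by most recent mention (both descending)."""
    return sorted(
        store,
        key=lambda name: (store[name]["count"], store[name]["last_seen"]),
        reverse=True,
    )


events = [
    ("Tokyo", datetime(2024, 1, 1)),
    ("sushi", datetime(2024, 1, 2)),
    ("Tokyo", datetime(2024, 1, 3)),
    ("hiking", datetime(2024, 1, 4)),
]
store = build_entity_store(events)
print(rank_entities(store))
```

In this example "Tokyo" ranks first because it is mentioned twice, and "hiking" precedes "sushi" because it was mentioned more recently.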

5.3 Knowledge Graph Features

- Recursive retrieval optimization

- Multi-hop reasoning support

- Automatic updates
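Multi-hop reasoning means following relations outward from an entity for more than one step. The sketch below shows the general idea on a small in-memory graph, using a breadth-first traversal rather than Memary's own retrieval code, which runs against a graph database. The graph layout, the `multi_hop` function, and the sample data are all made up for illustration.

```python
from collections import deque
from typing import Dict, List, Set, Tuple

Triple = Tuple[str, str, str]
# Adjacency list: node -> list of (relation, neighbor) pairs.
Graph = Dict[str, List[Tuple[str, str]]]


def multi_hop(graph: Graph, start: str, max_hops: int = 2) -> List[Triple]:
    """Collect (subject, relation, object) triples within max_hops of start."""
    seen: Set[str] = {start}
    queue = deque([(start, 0)])
    triples: List[Triple] = []
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue  # Do not expand nodes that are already max_hops away.
        for relation, neighbor in graph.get(node, []):
            triples.append((node, relation, neighbor))
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, depth + 1))
    return triples


graph: Graph = {
    "Alice": [("lives_in", "Tokyo")],
    "Tokyo": [("located_in", "Japan")],
    "Japan": [("part_of", "Asia")],
}

for triple in multi_hop(graph, "Alice", max_hops=2):
    print(triple)
```

With `max_hops=2`, a question about Alice can draw on the fact that Tokyo is in Japan, which a single-hop lookup would miss.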

© Copyright notice

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
