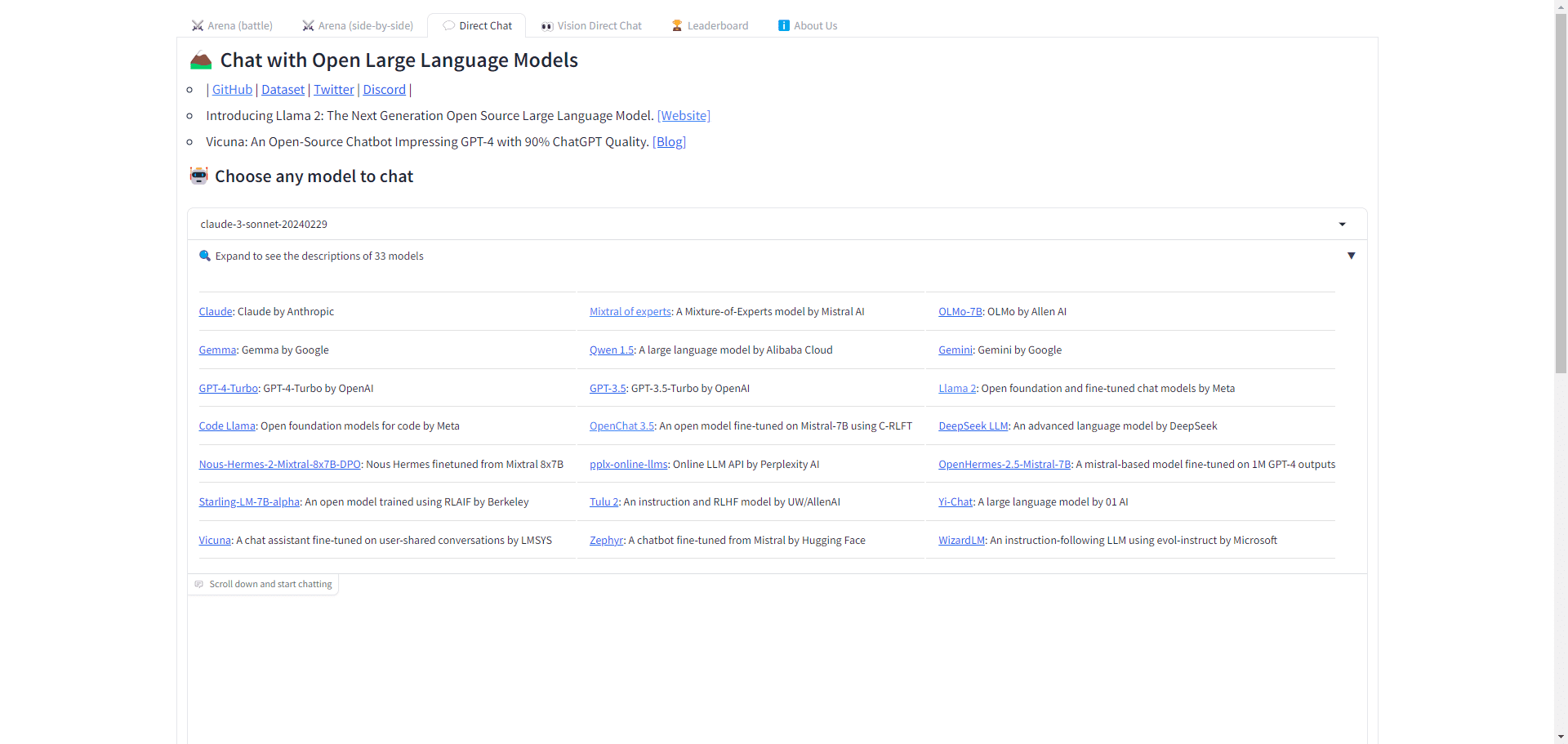

Chatbot Arena (LMSYS): an online competitive platform for benchmarking large language models and comparing performance across multiple models

General Introduction

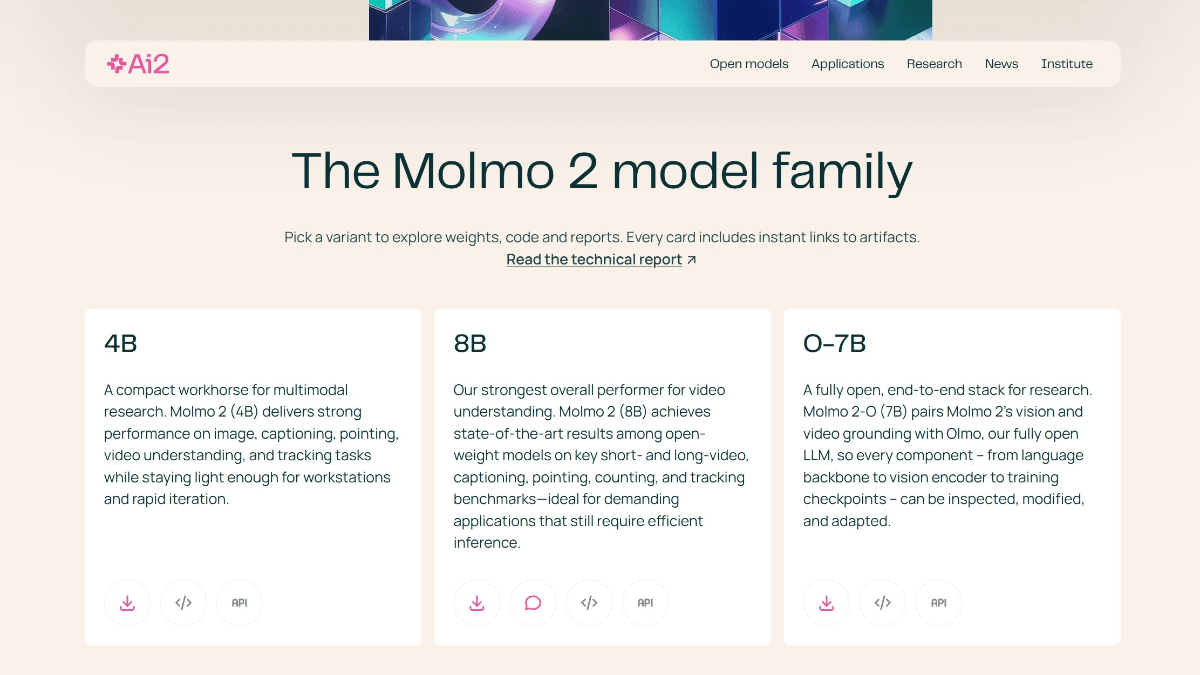

LMSYS Org (Large Model Systems Organization) is an open research organization co-founded by students and faculty at the University of California, Berkeley, in collaboration with the University of California, San Diego, and Carnegie Mellon University. Its goal is to make large models accessible to everyone by jointly developing open models, datasets, systems, and evaluation tools.

Chatbot Arena is an online platform for benchmarking and comparing the performance of different Large Language Models (LLMs). Researchers created the platform to give users an anonymous, randomized environment in which to interact with and evaluate various AI chatbots side by side. Through quality, performance, and price analysis, Chatbot Arena helps users find the AI solution that best fits their needs.

Head-to-head model comparison: https://lmarena.ai/

LMSYS Projects

- Vicuna: a chatbot that LMSYS reports as reaching about 90% of ChatGPT quality (as judged by GPT-4), available in 7B/13B/33B sizes.

- Chatbot Arena: scalable, gamified evaluation of LLMs through crowdsourced pairwise votes and the Elo rating system.

- SGLang: an efficient interface and runtime for complex LLM programs.

- LMSYS-Chat-1M: a large-scale dataset of real-world LLM conversations.

- FastChat: an open platform for training, serving, and evaluating LLM-based chatbots.

- MT-Bench: a challenging set of multi-turn, open-ended questions for evaluating chatbots.
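To make the Elo idea mentioned for Chatbot Arena more concrete, here is a minimal sketch of how pairwise votes could be turned into ratings. It is an illustration of the textbook Elo update only, not the platform's actual pipeline. The function names, the K-factor of 32, the starting rating of 1000, and the model names are all assumptions chosen for the example.

```python
from typing import Dict, Iterable, Tuple

# One battle: (model_a, model_b, winner), where winner is "a", "b", or "tie".
Battle = Tuple[str, str, str]


def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the standard Elo logistic model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def rate_battles(
    battles: Iterable[Battle],
    k: float = 32.0,         # assumed K-factor, not taken from the article
    initial: float = 1000.0  # assumed starting rating for unseen models
) -> Dict[str, float]:
    """Apply sequential Elo updates over a stream of pairwise votes."""
    ratings: Dict[str, float] = {}
    outcome_for_a = {"a": 1.0, "b": 0.0, "tie": 0.5}
    for model_a, model_b, winner in battles:
        ra = ratings.get(model_a, initial)
        rb = ratings.get(model_b, initial)
        ea = expected_score(ra, rb)
        sa = outcome_for_a[winner]
        # The winner gains what the loser gives up, scaled by how surprising the result was.
        ratings[model_a] = ra + k * (sa - ea)
        ratings[model_b] = rb + k * ((1.0 - sa) - (1.0 - ea))
    return ratings


if __name__ == "__main__":
    # Hypothetical votes between placeholder models.
    votes = [
        ("model-x", "model-y", "a"),
        ("model-y", "model-z", "tie"),
        ("model-x", "model-z", "b"),
    ]
    for name, rating in sorted(rate_battles(votes).items(), key=lambda kv: -kv[1]):
        print(f"{name}: {rating:.1f}")
```

Because each update moves the two ratings by equal and opposite amounts, the total rating across all models stays constant. Sequential Elo also depends on the order of the votes, which is one reason a production leaderboard may prefer a different estimation method.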

Usage Guide

- Model comparison:

  - Visit the Model Comparison page.

  - Select the models you want to compare and click the "Add to Compare" button.

  - View the comparison results, including quality, performance, price, and other metrics.

- Quality testing:

  - View the quality test results on the Model Details page.

  - Check the specific scores and rankings for each test dimension.

- Price analysis:

  - On the Model Details page, view the price analysis.

  - Compare the prices of different models to find the most cost-effective option.

- Performance evaluation:

  - On the Model Details page, view the performance evaluation results.

  - Review the model's output speed, latency, and other performance metrics.

- Context window analysis:

  - On the Model Details page, view the context window analysis.

  - Check the model's context window size to judge its fit for different application scenarios.

By following these steps, users can build a comprehensive picture of the performance and characteristics of different large language models and choose the one that best suits their needs.

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
