llms.txt Generator: Quickly crawl website content and generate text datasets for LLM training.

General Introduction

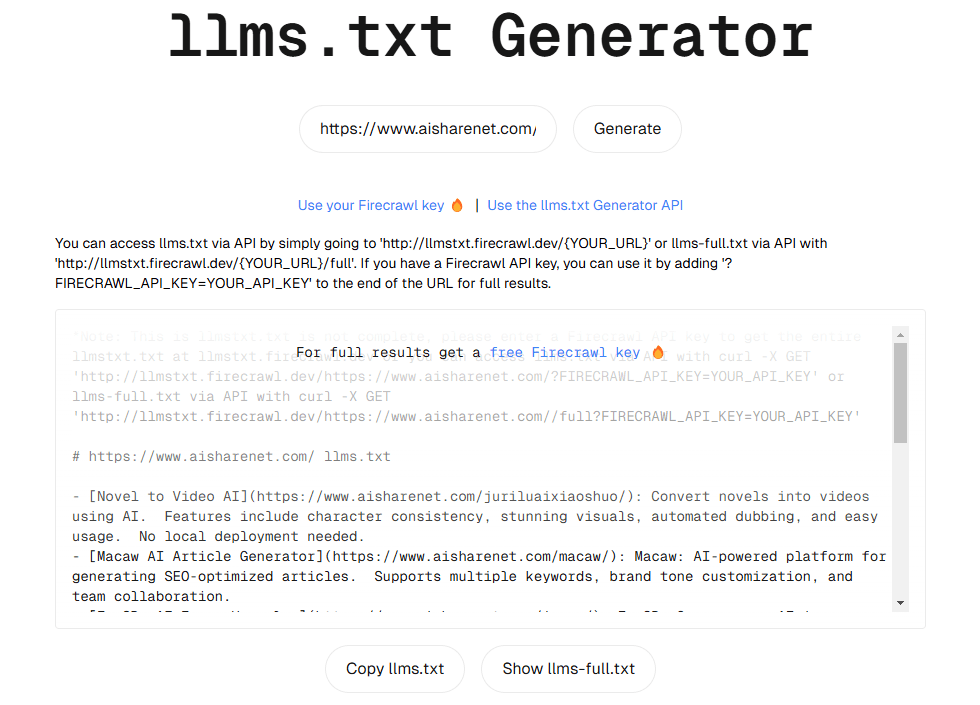

llmstxt-generator is a web content extraction and consolidation tool for preparing high-quality text datasets for Large Language Model (LLM) training and inference. Developed by Mendable AI, it uses Firecrawl (@firecrawl_dev) for web crawling and GPT-4o-mini for text processing. Given a website, it automatically crawls the site's content and consolidates it into a single standardized text file. The tool offers both a web interface and an API, making it easy to generate datasets; it is especially useful for AI researchers and developers who need to collect text from websites in bulk.

Function List

- Automatically crawls all relevant pages of the target website

- Offers two output formats: a standard version (llms.txt) and a full version (llms-full.txt)

- Provides an intuitive web interface

- Provides a RESTful API for programmatic access

- Handles GitHub repository content specially

- Extracts and processes web content intelligently

- Accepts your own Firecrawl API key to raise crawl limits

- Caches results to speed up repeated requests

- Converts content to multiple formats (e.g., Markdown)

Usage Guide

1. Web interface usage

- Visit the official website: https://llmstxt.firecrawl.dev

- Enter the URL of the target website in the input box

- Click the "Generate" button to start generation

- Wait for processing to finish; the generated text file is then available

2. API usage

Basic API calls:

GET https://llmstxt.firecrawl.dev/[YOUR_URL_HERE]

- Standard version (llms.txt): send a GET request to the URL above

- Full version (llms-full.txt): append "/full" to the end of the URL
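The two request forms above can be sketched in Python as follows. This is a minimal sketch, assuming the target URL is appended to the path verbatim exactly as in the GET line above; the helper name `build_llmstxt_url` is hypothetical and not part of the tool, and stripping a trailing slash is an added assumption to avoid a doubled "//full".

```python
# Base endpoint taken from the GET example above.
BASE = "https://llmstxt.firecrawl.dev"


def build_llmstxt_url(target_url: str, full: bool = False) -> str:
    """Build the request URL for llms.txt (default) or llms-full.txt (full=True).

    Hypothetical helper: assumes the target URL is appended to the path as-is.
    """
    # Assumption: drop a trailing slash so "/full" is not preceded by "//".
    url = f"{BASE}/{target_url.rstrip('/')}"
    if full:
        # The full version is requested by appending "/full" to the URL.
        url += "/full"
    return url


print(build_llmstxt_url("https://example.com"))
# → https://llmstxt.firecrawl.dev/https://example.com
print(build_llmstxt_url("https://example.com", full=True))
# → https://llmstxt.firecrawl.dev/https://example.com/full
```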

Using a custom API key:

If you need a higher crawl limit, you can pass your own Firecrawl API key:

GET https://llmstxt.firecrawl.dev/[YOUR_URL_HERE]?FIRECRAWL_API_KEY=YOUR_API_KEY
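A request carrying a custom key might be built with Python's standard library as sketched below. `make_request` and `fetch_text` are hypothetical helper names; only the `FIRECRAWL_API_KEY` query parameter comes from the example above. The request is constructed separately from sending it so the URL can be inspected offline, and the long timeout in `fetch_text` is an arbitrary choice reflecting that generation can take several minutes.

```python
import urllib.parse
import urllib.request

BASE = "https://llmstxt.firecrawl.dev"


def make_request(target_url: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a GET request that passes a custom Firecrawl key.

    Hypothetical helper: the query parameter name FIRECRAWL_API_KEY is taken
    from the example URL above; urlencode() escapes any special characters.
    """
    query = urllib.parse.urlencode({"FIRECRAWL_API_KEY": api_key})
    return urllib.request.Request(f"{BASE}/{target_url}?{query}", method="GET")


def fetch_text(req: urllib.request.Request, timeout: float = 600.0) -> str:
    """Send the request and decode the body, assuming UTF-8 (needs network)."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


req = make_request("https://example.com", "YOUR_API_KEY")
print(req.full_url)
# → https://llmstxt.firecrawl.dev/https://example.com?FIRECRAWL_API_KEY=YOUR_API_KEY
```

Because the key travels in the query string, it can end up in proxy or server logs, so treat such URLs as sensitive.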

3. Guidelines for local deployment

To run the tool locally, follow these steps:

- Environment configuration:

Create a .env file and set the following required variables:

FIRECRAWL_API_KEY=your_firecrawl_key

SUPABASE_URL=your_supabase_url

SUPABASE_KEY=your_supabase_key

OPENAI_API_KEY=your_openai_key

- Install and run:

npm install

npm run dev
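Before running npm run dev, it can help to confirm that the .env file defines every variable listed above. The Python sketch below is a hypothetical pre-flight check, not part of the project: `parse_env` and `missing_keys` are illustrative names, and the parser handles only plain KEY=VALUE lines (no quoting, escaping, or variable expansion).

```python
from __future__ import annotations

# Variable names from the .env listing above.
REQUIRED_KEYS = ("FIRECRAWL_API_KEY", "SUPABASE_URL", "SUPABASE_KEY", "OPENAI_API_KEY")


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines; blank lines and '#' comments are skipped.

    Minimal sketch: no quoting, escaping, or variable expansion is handled.
    """
    env: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        # Split on the first '=' only, so values may themselves contain '='.
        key, value = line.split("=", 1)
        env[key.strip()] = value.strip()
    return env


def missing_keys(env: dict[str, str]) -> list[str]:
    """Return the required variables that are absent or empty."""
    return [k for k in REQUIRED_KEYS if not env.get(k)]


sample = "FIRECRAWL_API_KEY=fc-123\nSUPABASE_URL=https://xyz.supabase.co\nOPENAI_API_KEY=sk-abc\n"
print(missing_keys(parse_env(sample)))
# → ['SUPABASE_KEY']
```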

4. Usage notes

- Processing time: generation can take several minutes, because the site must be crawled and its content processed by the LLM.

- Free usage limit: without a custom API key, at most 10 pages per site are crawled.

- Custom API key: with your own Firecrawl API key, the limit rises to 100 pages.

- Caching: results are cached, so repeated requests for the same URL within 3 days return the cached content directly.

- GitHub repository support: GitHub repository URLs receive special processing to extract repository-related content.
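The page limits and the 3-day cache described above can be modeled in a few lines. This is a hypothetical Python sketch, not the service's code: it assumes the cache is keyed by the target URL alone and uses an in-memory dict as a stand-in for whatever store the service actually uses (the .env file lists Supabase credentials, which suggests results are persisted there). `TTLCache`, `page_limit`, and `get_or_generate` are illustrative names, and `generate` stands in for the crawl-and-process step.

```python
from __future__ import annotations

import time
from typing import Callable, Optional

CACHE_TTL_SECONDS = 3 * 24 * 60 * 60  # 3 days, per the note above
FREE_PAGE_LIMIT = 10                  # without a custom API key
CUSTOM_KEY_PAGE_LIMIT = 100           # with a custom Firecrawl API key


def page_limit(api_key: Optional[str]) -> int:
    """Pick the crawl cap depending on whether a custom key was supplied."""
    return CUSTOM_KEY_PAGE_LIMIT if api_key else FREE_PAGE_LIMIT


class TTLCache:
    """Hypothetical in-memory cache whose entries expire after `ttl` seconds."""

    def __init__(self, ttl: float = CACHE_TTL_SECONDS,
                 clock: Callable[[], float] = time.time) -> None:
        self.ttl = ttl
        self.clock = clock  # injectable so expiry can be tested without waiting
        self._store: dict[str, tuple[float, str]] = {}

    def get(self, url: str) -> Optional[str]:
        entry = self._store.get(url)
        if entry is None:
            return None
        stored_at, text = entry
        if self.clock() - stored_at >= self.ttl:
            # Expired: drop the entry so the caller regenerates it.
            del self._store[url]
            return None
        return text

    def put(self, url: str, text: str) -> None:
        self._store[url] = (self.clock(), text)


def get_or_generate(cache: TTLCache, url: str,
                    generate: Callable[[str, int], str],
                    api_key: Optional[str] = None) -> str:
    """Return cached text for `url`, or crawl it with the appropriate page cap."""
    cached = cache.get(url)
    if cached is not None:
        return cached
    text = generate(url, page_limit(api_key))
    cache.put(url, text)
    return text
```

Under the same-URL assumption, a request made with a custom key inside the 3-day window still receives the cached result that may have been generated under the 10-page limit.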

© Copyright notice

The copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
