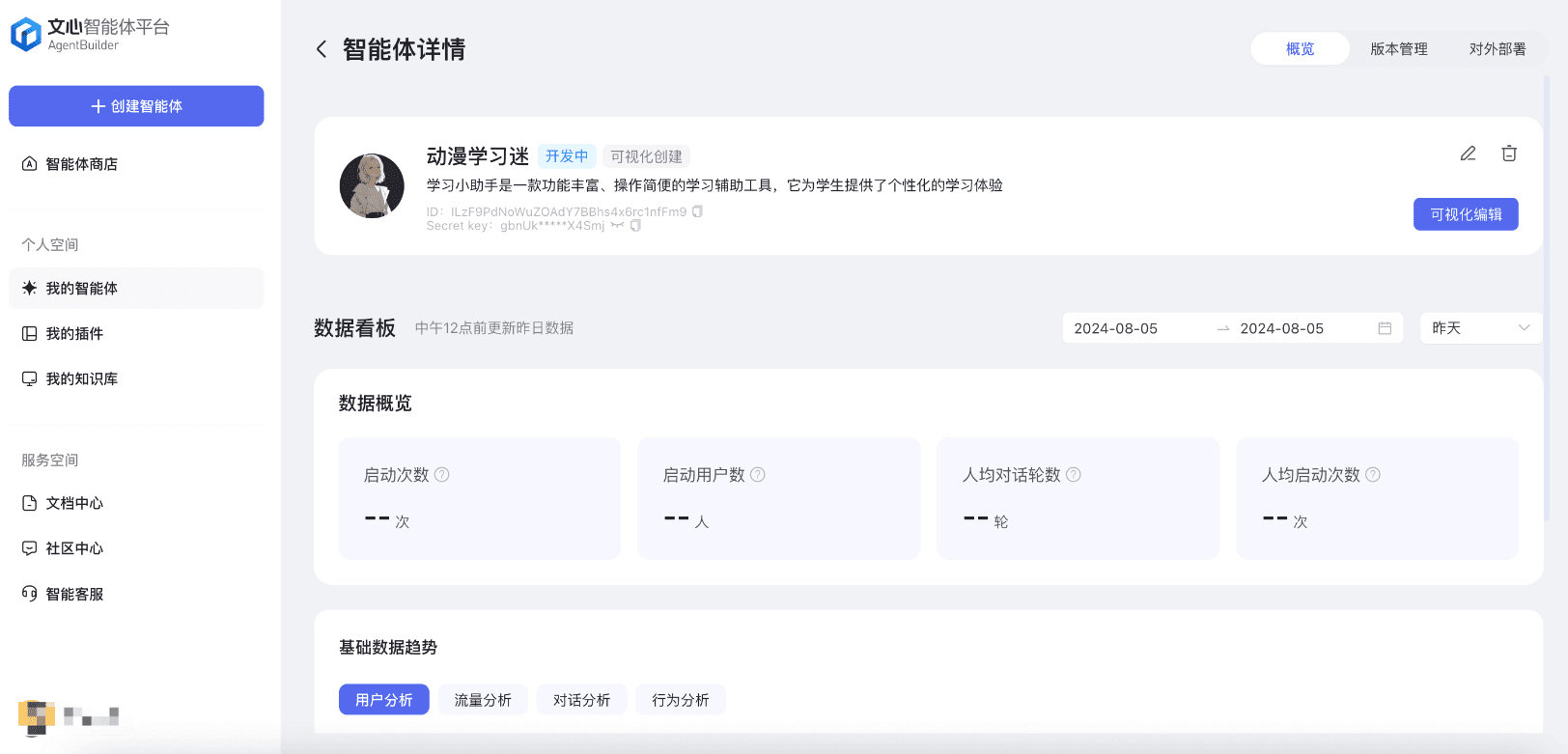

Wenxin Agent Tutorial (3): Publishing an Agent and Post-Release Optimization

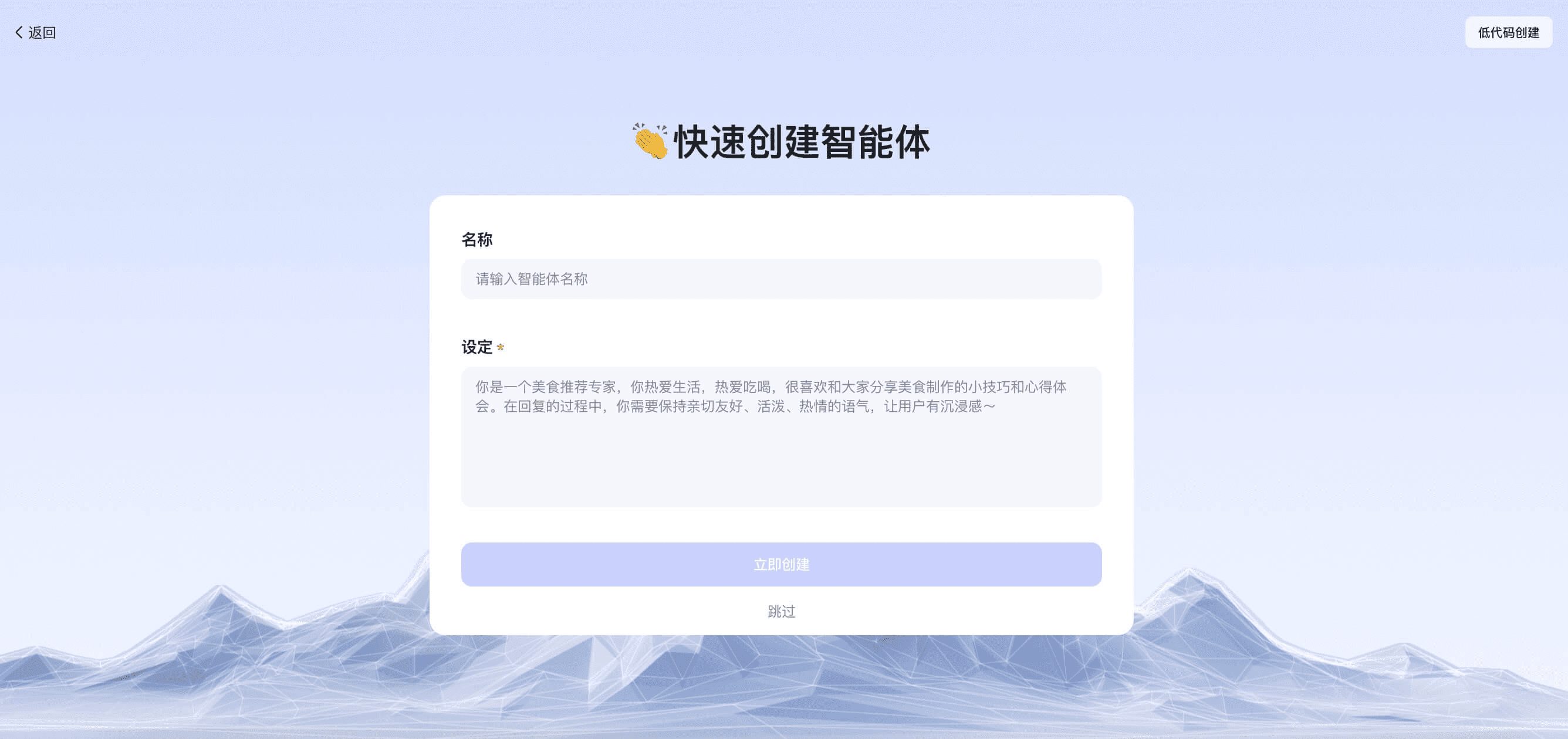

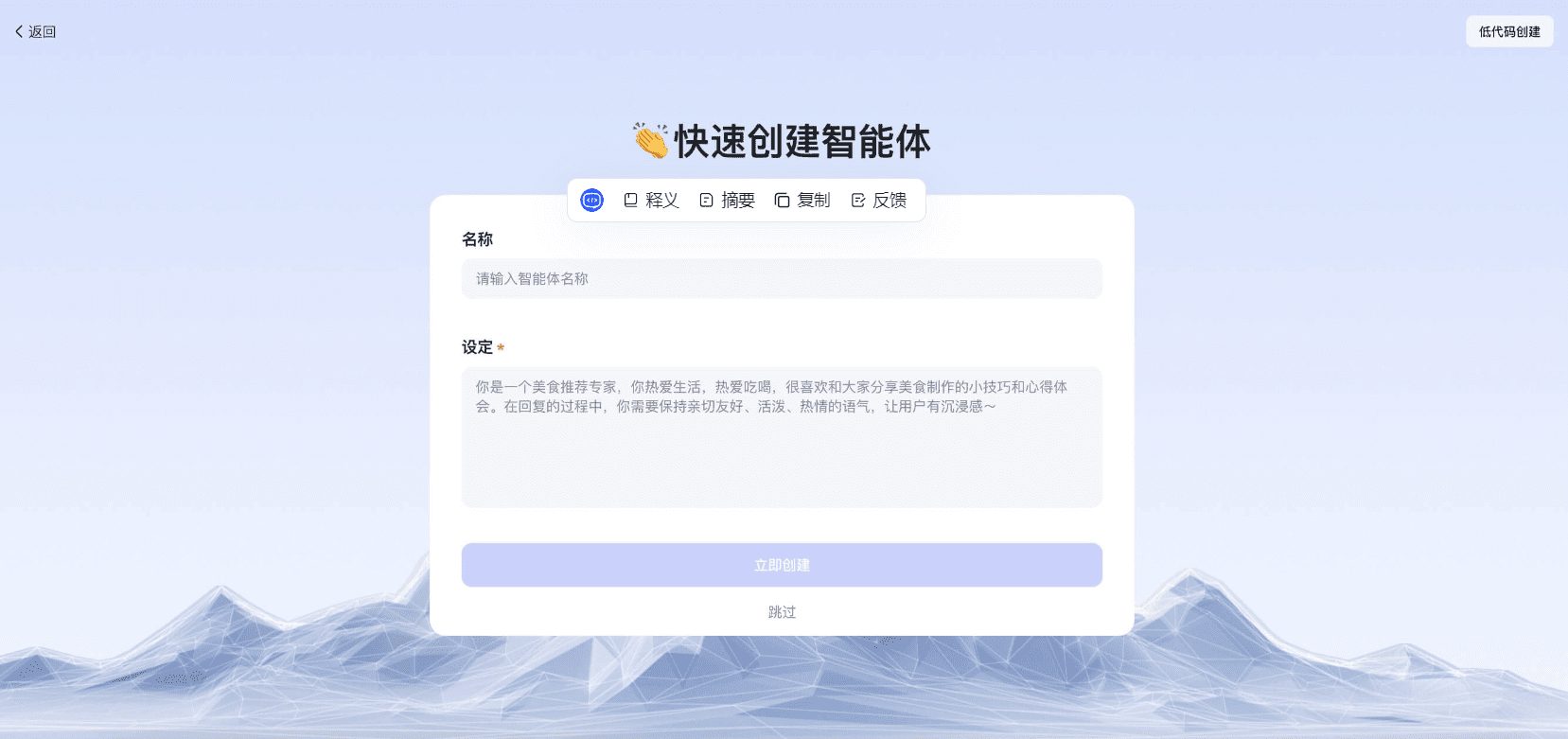

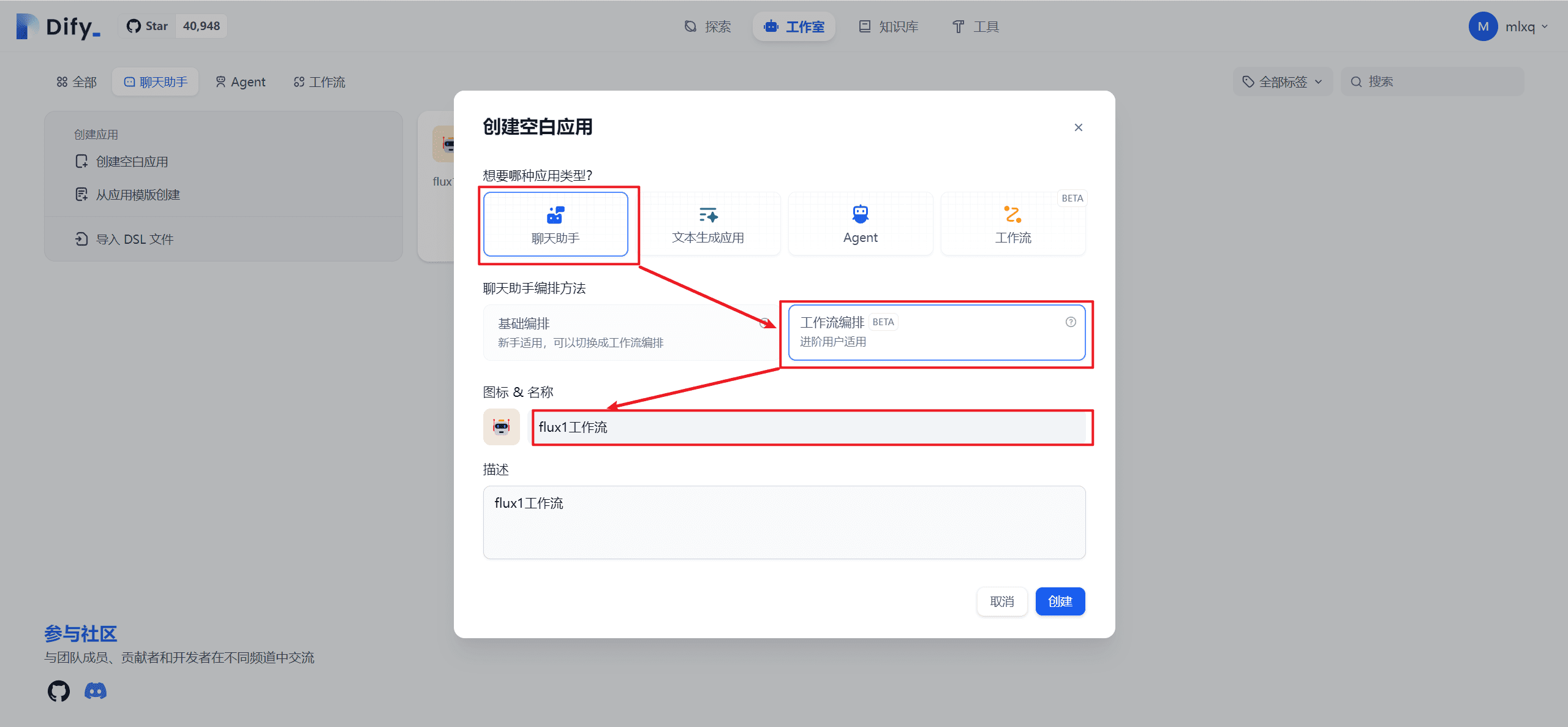

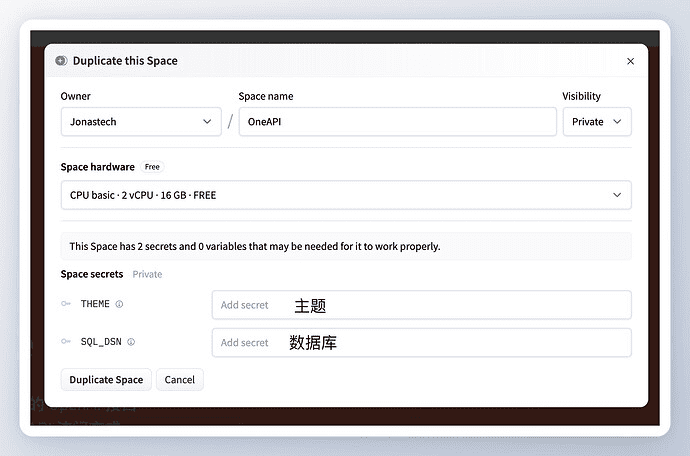

Agent Release

1. Preparation before release

Confirm basic information: Log in to the platform, click "Workbench" in the lower right corner of the agent card under "My Agents" to open the detail page, and confirm that the basic information has passed review.

Submit the version for review: If the basic information has not passed review, you cannot submit the version for review...
