InternVL3.5: Shanghai AI Lab's Open-Source Multimodal Large Model

What is InternVL3.5?

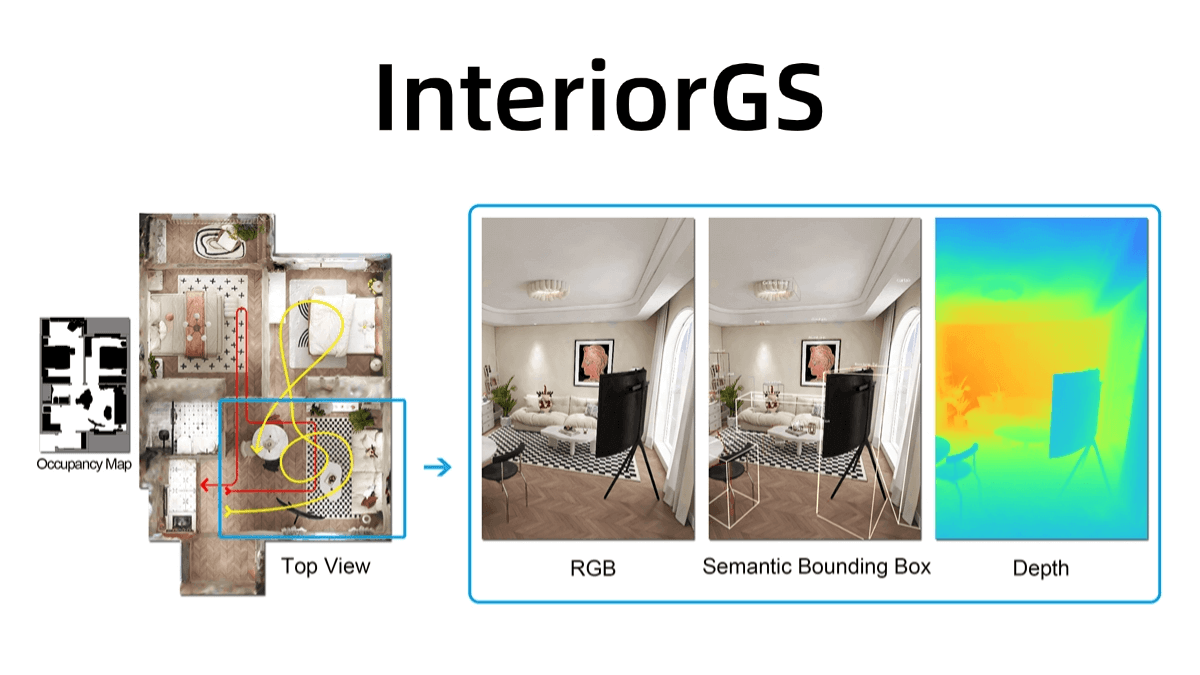

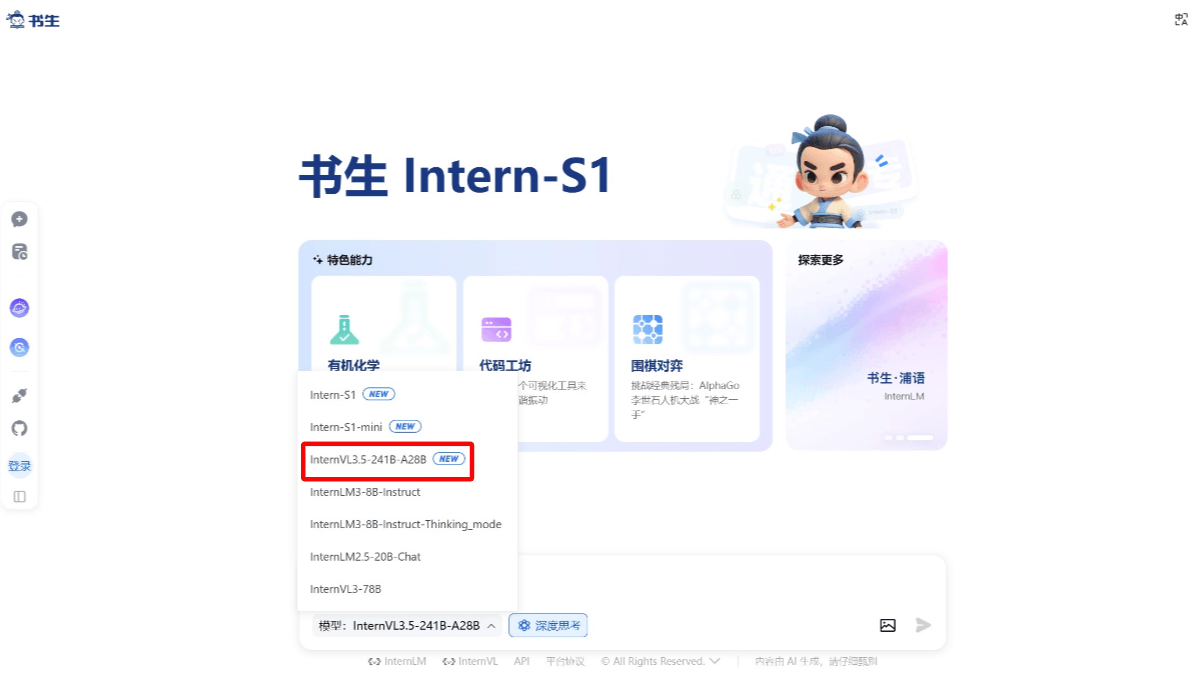

InternVL3.5 is an open-source multimodal large model from Shanghai Artificial Intelligence Laboratory (Shanghai AI Lab), upgraded across generalization, reasoning, and deployment efficiency. It comes in nine sizes ranging from 1 billion to 241 billion parameters to cover different resource budgets, includes both dense and Mixture-of-Experts (MoE) models, and is the first open-source multimodal large model to support GPT-OSS as a language model base. InternVL3.5 adopts a Cascade Reinforcement Learning (Cascade RL) framework, whose two-stage "offline warm-up, then online fine-tuning" process significantly improves reasoning ability. Core capabilities in GUI agents, embodied spatial reasoning, and vector graphics processing have also been strengthened. For example, on the ScreenSpot GUI grounding task, the model scores 92.9, ahead of mainstream open-source models.

Features of InternVL3.5

- Powerful multimodal perception: It can understand and process a wide range of visual information, such as images and videos, and generate relevant text descriptions, making it suitable for content creation, intelligent customer service, and other fields.

- Excellent multimodal reasoning performance: It performs strongly on multidisciplinary reasoning benchmarks and can handle complex multimodal reasoning tasks such as solving math and physics problems and logical reasoning, making it suitable for education, research, and similar scenarios.

- Efficient text processing capabilities: It excels at natural language processing tasks such as text reasoning and Q&A, and provides high-quality text generation and analysis for applications such as intelligent writing and text analysis.

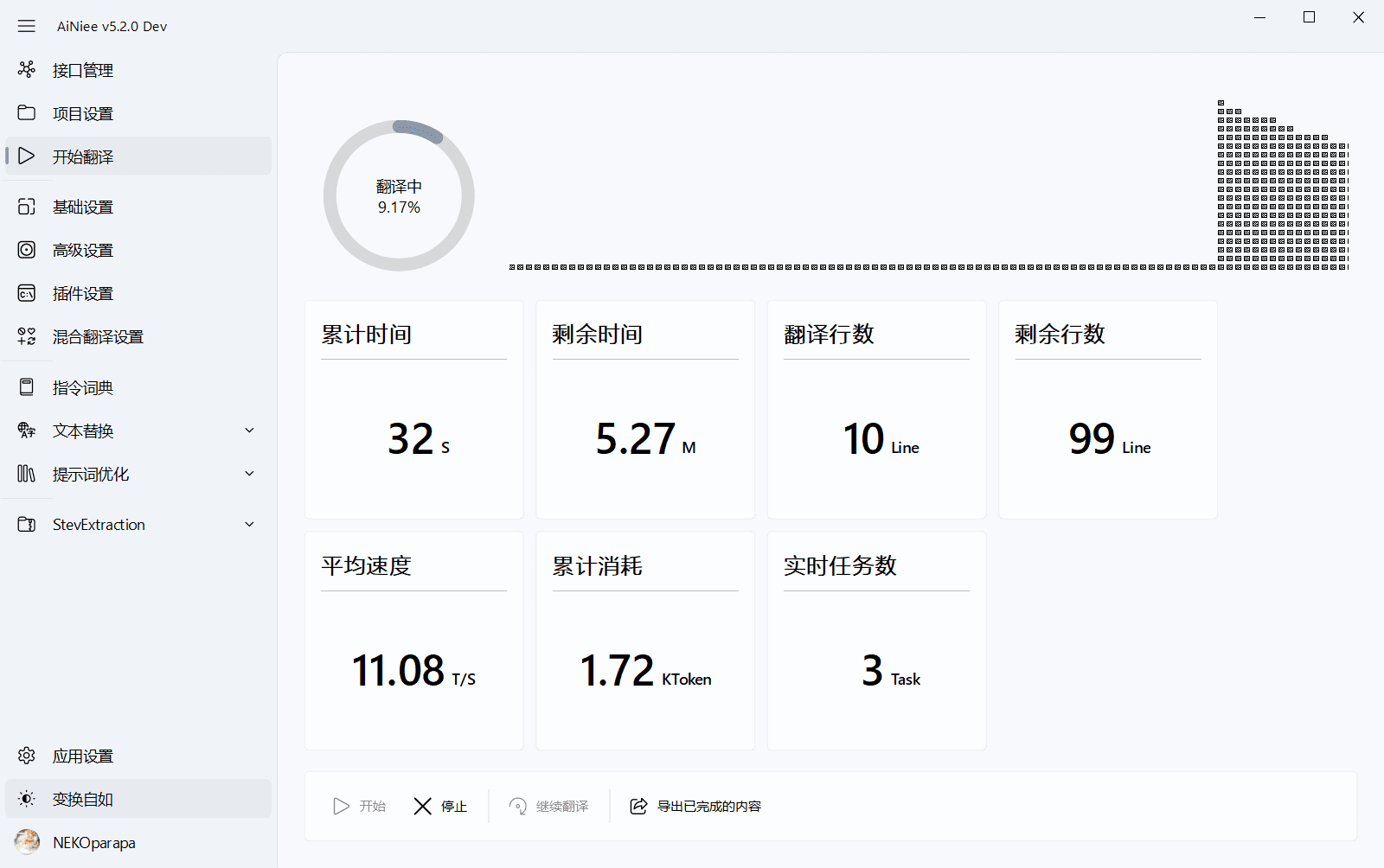

- Advanced GUI agent capabilities: It can operate interface elements across platforms to automate tasks such as document recovery, PDF export, and sending email, raising the level of office automation.

- Excellent embodied spatial reasoning: Supporting physical spatial relationship understanding and navigation, it can be applied to embodied intelligence scenarios such as robot navigation and smart home control to enhance the autonomy and intelligence of devices.

- Highly efficient vector graphics processing: It can generate or edit vector graphics based on natural language commands, which is suitable for professional scenarios such as web design and engineering drawing parsing to improve the efficiency of design and parsing.

- Flexible model deployment options: It provides a wide range of model sizes from 1 billion to 241 billion parameters to meet different resource requirements and application scenarios, and supports both dense and Mixture-of-Experts (MoE) models.
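
To make the resource range behind these model sizes concrete, here is a rough back-of-the-envelope sketch. It assumes the weights are stored in BF16 (2 bytes per parameter), which this article does not state, and it counts weights only, ignoring activations, KV cache, and framework overhead. The function name is hypothetical.

```python
# Rough weight-memory estimate. ASSUMPTION: weights are stored in BF16,
# i.e. 2 bytes per parameter. Activations and KV cache are not counted.
BYTES_PER_PARAM_BF16 = 2


def weight_memory_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM_BF16) -> float:
    """Approximate memory needed for the weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


# The smallest and largest sizes mentioned in the article.
for label, params in [("1B", 1e9), ("241B", 241e9)]:
    print(f"{label}: ~{weight_memory_gb(params):.0f} GB of weights in BF16")
```

Under these assumptions the smallest model needs about 2 GB for its weights and the largest about 482 GB, which is why the size range matters when matching a model to available hardware.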

Core Benefits of InternVL3.5

- Cascade Reinforcement Learning framework: The two-stage "offline warm-up, then online fine-tuning" process, combined with Mixed Preference Optimization (MPO) and the GSPO algorithm, significantly improves the model's reasoning ability and training stability.

- Dynamic visual resolution routing: Dynamically choosing the compression rate for each image slice reduces visual tokens while preserving key information, significantly improving inference speed with little performance loss.

- Decoupled deployment architecture: The visual encoder and the language model are placed on different GPUs and connected through BF16-precision feature transfer and asynchronous pipelining, which greatly improves throughput and removes the resource blocking of traditional serial deployments.

- Full-scale model optimization: Model sizes range from 1 billion to 241 billion parameters, covering different resource budgets, with both dense and Mixture-of-Experts (MoE) models to meet diverse application requirements.

- Excellent multimodal reasoning: It achieves the highest score among open-source models on the multidisciplinary reasoning benchmark MMMU, significantly outperforming existing open-source models, with strong mathematical and logical reasoning capabilities.

- Efficient deployment: Response speed improves dramatically with high-resolution inputs, and the throughput of the 38B model improves by a factor of 4.05, which significantly reduces the actual cost of deployment.
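
The decoupled deployment idea in the list above can be illustrated with a toy sketch. This is not the InternVL3.5 serving code: the stage functions, sleep durations, and queue size are hypothetical stand-ins, and two `asyncio` tasks play the role of the two GPUs. The point is only that the vision stage can start on the next request while the language stage is still busy with the previous one.

```python
import asyncio


async def vision_stage(images, queue, log):
    """Hypothetical stand-in for the vision encoder on its own GPU."""
    for i, image in enumerate(images):
        log.append(f"vision start {i}")
        await asyncio.sleep(0.01)        # pretend to encode the image
        features = f"features({image})"  # a real system would send BF16 tensors
        await queue.put((i, features))   # hand off to the language stage
    await queue.put(None)                # sentinel: no more requests


async def language_stage(queue, log):
    """Hypothetical stand-in for the language model on a second GPU."""
    outputs = []
    while True:
        item = await queue.get()
        if item is None:
            break
        i, features = item
        log.append(f"lm start {i}")
        await asyncio.sleep(0.03)        # pretend to generate text
        outputs.append(f"answer for {features}")
        log.append(f"lm done {i}")
    return outputs


async def run_pipeline(images):
    queue = asyncio.Queue(maxsize=2)     # small buffer between the two stages
    log = []
    _, outputs = await asyncio.gather(
        vision_stage(images, queue, log),
        language_stage(queue, log),
    )
    return outputs, log


outputs, log = asyncio.run(run_pipeline(["img0", "img1", "img2"]))
print(outputs)
print(log)
```

In the printed log, the vision stage begins the second image before the language stage has finished the first request. A serial deployment would instead finish each request completely before starting the next, leaving one stage idle at any given moment.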

What is InternVL3.5's official website?

- GitHub repository: https://github.com/OpenGVLab/InternVL

- Hugging Face model page: https://huggingface.co/OpenGVLab/InternVL3_5-241B-A28B

- Technical report: https://huggingface.co/papers/2508.18265

- Online demo: https://chat.intern-ai.org.cn/

Who is InternVL3.5 for?

- Artificial intelligence researchers: The model provides researchers with a powerful multimodal research tool that can be used to explore new algorithms, model architectures, and application scenarios, advancing academic research in multimodal AI.

- Software developers: Developers can use the open-source code and flexible deployment options to integrate the model into a variety of software applications and build products and services with intelligent interaction capabilities.

- Educators and students: In education, the model's multimodal reasoning and text processing capabilities can be used to develop intelligent tutoring tools to help students better understand and solve complex subject matter problems.

- Content creators: Content creators can use the model's multimodal perception and text generation capabilities to quickly produce creative content such as image descriptions, video captions, and articles, improving creative efficiency.

- Office automation users: With the GUI agent capabilities, users can automate office operations across platforms, improving work efficiency and reducing repetitive work.

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
