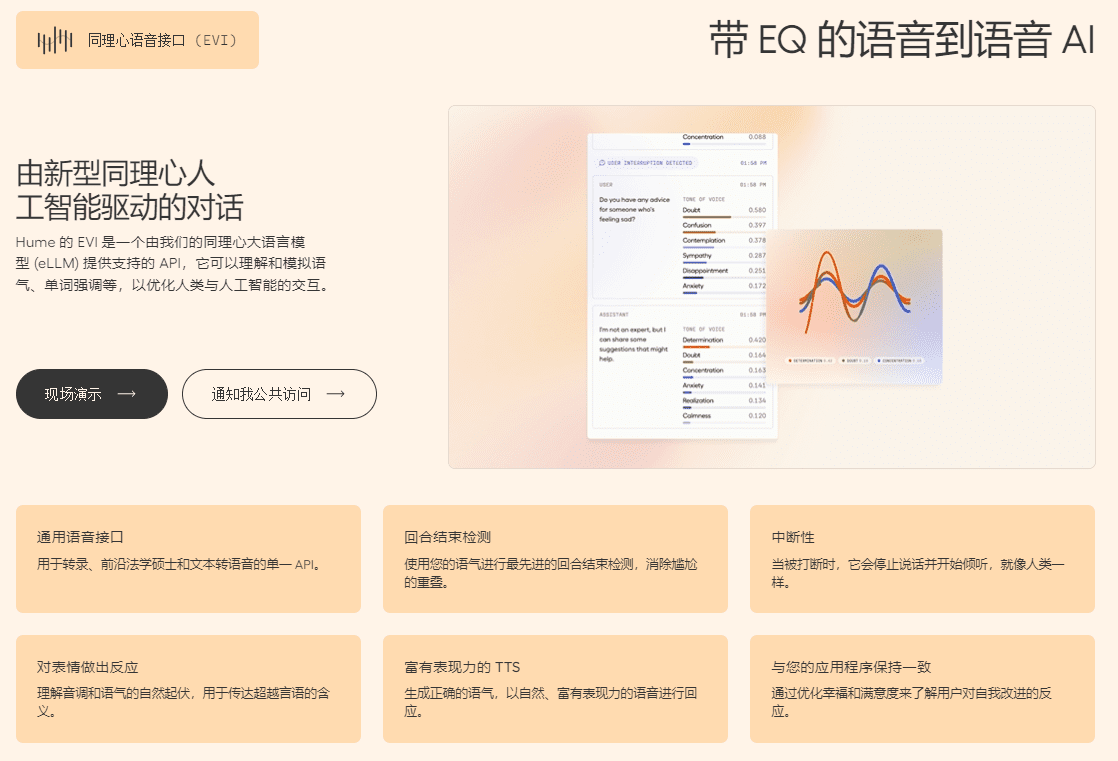

Hume AI: Emotion Recognition for AI | Recognizing Emotional States from Voice and Expressions | Generating Emotionally Expressive Speech

General Introduction

Hume AI is an artificial intelligence company focused on emotional intelligence. It develops multimodal AI technologies that understand and respond to human emotions. Its flagship product, the Empathic Voice Interface (EVI), recognizes and responds to emotional cues in speech, facial expressions, and language, making human-computer interaction more emotionally attuned. Hume AI's stated goal is to use scientific methods and ethical principles to ensure that AI technology truly serves human emotional well-being.

Feature List

- Emotion recognition: Recognizes users' emotions from speech, facial expressions, and language.

- Speech synthesis: Generates emotionally expressive voice responses to enhance the interactive experience.

- Multimodal interaction: Supports interaction through voice, text, and emoji.

- Personalization: Customizes AI personalities and voice styles to suit user needs.

- Real-time response: Provides real-time emotion analysis and responses for a wide range of applications.

How to Use

Installation and Use

Hume AI requires no installation: users simply visit the official website and register an account to start using the online service. The steps are as follows:

- Register an account: Go to the Hume AI official website, click the Register button, and fill in the required information to complete registration.

- Log in: Log in to the Hume AI platform with your registered account and password.

- Select a service: On the platform's home page, select the service module you need, such as emotion recognition or speech synthesis.

- Upload data: Upload the voice, video, or text data to be analyzed, as prompted.

- View results: The system automatically performs emotion analysis and generates detailed reports and responses.

Feature Walkthroughs

Emotion Recognition

- Open the Emotion Recognition module: After logging in, click "Emotion Recognition" in the navigation bar.

- Upload data: Select the voice or video file to be analyzed and click Upload.

- Start the analysis: Click the "Start Analysis" button; the system recognizes emotions automatically.

- View the report: Once the analysis is complete, view a detailed emotion analysis report, including emotion type, intensity, and how emotions change over time.

Speech Synthesis

- Open the Speech Synthesis module: After logging in, click "Speech Synthesis" in the navigation bar.

- Enter text: Type the text to be synthesized into the text box.

- Select a voice style: Choose the voice style and emotional expression you need.

- Generate speech: Click the "Generate Voice" button, and the system generates the corresponding audio file.

- Download the audio: Once generation is complete, download the audio file for use in your application.

Multimodal Interaction

- Open the Multimodal Interaction module: After logging in, click "Multimodal Interaction" in the navigation bar.

- Select an interaction method: Choose to interact by voice, text, or emoji.

- Start interacting: Follow the prompts; the system recognizes and responds to your emotions in real time.

- View records: After the interaction ends, view the interaction history and emotion analysis results.

Hume AI provides extensive documentation and tutorials; detailed guides and FAQs are available in the Help Center on the official website. If you run into problems while using Hume AI, contact Hume AI's customer service team for assistance.

Empathic Voice Interface (EVI)

Hume's Empathic Voice Interface (EVI), which Hume describes as the world's first voice AI with emotional intelligence, accepts live audio input and returns generated audio together with transcripts annotated with measurements of vocal expression. By analyzing the pitch, rhythm, and timbre of speech, EVI enables additional capabilities, such as knowing when to speak and generating empathetic speech with the right intonation. These capabilities make voice-based human-computer interaction smoother and more satisfying, and open up possibilities in areas such as personal AI, customer service, accessibility, robotics, immersive gaming, and VR experiences.
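The vocal expression data that accompanies EVI's transcripts is easiest to use once it is reduced to a few dominant expressions. The sketch below assumes the scores arrive as a flat mapping from expression name to score; that shape, the function name `top_expressions`, and the sample labels and values are hypothetical simplifications, not Hume's documented schema.

```python
from typing import Dict, List, Tuple


def top_expressions(scores: Dict[str, float], n: int = 3) -> List[Tuple[str, float]]:
    """Return the n highest-scoring expressions, strongest first.

    `scores` is assumed to map expression names to scores; this flat shape is a
    hypothetical simplification, not Hume's documented message schema.
    """
    if n < 0:
        raise ValueError("n must be non-negative")
    # Sort by score descending; ties are broken alphabetically for stable output.
    ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return ranked[:n]


if __name__ == "__main__":
    # Illustrative values only, not real EVI output.
    sample = {"Calmness": 0.41, "Interest": 0.63, "Amusement": 0.12, "Doubt": 0.27}
    for name, score in top_expressions(sample):
        print(f"{name}: {score:.2f}")
```

Breaking ties by name keeps the output deterministic, which helps when the result drives labels in a user interface.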

Hume provides a full suite of tools for integrating and customizing EVI in your applications, including a WebSocket API for streaming audio and text, a REST API, and SDKs for TypeScript and Python that simplify integration in web and Python-based projects. In addition, Hume offers open-source examples and a web widget as practical starting points for developers exploring and implementing EVI in their own projects.

Building with EVI

The primary way to use EVI is through a WebSocket connection that streams audio to EVI and receives its responses in real time. This enables a fluid two-way conversation: the user speaks, EVI listens and analyzes their vocal expressions, and EVI then generates an emotionally intelligent response.

To start a conversation, connect to the WebSocket and stream the user's voice input to EVI. You can also send text to EVI, and it will speak the text aloud.
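A minimal sketch of the two kinds of outbound messages described above, voice input and text for EVI to speak, might look like the following. The endpoint URL, the `api_key` query parameter, and the message types `audio_input` and `assistant_input` are assumptions modeled on Hume's public EVI documentation; verify them against the current API reference before relying on them. The helper names are hypothetical.

```python
import base64
import json
from urllib.parse import urlencode

# Assumed endpoint; confirm against Hume's EVI API reference.
EVI_CHAT_URL = "wss://api.hume.ai/v0/evi/chat"


def build_chat_url(api_key: str) -> str:
    """Return the WebSocket URL with the API key as a query parameter (assumed auth scheme)."""
    return f"{EVI_CHAT_URL}?{urlencode({'api_key': api_key})}"


def audio_input_message(chunk: bytes) -> str:
    """Wrap a raw audio chunk in a JSON text frame (assumed message type).

    The bytes are base64-encoded because JSON cannot carry raw binary data.
    """
    encoded = base64.b64encode(chunk).decode("ascii")
    return json.dumps({"type": "audio_input", "data": encoded})


def text_to_speak_message(text: str) -> str:
    """Build a frame asking EVI to speak the given text aloud (assumed message type)."""
    return json.dumps({"type": "assistant_input", "text": text})


if __name__ == "__main__":
    print(build_chat_url("YOUR_API_KEY"))
    print(audio_input_message(b"\x00\x01"))
    print(text_to_speak_message("Hello there"))
```

Actually sending these frames requires a WebSocket client (for example the third-party `websockets` package) or one of Hume's SDKs; that part is left out so the sketch runs without network access.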

EVI responds with messages that provide:

- EVI's response as text

- EVI's expressive audio response

- A transcript of the user's message, along with measurements of their vocal expression

- A notification when the user interrupts EVI

- A notification when EVI has finished responding

- An error message if something goes wrong
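Because all of these responses arrive over the same connection, a client typically routes each incoming JSON message by a type field. The sketch below does this with a dispatch table. The type names (`assistant_message`, `audio_output`, `user_message`, `user_interruption`, `assistant_end`, `error`) and the payload fields it reads are assumptions based on Hume's public EVI documentation, not a verified schema; `handle_frame` is a hypothetical helper.

```python
import json
from typing import Any, Callable, Dict

Handler = Callable[[Dict[str, Any]], str]

# Assumed server message types, one per response listed above; verify the exact
# names and payload fields against Hume's EVI API reference.
HANDLERS: Dict[str, Handler] = {
    "assistant_message": lambda m: f"EVI text: {m.get('message', {}).get('content', '')}",
    "audio_output": lambda m: f"EVI audio chunk ({len(m.get('data', ''))} base64 chars)",
    "user_message": lambda m: f"User said: {m.get('message', {}).get('content', '')}",
    "user_interruption": lambda m: "User interrupted EVI",
    "assistant_end": lambda m: "EVI finished responding",
    "error": lambda m: f"Error: {m.get('message', 'unknown error')}",
}


def handle_frame(raw: str) -> str:
    """Decode one JSON text frame and route it by its "type" field."""
    message = json.loads(raw)
    handler = HANDLERS.get(message.get("type"))
    if handler is None:
        # Types this sketch does not model (e.g. session metadata) are reported, not fatal.
        return f"Unhandled message type: {message.get('type')!r}"
    return handler(message)


if __name__ == "__main__":
    print(handle_frame('{"type": "assistant_end"}'))
```

A dispatch table keeps each message type's handling in one place, so supporting a new type means adding one entry rather than another branch in a long conditional.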

© Copyright Notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
