How GRPO outperformed o1, o3-mini, and R1 on the "Temporal Clue" puzzle game

In recent years, the field of artificial intelligence has made significant progress in reasoning capabilities. After OpenAI demonstrated the powerful reasoning potential of large language models (LLMs) last year, Google DeepMind, Alibaba, DeepSeek, Anthropic, and institutions such as the University of California, Berkeley, quickly followed suit, using reinforcement learning (RL) techniques to train advanced models with "chain-of-thought" (CoT) capabilities. These models have achieved near-saturation scores on many benchmarks in areas such as math and programming.

However, even the best current models still struggle with logical reasoning problems. Large language models often have trouble maintaining a coherent chain of reasoning that attends consistently to all relevant details, and they do not reliably connect multiple reasoning steps. Even state-of-the-art models that generate outputs 10-100 times longer than ordinary models often make elementary errors that a human problem solver would easily catch.

Intrigued by this gap, Brad Hilton and Kyle Corbitt, the authors of this work, asked: can the latest reinforcement learning techniques bring smaller, open-source models to the frontier of reasoning performance? To answer this question, they chose a reinforcement learning algorithm called Group Relative Policy Optimization (GRPO).

What is GRPO?

Simply put, GRPO is a policy gradient method. Policy gradient methods improve a model's performance by adjusting its policy (i.e., the probability distribution over the model's actions in a given situation). GRPO updates the policy more efficiently by comparing the relative merits of a group of model responses to the same prompt. Compared to traditional methods such as Proximal Policy Optimization (PPO), GRPO simplifies the training process while still delivering strong performance.
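The "comparing the relative merits of a group of responses" step can be sketched as follows. This is a minimal illustration, assuming rewards are normalized by the group's mean and standard deviation; the function name and the example rewards are hypothetical, not taken from the authors' code.

```python
from statistics import mean, pstdev

def group_relative_advantages(rewards, eps=1e-8):
    """Score each response relative to the other responses to the same prompt."""
    baseline = mean(rewards)
    spread = pstdev(rewards)
    if spread < eps:
        # Every response scored the same, so none is better than the others.
        return [0.0 for _ in rewards]
    return [(r - baseline) / spread for r in rewards]

# Four hypothetical responses to one puzzle, rewarded by fraction of correct answers.
advantages = group_relative_advantages([1.0, 0.5, 0.5, 0.0])
```

Responses that beat the group average get a positive advantage and are made more likely; those below average get a negative one. No separate value network is needed, which is the main simplification relative to PPO.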

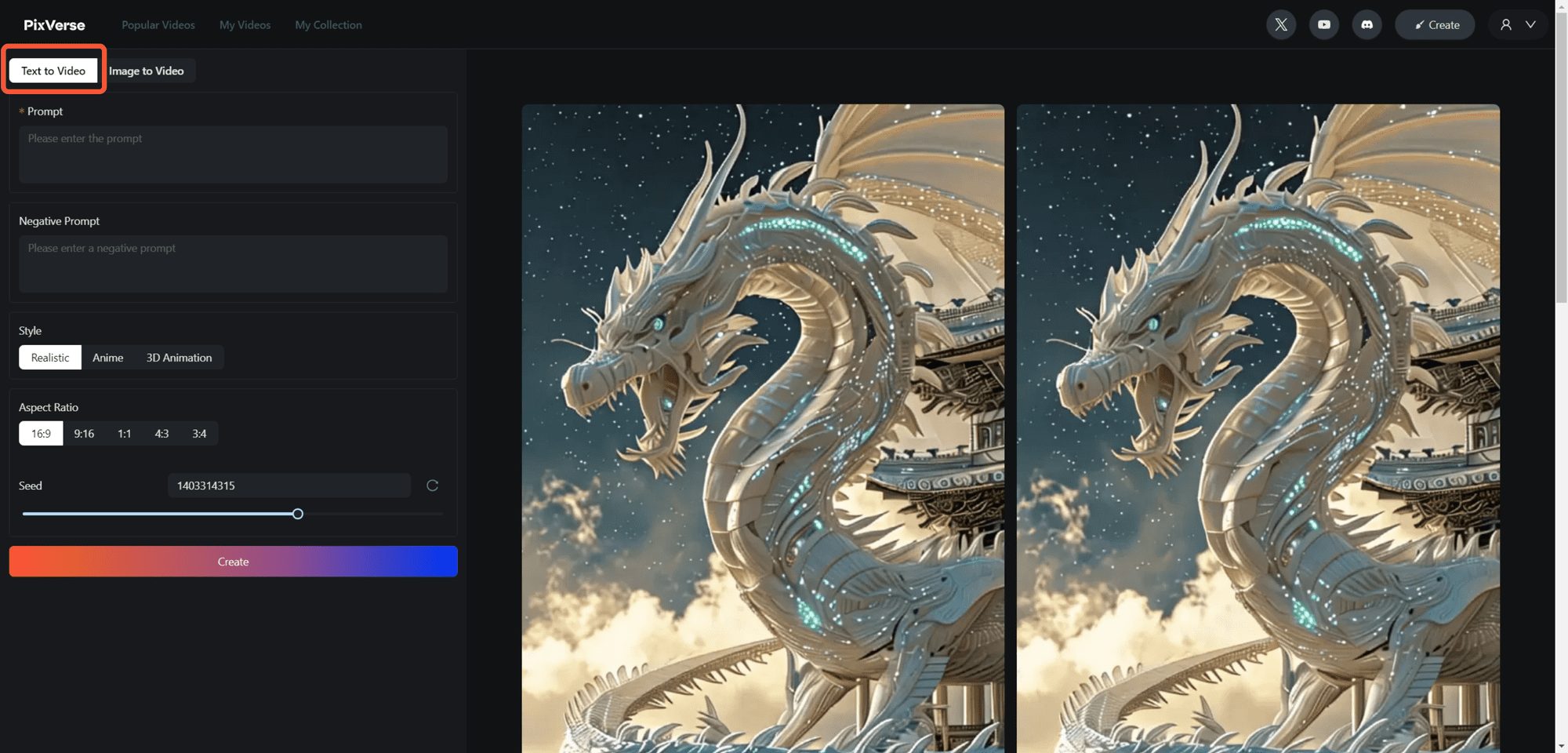

To test GRPO, they chose a reasoning game called "Temporal Clue" as the experimental platform.

What is "Temporal Clue"?

"Temporal Clue" is a puzzle game inspired by the classic board game "Clue" (Cluedo). The player must work out the murderer, the murder weapon, the place, the time, and the motive from a series of clues. Unlike the original game, "Temporal Clue" expands the problem to five dimensions, increasing its complexity and difficulty.

The authors started with relatively weak models and trained them iteratively on "Temporal Clue" puzzles using the GRPO algorithm. Over time, they observed a significant improvement in the reasoning ability of these models, which eventually rivaled and even surpassed some of the most powerful proprietary models.

They are now sharing their findings, including the experiments, training recipe, dataset, and model weights, all of which are freely available under the MIT license.

Benchmarking

To run the experiment, they first needed a challenging reasoning task with clearly verifiable solutions and scalable complexity. Coincidentally, one of the authors, Brad Hilton, had previously created a puzzle set called Temporal Clue that meets these needs perfectly. In addition to having a clear ground truth, new puzzles can be generated as needed.

Temporal Clue is inspired by the popular board game Clue (Cluedo), in which players race to find out who killed Mr. Boddy in Tudor Mansion. Temporal Clue turns this game into a standalone logic puzzle that goes beyond the standard dimensions of who, with what, and where, and adds two more: when (time) and why (motive). The puzzles are randomly generated, and a minimal but sufficient set of clues is selected using OR-Tools' CP-SAT solver.
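The idea of a "minimal but sufficient" clue set can be illustrated with a small sketch. It swaps the CP-SAT solver for plain brute-force enumeration and uses only three dimensions, so it is a toy stand-in rather than the authors' generator; the suspects, weapons, rooms, and clues are all hypothetical.

```python
from itertools import product

SUSPECTS = ["Scarlet", "Mustard", "Plum"]
WEAPONS = ["rope", "knife", "wrench"]
ROOMS = ["hall", "study", "lounge"]

def solutions(clues):
    """Enumerate every (suspect, weapon, room) consistent with all clues."""
    return [s for s in product(SUSPECTS, WEAPONS, ROOMS)
            if all(clue(s) for clue in clues.values())]

def prune_clues(clues):
    """Greedily drop clues that are not needed for a unique solution."""
    kept = dict(clues)
    for name in list(clues):
        trial = {k: v for k, v in kept.items() if k != name}
        if len(solutions(trial)) == 1:
            kept = trial  # the puzzle is still uniquely solvable without it
    return kept

clues = {
    "not Scarlet": lambda s: s[0] != "Scarlet",
    "not Mustard": lambda s: s[0] != "Mustard",
    "Plum did it": lambda s: s[0] == "Plum",
    "weapon is the knife": lambda s: s[1] == "knife",
    "room is the study": lambda s: s[2] == "study",
}
minimal = prune_clues(clues)
```

Each remaining clue is necessary: removing any one of them leaves more than one consistent solution. A constraint solver plays the same role as `solutions` here, but scales to far larger puzzles than enumeration can.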

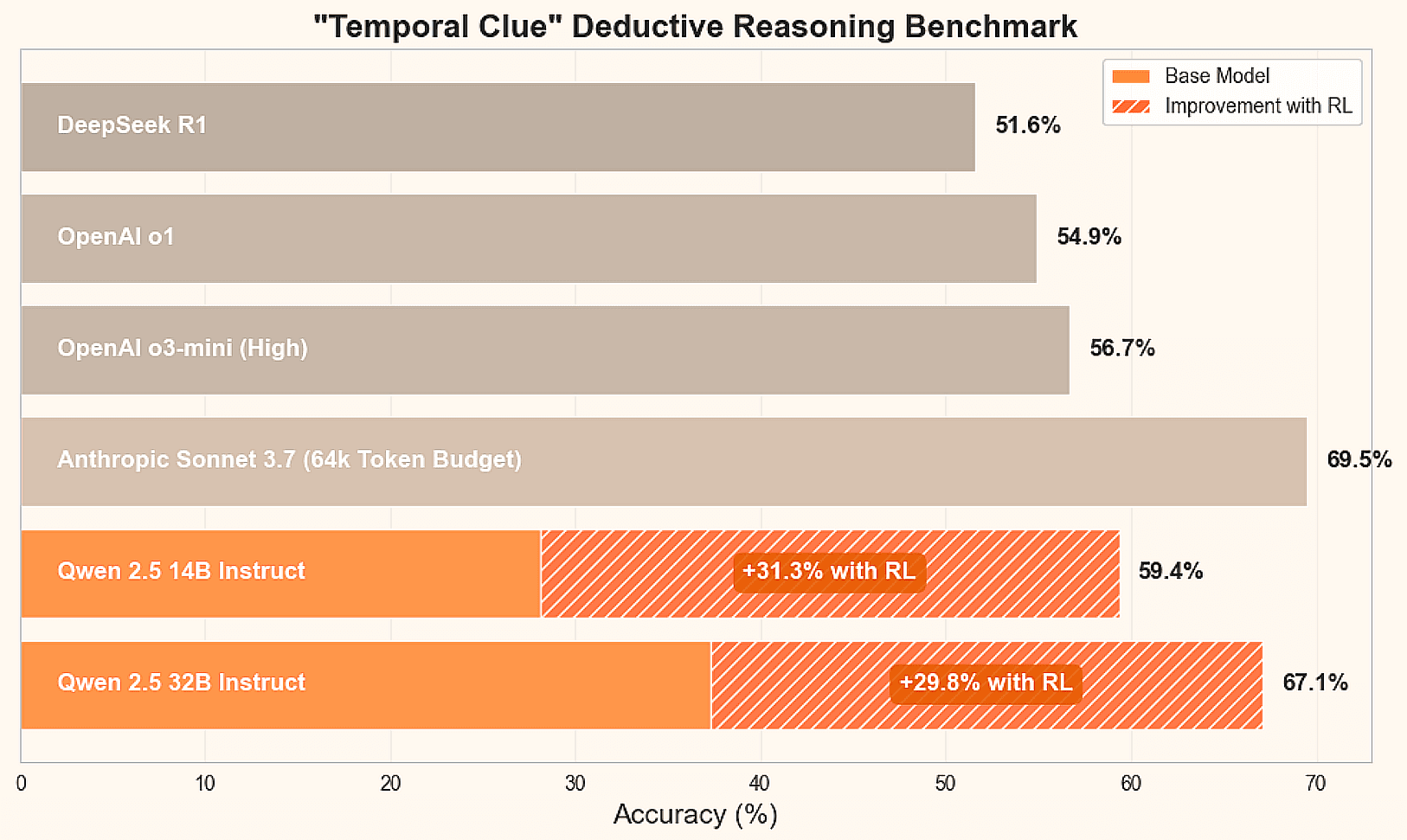

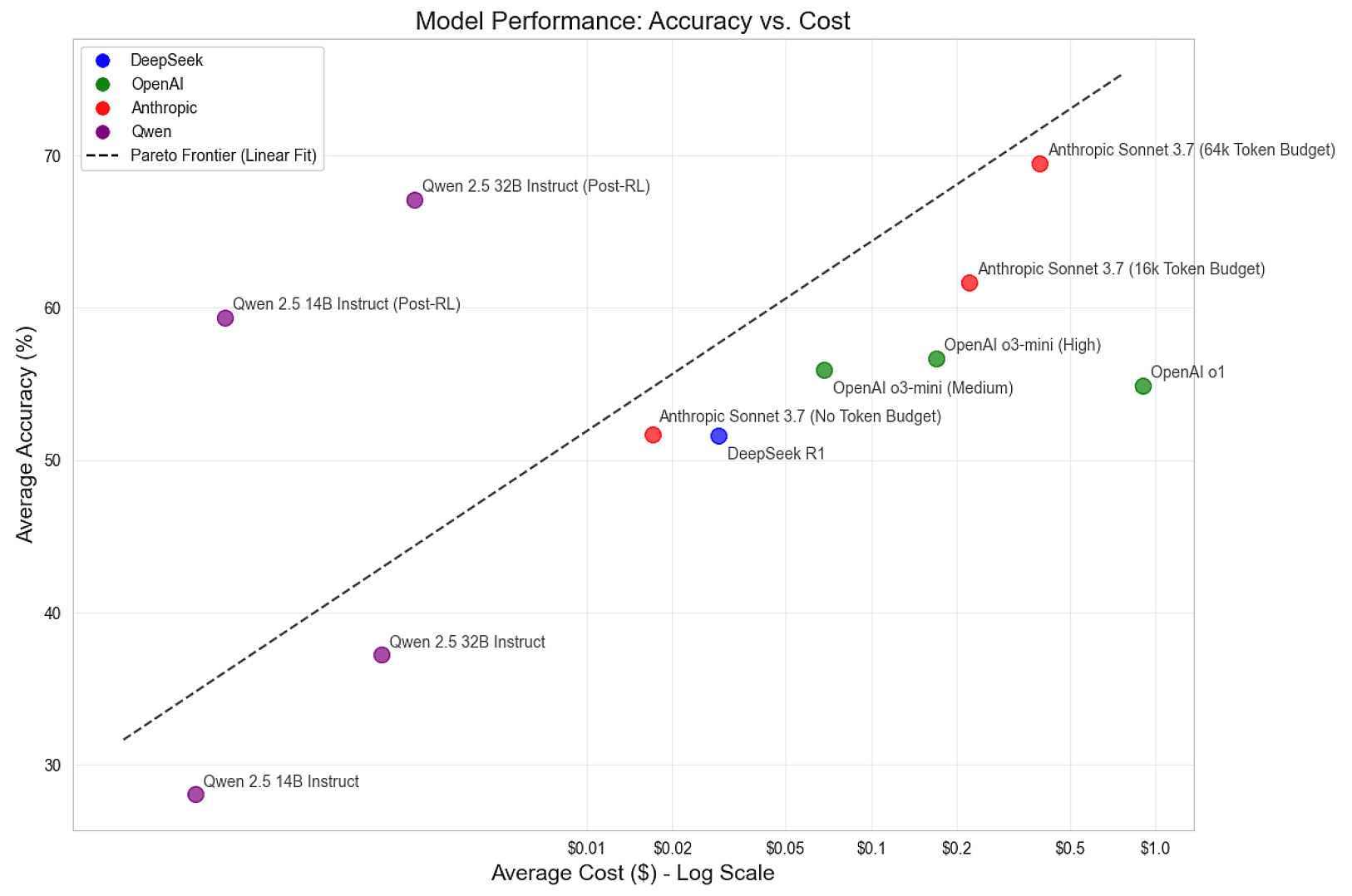

To determine the current state of the art on this reasoning task, they benchmarked leading reasoning models, including DeepSeek R1 and Anthropic's Claude Sonnet 3.7, along with Alibaba's Qwen 2.5 14B and 32B Instruct models. The results are summarized below:

| Organization | Model | Reasoning effort | Average accuracy | Average cost |
|---|---|---|---|---|
| DeepSeek | R1 | Default | 51.6% | $0.029 |
| Anthropic | Sonnet 3.7 | None | 51.7% | $0.017 |
| Anthropic | Sonnet 3.7 | 16k | 61.7% | $0.222 |
| Anthropic | Sonnet 3.7 | 64k | 69.5% | $0.392 |
| Alibaba | Qwen 2.5 14B Instruct | None | 28.1% | $0.001 |
| Alibaba | Qwen 2.5 32B Instruct | None | 37.3% | $0.002 |

These benchmarks show that Anthropic's Claude Sonnet 3.7 with a 64k-token reasoning budget performed best on this task, but all of the leading models have room for improvement. DeepSeek R1, a popular open-source model, reached 51.6% average accuracy, nearly matching the 51.7% of Claude Sonnet 3.7 without extended reasoning. The untuned Qwen 2.5 Instruct models, by comparison, perform relatively poorly. The big question is: can these smaller, open-source models be trained to frontier-level performance?

Training

To train a frontier-level reasoning model, they used reinforcement learning, a method that lets agents learn from their own experience in a controlled environment. Here, the LLMs are the agents and the puzzles are the environment. They guided the LLMs' learning by having them generate multiple responses to each puzzle in order to explore the problem landscape. Reasoning that leads to the correct solution is reinforced, and reasoning that leads the model astray is penalized.

Among the various RL methods, they chose the popular Group Relative Policy Optimization (GRPO) algorithm developed by DeepSeek. Compared to more traditional methods such as Proximal Policy Optimization (PPO), GRPO simplifies the training process while still providing strong performance. To speed up their experiments, they omitted the Kullback-Leibler (KL) divergence penalty, even though their training recipe supports it.
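For reference, the GRPO objective can be written as below. The notation follows the DeepSeekMath paper that introduced GRPO and is not taken from this article: $G$ is the number of responses sampled per prompt $q$, $o_i$ is the $i$-th response, $\hat{A}_i$ is its group-normalized advantage, $\epsilon$ is the clipping range, and $\beta$ weights the KL penalty against a reference policy $\pi_{\mathrm{ref}}$.

$$
\rho_{i,t} = \frac{\pi_\theta(o_{i,t} \mid q, o_{i,<t})}{\pi_{\theta_{\mathrm{old}}}(o_{i,t} \mid q, o_{i,<t})}
$$

$$
J(\theta) = \mathbb{E}\left[ \frac{1}{G} \sum_{i=1}^{G} \frac{1}{|o_i|} \sum_{t=1}^{|o_i|} \Big( \min\big( \rho_{i,t}\,\hat{A}_i,\ \operatorname{clip}(\rho_{i,t},\, 1-\epsilon,\, 1+\epsilon)\,\hat{A}_i \big) - \beta\, D_{\mathrm{KL}}\big[\pi_\theta \,\|\, \pi_{\mathrm{ref}}\big] \Big) \right]
$$

Omitting the KL penalty corresponds to setting $\beta = 0$. The reference model's log-probabilities are then no longer needed, which is a plausible reason the omission speeds up each training step.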

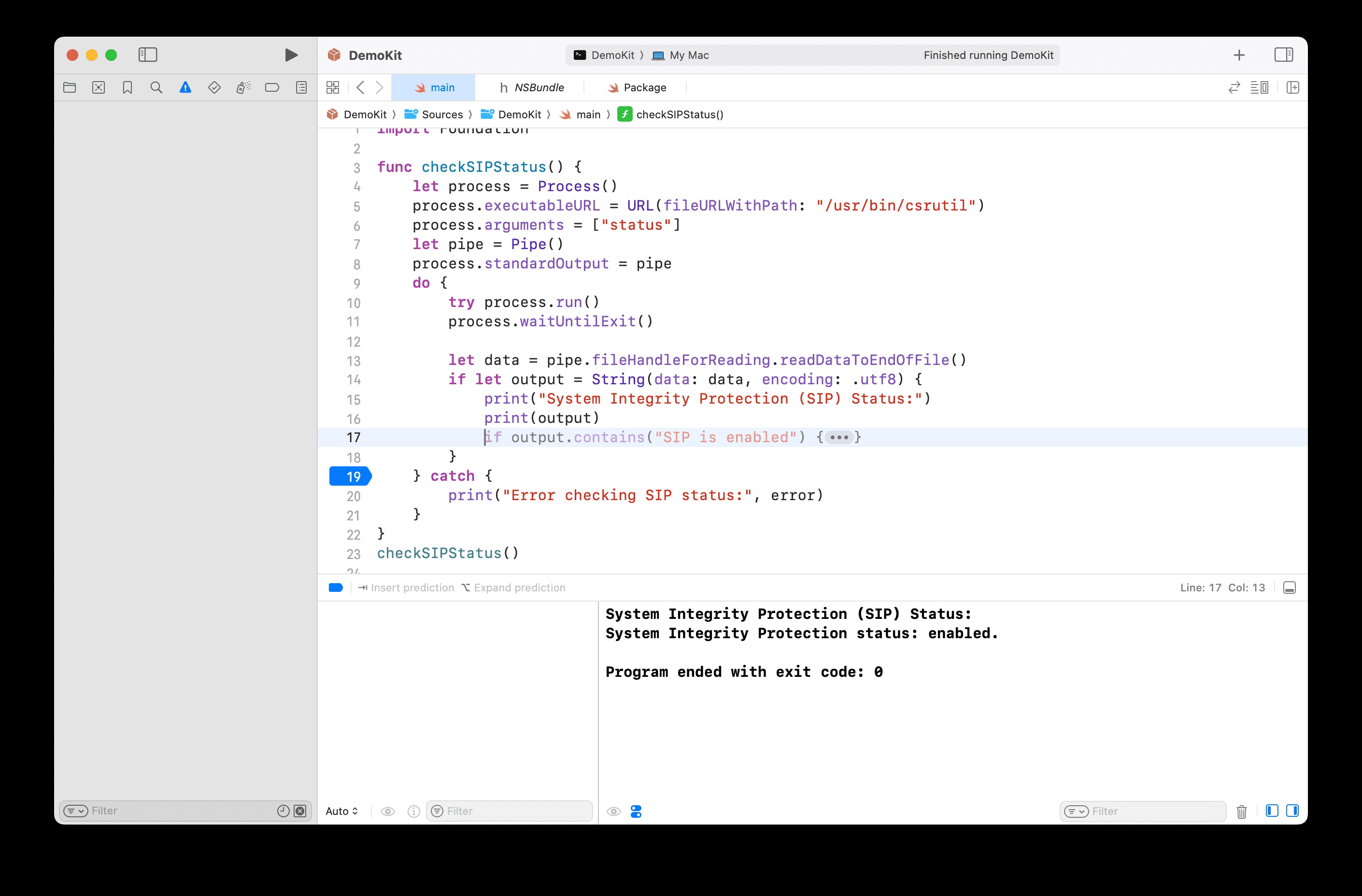

In summary, the training cycle follows these basic steps:

- Generate model responses to puzzle tasks.

- Score the responses and estimate the advantage of each group of chat completions (this is the "Group Relative" part of GRPO).

- Fine-tune the model using clipped policy gradients guided by these advantage estimates.

- Repeat these steps with new puzzles and the latest version of the model until optimal performance is achieved.
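The four steps above can be sketched end to end on a toy problem. In this illustration the "model" is just one logit per candidate answer, the reward is 1 for the correct answer and 0 otherwise, and each batch gets a single gradient step, so the probability ratio is always 1 and clipping is left out. All names and hyperparameters are hypothetical; this is not the authors' training recipe.

```python
import math
import random

def softmax(logits):
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train_toy_policy(num_answers=4, correct=2, group_size=8,
                     iterations=200, lr=0.5, seed=0):
    rng = random.Random(seed)
    logits = [0.0] * num_answers  # toy "model": one logit per candidate answer
    for _ in range(iterations):
        probs = softmax(logits)
        # 1. Generate a group of responses to the task.
        samples = rng.choices(range(num_answers), weights=probs, k=group_size)
        # 2. Score them and compute group-relative advantages.
        rewards = [1.0 if s == correct else 0.0 for s in samples]
        baseline = sum(rewards) / group_size
        spread = math.sqrt(sum((r - baseline) ** 2 for r in rewards) / group_size)
        if spread == 0.0:
            continue  # all responses scored alike: no learning signal
        advantages = [(r - baseline) / spread for r in rewards]
        # 3. Policy-gradient step: d log p(a) / d logit_j = 1[j == a] - p_j.
        for j in range(num_answers):
            grad = sum(a * ((1.0 if s == j else 0.0) - probs[j])
                       for s, a in zip(samples, advantages)) / group_size
            logits[j] += lr * grad
        # 4. Repeat: the next iteration samples from the updated policy.
    return softmax(logits)

final_probs = train_toy_policy()
```

Even though the policy starts out uniform, the occasional correct sample is enough for the probability of the correct answer to climb steadily.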

To generate the responses, they used the popular vLLM inference engine. They tuned its parameters to maximize throughput and minimize startup time. Prefix caching is particularly important because they sample many responses for each task, and caching the prompts avoids redundant computation.

They observed that too many simultaneous requests would overwhelm vLLM, leading to preemption or swapping out of in-progress requests. To address this, they limited requests with a semaphore tuned to maintain high key-value (KV) cache utilization while minimizing swapping. More advanced scheduling mechanisms could yield even higher utilization while still supporting flexible generation lengths.
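Limiting in-flight requests with a semaphore might look like the following sketch. `fake_generate` is a hypothetical stand-in for a real inference call, and the limit of 4 is arbitrary; in practice the limit would be tuned against KV cache utilization as described above.

```python
import asyncio

async def limited_generate(prompts, generate, max_concurrent):
    """Run generate(prompt) for every prompt, at most max_concurrent at a time."""
    semaphore = asyncio.Semaphore(max_concurrent)

    async def one(prompt):
        async with semaphore:  # wait here while too many requests are in flight
            return await generate(prompt)

    return await asyncio.gather(*(one(p) for p in prompts))

# Stand-in for a real inference call; it only tracks how many calls overlap.
stats = {"in_flight": 0, "peak": 0}

async def fake_generate(prompt):
    stats["in_flight"] += 1
    stats["peak"] = max(stats["peak"], stats["in_flight"])
    await asyncio.sleep(0.01)
    stats["in_flight"] -= 1
    return f"response to {prompt}"

responses = asyncio.run(
    limited_generate([f"puzzle {i}" for i in range(20)], fake_generate, max_concurrent=4)
)
```

All 20 requests are submitted at once, but no more than 4 ever run concurrently; the rest wait on the semaphore instead of being preempted by the engine.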

After sampling, the completions are processed with the standard HuggingFace Transformers AutoTokenizer. Its chat template feature renders message objects as a prompt string and can also produce an assistant mask that identifies which tokens were generated by the LLM. The models' default templates lacked the required {% generation %} tags, so the templates were modified during the tokenization step. The resulting assistant masks are included in the dictionary of tensors used for tuning and identify which positions require loss calculations.
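The role of the assistant mask can be shown with a toy sketch. It uses whitespace splitting instead of a real tokenizer and omits the role markers a real chat template would add, so it illustrates the idea rather than the Transformers API; the function names and loss values are hypothetical.

```python
def render_with_assistant_mask(messages):
    """Flatten chat messages into tokens plus a 0/1 mask marking assistant tokens."""
    tokens, mask = [], []
    for message in messages:
        words = message["content"].split()  # toy whitespace "tokenizer"
        tokens.extend(words)
        mask.extend([1 if message["role"] == "assistant" else 0] * len(words))
    return tokens, mask

def masked_mean(token_losses, mask):
    """Average per-token losses over masked-in positions only."""
    kept = [loss for loss, keep in zip(token_losses, mask) if keep]
    return sum(kept) / len(kept) if kept else 0.0

messages = [
    {"role": "user", "content": "Who did it?"},
    {"role": "assistant", "content": "Colonel Mustard"},
]
tokens, mask = render_with_assistant_mask(messages)
# Hypothetical per-token losses, one per token above.
loss = masked_mean([2.0, 1.5, 3.0, 0.4, 0.6], mask)
```

Only the two assistant tokens contribute to the loss; the prompt tokens are context the model is never trained to reproduce.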

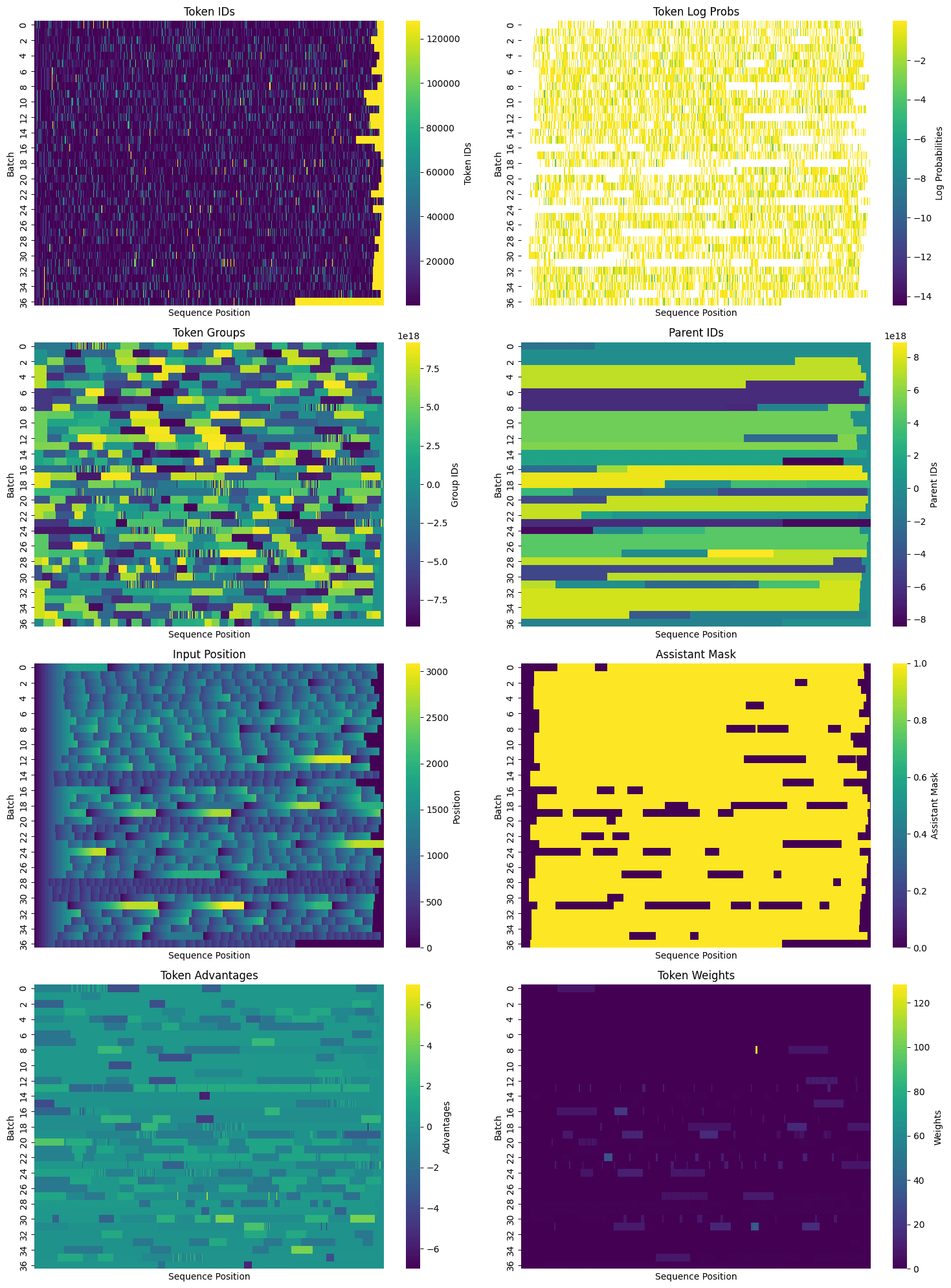

After tokenizing the responses and obtaining assistant masks, they packed the data for tuning. In addition to including multiple prompt/response pairs in each packed sequence, they identified shared prompt tokens and assigned each token a parent ID as well as a standard group ID. Especially for tasks like Temporal Clue (which averages more than 1,000 tokens per puzzle), generating a large number of responses for each task and packing the tensors efficiently can significantly reduce redundancy. Once all the necessary information has been packed, the training dataset can be visualized in two dimensions, with each row being a sequence of tokens that may contain multiple prompts and completions.
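One way to picture the packing is the greedy sketch below. The layout (a prompt stored once per row, each completion given its own group ID and a parent ID pointing at its prompt) is an assumption made for illustration, as are the function name and the example tokens; the authors' actual packing code may differ. It also assumes that a prompt plus any single completion fits in one row.

```python
def pack(tasks, seq_len):
    """Greedily pack prompts and completions into rows of at most seq_len tokens.

    Each row holds parallel lists of tokens, group IDs, and parent IDs.
    """
    rows = []
    row = None
    next_group = 0

    def new_row():
        nonlocal row
        row = {"tokens": [], "group_ids": [], "parent_ids": []}
        rows.append(row)

    def append(tokens, group, parent):
        row["tokens"].extend(tokens)
        row["group_ids"].extend([group] * len(tokens))
        row["parent_ids"].extend([parent] * len(tokens))

    new_row()
    for prompt, completions in tasks:
        prompt_group = None  # group ID of this prompt's copy in the current row
        for completion in completions:
            needed = len(completion) + (len(prompt) if prompt_group is None else 0)
            if len(row["tokens"]) + needed > seq_len:
                new_row()
                prompt_group = None  # the prompt must be repeated in the new row
            if prompt_group is None:
                prompt_group = next_group
                next_group += 1
                append(prompt, prompt_group, prompt_group)
            append(completion, next_group, prompt_group)
            next_group += 1
    return [r for r in rows if r["tokens"]]

# One hypothetical task: a 3-token prompt with three sampled completions.
tasks = [(["p1", "p2", "p3"], [["a"], ["b", "c"], ["d"]])]
rows = pack(tasks, seq_len=8)
```

Here the prompt is stored once instead of three times, so the row holds 7 tokens rather than the 13 that separate prompt/completion pairs would need. With prompts of over 1,000 tokens and dozens of samples per task, the savings are far larger.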

With the data tightly packed, it's time for tuning. The models are already pre-trained and instruction-tuned, are fairly intelligent, and are good at following instructions. They cannot yet reliably solve Temporal Clue puzzles, but they occasionally succeed, and that is enough. By increasing the probability of good reasoning and decreasing the probability of bad reasoning, the model is gradually guided toward "master sleuth" status. This is done with standard machine learning techniques, using a policy gradient approach to compute the loss and shift the weights in a favorable direction.

For training, they used torchtune, a library provided by the PyTorch team. Torchtune has highly efficient decoder-only transformer implementations for popular models including Llama, Gemma, Phi, and others. While they primarily used the Qwen models for this project, they also experimented with Meta's Llama 8B and 70B models. Torchtune also provides memory-saving and performance-enhancing utilities, including:

- Activation checkpointing

- Activation offloading

- Quantization

- Parameter-efficient fine-tuning (PEFT), for example Low-Rank Adaptation (LoRA)

For a complete list of supported optimizations, see the torchtune README.

In addition, torchtune supports multi-device (and now multi-node) training, making it ideal for large models. It supports both Fully Sharded Data Parallel (FSDP) and Tensor Parallel (TP) training, which can be used in combination. It also provides about a dozen recipes, encouraging users to copy and customize them for their own use cases. The authors created a modified version of the full fine-tuning recipe that supports:

- Multi-device and single-device training

- Reference model loading and weight swapping for computing KL divergence

- Advanced causal mask calculation using group IDs and parent IDs

- GRPO loss integration and component logging

The recipe is publicly available. In the future, they hope to add tensor parallelism support and explore PEFT and quantization.
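A causal mask built from group IDs and parent IDs might work along the lines of this sketch. The rule used here (a token may attend to earlier tokens in its own group or in its parent's group) is an assumption chosen for illustration, not a description of the authors' recipe, and the example IDs are hypothetical.

```python
def packed_causal_mask(group_ids, parent_ids):
    """mask[i][j] is True when token i may attend to token j."""
    n = len(group_ids)
    return [
        [
            j <= i and (group_ids[j] == group_ids[i] or group_ids[j] == parent_ids[i])
            for j in range(n)
        ]
        for i in range(n)
    ]

# One shared prompt (group 0) followed by two completions (groups 1 and 2).
group_ids = [0, 0, 1, 1, 2]
parent_ids = [0, 0, 0, 0, 0]
mask = packed_causal_mask(group_ids, parent_ids)
```

Both completions can see the shared prompt, but the second completion cannot see the first, so each is scored as if it had been generated on its own. A real implementation would presumably also need position IDs that restart after the prompt for every completion.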

RL training involves choosing a large number of hyperparameters. While training the models, they tested various configurations and largely settled on the following:

- Model: Qwen 2.5 Instruct 14B and 32B

- Number of tasks per iteration: 32

- Number of samples per task per iteration: 50

- Total number of samples per iteration: 32 * 50 = 1600

- Learning rate: 6e-6

- Microbatch size: 4 sequences for 14B model, 8 sequences for 32B model

- Batch size: variable, depending on the number of sequences

The batch size is variable because response lengths may change during training, sequence packing efficiency fluctuates from iteration to iteration, and zero-advantage responses (i.e., responses that give the model no positive or negative feedback) are discarded. In one run, they tried adjusting the learning rate dynamically based on batch size, but this produced a learning rate that was too high for small batches and had to be capped. The capped version was not significantly different from using a constant learning rate, but tuning batch size and learning rate remains an interesting area for future experiments.
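Why discarding zero-advantage responses makes the batch size vary can be seen in a short sketch. The reward values and puzzle names are hypothetical, and the advantage is left unnormalized because only whether it is zero matters here.

```python
def drop_zero_advantage(rewards_by_task):
    """Keep only responses whose reward differs from their group's mean."""
    kept = []
    for task, rewards in rewards_by_task.items():
        baseline = sum(rewards) / len(rewards)
        for index, reward in enumerate(rewards):
            advantage = reward - baseline
            if advantage != 0.0:
                kept.append((task, index, advantage))
    return kept

# Hypothetical rewards for four puzzles, four samples each.
rewards_by_task = {
    "puzzle-a": [1.0, 1.0, 1.0, 1.0],  # always solved: nothing to learn
    "puzzle-b": [0.0, 1.0, 0.5, 0.5],  # two responses sit exactly on the mean
    "puzzle-c": [0.0, 0.0, 0.0, 0.0],  # never solved: nothing to learn
    "puzzle-d": [0.0, 1.0, 0.0, 0.0],  # every response carries signal
}
kept = drop_zero_advantage(rewards_by_task)
```

Of the 16 sampled responses, only 6 remain for training. As the model improves and more puzzles are solved by every sample, that fraction shifts, so the effective batch size changes from one iteration to the next.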

They also ran brief experiments that increased the number of tasks per iteration while decreasing the number of samples per task, and vice versa, keeping the total number of samples per iteration roughly equal. These changes showed no meaningful differences over a short training period, suggesting that the recipe is robust to different tradeoffs between the number of tasks and the number of samples per task.

Results

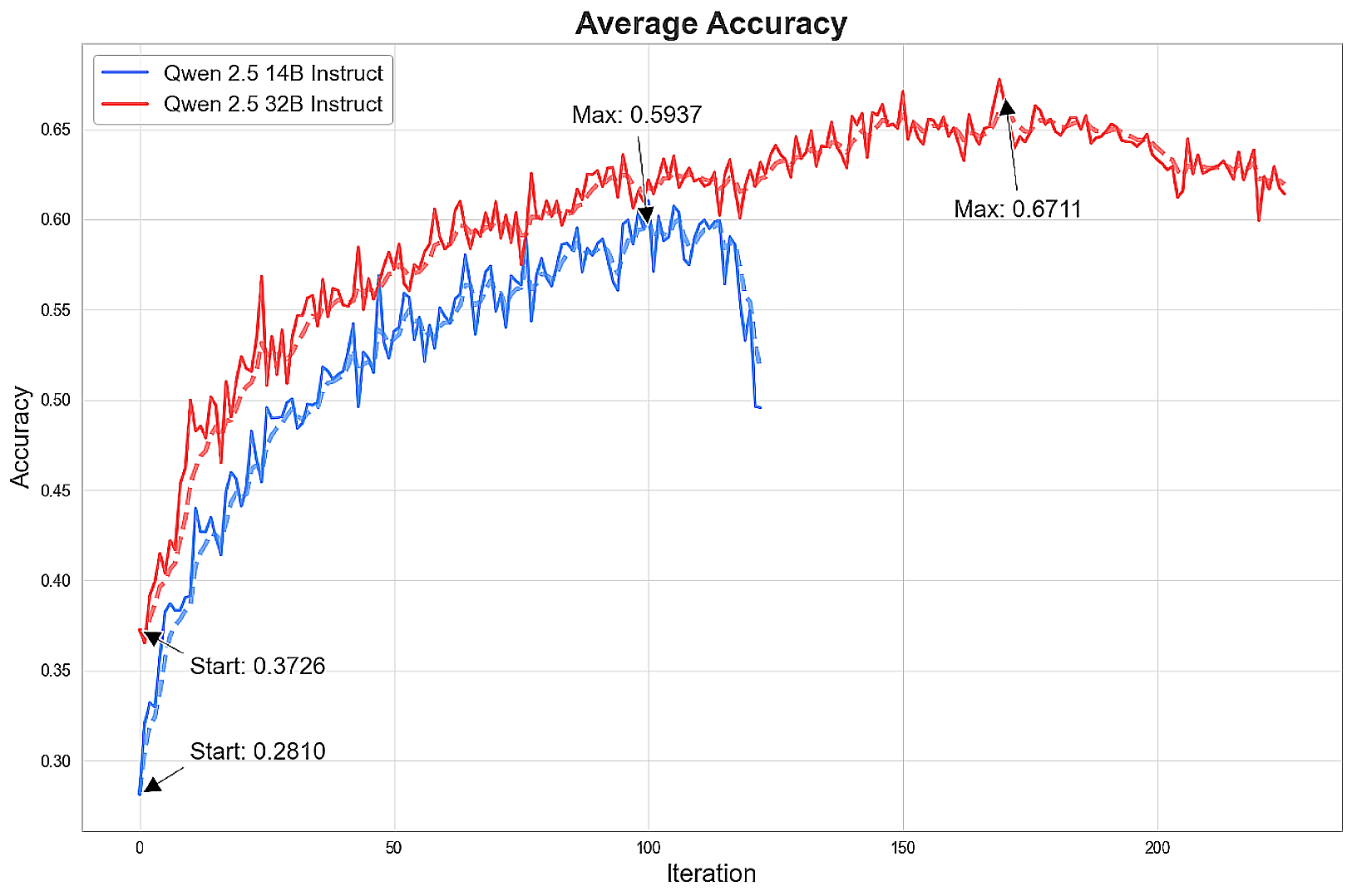

After training the models for more than 100 iterations, they reached frontier-level reasoning performance.

The models improve rapidly at first, then the accuracy gains taper off and eventually give way to declines, sometimes dramatic ones. At its best, the 14B model approaches the performance of Claude Sonnet 3.7 at 16k tokens, and the 32B model nearly matches Sonnet's results at 64k tokens.

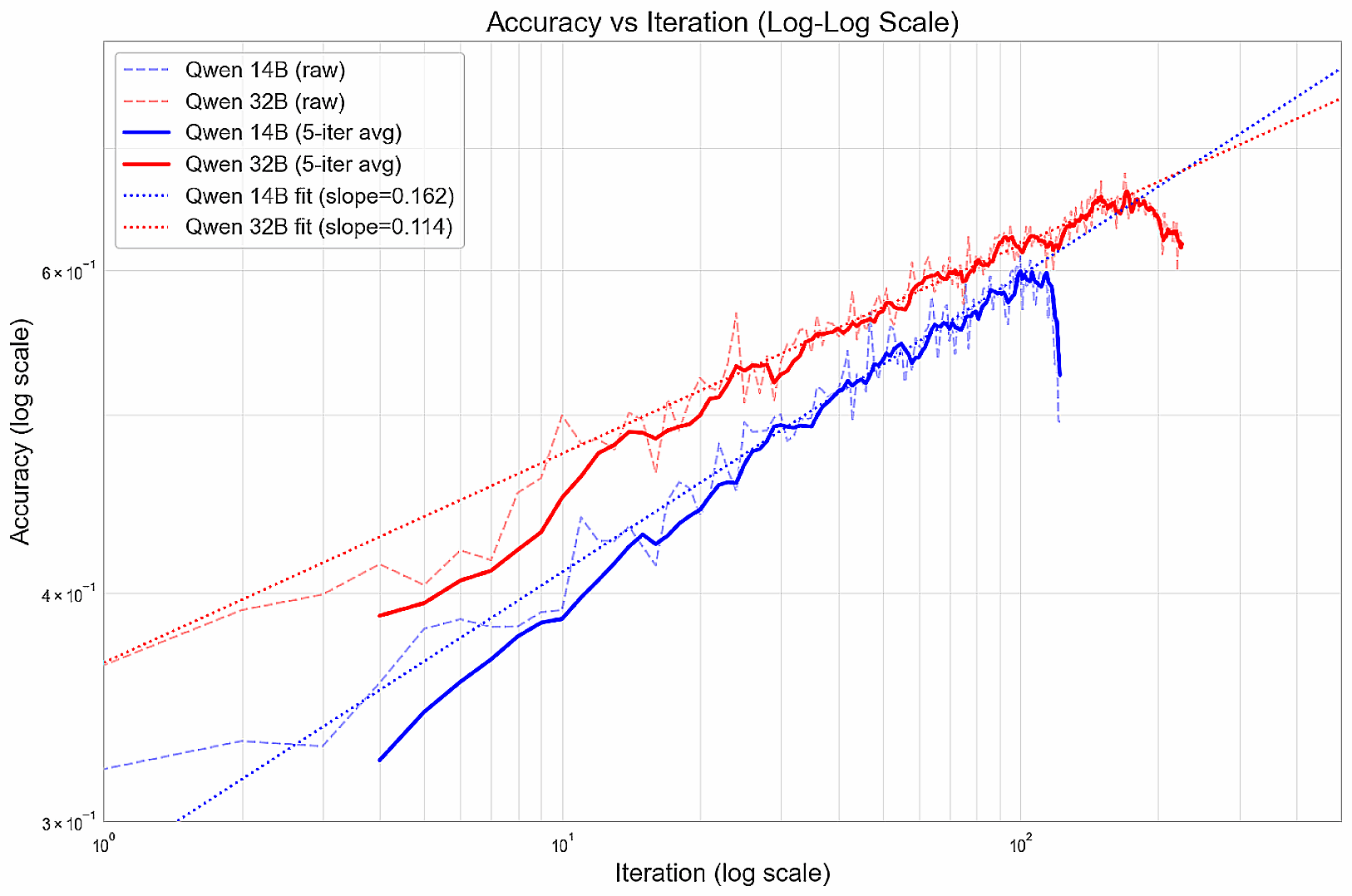

During training, performance improvement follows a power law, forming a linear relationship on a log-log plot (before the deterioration sets in).
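A power law $y = a x^b$ becomes the straight line $\log y = \log a + b \log x$ on log-log axes, so it can be fitted with ordinary least squares on the logarithms. The sketch below shows this on synthetic points generated from a known power law; the numbers are illustrative and are not measurements from the study.

```python
import math

def fit_power_law(xs, ys):
    """Least-squares fit of y = a * x**b via a straight line in log-log space."""
    log_x = [math.log(x) for x in xs]
    log_y = [math.log(y) for y in ys]
    n = len(xs)
    mean_x = sum(log_x) / n
    mean_y = sum(log_y) / n
    slope = (sum((lx - mean_x) * (ly - mean_y) for lx, ly in zip(log_x, log_y))
             / sum((lx - mean_x) ** 2 for lx in log_x))
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), slope  # (a, b)

# Synthetic points generated from y = 0.3 * x**0.2 (not measurements from the study).
steps = [1, 2, 4, 8, 16, 32]
scores = [0.3 * s ** 0.2 for s in steps]
a, b = fit_power_law(steps, scores)
```

The fitted slope `b` is the power-law exponent. Applied to real training curves, systematic deviation from the fitted line would be one way to spot where the deterioration begins.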

They suspect that the models may converge prematurely on greedy strategies that work at the outset but limit their long-term prospects. Next steps could include exploring ways to encourage diverse responses, building competence incrementally (e.g., through curriculum learning), or assigning greater rewards to particularly good solutions to incentivize thorough exploration.

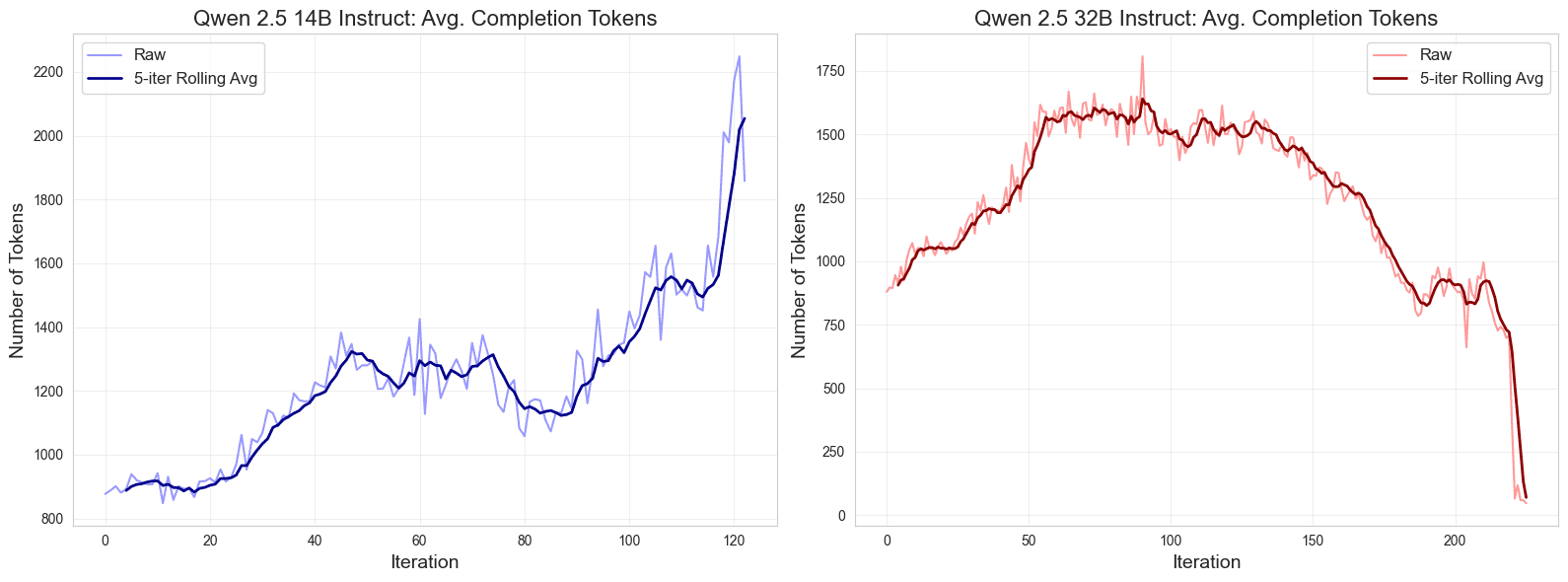

In addition, they noticed an interesting pattern in output length during training. Initially, the responses grow longer, then stabilize, and then diverge near the end of training: the 14B model's responses become longer while the 32B model's responses become shorter, especially after peak performance is reached.

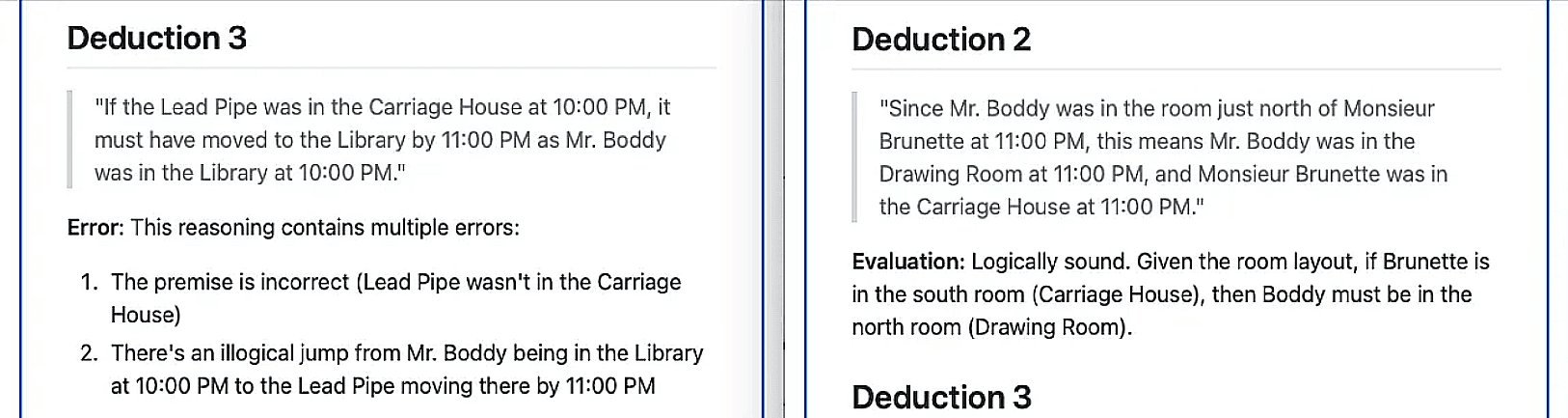

To qualitatively assess improvements in logical reasoning, they had the strongest frontier model, Claude Sonnet 3.7, identify the inferences made by the Qwen 32B model on similar puzzles and assess their soundness, both before and after training for more than 100 iterations. Sonnet identified 6 inferences from the base model, all but 1 of which were judged to be flawed. In contrast, it identified 7 inferences from the trained model, all but 1 of which were judged to be logically sound.

Finally, assuming sufficient throughput with on-demand deployments, they estimated the cost of the Qwen models based on Fireworks AI's serverless pricing tiers. They plotted accuracy against the natural logarithm of the average inference cost per response and observed a clear linear Pareto frontier among the untuned models. By successfully training open-source models to frontier-level accuracy, they significantly improved the tradeoff between cost and accuracy.
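The notion of a Pareto frontier in cost and accuracy can be made concrete with the untuned models from the benchmark table. This is a minimal sketch: the function name is hypothetical, and the costs and accuracies are copied from that table.

```python
import math

def pareto_frontier(points):
    """Keep models that no other model beats on both cost and accuracy."""
    frontier = []
    for name, cost, accuracy in points:
        dominated = any(
            other_cost <= cost and other_acc >= accuracy
            and (other_cost < cost or other_acc > accuracy)
            for _, other_cost, other_acc in points
        )
        if not dominated:
            frontier.append((name, math.log(cost), accuracy))
    return sorted(frontier, key=lambda p: p[1])  # cheapest first

# (model, average cost in USD, average accuracy in %) from the benchmark table.
benchmarks = [
    ("DeepSeek R1", 0.029, 51.6),
    ("Sonnet 3.7 (no reasoning)", 0.017, 51.7),
    ("Sonnet 3.7 (16k)", 0.222, 61.7),
    ("Sonnet 3.7 (64k)", 0.392, 69.5),
    ("Qwen 2.5 14B Instruct", 0.001, 28.1),
    ("Qwen 2.5 32B Instruct", 0.002, 37.3),
]
frontier = pareto_frontier(benchmarks)
```

On these figures, every model except DeepSeek R1 lies on the frontier; R1 is narrowly dominated by Sonnet 3.7 without reasoning, which is both cheaper and slightly more accurate. A trained model improves the tradeoff when it lands above this frontier, that is, when it reaches higher accuracy than the untuned models of similar cost.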

Summary

In this investigation, they set out to explore whether smaller, open-source language models could achieve frontier-level reasoning capabilities through reinforcement learning. After training Qwen 14B and 32B models on challenging Temporal Clue puzzles using carefully chosen hyperparameters and the GRPO method, they achieved impressive performance gains. These improvements bring open-source models to the frontier of reasoning performance while significantly reducing cost. The results highlight the potential of reinforcement learning to efficiently train open models on complex reasoning tasks.

As mentioned earlier, the dataset, experiments, training recipe, and model weights (14B, 32B) are freely available under the MIT license.

In addition, they found that as few as 16 training examples can yield meaningful performance gains of 10-15%.

© Copyright notice

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
