One of the easiest and most understandable tutorials for building RAG apps

The launch of ChatGPT marked an important moment: it helped organizations envision new application scenarios and accelerated their adoption of AI. A typical enterprise application lets users ask a chatbot questions and get answers grounded in the company's internal knowledge base. However, ChatGPT and other large language models are not trained on this internal data, so they cannot answer such questions directly. An intuitive solution is to give the model the internal knowledge base as context, i.e., as part of the prompt. However, most large language models have a token limit of only a few thousand tokens, far too small to hold the knowledge base of most organizations. Off-the-shelf large language models alone therefore cannot solve this problem. Instead, the following two popular approaches can be used individually or in combination.

Fine-tuning an open-source large language model

This approach fine-tunes an open-source large language model such as Llama 2 on the customer's corpus. The fine-tuned model absorbs the customer's domain-specific knowledge and can answer relevant questions without additional context. However, many customers' corpora are small and often contain grammatical errors, which makes fine-tuning a large language model difficult. That said, encouraging results have been observed when a fine-tuned large language model is used within the retrieval-augmented generation technique discussed below.

Retrieval-augmented generation

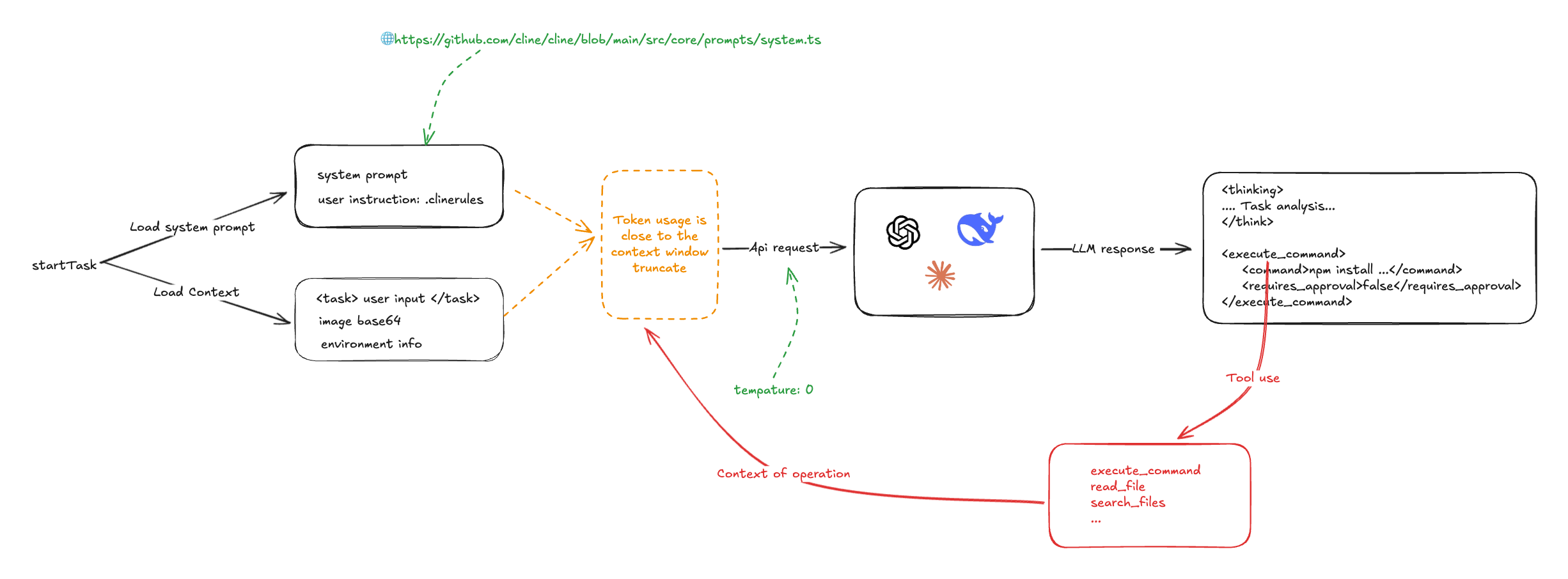

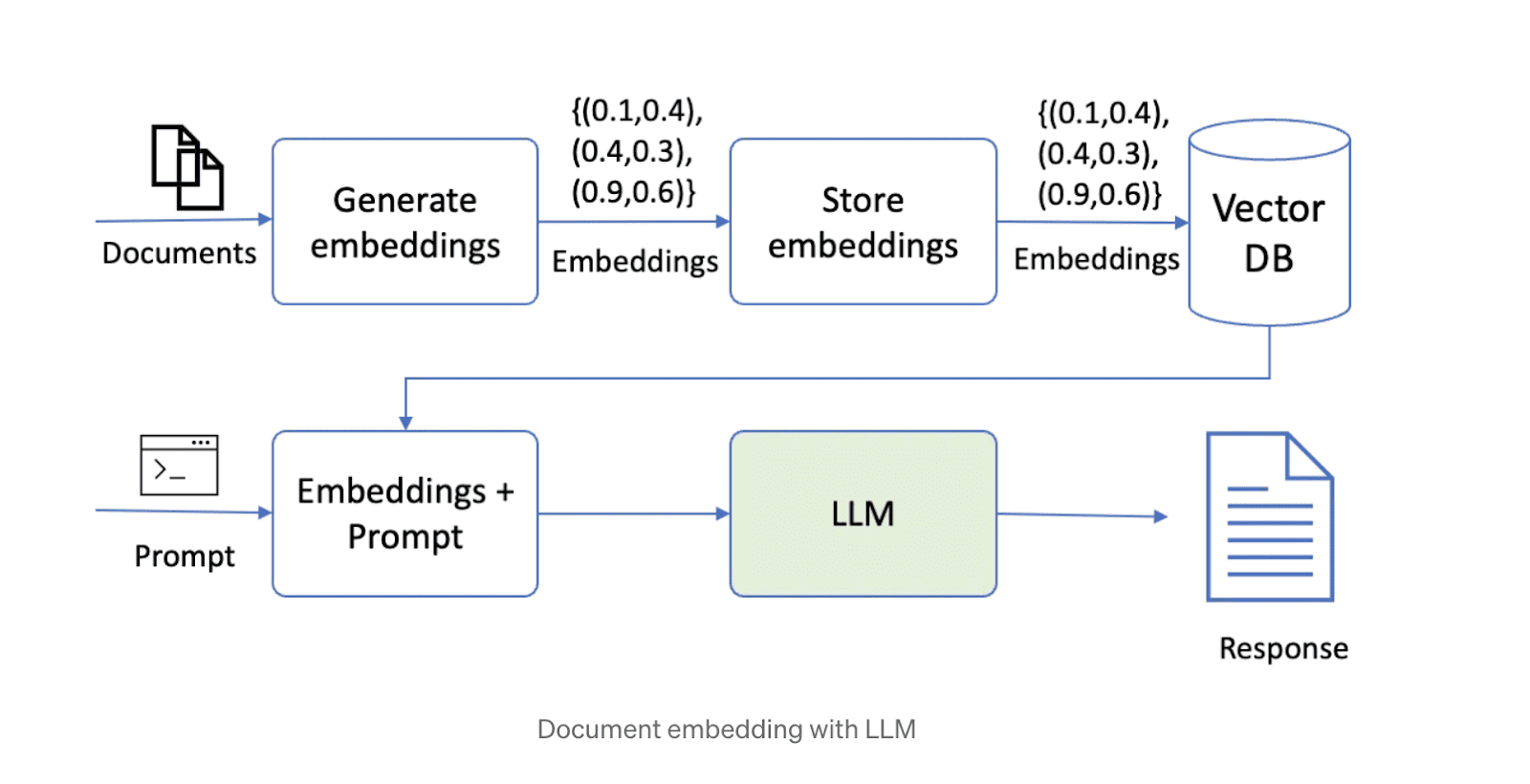

The second approach is retrieval-augmented generation (RAG). It first splits the data into chunks and stores them in a vector database. To answer a question, the system retrieves the chunks most relevant to the query and passes them to the large language model, which generates the answer. Several open-source stacks that combine large language models, vector stores, and orchestration frameworks are currently popular. A schematic of a RAG-based solution is shown below.

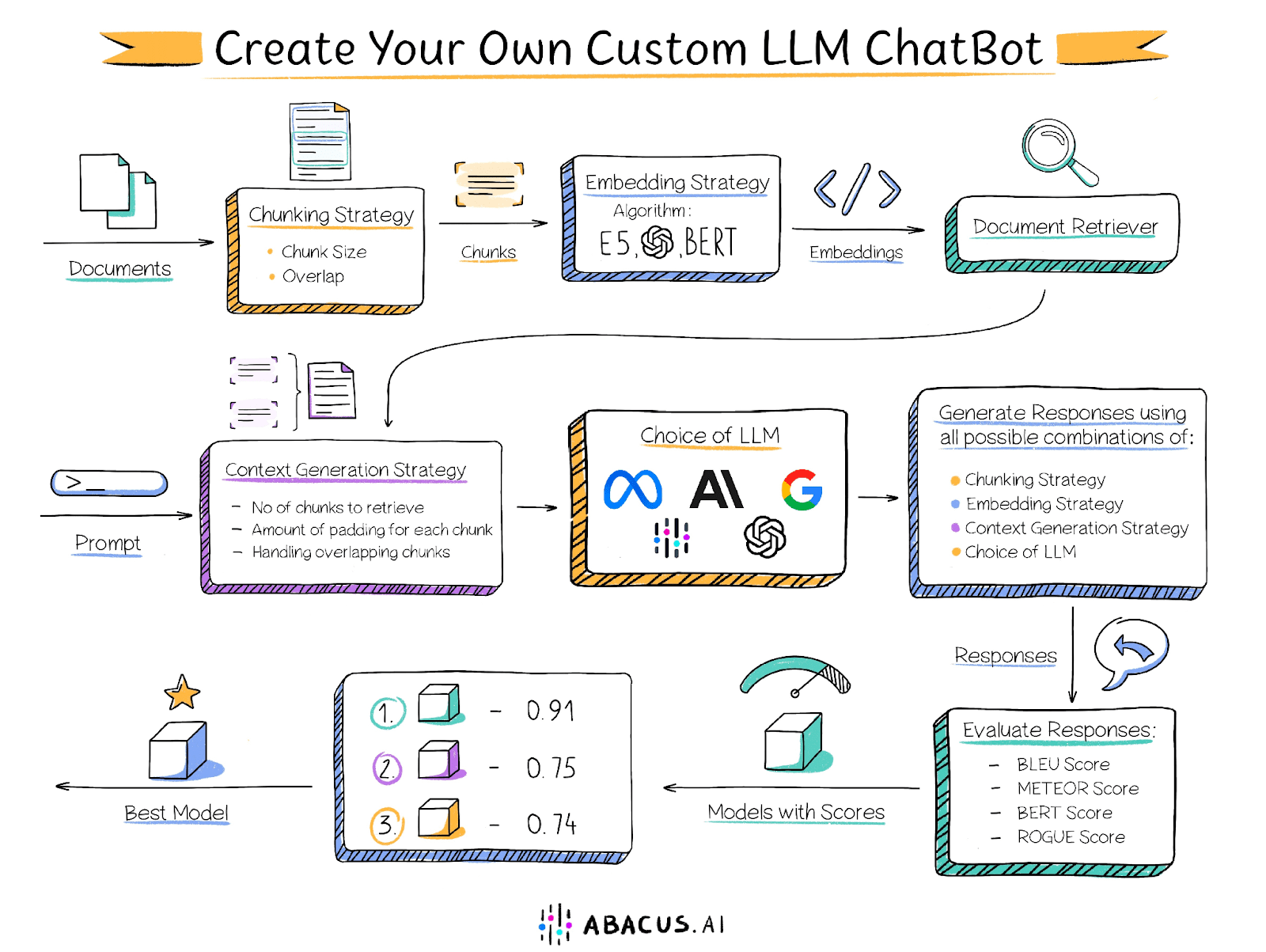
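The flow in the schematic (chunk, retrieve, prompt, generate) can be sketched in a few functions. This is a minimal sketch under simplifying assumptions: documents are chunked by word count, relevance is a toy word-overlap score standing in for a real embedding model and vector database, and `call_llm` is a hypothetical placeholder for whichever model API you use.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and keep only alphanumeric word tokens.
    return re.findall(r"[a-z0-9]+", text.lower())


def chunk_words(text: str, size: int) -> list[str]:
    # Split the document into consecutive, non-overlapping chunks of `size` words.
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def score(query: str, chunk: str) -> int:
    # Toy relevance score: shared word occurrences (a stand-in for vector similarity).
    shared = Counter(tokenize(query)) & Counter(tokenize(chunk))
    return sum(shared.values())


def retrieve(query: str, chunks: list[str], k: int) -> list[str]:
    # Rank all chunks by score and keep the k best (ties keep document order).
    return sorted(chunks, key=lambda ch: score(query, ch), reverse=True)[:k]


def build_prompt(query: str, context: list[str]) -> str:
    # Put the retrieved chunks ahead of the question in the prompt.
    joined = "\n---\n".join(context)
    return f"Answer using only the context below.\n{joined}\n\nQuestion: {query}"


def call_llm(prompt: str) -> str:
    # Hypothetical placeholder: replace with a call to a real model API.
    return "<model answer>"


knowledge_base = (
    "Employees receive twenty vacation days per year. "
    "Vacation requests go to your manager. "
    "Expense reports are due on the fifth of each month."
)
question = "How many vacation days do employees get?"

chunks = chunk_words(knowledge_base, size=6)
top_chunks = retrieve(question, chunks, k=2)
prompt = build_prompt(question, top_chunks)
answer = call_llm(prompt)
print(top_chunks[0])  # Employees receive twenty vacation days per
```

Note that a six-word chunk already splits the first sentence in two, which previews the chunk-size and overlap issues discussed next.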

However, building a solution this way poses some challenges. Its performance depends on many factors, such as the size of the text chunks, the overlap between chunks, and the embedding technique, and the user must determine the optimal setting for each of them. Below are a few key factors that may affect performance:

Document chunk size

As mentioned earlier, a large language model's context length is limited, so documents must be split into smaller chunks. The chunk size is critical to the solution's performance. Chunks that are too small cannot support questions that require combining information across multiple passages, while chunks that are too large quickly fill the context window, so fewer chunks can be passed to the model. In addition, chunk size, together with the embedding technique, determines how relevant the retrieved chunks are to the question.
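The trade-off between chunk size and the number of chunks is simple arithmetic. The sketch below assumes a fixed number of tokens is reserved for instructions, the question, and the answer; the 4,096-token window and 1,024 reserved tokens are illustrative numbers, not properties of any particular model.

```python
def max_chunks(context_window: int, reserved: int, chunk_tokens: int) -> int:
    # Tokens left for retrieved context after reserving room for the
    # instructions, the question, and the generated answer.
    available = context_window - reserved
    return max(0, available // chunk_tokens)


# Illustrative numbers only: a 4,096-token window with 1,024 tokens reserved.
for size in (128, 512, 1024):
    print(size, max_chunks(4096, 1024, size))
# 128 24
# 512 6
# 1024 3
```

Quadrupling the chunk size from 128 to 512 tokens cuts the number of chunks the model can see from 24 to 6.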

Overlap between neighboring chunks

Chunks need an appropriate overlap so that information is not cut off arbitrarily at chunk boundaries. Ideally, all the context needed to answer a question appears in full in at least one chunk. Too much overlap solves this problem but creates a new one: many overlapping chunks contain similar information, so the search results fill up with duplicate content.
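A common way to implement overlap is a sliding window whose start advances by `size - overlap` tokens. This is a minimal sketch that treats whitespace-separated words as tokens, which is an assumption; real pipelines usually count model tokens.

```python
def chunk_with_overlap(tokens: list[str], size: int, overlap: int) -> list[list[str]]:
    # Each window starts `size - overlap` tokens after the previous one,
    # so consecutive chunks share `overlap` tokens.
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    step = size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + size])
        if start + size >= len(tokens):
            break  # this window already reaches the end of the text
    return chunks


words = "the quick brown fox jumps over the lazy dog".split()
for chunk in chunk_with_overlap(words, size=4, overlap=1):
    print(" ".join(chunk))
# the quick brown fox
# fox jumps over the
# the lazy dog
```

Raising `overlap` makes it more likely that a complete answer sits inside one chunk, at the cost of more chunks and more near-duplicate search results.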

Embedding technique

An embedding technique is an algorithm that converts text chunks into vectors, which are then stored in a document retriever. The technique used to embed the chunks and the questions determines how relevant the retrieved chunks are to each question, which in turn affects the quality of the context provided to the large language model.
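To show the interface an embedding technique provides (text in, fixed-length vector out, applied identically to chunks and questions), here is a toy "feature hashing" embedder. It is an assumption made for illustration only: production systems use learned embedding models, which capture meaning rather than just shared words.

```python
import hashlib
import math
import re


def embed(text: str, dims: int = 64) -> list[float]:
    # Toy hashed bag-of-words: each word adds 1 to one of `dims` buckets,
    # chosen by a stable hash (Python's built-in hash() is randomized per run).
    vec = [0.0] * dims
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        bucket = int(hashlib.md5(word.encode()).hexdigest(), 16) % dims
        vec[bucket] += 1.0
    # Scale to unit length so that a dot product equals cosine similarity.
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


chunk_vec = embed("Employees receive twenty vacation days per year.")
query_vec = embed("How many vacation days do employees get?")
similarity = sum(a * b for a, b in zip(chunk_vec, query_vec))
```

Whatever model you choose, the same embedding function must be used for the stored chunks and the incoming questions, otherwise their vectors are not comparable.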

Document retriever

A document retriever (also known as a vector store) is a database that stores embedding vectors and retrieves them quickly. The similarity measure used for nearest-neighbor matching in the retriever (e.g., dot product or cosine similarity) determines which chunks are considered relevant. Document retrievers should also be able to scale horizontally to support large knowledge bases.
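The choice of similarity measure can change which chunk counts as "nearest". The sketch below uses brute-force search over two hand-picked vectors (illustrative values, not real embeddings) to show that dot product favors larger-magnitude vectors while cosine similarity compares direction only.

```python
import math


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def cosine(a: list[float], b: list[float]) -> float:
    # Dot product of the vectors after scaling each to unit length.
    norm_a, norm_b = math.sqrt(dot(a, a)), math.sqrt(dot(b, b))
    return dot(a, b) / (norm_a * norm_b) if norm_a and norm_b else 0.0


def nearest(query, vectors, metric) -> int:
    # Brute-force search over every stored vector; real vector stores
    # usually rely on approximate nearest-neighbor indexes instead.
    return max(range(len(vectors)), key=lambda i: metric(query, vectors[i]))


query = [1.0, 1.0]
stored = [
    [1.0, 1.0],  # points in exactly the same direction as the query
    [3.0, 0.5],  # larger magnitude, different direction
]
print(nearest(query, stored, dot))     # 1: dot product rewards the longer vector
print(nearest(query, stored, cosine))  # 0: cosine only compares direction
```

If every vector is normalized to unit length before it is stored, the two measures produce the same ranking.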

Large language model

Choosing the right large language model is a critical part of the solution. The best model depends on several factors, including the characteristics of the dataset and the other factors mentioned above. To optimize the solution, it is recommended to try different large language models and see which one gives the best results. Some organizations are comfortable with this approach, while others may be unable to use GPT-4, PaLM, or Claude. Abacus.AI offers a variety of large language model options, including GPT-3.5, GPT-4, PaLM, Azure OpenAI, Claude, Llama 2, and Abacus.AI's proprietary models. In addition, Abacus.AI can fine-tune a large language model on user data and use it for retrieval-augmented generation, combining the advantages of both approaches.

Number of chunks

Some questions require information from different parts of a document, or even from several documents. For example, answering a question such as "List some movies that contain wild animals" requires passages from different movies. Sometimes the most relevant chunks do not appear at the top of the vector search results. In these cases, it is important to pass multiple chunks to the large language model for evaluation and answer generation.
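The effect of the number of retrieved chunks (often called top-k) can be seen with the movie example. This sketch uses a toy shared-word score in place of embedding similarity, and the four movie snippets are invented for illustration.

```python
import re


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def top_k(query: str, chunks: list[tuple[str, str]], k: int) -> list[tuple[str, str]]:
    # chunks are (source, text) pairs; the score is the number of shared words,
    # a toy stand-in for embedding similarity. Ties keep their original order.
    q = words(query)
    ranked = sorted(chunks, key=lambda c: len(q & words(c[1])), reverse=True)
    return ranked[:k]


chunks = [
    ("Movie A", "A lion and a zebra roam wild across the savanna."),
    ("Movie B", "A detective solves a crime in a rainy city."),
    ("Movie C", "Wild wolves hunt in the snowy forest."),
    ("Movie D", "Two chefs compete in a cooking contest."),
]
query = "List some movies that contain wild animals"

print([src for src, _ in top_k(query, chunks, k=1)])  # ['Movie A']
print([src for src, _ in top_k(query, chunks, k=2)])  # ['Movie A', 'Movie C']
```

With `k=1` the model only ever sees one movie, so a list-style answer is impossible; a larger `k` spends more of the context window but covers more sources.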

Adjusting each of these parameters requires significant user effort and involves a tedious manual evaluation process.

The Abacus.AI solution

To address this problem, Abacus.AI has taken an innovative approach that gives users AutoML capabilities. It automatically iterates over combinations of these parameters, including fine-tuning the large language model, to find the best combination for a given use case. Besides the user's documents, this requires an evaluation dataset of questions with corresponding human-written reference answers. Abacus.AI compares the responses produced by each parameter combination against these reference answers to determine the optimal configuration.
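The idea of searching over parameter combinations against an evaluation set can be sketched as an exhaustive grid search. This is not Abacus.AI's API or its actual search strategy, which the article does not describe; the search space, model names, fake pipeline, and exact-match metric below are all hypothetical.

```python
from itertools import product
from typing import Callable

# Illustrative search space; these values are assumptions, not Abacus.AI's settings.
SEARCH_SPACE = {
    "chunk_size": [256, 512],
    "overlap": [0, 64],
    "top_k": [3, 5],
    "model": ["model-a", "model-b"],  # hypothetical model names
}


def grid_search(space, eval_set, answer_fn: Callable, metric: Callable):
    """Try every parameter combination and return the best one.

    answer_fn(config, question) -> answer   (your RAG pipeline)
    metric(answer, reference) -> float      (higher is better)
    """
    keys = list(space)
    best_config, best_score = None, float("-inf")
    for values in product(*(space[k] for k in keys)):
        config = dict(zip(keys, values))
        scores = [metric(answer_fn(config, q), ref) for q, ref in eval_set]
        mean = sum(scores) / len(scores)
        if mean > best_score:  # strict ">" keeps the first config among ties
            best_config, best_score = config, mean
    return best_config, best_score


# Usage with a fake pipeline and an exact-match metric, just to exercise the loop.
def fake_pipeline(config, question):
    return "yes" if config["chunk_size"] == 512 and config["top_k"] == 5 else "no"


def exact_match(answer, reference):
    return 1.0 if answer == reference else 0.0


eval_set = [("Is the policy documented?", "yes"), ("Is there an appeal process?", "yes")]
best, best_score = grid_search(SEARCH_SPACE, eval_set, fake_pipeline, exact_match)
print(best, best_score)
```

Even this small space has 2 × 2 × 2 × 2 = 16 configurations, each evaluated on every question, which is why automating the search saves so much manual effort.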

Abacus.AI generates the following evaluation metrics, and the user can select their preferred metrics to determine which combination performs best.

BLEU score

The BLEU (Bilingual Evaluation Understudy) score is a widely used automatic evaluation metric, originally designed to assess the quality of machine translation. It aims to provide a quantitative measure of translation quality that correlates highly with human judgments.

BLEU compares a candidate translation (the machine output) with one or more reference translations (produced by humans) and measures how many n-grams the two share. Specifically, it computes n-gram precision: the fraction of n-grams (sequences of n words) in the candidate that also appear in the reference translations.
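Below is a minimal sentence-level BLEU sketch, assuming a single reference, whitespace tokenization, and uniform weights over 1- to 4-grams. It includes two standard parts of BLEU not spelled out above: candidate n-gram counts are clipped by their counts in the reference, and a brevity penalty lowers the score of candidates shorter than the reference. It applies no smoothing; maintained implementations such as sacreBLEU or NLTK handle multiple references, tokenization, and corpus-level scoring.

```python
import math
from collections import Counter


def ngrams(tokens: list[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate: str, reference: str, max_n: int = 4) -> float:
    cand, ref = candidate.split(), reference.split()
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts, ref_counts = ngrams(cand, n), ngrams(ref, n)
        # Clip each candidate n-gram count by its count in the reference.
        clipped = sum(min(count, ref_counts[g]) for g, count in cand_counts.items())
        total = sum(cand_counts.values())
        if clipped == 0 or total == 0:
            return 0.0  # unsmoothed BLEU is zero if any n-gram precision is zero
        log_precisions.append(math.log(clipped / total))
    # Brevity penalty: 1 if the candidate is longer than the reference.
    bp = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    # Geometric mean of the n-gram precisions, scaled by the brevity penalty.
    return bp * math.exp(sum(log_precisions) / max_n)


reference = "the cat sat on the mat"
print(bleu("the cat sat on the mat", reference))  # 1.0
print(bleu("the cat sat on the mat today", reference))  # about 0.809
```

The second candidate has precisions 6/7, 5/6, 4/5, and 3/4, whose geometric mean is (3/7)^(1/4) ≈ 0.809; it is longer than the reference, so no brevity penalty applies.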

METEOR score

The METEOR (Metric for Evaluation of Translation with Explicit ORdering) score is another automatic metric commonly used to assess the quality of machine translation. It was designed to address some shortcomings of metrics such as BLEU: it explicitly aligns words between the candidate and the reference, penalizes differences in word order, and can match word stems and synonyms in addition to identical words.
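The core of METEOR can be sketched with exact word matches only. This simplified version, an assumption for illustration, aligns each candidate word greedily to the first unused identical reference word, then applies the formulas from the original METEOR paper: a recall-weighted harmonic mean Fmean = 10PR / (R + 9P) and a fragmentation penalty 0.5 · (chunks / matches)³. Full METEOR also matches stems and synonyms and chooses the alignment more carefully.

```python
def meteor_exact(candidate: str, reference: str) -> float:
    cand, ref = candidate.split(), reference.split()
    used: set[int] = set()
    alignment: list[tuple[int, int]] = []  # (candidate index, reference index)
    for i, word in enumerate(cand):
        for j, ref_word in enumerate(ref):
            if j not in used and ref_word == word:
                used.add(j)
                alignment.append((i, j))
                break
    matches = len(alignment)
    if matches == 0:
        return 0.0
    precision, recall = matches / len(cand), matches / len(ref)
    f_mean = 10 * precision * recall / (recall + 9 * precision)
    # A "chunk" is a run of matches that are adjacent in both sentences.
    chunks = 1
    for (i0, j0), (i1, j1) in zip(alignment, alignment[1:]):
        if i1 != i0 + 1 or j1 != j0 + 1:
            chunks += 1
    penalty = 0.5 * (chunks / matches) ** 3
    return f_mean * (1 - penalty)


reference = "the cat sat on the mat"
print(meteor_exact("the cat sat on the mat", reference))  # about 0.998
print(meteor_exact("mat the on sat cat the", reference))  # 0.5
```

Both candidates contain exactly the same words, but the scrambled one forms six separate chunks, so its score is halved. This is the word-order sensitivity that distinguishes METEOR from pure unigram overlap.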

BERT Score

The BERT score (BERTScore) is an automatic evaluation metric designed to assess the quality of generated text. It is computed from the similarity between each token in the candidate sentence and the tokens in the reference sentence. Instead of requiring exact matches, it uses contextual embeddings to measure token similarity.
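The matching step of BERTScore can be sketched independently of the model that produces the embeddings: each reference token is matched to its most similar candidate token (recall), each candidate token to its most similar reference token (precision), and F1 combines the two. In this sketch the token vectors are hand-made toy values, an assumption in place of real contextual embeddings from a BERT-style model; the `bert-score` Python package provides the full metric, including optional IDF weighting and baseline rescaling.

```python
import math


def normalize(v: list[float]) -> list[float]:
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def cos(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))


def bertscore_from_embeddings(cand_vecs, ref_vecs):
    # Greedy matching: each token is paired with its most similar token
    # on the other side, and the similarities are averaged.
    recall = sum(max(cos(r, c) for c in cand_vecs) for r in ref_vecs) / len(ref_vecs)
    precision = sum(max(cos(c, r) for r in ref_vecs) for c in cand_vecs) / len(cand_vecs)
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Toy 3-dimensional "embeddings" (hypothetical values).
car = [1.0, 0.1, 0.0]
automobile = [0.9, 0.2, 0.0]
banana = [0.0, 0.0, 1.0]
print(bertscore_from_embeddings([automobile], [car])[2])  # about 0.99
print(bertscore_from_embeddings([banana], [car])[2])      # 0.0
```

This is why BERTScore can credit "automobile" for "car" even though BLEU or ROUGE would count no overlap at all.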

ROUGE score

The ROUGE (Recall-Oriented Understudy for Gisting Evaluation) scores are a set of automatic evaluation metrics commonly used in natural language processing. They were originally designed to assess text summarization systems but are now also widely used in other areas such as machine translation and text generation.

ROUGE measures the quality of generated text (e.g., a summary or translation) by comparing it with one or more reference texts, usually human-written summaries or translations. It primarily measures the overlap of n-grams (sequences of n words) and of longer word sequences, such as the longest common subsequence, between the candidate text and the reference text.
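Two common ROUGE variants are easy to sketch: ROUGE-N recall (the fraction of reference n-grams that also appear in the candidate) and ROUGE-L, based on the longest common subsequence (LCS). This sketch assumes a single reference, whitespace tokenization, and the F1 form of ROUGE-L; official implementations add stemming, multiple references, and configurable weighting.

```python
from collections import Counter


def rouge_n_recall(candidate: str, reference: str, n: int = 1) -> float:
    cand, ref = candidate.split(), reference.split()
    cand_ngrams = Counter(tuple(cand[i:i + n]) for i in range(len(cand) - n + 1))
    ref_ngrams = Counter(tuple(ref[i:i + n]) for i in range(len(ref) - n + 1))
    overlap = sum((cand_ngrams & ref_ngrams).values())  # clipped matches
    total = sum(ref_ngrams.values())
    return overlap / total if total else 0.0


def lcs_length(a: list[str], b: list[str]) -> int:
    # Classic dynamic-programming table for the longest common subsequence.
    dp = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i, x in enumerate(a, 1):
        for j, y in enumerate(b, 1):
            dp[i][j] = dp[i - 1][j - 1] + 1 if x == y else max(dp[i - 1][j], dp[i][j - 1])
    return dp[-1][-1]


def rouge_l_f1(candidate: str, reference: str) -> float:
    cand, ref = candidate.split(), reference.split()
    lcs = lcs_length(cand, ref)
    if lcs == 0:
        return 0.0
    p, r = lcs / len(cand), lcs / len(ref)
    return 2 * p * r / (p + r)


reference = "the cat sat on the mat"
candidate = "the cat is on the mat"
print(rouge_n_recall(candidate, reference, n=1))  # 5 of 6 reference words matched
print(rouge_n_recall(candidate, reference, n=2))  # 3 of 5 reference bigrams matched
print(rouge_l_f1(candidate, reference))           # LCS "the cat on the mat" has 5 words
```

Unlike BLEU, which is precision-oriented, ROUGE-N divides by the reference length, so it rewards candidates that cover the reference content.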

Each of these scores is typically reported on a scale from 0 to 1, with higher scores indicating better model performance. With Abacus.AI, you can experiment with multiple models and metrics to quickly find the combination that works best for your data and your specific application.

© Copyright notes

Article copyright © AI Sharing Circle. All rights reserved; please do not reproduce without permission.
