Gemini Cursor: an AI desktop assistant built on Gemini that can see, hear, and speak

Overview

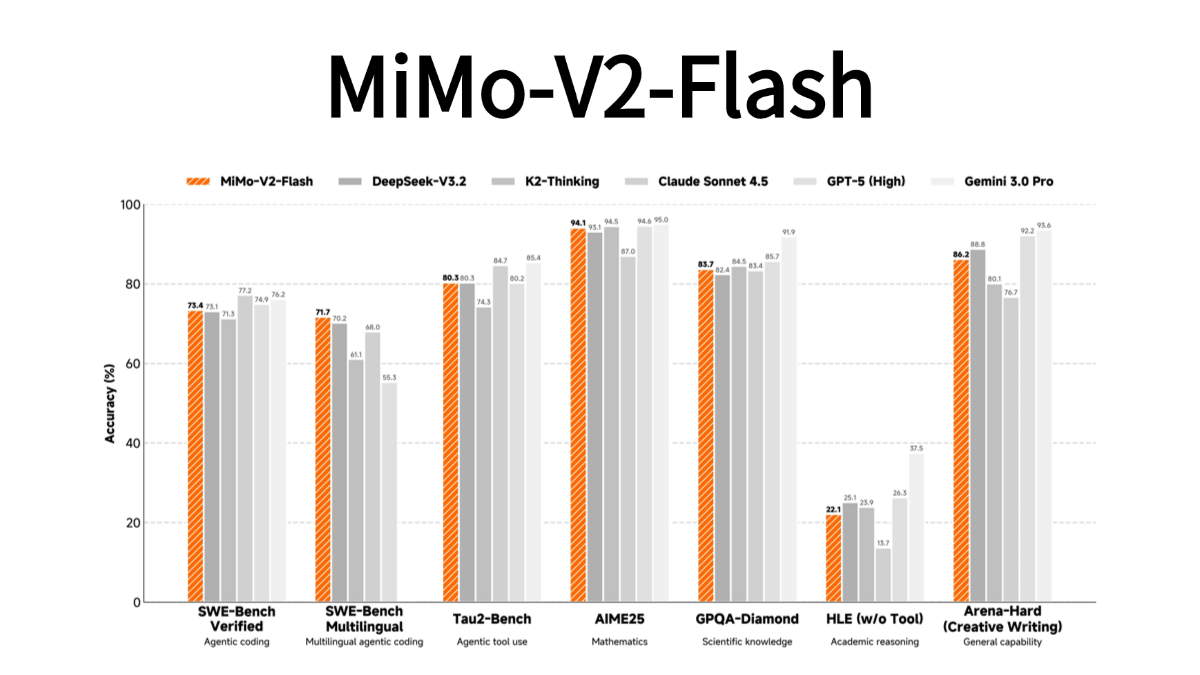

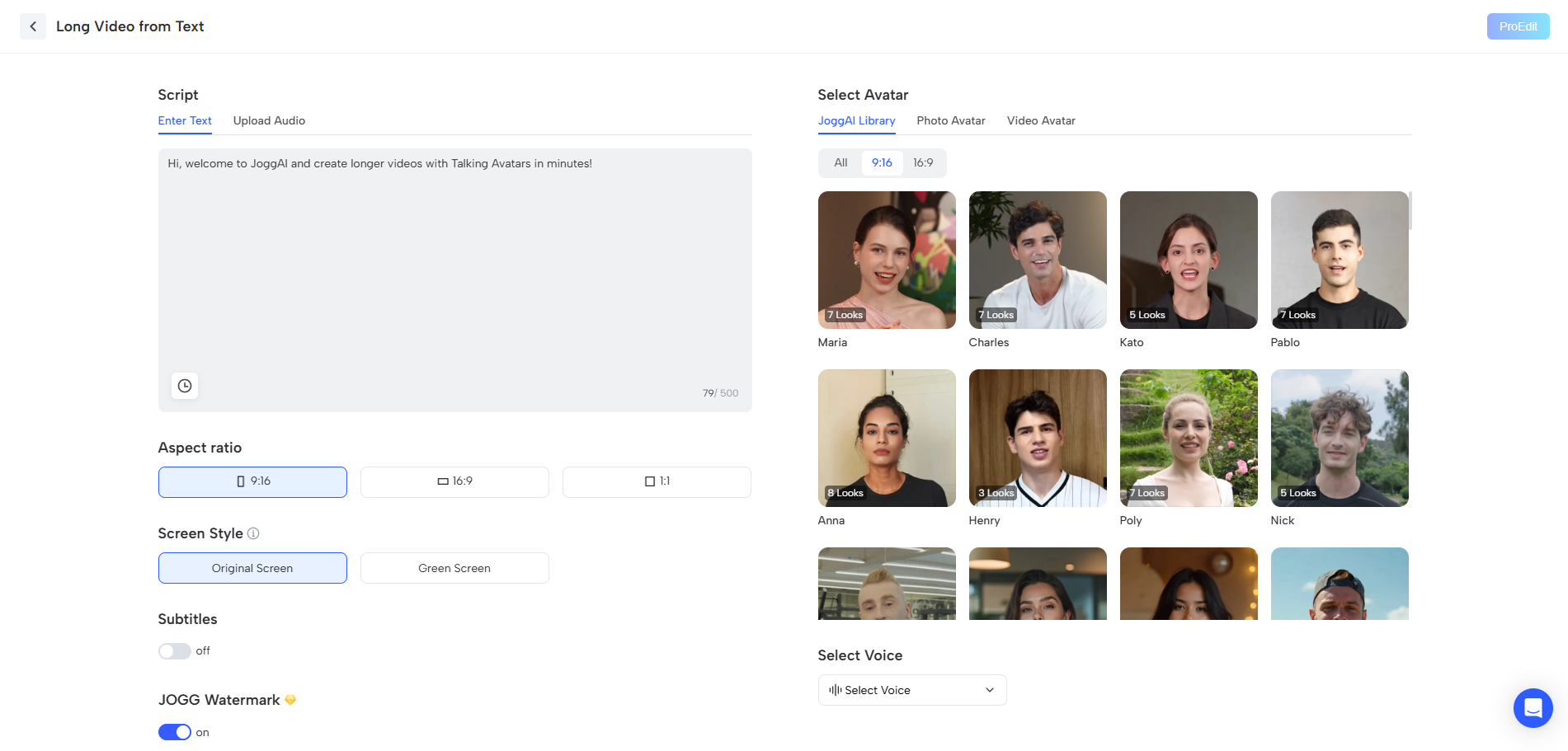

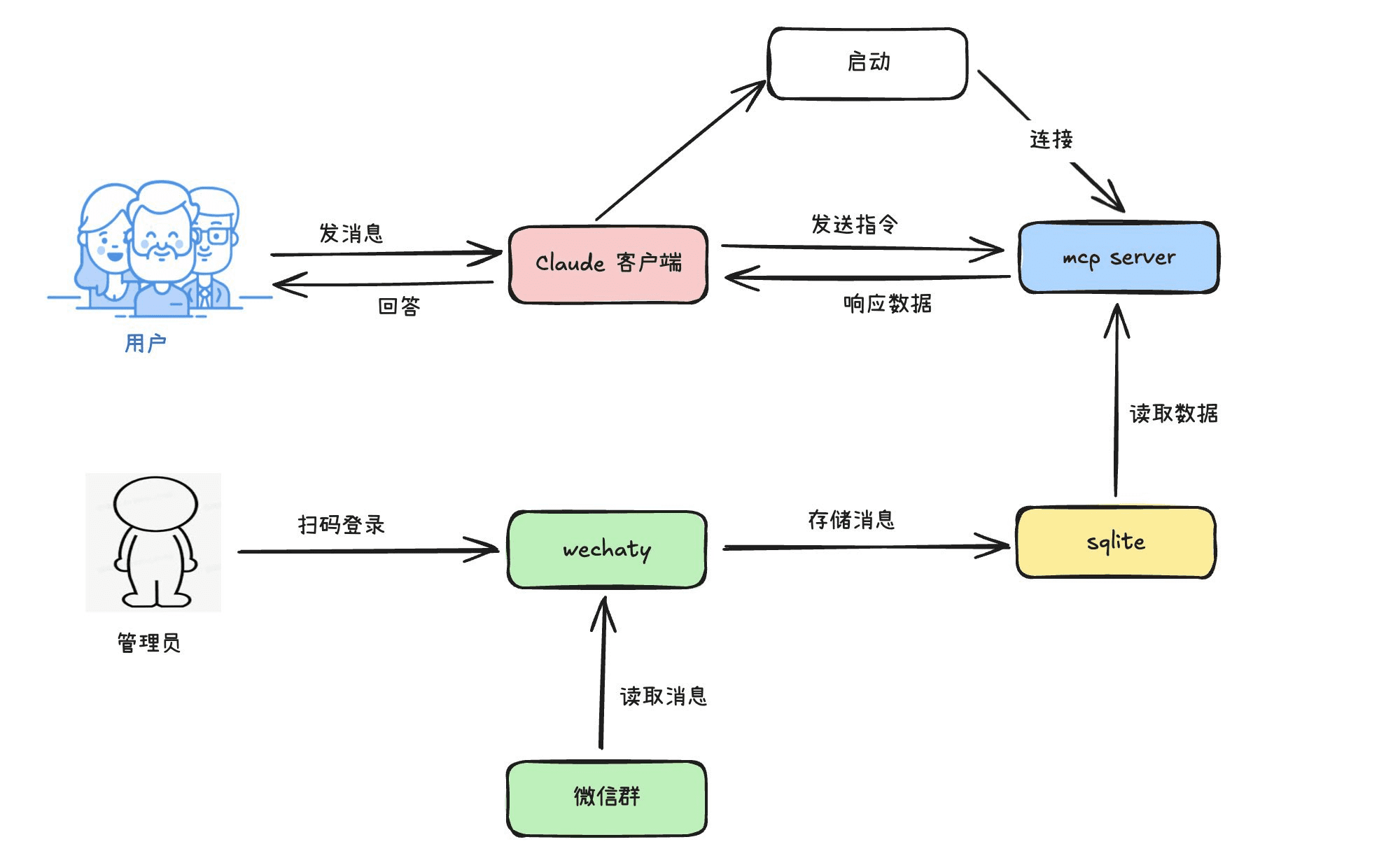

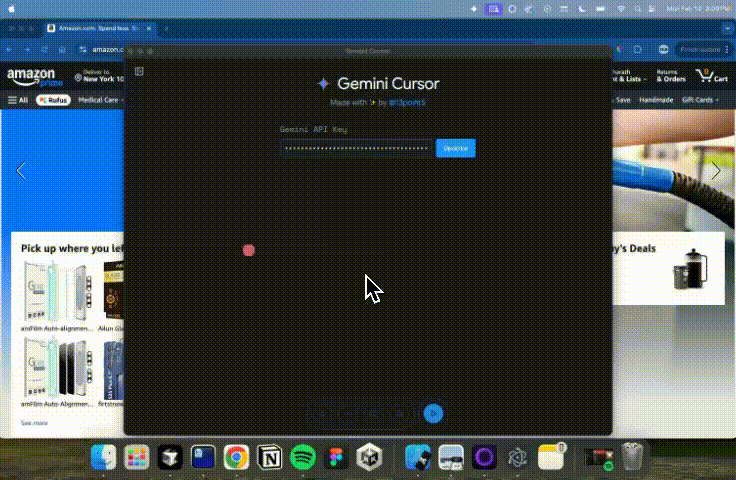

GeminiCursor is a desktop assistant built on Google's Gemini 2.0 Flash (experimental) model. Through Gemini's multimodal API it can see your screen, hear you, and answer by voice, with latency low enough for real-time conversation. Created by @13point5, the project aims to help users get through complex tasks more efficiently: understanding complex diagrams in research papers, completing tasks on websites (for example, adding a payment method on Amazon), and acting as a real-time AI teacher that explains things on a whiteboard.

Features

- AI assistant: Adds an assistant to your desktop that can see the screen, hear you, and talk back.

- Multimodal interaction: Supports visual, auditory, and voice interaction for a more natural user experience.

- Real-time, low latency: Keeps latency low during conversations so responses feel immediate.

- Complex task navigation: Helps users carry out tasks on complex websites, such as adding a payment method.

- Real-time AI teacher: Teaches in real time on a whiteboard, helping users understand complex figures and architecture diagrams.

Usage Guide

Installation process

- Clone the repository:

git clone https://github.com/13point5/gemini-cursor.git

cd gemini-cursor

- Install the dependencies:

npm install

- Run the application:

npm run start

- Configure the API key:

- In the application, enter your Gemini API key.

- Click the Play button and the Share Screen button.

- Minimize the app and get started.
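For readers curious where the key ends up, here is a minimal TypeScript sketch. It assumes the app reaches Gemini 2.0 Flash through the Multimodal Live API over a WebSocket and passes the key as the `key` query parameter, as Google's Live API samples did for the experimental v1alpha release. The endpoint path comes from that API, not from the gemini-cursor source, and `buildLiveApiUrl` is a hypothetical helper name.

```typescript
// Assumed v1alpha Multimodal Live API endpoint (not taken from gemini-cursor).
const LIVE_API_ENDPOINT =
  "wss://generativelanguage.googleapis.com/ws/" +
  "google.ai.generativelanguage.v1alpha.GenerativeService.BidiGenerateContent";

// Hypothetical helper: builds the WebSocket URL the client would open,
// carrying the API key as the `key` query parameter.
function buildLiveApiUrl(apiKey: string): string {
  const key = apiKey.trim();
  if (key.length === 0) {
    throw new Error("Enter a Gemini API key first");
  }
  const url = new URL(LIVE_API_ENDPOINT);
  url.searchParams.set("key", key);
  return url.toString();
}

// Usage (in a browser or Electron renderer):
// const socket = new WebSocket(buildLiveApiUrl(userEnteredKey));
```

Trimming the input guards against the stray spaces that often come along when a key is pasted.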

Feature Guide

- AI assistant:

- Once the app is running, the assistant appears on your desktop.

- It can see what is on the screen, hear your voice commands, and talk with you by voice.
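Talking back is largely a matter of session configuration. Under the same assumption (a Live API WebSocket session), the first message a client sends is a `setup` message that names the model and asks for audio replies. The model ID `models/gemini-2.0-flash-exp` matches the experimental release mentioned above; the field names follow the Live API's JSON form, and `buildSetupMessage` is an illustrative name, not gemini-cursor's code.

```typescript
// Shape of the first message sent on an (assumed) Live API WebSocket session.
interface SetupMessage {
  setup: {
    model: string;
    generationConfig: { responseModalities: string[] };
    systemInstruction?: { parts: { text: string }[] };
  };
}

// Hypothetical helper: requests spoken (AUDIO) replies and, optionally,
// gives the model a short system instruction.
function buildSetupMessage(
  instruction?: string,
  model: string = "models/gemini-2.0-flash-exp",
): SetupMessage {
  const message: SetupMessage = {
    setup: { model, generationConfig: { responseModalities: ["AUDIO"] } },
  };
  if (instruction) {
    message.setup.systemInstruction = { parts: [{ text: instruction }] };
  }
  return message;
}

// Usage, once the socket is open:
// socket.send(JSON.stringify(buildSetupMessage("You are a helpful desktop assistant.")));
```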

- Multimodal interaction:

- The app captures screen content through screen sharing and receives voice commands through the microphone.

- Users can direct the assistant by voice to carry out operations such as opening files and browsing the web.
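Screen frames and microphone audio have to be encoded before they reach the model. A minimal sketch, assuming the Live API's `realtimeInput` message carrying base64 `mediaChunks`, JPEG frames taken from a canvas with `toDataURL("image/jpeg")`, and 16-bit little-endian PCM audio already resampled to 16 kHz. All helper names here are hypothetical.

```typescript
type MediaChunk = { mimeType: string; data: string }; // data is base64

// Converts Web Audio samples in [-1, 1] to 16-bit little-endian PCM.
function floatTo16BitPcm(samples: Float32Array): Uint8Array {
  const view = new DataView(new ArrayBuffer(samples.length * 2));
  for (let i = 0; i < samples.length; i++) {
    const s = Math.max(-1, Math.min(1, samples[i])); // clip out-of-range samples
    view.setInt16(i * 2, s < 0 ? s * 0x8000 : s * 0x7fff, true);
  }
  return new Uint8Array(view.buffer);
}

// Base64-encodes raw bytes (btoa expects a "binary string").
function bytesToBase64(bytes: Uint8Array): string {
  let binary = "";
  for (let i = 0; i < bytes.length; i++) {
    binary += String.fromCharCode(bytes[i]);
  }
  return btoa(binary);
}

// Screen frame: drop the "data:image/jpeg;base64," prefix from toDataURL().
function screenFrameChunk(jpegDataUrl: string): MediaChunk {
  const comma = jpegDataUrl.indexOf(",");
  if (comma < 0) throw new Error("Not a data URL");
  return { mimeType: "image/jpeg", data: jpegDataUrl.slice(comma + 1) };
}

// Microphone buffer: assumes the samples are already at 16 kHz.
function audioChunk(samples: Float32Array): MediaChunk {
  return { mimeType: "audio/pcm;rate=16000", data: bytesToBase64(floatTo16BitPcm(samples)) };
}

// Wraps chunks in the (assumed) realtimeInput message sent over the socket.
function buildRealtimeInput(chunks: MediaChunk[]) {
  return { realtimeInput: { mediaChunks: chunks } };
}
```

In practice audio is streamed continuously while screen frames are sent far less often (around one per second is a common choice), so each chunk type would usually go out in its own message.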

- Complex task navigation:

- Users can ask the assistant by voice to carry out tasks on complex websites.

- For example, to add a payment method on Amazon, the user simply tells the assistant what needs to be done, and the assistant navigates the site and performs the steps automatically.
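The article does not say how the assistant acts on a page. One common pattern with the Live API is function calling: the app declares a tool, the model replies with a `toolCall`, and the app performs the action and answers with a `toolResponse`. The sketch below assumes that pattern; the `move_cursor` tool, its parameters, and `handleToolCall` are hypothetical and only illustrate the round trip.

```typescript
// Hypothetical tool declaration, sent in the setup message as
// tools: [{ functionDeclarations: [moveCursorTool] }].
const moveCursorTool = {
  name: "move_cursor",
  description: "Move the on-screen pointer to a position on the shared screen.",
  parameters: {
    type: "OBJECT",
    properties: {
      x: { type: "NUMBER", description: "Horizontal position in pixels" },
      y: { type: "NUMBER", description: "Vertical position in pixels" },
    },
    required: ["x", "y"],
  },
};

// Assumed shapes of Live API toolCall / toolResponse entries.
interface FunctionCall {
  id: string;
  name: string;
  args: Record<string, unknown>;
}
interface FunctionResponse {
  id: string;
  name: string;
  response: { output?: { success: boolean }; error?: string };
}

// Runs each requested call locally and builds the reply for the model.
// `moveCursor` is injected so the real UI code stays outside this function.
function handleToolCall(
  calls: FunctionCall[],
  moveCursor: (x: number, y: number) => void,
): { toolResponse: { functionResponses: FunctionResponse[] } } {
  const functionResponses = calls.map((call): FunctionResponse => {
    if (call.name !== moveCursorTool.name) {
      return { id: call.id, name: call.name, response: { error: "Unknown tool: " + call.name } };
    }
    const x = Number(call.args["x"]);
    const y = Number(call.args["y"]);
    if (!isFinite(x) || !isFinite(y)) {
      return { id: call.id, name: call.name, response: { error: "x and y must be numbers" } };
    }
    moveCursor(x, y);
    return { id: call.id, name: call.name, response: { output: { success: true } } };
  });
  return { toolResponse: { functionResponses } };
}
```

Answering every call, including unknown or malformed ones, with an entry that carries the same `id` lets the model know each request was handled instead of waiting on it.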

- Real-time AI teacher:

- With the whiteboard open, users can ask the assistant by voice to draw diagrams, highlight key points, and so on.

- This is well suited to teaching and explaining complex concepts, such as the figures and architecture diagrams in research papers.
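For highlights, one possible approach is to have the model return bounding boxes and draw them on a canvas. Gemini 2.0's documented box convention is `[ymin, xmin, ymax, xmax]`, each value normalized to the range 0 to 1000. Whether gemini-cursor's whiteboard works this way is an assumption; `boxToPixels`, `highlight`, and the `Highlighter` interface are hypothetical.

```typescript
// [ymin, xmin, ymax, xmax], each normalized to 0..1000 (assumed model output).
type NormalizedBox = [number, number, number, number];

interface PixelRect { x: number; y: number; width: number; height: number; }

// Minimal slice of CanvasRenderingContext2D, so the sketch runs without a DOM.
interface Highlighter {
  strokeRect(x: number, y: number, width: number, height: number): void;
}

function clamp(v: number): number {
  return Math.min(1000, Math.max(0, v));
}

// Scales a normalized box to canvas pixels, clamping out-of-range values
// and tolerating swapped min/max coordinates.
function boxToPixels(box: NormalizedBox, canvasWidth: number, canvasHeight: number): PixelRect {
  const y1 = clamp(box[0]), x1 = clamp(box[1]), y2 = clamp(box[2]), x2 = clamp(box[3]);
  const left = Math.min(x1, x2);
  const top = Math.min(y1, y2);
  return {
    x: (left * canvasWidth) / 1000,
    y: (top * canvasHeight) / 1000,
    width: (Math.abs(x2 - x1) * canvasWidth) / 1000,
    height: (Math.abs(y2 - y1) * canvasHeight) / 1000,
  };
}

// Draws one highlight; in the app, pass a real canvas 2D context.
function highlight(ctx: Highlighter, box: NormalizedBox, width: number, height: number): PixelRect {
  const rect = boxToPixels(box, width, height);
  ctx.strokeRect(rect.x, rect.y, rect.width, rect.height);
  return rect;
}
```

Multiplying before dividing by 1000 keeps integer inputs exact, which avoids off-by-a-fraction outlines on the canvas.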

Common Problems

- How do I get a Gemini API key?

- Sign in to Google AI Studio with a Google account and create an API key there.
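Once you have a key, you can sanity-check it outside the app. This sketch calls the Gemini API's public `models.list` REST endpoint (`GET https://generativelanguage.googleapis.com/v1beta/models`), which answers with HTTP 200 for a valid key. The helper names are hypothetical, and a key that can list models is not guaranteed to have access to every model or to the Live API.

```typescript
// Public REST endpoint that lists the models a key can access.
const MODELS_ENDPOINT = "https://generativelanguage.googleapis.com/v1beta/models";

// Builds the request URL; the key is URL-encoded as the `key` query parameter.
function buildModelsUrl(apiKey: string): string {
  return MODELS_ENDPOINT + "?key=" + encodeURIComponent(apiKey.trim());
}

// Resolves to true when the API accepts the key (HTTP 2xx).
// Needs a global fetch (browser, Electron, or Node.js 18+);
// network failures reject the promise rather than resolving to false.
function checkApiKey(apiKey: string): Promise<boolean> {
  return fetch(buildModelsUrl(apiKey)).then((res) => res.ok);
}

// Usage:
// checkApiKey("YOUR_API_KEY").then((ok) => console.log(ok ? "Key works" : "Key rejected"));
```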

- What should I do if the app shows an error while running?

- Make sure Node.js is v16 or later and that all dependencies installed correctly (rerun npm install if unsure).

- Check that the API key was entered correctly.
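Running `node --version` prints the installed version (for example `v18.17.1`). To check the requirement from a script instead, here is a small sketch that parses a version string in the format of Node's `process.version` and compares the major number against the v16 minimum stated above; `isNodeVersionSupported` is a hypothetical helper.

```typescript
// Returns true when the major version in strings like "v18.17.1" or "20.11.0"
// is at least `minMajor`; unparseable input is treated as unsupported.
function isNodeVersionSupported(version: string, minMajor: number = 16): boolean {
  const match = /^v?(\d+)(?:\.|$)/.exec(version.trim());
  if (match === null) {
    return false;
  }
  return parseInt(match[1], 10) >= minMajor;
}

// Usage inside Node.js:
// if (!isNodeVersionSupported(process.version)) {
//   console.error("Node.js " + process.version + " is too old; install v16 or later.");
// }
```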

© Copyright Notice

Article copyright © AI Sharing Circle. All rights reserved; please do not reproduce without permission.
