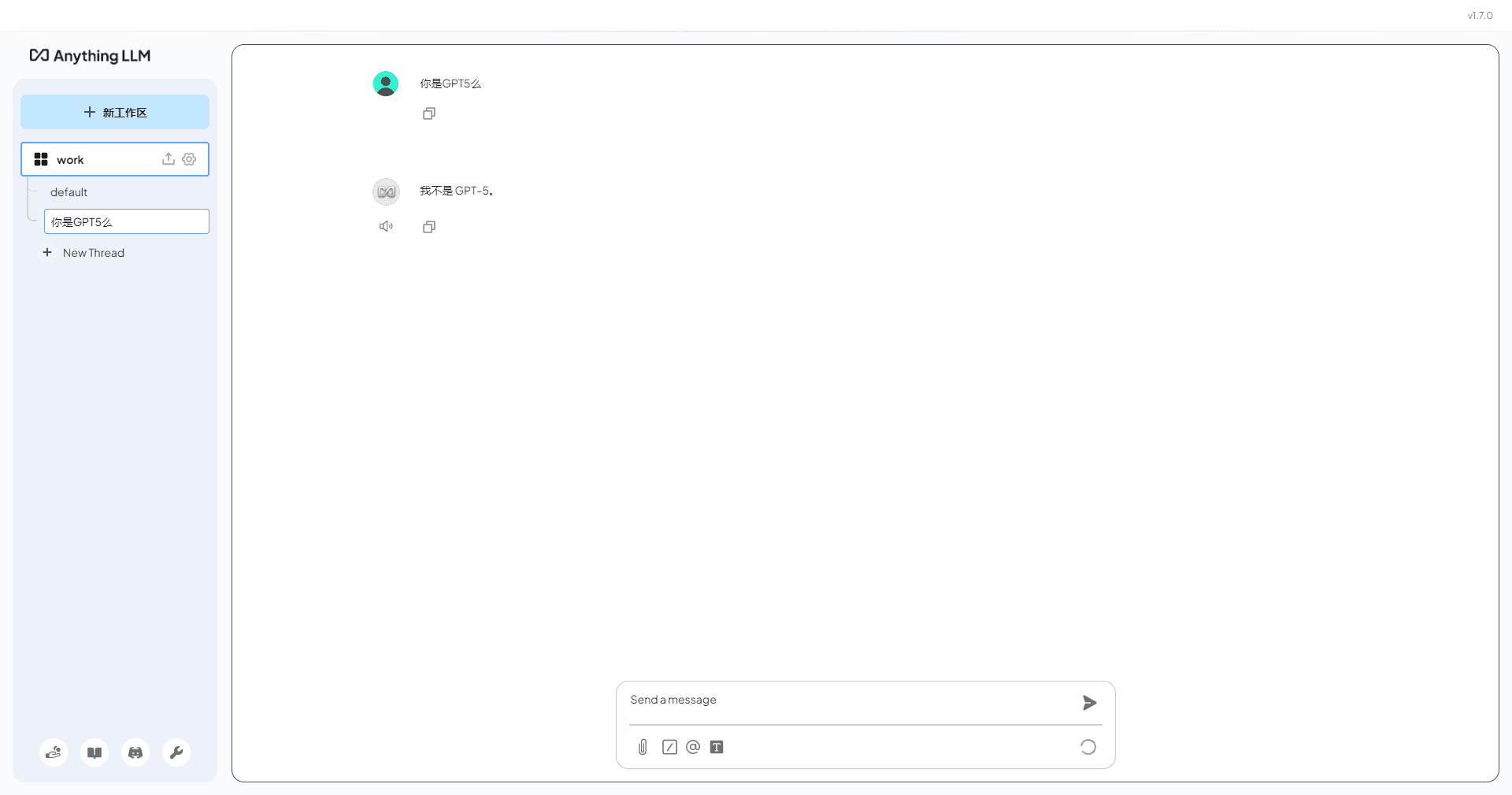

Flock: low-code workflow orchestration to build chatbots quickly

General Introduction

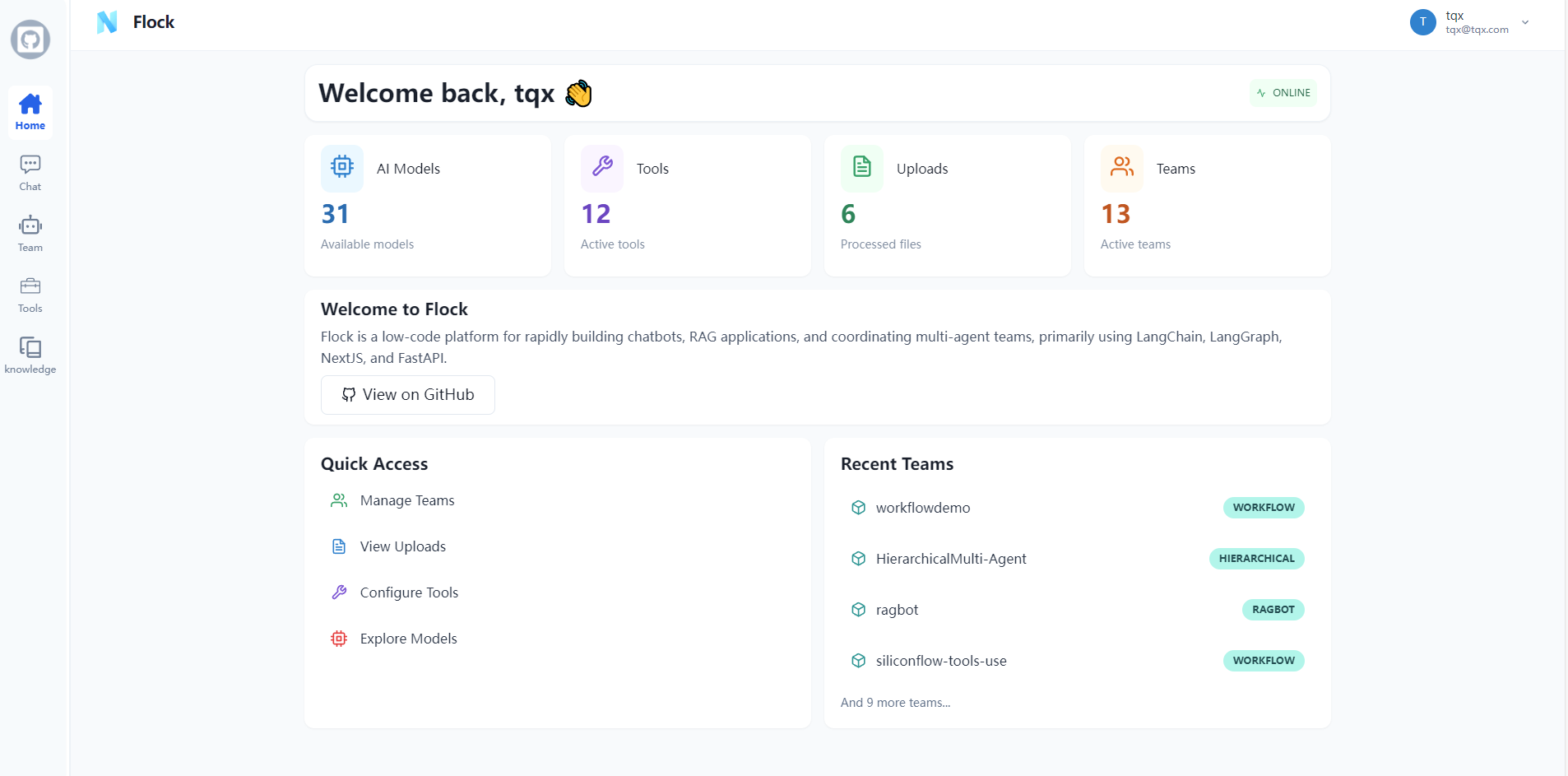

Flock is an open-source, low-code workflow platform hosted on GitHub and developed by the Onelevenvy team. Built on LangChain and LangGraph, it helps users quickly build chatbots and retrieval-augmented generation (RAG) applications and coordinate multi-agent teams. Its flexible workflow design lets users with little programming experience create intelligent applications. It supports a rich set of node features, such as conditional logic, code execution, and multimodal dialog, and is suited to business automation, data processing, and similar scenarios. The front end uses React and Next.js, and the back end relies on PostgreSQL, which keeps the technology stack modern and easy to extend. Flock is backed by an active community on GitHub and is popular among developers.

Feature List

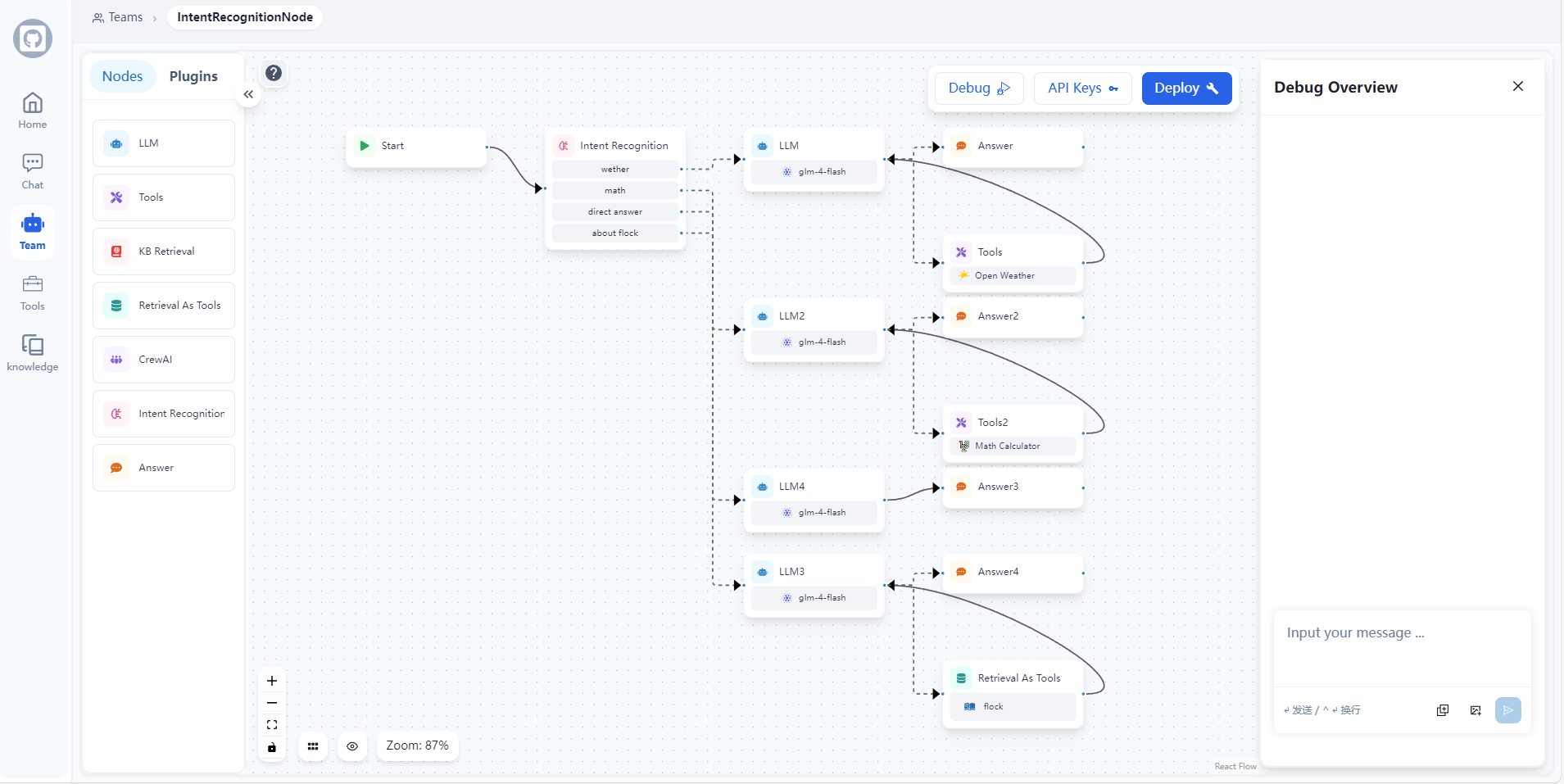

- Workflow orchestration: Design complex workflows by dragging and dropping nodes, with support for multi-agent collaboration and task assignment.

- Chatbot building: Rapidly create chatbots that support natural-language interaction and can handle text and image input.

- RAG application support: Integrate retrieval-augmented generation to extract information from documents and generate answers.

- Conditional logic control: Implement branching logic with If-Else nodes to adjust the flow dynamically based on input.

- Code execution: Use the built-in Python script node to perform data processing or custom logic.

- Multimodal interaction: Accept multimodal inputs such as images to make dialogs more flexible.

- Sub-workflow encapsulation: Reuse complex processes through subgraph nodes to improve development efficiency.

- Human intervention nodes: Allow manual review of LLM output or tool call results.

- Intent recognition: Automatically recognize the intent of user input and route it across multiple categories.

Usage Guide

Installation process

Flock is deployed locally with Docker and needs some environment configuration before it can run. The detailed installation steps below will get you started.

1. Preparing the environment

- Install Docker: Windows and Mac users can download it from the official Docker website. Linux users on Debian or Ubuntu can run the following commands:

sudo apt update && sudo apt install docker.io

sudo systemctl start docker

- Install Git: Git is used to clone the repository. See the Git website for installation instructions.

- Check Python: Make sure Python 3.8+ is installed; it is used to generate the secret key:

python --version
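The three prerequisites above can also be checked in one pass. The following is a minimal sketch, not part of Flock: the helper name `check_prerequisites` is hypothetical, and it only checks that `docker` and `git` are on the `PATH` and that the running interpreter is at least Python 3.8.

```python
import shutil
import sys


def check_prerequisites(min_python=(3, 8)):
    """Report whether each prerequisite listed above appears to be available."""
    return {
        # shutil.which returns None when the executable is not on PATH.
        "docker": shutil.which("docker") is not None,
        "git": shutil.which("git") is not None,
        # Compares the running interpreter against the minimum version.
        "python": sys.version_info >= min_python,
    }


if __name__ == "__main__":
    for tool, ok in check_prerequisites().items():
        print(f"{tool}: {'ok' if ok else 'missing or too old'}")
```

A tool reported as missing may still be installed but not on the `PATH` of the current shell.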

2. Cloning the project

Open a terminal and run the following command to get the Flock source code:

git clone https://github.com/Onelevenvy/flock.git

cd flock/docker

3. Configuring environment variables

Copy the example configuration file and modify it:

cp ../.env.example .env

Open the .env file and replace every default value of changethis with a secure key. Generate a key with the following command:

python -c "import secrets; print(secrets.token_urlsafe(32))"

Paste the generated value into the .env file, for example:

SECRET_KEY=your_generated_key_here
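If several variables still carry the `changethis` placeholder, the replacement can be scripted. The sketch below is an illustration under assumptions: `fill_placeholders` is a hypothetical helper, and the sample variable names are made up, since the actual names in Flock's `.env.example` may differ. It works on the file's text and gives each placeholder its own key.

```python
import secrets

PLACEHOLDER = "changethis"


def fill_placeholders(env_text: str) -> str:
    """Replace every `changethis` value with its own freshly generated key."""
    lines = []
    for line in env_text.splitlines():
        name, sep, value = line.partition("=")
        is_comment = name.lstrip().startswith("#")
        if sep and not is_comment and value.strip() == PLACEHOLDER:
            # Same generator as the one-liner above.
            line = f"{name}={secrets.token_urlsafe(32)}"
        lines.append(line)
    return "\n".join(lines) + "\n"


# Hypothetical sample; the real .env may use different variable names.
sample = "SECRET_KEY=changethis\nPROJECT_NAME=flock\n# NOTE=changethis\n"
print(fill_placeholders(sample))
```

To apply it to a real file, read `.env` into a string, pass it through the function, and write the result back after keeping a backup.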

4. Starting the services

Start Flock with Docker Compose:

docker compose up -d

If you need to build the image locally, build and start it with the local-build compose file:

docker compose -f docker-compose.localbuild.yml build

docker compose -f docker-compose.localbuild.yml up -d

After a successful startup, Flock is available by default at http://localhost:3000.
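To confirm that something is answering on that address, a small probe using only the Python standard library is enough. This is a sketch: `is_up` is a hypothetical helper, and it only tests that an HTTP server responds, not that Flock itself is healthy.

```python
import http.client
import urllib.error
import urllib.request


def is_up(url: str = "http://localhost:3000", timeout: float = 3.0) -> bool:
    """Return True if anything answers HTTP at `url`, even with an error status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server responded, just not with a success status.
        return True
    except (OSError, ValueError, http.client.HTTPException):
        # Connection refused, timeout, DNS failure, or a malformed URL.
        return False


if __name__ == "__main__":
    print("Flock reachable:", is_up())
```

Containers can take a little while to start, so a negative result right after `docker compose up -d` may just mean the service is not ready yet.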

Usage

Once the installation is complete, you can access Flock through your browser and start using its features. Below are detailed instructions on how to use the main features.

Feature 1: Creating Chatbots

- Open the workbench: Open your browser and go to http://localhost:3000 to reach the login screen (first-time users may need to register).

- Create a workflow: Click "New Project" and select "Chatbot Template".

- Add nodes:

  - Drag in an "Input node" to receive user messages.

  - Connect an "LLM node" to process natural language and bind your model (e.g. the OpenAI API configured via LangChain).

  - Add an "Output node" to return results.

- Configure the model: Fill in the API key and model parameters in the LLM node settings.

- Test run: Click the "Run" button, type "Hello, what's the weather like today?", and check the bot's reply.
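Conceptually, the input, LLM, and output nodes form a chain in which each node receives the current state and passes an updated state on. The following is a plain-Python sketch of that idea only; it does not use Flock's or LangGraph's API, all names are hypothetical, and the LLM node is a stub that echoes the message instead of calling a model.

```python
from typing import Callable, Dict, List

State = Dict[str, str]
Node = Callable[[State], State]


def input_node(state: State) -> State:
    # Normalise the raw user message.
    return {**state, "message": state["message"].strip()}


def llm_node(state: State) -> State:
    # Stand-in for a real model call; it only echoes the message.
    return {**state, "reply": f"(stub reply to) {state['message']}"}


def output_node(state: State) -> State:
    # Keep only what should be returned to the user.
    return {"reply": state["reply"]}


def run_workflow(nodes: List[Node], state: State) -> State:
    """Run the nodes in order, feeding each one the previous node's state."""
    for node in nodes:
        state = node(state)
    return state


result = run_workflow(
    [input_node, llm_node, output_node],
    {"message": "  Hello, what's the weather like today? "},
)
print(result["reply"])
```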

Feature 2: Building a RAG Application

- Prepare the document: Upload the document to be retrieved (e.g., a PDF) to the working directory.

- Design the process:

  - Add a "File Input node" to specify the document path.

  - Connect a "RAG node" and configure the retrieval parameters (e.g. the vector database).

  - Link it to an "LLM node" to generate a response.

- Run a test: Enter a question such as "What are the most mentioned keywords in the document?" and view the result.

- Optimize: Adjust the retrieval range or model parameters to improve answer accuracy.
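The retrieve-then-generate flow described above can be illustrated with a toy example. This sketch makes strong simplifications: it ranks text chunks by word overlap instead of querying a vector database, it stops at building the prompt rather than calling a model, and every name in it is hypothetical rather than taken from Flock.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    # Lowercase word set; a real system would use embeddings instead.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, chunks: List[str], top_k: int = 1) -> List[str]:
    """Rank chunks by how many question words they share (toy scoring)."""
    q = tokenize(question)
    ranked = sorted(chunks, key=lambda c: len(q & tokenize(c)), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, context: List[str]) -> str:
    """Combine the retrieved context and the question into one prompt."""
    joined = "\n".join(context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


chunks = [
    "Quarterly sales grew in the northern region.",
    "The office moved to a new building in March.",
]
question = "How did sales change in the northern region?"
print(build_prompt(question, retrieve(question, chunks)))
```

Raising `top_k` corresponds loosely to widening the retrieval range mentioned in the last step: more context reaches the model, at the cost of more noise.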

Feature 3: Multi-Agent Collaboration

- Create a team: Add multiple agent roles (e.g., "Data Analyst" and "Customer Service") in "Agent Management".

- Assign tasks:

  - Define each agent's tasks (e.g., analyzing data, responding to users) with "workflow nodes".

  - Add a "Collaboration node" to coordinate inter-agent communication.

- Run an example: Enter the task "Analyze sales data and generate a report" and observe the agents collaborating to complete it.

Feature 4: Conditional Logic and Code Execution

- Add the If-Else node:

  - Drag in the If-Else node and set the condition (e.g. "Input contains 'Sales'").

  - Connect the branches, e.g. "Yes" to "Data Analysis" and "No" to "Prompt for Re-entry".

- Insert a code node:

  - Add a "Python node" and enter the following script, which calculates the sum of the input numbers:

def process_data(input): return sum(map(int, input.split(',')))

- Test: Enter "1,2,3" and verify that the result is "6".
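For reference, here is a slightly more defensive version of the script together with a function that mimics the If-Else branch. It is a sketch under assumptions: the parameter is renamed to `text` to avoid shadowing Python's built-in `input`, the `route` function is hypothetical, and the condition is matched case-insensitively, which may differ from how Flock's If-Else node evaluates "contains".

```python
def process_data(text: str) -> int:
    """Sum a comma-separated list of integers, e.g. "1,2,3"."""
    # Skip empty fragments so a trailing comma does not raise ValueError.
    return sum(int(part) for part in text.split(",") if part.strip())


def route(user_input: str) -> str:
    """Mimic the If-Else node: pick a branch name based on the input."""
    if "sales" in user_input.lower():
        return "Data Analysis"
    return "Prompt for Re-entry"


print(process_data("1,2,3"))
print(route("Show me Sales figures"))
```

Non-numeric fragments such as "1,a,3" still raise `ValueError`, so a production node would need to decide how to report bad input.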

Feature 5: Human Intervention

- Add a manual node: Insert a "manual intervention node" into the workflow.

- Configure the review: Set the node to review LLM output, then save and run.

- Operate: The system pauses and prompts for manual intervention, then continues once the modified content has been entered.
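The pause-review-continue behaviour can be pictured as a function that hands the draft to a reviewer and carries on with whatever comes back. This is a conceptual sketch, not Flock's implementation: `human_review` is a hypothetical name, and the reviewer is passed in as a callable so the example runs without an interactive prompt.

```python
from typing import Callable


def human_review(draft: str, ask: Callable[[str], str] = input) -> str:
    """Pause for a reviewer; an empty answer approves the draft unchanged."""
    edited = ask(f"LLM draft:\n{draft}\nPress Enter to approve or type a replacement: ")
    return edited.strip() or draft


# Simulated reviewers, so the example runs without a terminal prompt.
approved = human_review("Sales rose 5%.", ask=lambda prompt: "")
revised = human_review("Sales rose 5%.", ask=lambda prompt: "Sales rose 4.8%.")
print(approved)
print(revised)
```

Called without the `ask` argument, the function falls back to the built-in `input` and blocks until someone responds in the terminal.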

Notes

- Network requirements: Make sure Docker containers have network access, and configure a proxy if needed to reach an external model API.

- Performance: Allocate at least 4 GB of memory to Docker when running locally.

- Viewing logs: If problems occur, run the following command and check the output for errors:

docker logs <container_id>

With these steps, you can use Flock to build chatbots, coordinate multiple agents, and take full advantage of its low-code workflow design.

© Copyright notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
