DeepSeek-R1-FP4: An FP4-Optimized DeepSeek-R1 with Up to 25x Faster Inference

General Introduction

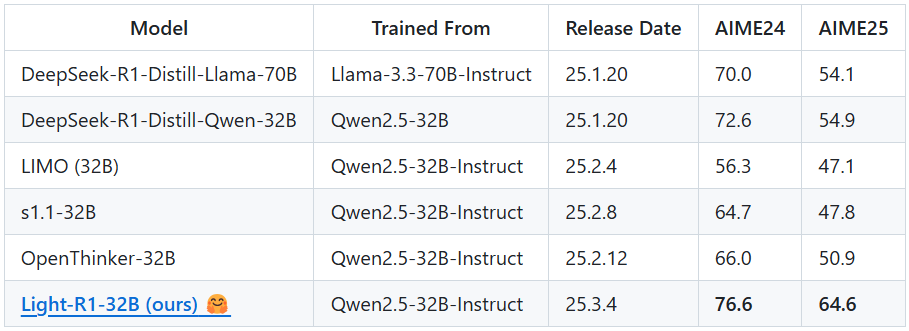

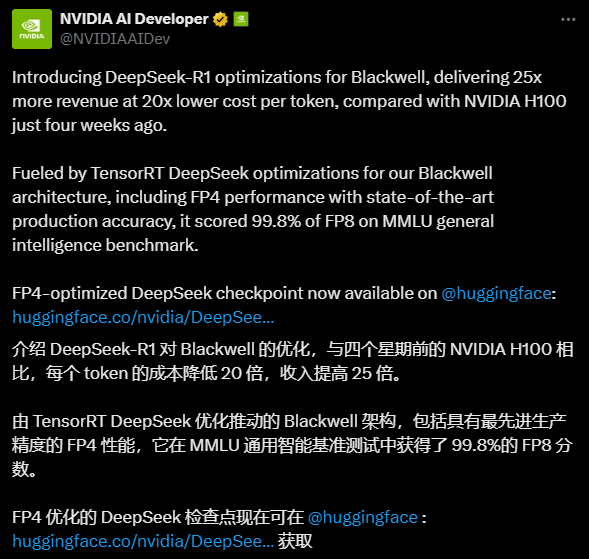

DeepSeek-R1-FP4 is a quantized version of DeepSeek AI's DeepSeek-R1. NVIDIA produced it with the TensorRT Model Optimizer and released it as open source. The Model Optimizer quantizes the weights and activations to the FP4 data type, which greatly reduces resource requirements while maintaining strong performance. The model needs roughly 1.6x less disk space and GPU memory than the original, so it is well suited to efficient inference in production environments. It is optimized specifically for NVIDIA's Blackwell architecture, where it is claimed to deliver up to 25x faster inference at a 20x lower cost per token. It supports context lengths of up to 128K tokens, which suits complex, long-text tasks. It is licensed for both commercial and non-commercial use, giving developers a cost-effective AI option.
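To make the idea of FP4 quantization concrete, here is a simplified, pure-Python sketch of "fake" quantization (quantize, then immediately dequantize) to the FP4 E2M1 format. E2M1 has 1 sign bit, 2 exponent bits and 1 mantissa bit, and its representable magnitudes are 0, 0.5, 1, 1.5, 2, 3, 4 and 6. Each block of values shares one scale factor, chosen so that the block's largest magnitude maps to 6. The block size of 16 and the plain float scale are illustrative assumptions. This is not how the TensorRT Model Optimizer actually implements quantization.

```python
"""Illustrative FP4 (E2M1) fake quantization with one scale per block (assumed block size 16)."""

# Non-negative magnitudes representable by FP4 E2M1 (1 sign, 2 exponent, 1 mantissa bit).
FP4_E2M1_MAGNITUDES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)
FP4_MAX = FP4_E2M1_MAGNITUDES[-1]


def round_to_fp4(x: float) -> float:
    """Round an already-scaled value to the nearest representable E2M1 value."""
    magnitude = min(FP4_E2M1_MAGNITUDES, key=lambda v: abs(abs(x) - v))
    return magnitude if x >= 0 else -magnitude


def fake_quantize(values: list[float], block_size: int = 16) -> list[float]:
    """Quantize to FP4 and immediately dequantize, block by block.

    Each block gets its own scale so that its largest magnitude maps to FP4_MAX.
    The returned floats show how much precision survives FP4 storage.
    """
    result: list[float] = []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        amax = max(abs(v) for v in block)
        scale = amax / FP4_MAX if amax > 0 else 1.0
        # The 4-bit codes (kept here as floats) plus one scale per block are what gets stored...
        codes = [round_to_fp4(v / scale) for v in block]
        # ...and multiplying back by the scale recovers approximate values.
        result.extend(code * scale for code in codes)
    return result


if __name__ == "__main__":
    weights = [0.12, -0.5, 2.4, 0.03, -1.1, 0.8]
    print(fake_quantize(weights))
```

Values near zero relative to the block maximum collapse to 0. This is why per-block scales, rather than one scale for a whole tensor, help preserve accuracy.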

Feature List

- Efficient Inference: FP4 quantization substantially increases inference speed and reduces resource usage.

- Long Context Support: Supports a context length of up to 128K tokens, suitable for long-text generation tasks.

- TensorRT-LLM Deployment: Can be deployed quickly on NVIDIA GPUs through the TensorRT-LLM framework.

- Open-Source Use: Permits commercial and non-commercial use, including free modification and derivative development.

- Performance Optimization: Designed for the Blackwell architecture, delivering high inference efficiency and cost-effectiveness.

Usage Guide

Installation and Deployment Process

Deploying DeepSeek-R1-FP4 requires specific hardware and software, in particular NVIDIA GPUs and the TensorRT-LLM framework. The guide below walks through installation and usage step by step.

1. Environment preparation

- Hardware requirements: NVIDIA Blackwell GPUs (e.g., B200) are recommended, with at least 8 GPUs. The model weights alone take roughly 336 GB in total when quantized to FP4, compared with roughly 1,342 GB unquantized at 16-bit precision. For smaller-scale tests, at least one high-performance GPU such as an A100 or H100 is suggested, although the full model will not fit in the memory of a single such card.

- Software dependencies:

  - Operating system: Linux (e.g., Ubuntu 20.04 or later).

  - NVIDIA driver: a recent version that supports CUDA 12.4 or higher.

  - TensorRT-LLM: the latest master branch, compiled from the GitHub sources.

  - Python: 3.11 or later.

  - Other libraries: tensorrt_llm, torch, etc.
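The ~336 GB and ~1,342 GB figures come from simple arithmetic: weight memory is roughly the number of parameters times the bits per parameter, divided by 8. The sketch below assumes DeepSeek-R1's commonly cited total of 671 billion parameters and counts weights only. A real deployment also needs memory for the KV cache, activations and any layers kept at higher precision, so treat the results as lower bounds.

```python
"""Back-of-envelope weight-memory estimate (weights only: no KV cache or activations)."""

BYTES_PER_GB = 1e9  # decimal gigabytes


def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Memory needed to hold the weights alone, in decimal GB."""
    return num_params * bits_per_param / 8 / BYTES_PER_GB


if __name__ == "__main__":
    params = 671e9  # assumed total parameter count of DeepSeek-R1
    for label, bits in [("FP4", 4), ("FP8", 8), ("BF16", 16)]:
        total = weight_memory_gb(params, bits)
        # Spreading the weights evenly over 8 GPUs divides the per-GPU share by 8.
        print(f"{label:>4}: {total:7.1f} GB total, {total / 8:6.1f} GB per GPU across 8 GPUs")
```

Under these assumptions, FP4 weights come to about 42 GB per GPU across 8 GPUs. That leaves headroom on large-memory cards for the KV cache needed by long contexts.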

2. Download the model

- Visit the model's Hugging Face page and click the "Files and versions" tab.

- Download the model files (e.g., model-00001-of-00080.safetensors; there are 80 shards in total, more than 400 GB altogether).

- Save the files to a local directory, e.g., /path/to/model/.
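Downloading 80 shards by hand is error-prone. As an alternative, the following sketch uses the huggingface_hub library's snapshot_download to fetch the whole repository and then checks that every shard is present. The repository ID nvidia/DeepSeek-R1-FP4 is an assumption, inferred from the model path used in the deployment example. The shard naming pattern and the count of 80 follow the example file name above; confirm both on the model page before relying on them.

```python
"""Download the checkpoint and check that all safetensors shards are present."""
from pathlib import Path

EXPECTED_SHARDS = 80  # shard count stated in the article; verify on the model page


def shard_name(index: int, total: int = EXPECTED_SHARDS) -> str:
    """Build a shard file name such as model-00001-of-00080.safetensors."""
    return f"model-{index:05d}-of-{total:05d}.safetensors"


def missing_shards(model_dir: str, total: int = EXPECTED_SHARDS) -> list[str]:
    """Return the names of shards that are not yet present in model_dir."""
    directory = Path(model_dir)
    return [
        shard_name(i, total)
        for i in range(1, total + 1)
        if not (directory / shard_name(i, total)).is_file()
    ]


def download(model_dir: str, repo_id: str = "nvidia/DeepSeek-R1-FP4") -> None:
    """Fetch every file of the (assumed) repository into model_dir."""
    # Imported lazily so the shard check works without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    snapshot_download(repo_id=repo_id, local_dir=model_dir)


if __name__ == "__main__":
    target = "/path/to/model/nvidia/DeepSeek-R1-FP4"
    download(target)
    gaps = missing_shards(target)
    print("All shards present" if not gaps else f"{len(gaps)} shard(s) missing, e.g. {gaps[0]}")
```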

3. Install TensorRT-LLM

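- Clone the latest TensorRT-LLM repository from GitHub:

```bash
git clone https://github.com/NVIDIA/TensorRT-LLM.git
cd TensorRT-LLM
```

- Compile and install. Build steps vary between TensorRT-LLM releases, so check the repository's build-from-source instructions if these commands do not work for your version:

```bash
make build
pip install -r requirements.txt
```

- Verify the installation:

```bash
python -c "import tensorrt_llm; print(tensorrt_llm.__version__)"
```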
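Before (or after) building, it can help to confirm the prerequisites from step 1 in one go. This sketch only checks what can be detected cheaply: the Python version (3.11 or later, per the requirements above), whether tensorrt_llm and torch can be found, and whether nvidia-smi is on the PATH. The packages are located with importlib.util.find_spec, which does not import them. The sketch does not validate driver or CUDA versions.

```python
"""Quick pre-flight check of the software environment (does not import heavy packages)."""
import importlib.util
import shutil
import sys


def check_environment(min_python: tuple[int, int] = (3, 11)) -> dict[str, bool]:
    """Report which prerequisites appear to be present."""
    return {
        "python_ok": sys.version_info[:2] >= min_python,
        # find_spec locates a top-level package without executing it.
        "tensorrt_llm_installed": importlib.util.find_spec("tensorrt_llm") is not None,
        "torch_installed": importlib.util.find_spec("torch") is not None,
        # nvidia-smi ships with the NVIDIA driver, so its absence hints at a driver problem.
        "nvidia_smi_on_path": shutil.which("nvidia-smi") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{'OK' if ok else 'MISSING':<7}  {name}")
```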

4. Deploy the model

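- Load and run the model with the following example code:

```python
from tensorrt_llm import SamplingParams, LLM


def main():
    # Initialize the model
    llm = LLM(
        model="/path/to/model/nvidia/DeepSeek-R1-FP4",
        tensor_parallel_size=8,  # adjust to the number of GPUs
        enable_attention_dp=True,
    )
    # Set sampling parameters
    sampling_params = SamplingParams(max_tokens=32)
    # Input prompts
    prompts = [
        "Hello, my name is",
        "The president of the United States is",
        "The capital of France is",
        "The future of AI is",
    ]
    # Generate outputs and print each prompt with its completion
    outputs = llm.generate(prompts, sampling_params)
    for output in outputs:
        print(f"Prompt: {output.prompt!r}, Generated text: {output.outputs[0].text!r}")


# The main guard keeps multi-GPU worker processes from re-running the script body.
if __name__ == "__main__":
    main()
```

- Before running the code, make sure the required GPUs are available. If you have fewer GPUs, set tensor_parallel_size to match the number of GPUs you actually have.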

5. Feature guide

Efficient Inference

- The core strength of DeepSeek-R1-FP4 is its FP4 quantization. Users do not need to tweak model parameters manually; running on Blackwell-architecture hardware is enough to get the speedup. At runtime, use the max_tokens parameter to limit output length and avoid wasting resources.

- Example: Run the Python script in a terminal with different prompts and observe the speed and quality of the output.
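To compare prompts or settings such as max_tokens objectively, you can time generation calls directly. The helper below is a hypothetical sketch. Its generate_fn parameter stands for any function that runs the model on a batch of prompts and returns how many tokens it generated for each one. With the TensorRT-LLM example, such a wrapper would call llm.generate and count the tokens of each result; the exact attribute holding token IDs may depend on your TensorRT-LLM version, so check it before relying on it.

```python
"""Time a generation call and report rough throughput (hypothetical helper)."""
import time
from typing import Callable, Sequence


def measure_throughput(
    generate_fn: Callable[[Sequence[str]], Sequence[int]],
    prompts: Sequence[str],
) -> tuple[float, float]:
    """Run generate_fn once and return (elapsed seconds, generated tokens per second).

    generate_fn is a hypothetical wrapper that generates completions for all prompts
    and returns the number of tokens generated for each one.
    """
    start = time.perf_counter()
    token_counts = generate_fn(prompts)
    elapsed = time.perf_counter() - start
    total_tokens = sum(token_counts)
    return elapsed, (total_tokens / elapsed if elapsed > 0 else float("inf"))


if __name__ == "__main__":
    def fake_generate(prompts: Sequence[str]) -> list[int]:
        # Stand-in for a real model call: pretend each prompt yields 32 tokens.
        time.sleep(0.01)
        return [32] * len(prompts)

    elapsed, tps = measure_throughput(fake_generate, ["The capital of France is"] * 4)
    print(f"{elapsed:.3f} s, {tps:.1f} tokens/s")
```

Run a warm-up call before measuring, since the first generation usually includes one-time setup costs.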

Long Context Processing

- The model supports context lengths up to 128K, which is suitable for generating long articles or processing complex dialogs.

- Operation: Put a long context in prompts, such as the opening of a 5,000-word article, and set max_tokens=1000 to generate the continuation. After the run, check the coherence of the generated text.

- Caveat: Long contexts can increase memory usage, so monitor GPU memory while running.
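Before sending a long prompt, it is worth checking that the prompt plus the requested output fits in the context window. This sketch assumes that "128K" means 131,072 tokens. It also estimates tokens from a word count using a rough ratio of 1.3 tokens per English word, which is an assumption; for exact numbers, count tokens with the model's own tokenizer.

```python
"""Check that a prompt plus the requested output fits in the context window."""

CONTEXT_LIMIT = 128 * 1024  # assumes "128K" means 131,072 tokens


def fits_in_context(prompt_tokens: int, max_tokens: int, limit: int = CONTEXT_LIMIT) -> bool:
    """True if prompt_tokens + max_tokens does not exceed the context limit."""
    return prompt_tokens + max_tokens <= limit


def estimate_tokens(word_count: int, tokens_per_word: float = 1.3) -> int:
    """Very rough token estimate from a word count (the ratio is an assumption)."""
    return round(word_count * tokens_per_word)


if __name__ == "__main__":
    prompt_tokens = estimate_tokens(5000)  # e.g. the opening of a 5,000-word article
    print(prompt_tokens, fits_in_context(prompt_tokens, max_tokens=1000))
```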

Performance Optimization

- On Blackwell GPUs you can directly benefit from the claimed inference speedup of up to 25x. On other architectures (e.g., A100), which lack the native FP4 Tensor Core support introduced with Blackwell, the gain will be smaller, but performance may still compare favorably with the unquantized model.

- Suggestion: In a multi-GPU environment, set the tensor_parallel_size parameter to make full use of the hardware, e.g., 8 for 8 GPUs and 4 for 4 GPUs.
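The rule of thumb of matching tensor_parallel_size to the GPU count can be automated. This sketch counts the GPUs listed in the CUDA_VISIBLE_DEVICES environment variable. As an additional, conservative assumption, it rounds down to a power of two capped at 8, the GPU count used in the example. Uneven GPU counts may not divide a model's layers evenly, so this is a heuristic, not a TensorRT-LLM rule.

```python
"""Pick a tensor_parallel_size from the number of visible GPUs (heuristic sketch)."""
import os
from typing import Optional


def count_visible_devices(cuda_visible_devices: Optional[str]) -> Optional[int]:
    """Count GPUs listed in a CUDA_VISIBLE_DEVICES value; None means the variable is unset."""
    if cuda_visible_devices is None:
        return None
    return len([d for d in cuda_visible_devices.split(",") if d.strip()])


def choose_tensor_parallel_size(num_gpus: int, max_tp: int = 8) -> int:
    """Largest power of two that is <= num_gpus and <= max_tp (an assumed heuristic)."""
    if num_gpus < 1:
        raise ValueError("At least one GPU is required")
    tp = 1
    while tp * 2 <= min(num_gpus, max_tp):
        tp *= 2
    return tp


if __name__ == "__main__":
    n = count_visible_devices(os.environ.get("CUDA_VISIBLE_DEVICES"))
    if n is None:
        print("CUDA_VISIBLE_DEVICES is not set; count GPUs another way, e.g. torch.cuda.device_count()")
    elif n == 0:
        print("CUDA_VISIBLE_DEVICES hides all GPUs")
    else:
        print(f"{n} visible GPU(s) -> tensor_parallel_size={choose_tensor_parallel_size(n)}")
```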

6. Frequently asked questions and solutions

- Insufficient GPU memory: If you hit an out-of-memory error, spread the model across more GPUs by increasing tensor_parallel_size, or use a more aggressively quantized version (e.g., community-provided GGUF builds).

- Slow inference: Make sure TensorRT-LLM was compiled correctly and GPU acceleration is enabled, and check that the driver version is compatible with your CUDA and TensorRT-LLM versions.

- Abnormal output: Check the prompt format and make sure no stray special characters are interfering with the model.
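Several of these problems, such as running out of memory or a mismatched driver, start with knowing what each GPU reports. This sketch queries nvidia-smi for the index, name, driver version and memory use of each GPU, using the standard --query-gpu and --format=csv,noheader,nounits options, where memory is reported in MiB. It can also be run repeatedly during long-context experiments to watch memory usage.

```python
"""Query per-GPU memory and driver version through nvidia-smi (sketch)."""
import subprocess

QUERY = "index,name,driver_version,memory.used,memory.total"


def parse_gpu_csv(text: str) -> list[dict[str, str]]:
    """Parse `nvidia-smi --format=csv,noheader,nounits` output into one dict per GPU."""
    fields = QUERY.split(",")
    rows = []
    for line in text.strip().splitlines():
        values = [v.strip() for v in line.split(",")]
        rows.append(dict(zip(fields, values)))
    return rows


def query_gpus() -> list[dict[str, str]]:
    """Run nvidia-smi and return one dictionary per GPU (requires an NVIDIA driver)."""
    out = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_gpu_csv(out)


if __name__ == "__main__":
    for gpu in query_gpus():
        used, total = int(gpu["memory.used"]), int(gpu["memory.total"])
        print(f"GPU {gpu['index']} ({gpu['name']}), driver {gpu['driver_version']}: "
              f"{used}/{total} MiB used ({100 * used / total:.0f}%)")
```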

Recommendations for use

- Getting started: Begin with simple prompts and gradually increase the context length to get familiar with how the model behaves.

- Production environment: Test several sets of prompts before deployment to make sure the output meets expectations, and use load-balancing tools to handle multi-user access.

- Developer customization: Under the open-source license, the model can be modified and adapted to specific tasks such as code generation or question-answering systems.
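Testing several sets of prompts before deployment is easier to repeat if the checks are scripted. The harness below is a hypothetical sketch. Its generate_fn parameter stands for any function that returns one completion string per prompt, for example a thin wrapper around the TensorRT-LLM deployment code. A case fails when the output does not contain an expected substring. Substring matching is a deliberately simple assumption; real evaluations usually need more robust checks.

```python
"""Tiny pre-deployment prompt check (hypothetical harness)."""
from typing import Callable, Sequence


def run_prompt_checks(
    generate_fn: Callable[[Sequence[str]], Sequence[str]],
    cases: Sequence[tuple[str, str]],
) -> list[tuple[str, str]]:
    """Return (prompt, output) pairs whose output lacks the expected substring (case-insensitive)."""
    prompts = [prompt for prompt, _ in cases]
    outputs = generate_fn(prompts)
    failures = []
    for (prompt, expected), output in zip(cases, outputs):
        if expected.lower() not in output.lower():
            failures.append((prompt, output))
    return failures


if __name__ == "__main__":
    def fake_generate(prompts: Sequence[str]) -> list[str]:
        # Stand-in for the real model.
        return [" Paris." for _ in prompts]

    cases = [("The capital of France is", "Paris")]
    print(run_prompt_checks(fake_generate, cases) or "all checks passed")
```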

With the above steps, users can quickly deploy and use DeepSeek-R1-FP4 to enjoy the convenience of efficient inference.

© Copyright notes

The copyright of this article belongs to AI Sharing Circle; please do not reproduce it without permission.
