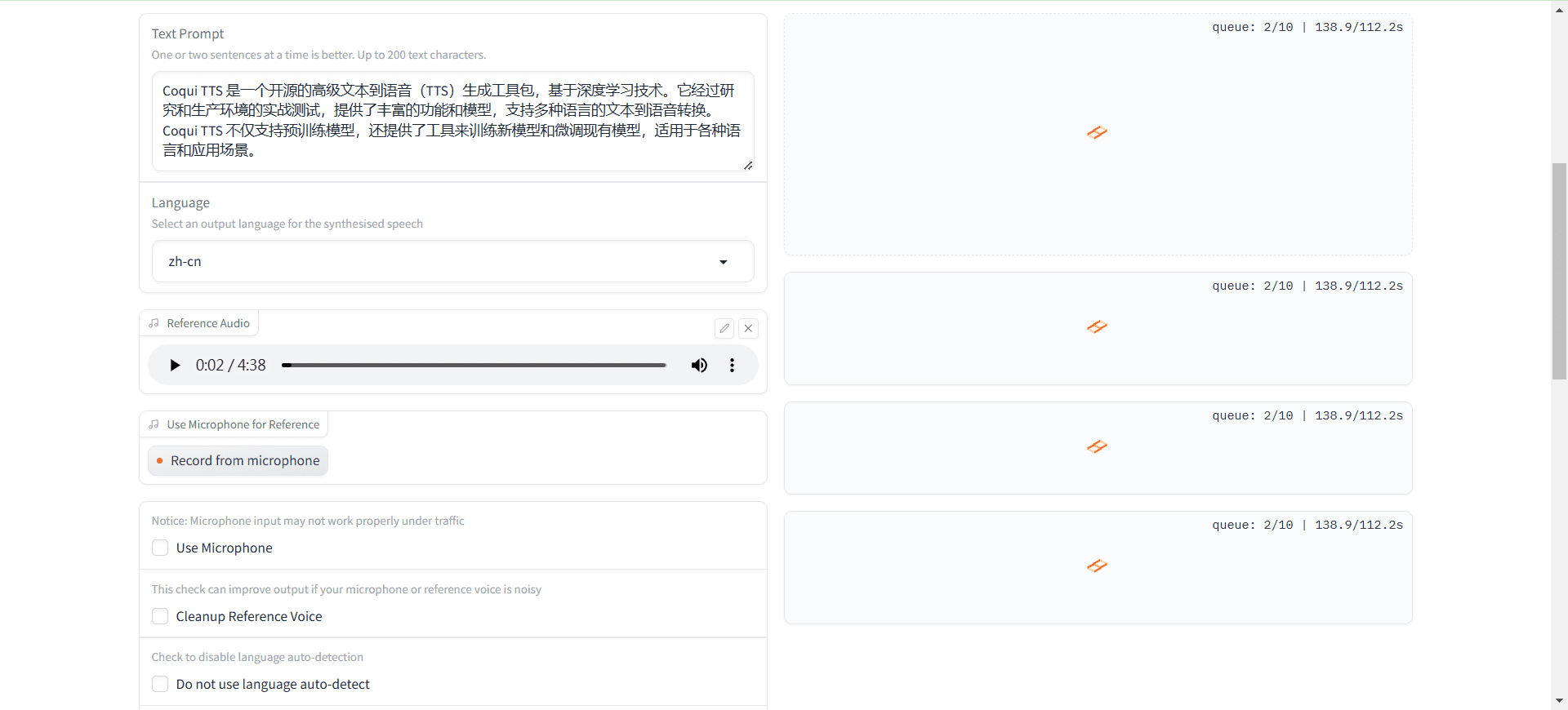

Coqui TTS (xTTS): Deep Learning Toolkit for Text-to-Speech Generation with Multiple Language Support and Voice Cloning Capabilities

General Introduction

Coqui TTS is an open-source text-to-speech (TTS) toolkit built on deep learning. It has been battle-tested in both research and production environments, and offers a rich set of features and models for speech synthesis in multiple languages. Beyond its pre-trained models, Coqui TTS provides tools for training new models and fine-tuning existing ones for a variety of languages and application scenarios.

The original authors no longer update the project. An actively maintained fork is available at: https://github.com/idiap/coqui-ai-TTS

Demo: https://huggingface.co/spaces/coqui/xtts

Feature List

- Multi-language support: Supports text-to-speech conversion in over 1100 languages.

- Pre-trained models: Provides a variety of pre-trained models that users can use directly.

- Model training: Supports training new models and fine-tuning existing ones.

- Voice cloning: Supports voice cloning, which generates speech in the voice of a specific speaker.

- Efficient training: Provides fast and efficient model training tools.

- Detailed logs: Provides detailed training logs in the terminal and TensorBoard.

- Utilities: Provides tools for dataset analysis and curation.
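As a rough illustration of the voice cloning feature, the sketch below wraps the `TTS.api` interface that the usage section of this article relies on. It assumes the multilingual XTTS v2 model name `tts_models/multilingual/multi-dataset/xtts_v2` and the `speaker_wav` and `language` arguments of `tts_to_file`. The helper name `clone_voice` and its default output path are hypothetical.

```python
from pathlib import Path


def clone_voice(text, speaker_wav, out_path="cloned.wav", language="en"):
    """Synthesize `text` in the voice of the reference recording `speaker_wav`."""
    reference = Path(speaker_wav)
    if not reference.is_file():
        # Fail early, before the (large) model is downloaded or loaded.
        raise FileNotFoundError(f"Reference recording not found: {reference}")

    # Imported lazily so the check above works even if TTS is not installed.
    from TTS.api import TTS

    # Assumed model name: the multilingual XTTS v2 checkpoint.
    tts = TTS(model_name="tts_models/multilingual/multi-dataset/xtts_v2")
    tts.tts_to_file(
        text=text,
        speaker_wav=str(reference),
        language=language,
        file_path=out_path,
    )
    return out_path
```

A call such as `clone_voice("Hello, world!", "my_voice.wav")` would then write `cloned.wav`, where `my_voice.wav` stands in for a short, clean recording of the target speaker.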

Usage Guide

Installation process

- Clone the repository: First, clone the Coqui TTS GitHub repository.

```bash
git clone https://github.com/coqui-ai/TTS.git
cd TTS
```

- Install dependencies: Use pip to install the required dependencies.

```bash
pip install -r requirements.txt
```

- Install TTS: Run the following command to install TTS.

```bash
python setup.py install
```
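One way to confirm that the installation succeeded is to check whether the package can be found by the current Python interpreter. The snippet below is a minimal sketch using only the standard library. The helper name `is_installed` is hypothetical, and the check only confirms that the `TTS` package is discoverable, not that every dependency works.

```python
import importlib.util


def is_installed(module_name: str) -> bool:
    """Return True if `module_name` can be found in the current environment."""
    return importlib.util.find_spec(module_name) is not None


if is_installed("TTS"):
    print("TTS is importable.")
else:
    print("TTS is not installed in this environment.")
```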

Usage

- Loading pre-trained models: Pre-trained models can be used directly for text-to-speech conversion.

```python
from TTS.api import TTS

# Download (on first use) and load a pre-trained English model.
tts = TTS(model_name="tts_models/en/ljspeech/tacotron2-DDC", progress_bar=True)

# Synthesize speech and write it to a WAV file.
tts.tts_to_file(text="Hello, world!", file_path="output.wav")
```
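The model name passed to `TTS` follows a `type/language/dataset/model` pattern. The small helper below splits such a name into its parts, which can be handy when choosing among pre-trained models. It is a sketch: `ModelName` and `parse_model_name` are hypothetical names and not part of the TTS library.

```python
from typing import NamedTuple


class ModelName(NamedTuple):
    model_type: str  # e.g. "tts_models" or "vocoder_models"
    language: str
    dataset: str
    model: str


def parse_model_name(name: str) -> ModelName:
    """Split 'type/language/dataset/model' into its four components."""
    parts = name.split("/")
    if len(parts) != 4 or not all(parts):
        raise ValueError(f"Expected 'type/language/dataset/model', got: {name!r}")
    return ModelName(*parts)


info = parse_model_name("tts_models/en/ljspeech/tacotron2-DDC")
print(info.language, info.dataset, info.model)  # en ljspeech tacotron2-DDC
```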

- Training a new model: You can train a new model on your own dataset.

```bash
python TTS/bin/train_tts.py --config_path config.json --dataset_path /path/to/dataset
```

- Fine-tuning an existing model: An existing model can be fine-tuned for a specific application scenario by restoring it from a pre-trained checkpoint.

```bash
python TTS/bin/train_tts.py --config_path config.json --dataset_path /path/to/dataset --restore_path /path/to/pretrained/model
```
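To launch training from Python, for example inside an experiment script, one option is to assemble the argument list and hand it to `subprocess.run`. This is only a sketch. The helper names `build_train_command` and `run_training` are hypothetical, and the flags are copied from the training and fine-tuning commands in this article rather than checked against a specific TTS release.

```python
import subprocess
import sys


def build_train_command(config_path, dataset_path, restore_path=None):
    """Return the training command as an argument list (no shell quoting needed)."""
    cmd = [
        sys.executable, "TTS/bin/train_tts.py",
        "--config_path", str(config_path),
        "--dataset_path", str(dataset_path),
    ]
    if restore_path is not None:
        # Passing a checkpoint turns a fresh training run into fine-tuning.
        cmd += ["--restore_path", str(restore_path)]
    return cmd


def run_training(config_path, dataset_path, restore_path=None):
    """Run the training script from the repository root and wait for it to finish."""
    # check=True raises CalledProcessError if the script exits with an error.
    subprocess.run(build_train_command(config_path, dataset_path, restore_path), check=True)
```

Building the list separately from running it makes the command easy to log or inspect before a long training job starts.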

Detailed Procedure

- Data preparation: Prepare the training dataset and make sure its format meets the requirements.

- Configuration file: Edit the configuration file config.json to set the training parameters.

- Start training: Run the training script to start model training.

- Monitor training: Follow the training logs and model performance in the terminal and TensorBoard.

- Model evaluation: After training completes, evaluate the model's performance and make any necessary adjustments and optimizations.
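The configuration step can also be scripted. The sketch below loads a JSON configuration file, overrides a few training parameters, and writes the file back. The helper name `update_config` is hypothetical, and the keys `batch_size`, `epochs`, and `lr` in the usage note are assumptions for illustration; the keys a model actually accepts depend on its configuration.

```python
import json
from pathlib import Path


def update_config(config_path, **overrides):
    """Apply keyword overrides to a JSON config file and return the new config."""
    path = Path(config_path)
    config = json.loads(path.read_text(encoding="utf-8"))
    config.update(overrides)  # top-level keys only; nested sections are replaced whole
    path.write_text(json.dumps(config, indent=4), encoding="utf-8")
    return config
```

For example, `update_config("config.json", batch_size=32, epochs=1000, lr=1e-4)` would rewrite those three values in place and leave every other key untouched.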

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
