CoD: Chain of Density

Original text: https://arxiv.org/pdf/2309.04269

Speed reading: From Sparse to Dense: GPT-4 Summarization with Chain of Density Prompting


Abstract

Determining the "right" amount of information to include in an automatic text summary is a difficult task. A good summary should be detailed and entity-centric without being overly dense and hard to follow. To better understand this trade-off, we propose a prompting method called Chain of Density (CoD), with which GPT-4 generates increasingly dense summaries. We find that CoD summaries are more abstractive, exhibit more fusion, and have less lead bias than GPT-4 summaries generated by a vanilla prompt. In a human preference study on 100 CNN/DailyMail articles, we find that humans prefer GPT-4 summaries that are denser than those generated by a vanilla prompt and almost as dense as human-written summaries.

1. Introduction

Automatic summarization has come a long way in recent years, largely because of a paradigm shift from supervised fine-tuning to zero-shot prompting of large language models (LLMs). For example, with carefully designed prompts and no additional training, GPT-4 can exert fine-grained control over summary characteristics such as length, topic, and style.

However, the information density of a summary is an often overlooked aspect. In theory, as a compression of another text, a summary should be denser, that is, contain a higher concentration of information, than the source document. Yet the right density remains an open question. A summary that contains too little detail is uninformative; one that contains too much information can become hard to follow unless its overall length is increased. Conveying more information within a fixed token budget requires a combination of abstraction, compression, and fusion. There is a limit to how much space can be made for additional information before a summary becomes illegible or even factually incorrect.

2. Chain of Density (CoD) prompting

2.1 Objectives

Our goal is to generate a set of GPT-4 summaries with varying levels of information density while controlling for length, which has proven to be a strong confounder when evaluating summaries. To this end, we design a single Chain of Density (CoD) prompt in which an initial summary is generated and then made increasingly entity-dense. Specifically, for a fixed number of rounds, a set of unique salient entities is identified in the source text and fused into the previous summary without increasing its length. The first summary is entity-sparse because it focuses on only 1-3 initial entities.

2.2 Data

We randomly sampled 100 articles from the CNN/DailyMail summarization test set to generate CoD summaries.

2.3 Reference points

For comparison, we compare the statistics of CoD summaries with those of the human-written, bullet-point-style reference summaries and of GPT-4 summaries generated with a vanilla prompt:

- Vanilla prompt: "Write a very short summary of the article in no more than 70 words."

We set the requested length so that these summaries match the length of the CoD summaries (see Table 1 below).

2.4 Example prompt

A condensed version of the CoD prompt:

Generate a very short summary of no more than 70 words. Then, step by step, add 1-3 missing important entities without increasing the length.

The complete CoD prompt (original English):

Article: {{ ARTICLE }}

You will generate increasingly concise, entity-dense summaries of the above article.

Repeat the following 2 steps 5 times.

Step 1. Identify 1-3 informative entities (";" delimited) from the article which are missing from the previously generated summary.

Step 2. Write a new, denser summary of identical length which covers every entity and detail from the previous summary plus the missing entities.

A missing entity is:

- relevant to the main story,

- specific yet concise (5 words or fewer),

- novel (not in the previous summary),

- faithful (present in the article),

- anywhere (can be located anywhere in the article).

Guidelines:

- The first summary should be long (4-5 sentences, ~80 words) yet highly non-specific, containing little information beyond the entities marked as missing. Use overly verbose language and fillers (e.g., "this article discusses") to reach ~80 words.

- Make every word count: rewrite the previous summary to improve flow and make space for additional entities.

- Make space with fusion, compression, and removal of uninformative phrases like "the article discusses".

- The summaries should become highly dense and concise yet self-contained, i.e., easily understood without the article.

- Missing entities can appear anywhere in the new summary.

- Never drop entities from the previous summary. If space cannot be made, add fewer new entities.

Remember, use the exact same number of words for each summary.

Answer in JSON. The JSON should be a list (length 5) of dictionaries whose keys are "Missing_Entities" and "Denser_Summary".
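Filling the template is plain string substitution. The Python sketch below is an illustration, not code from the paper: the names `COD_TEMPLATE` and `build_cod_prompt` are hypothetical, and the template is the prompt above copied verbatim. It uses `str.replace` rather than `str.format`, because the literal `{{ }}` braces would clash with format syntax.

```python
# Minimal sketch: fill the CoD prompt template with an article.
# COD_TEMPLATE and build_cod_prompt are illustrative names, not from the paper.

COD_TEMPLATE = """Article: {{ ARTICLE }}

You will generate increasingly concise, entity-dense summaries of the above article.

Repeat the following 2 steps 5 times.

Step 1. Identify 1-3 informative entities (";" delimited) from the article which are missing from the previously generated summary.
Step 2. Write a new, denser summary of identical length which covers every entity and detail from the previous summary plus the missing entities.

A missing entity is:
- relevant to the main story,
- specific yet concise (5 words or fewer),
- novel (not in the previous summary),
- faithful (present in the article),
- anywhere (can be located anywhere in the article).

Guidelines:
- The first summary should be long (4-5 sentences, ~80 words) yet highly non-specific, containing little information beyond the entities marked as missing. Use overly verbose language and fillers (e.g., "this article discusses") to reach ~80 words.
- Make every word count: rewrite the previous summary to improve flow and make space for additional entities.
- Make space with fusion, compression, and removal of uninformative phrases like "the article discusses".
- The summaries should become highly dense and concise yet self-contained, i.e., easily understood without the article.
- Missing entities can appear anywhere in the new summary.
- Never drop entities from the previous summary. If space cannot be made, add fewer new entities.

Remember, use the exact same number of words for each summary.

Answer in JSON. The JSON should be a list (length 5) of dictionaries whose keys are "Missing_Entities" and "Denser_Summary"."""

PLACEHOLDER = "{{ ARTICLE }}"


def build_cod_prompt(article: str, template: str = COD_TEMPLATE) -> str:
    """Return the CoD prompt with the article substituted for the placeholder."""
    if PLACEHOLDER not in template:
        raise ValueError("template has no {{ ARTICLE }} placeholder")
    # str.replace (not str.format) so the literal {{ }} braces are left alone.
    return template.replace(PLACEHOLDER, article.strip())
```

For example, `build_cod_prompt(article_text)` returns the full prompt, ready to send as a single user message.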

Prompt explanation:

This detailed CoD prompt is designed to guide GPT-4 to generate progressively denser summaries. The core idea is to add new key entities to the summary in each round while keeping its length constant. Let's break down the parts of the prompt:

- `Article: {{ ARTICLE }}`: a placeholder for the article to be summarized; replace `{{ ARTICLE }}` with the article text.
- `You will generate increasingly concise, entity-dense summaries of the above article.`: the core instruction, telling the model that the goal is a sequence of increasingly concise, entity-dense summaries.
- `Repeat the following 2 steps 5 times.`: fixes the number of iterations at five; the model repeats the next two steps for five rounds, gradually increasing density.
- `Step 1. Identify 1-3 informative entities (";" delimited) from the article which are missing from the previously generated summary.`: identify missing entities. The model picks one to three informative entities from the article that have not appeared in previously generated summaries, separated by semicolons.
- `Step 2. Write a new, denser summary of identical length which covers every entity and detail from the previous summary plus the missing entities.`: generate a denser summary. The model rewrites the previous summary to include the entities identified in Step 1. Because the new summary must be exactly as long as the previous one, the model is forced to compress and fuse information to make room for the new entities.
- `A missing entity is:`: criteria that keep the selected entities relevant and of high quality:
  - `relevant to the main story`: related to the article's main story.
  - `specific yet concise (5 words or fewer)`: specific, but no more than five words long.
  - `novel (not in the previous summary)`: not already present in earlier summaries.
  - `faithful (present in the article)`: must actually appear in the article.
  - `anywhere (can be located anywhere in the article)`: may come from any part of the article.

`Guidelines:`: a set of more detailed instructions on how to carry out the summarization task:

- `The first summary should be long (4-5 sentences, ~80 words) yet highly non-specific...`: guidance for the first summary. It should be long (~80 words, 4-5 sentences) but carry little information; its main purpose is to lay the groundwork for later iterations, so verbose language and filler words are allowed.
- `Make every word count: rewrite the previous summary to improve flow and make space for additional entities.`: tighten the language. Each previous summary is rewritten to improve its flow and free up room for new entities.
- `Make space with fusion, compression, and removal of uninformative phrases like "the article discusses".`: space-making techniques. Names fusion, compression, and deletion of uninformative phrases as the ways to shorten the summary.
- `The summaries should become highly dense and concise yet self-contained, i.e., easily understood without the article.`: quality target. The final summary should be dense and concise but self-contained, so that it can be understood without reading the original article.
- `Missing entities can appear anywhere in the new summary.`: entity placement. Newly added entities may appear anywhere in the new summary.
- `Never drop entities from the previous summary. If space cannot be made, add fewer new entities.`: entity retention. Entities from earlier summaries must never be deleted; if there is not enough room, fewer new entities should be added.
- `Remember, use the exact same number of words for each summary.`: uniform length. Re-emphasizes that every round's summary must have the same length.
- `Answer in JSON. The JSON should be a list (length 5) of dictionaries whose keys are "Missing_Entities" and "Denser_Summary".`: output format. The model must answer in JSON: a list of five dictionaries, each with the keys "Missing_Entities" (the entities added in that round) and "Denser_Summary" (the denser summary produced in that round).
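Several of these rules can be checked mechanically once the model's answer has been parsed. The following Python sketch is a heuristic, not part of the paper's method: the names are hypothetical, it accepts `Missing_Entities` either as a `;`-delimited string or as a list, and it uses case-insensitive substring matching as a rough stand-in for real entity matching.

```python
# Heuristic checks on a parsed CoD answer (a list of dicts). Illustrative only:
# substring matching approximates entity matching and misses paraphrases.
from typing import Any

REQUIRED_KEYS = {"Missing_Entities", "Denser_Summary"}


def split_entities(field: Any) -> list[str]:
    """Accept a ';'-delimited string or a list of strings; drop blanks."""
    parts = field.split(";") if isinstance(field, str) else list(field or [])
    return [p.strip() for p in parts if p and p.strip()]


def check_cod_output(rounds: list[dict], expected_rounds: int = 5) -> list[str]:
    """Return a list of problems; an empty list means no rule was violated."""
    problems: list[str] = []
    if len(rounds) != expected_rounds:
        problems.append(f"expected {expected_rounds} rounds, got {len(rounds)}")
    seen: list[str] = []  # entities introduced in earlier rounds
    for i, r in enumerate(rounds, start=1):
        absent = REQUIRED_KEYS - set(r)
        if absent:
            problems.append(f"round {i}: missing keys {sorted(absent)}")
            continue
        summary = r["Denser_Summary"].lower()
        new = split_entities(r["Missing_Entities"])
        if len(new) > 3:
            problems.append(f"round {i}: {len(new)} new entities (max 3)")
        for ent in new:
            if len(ent.split()) > 5:
                problems.append(f"round {i}: entity longer than 5 words: {ent!r}")
        # "Never drop entities": everything added so far should still appear.
        for ent in seen + new:
            if ent.lower() not in summary:
                problems.append(f"round {i}: entity not in summary: {ent!r}")
        seen.extend(new)
    return problems
```

Because fusion often rewords an entity (for instance, turning "support for Ukrainian refugees" into "Ukrainian refugee support"), a flag from this check is a cue for manual review rather than proof that an entity was dropped.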

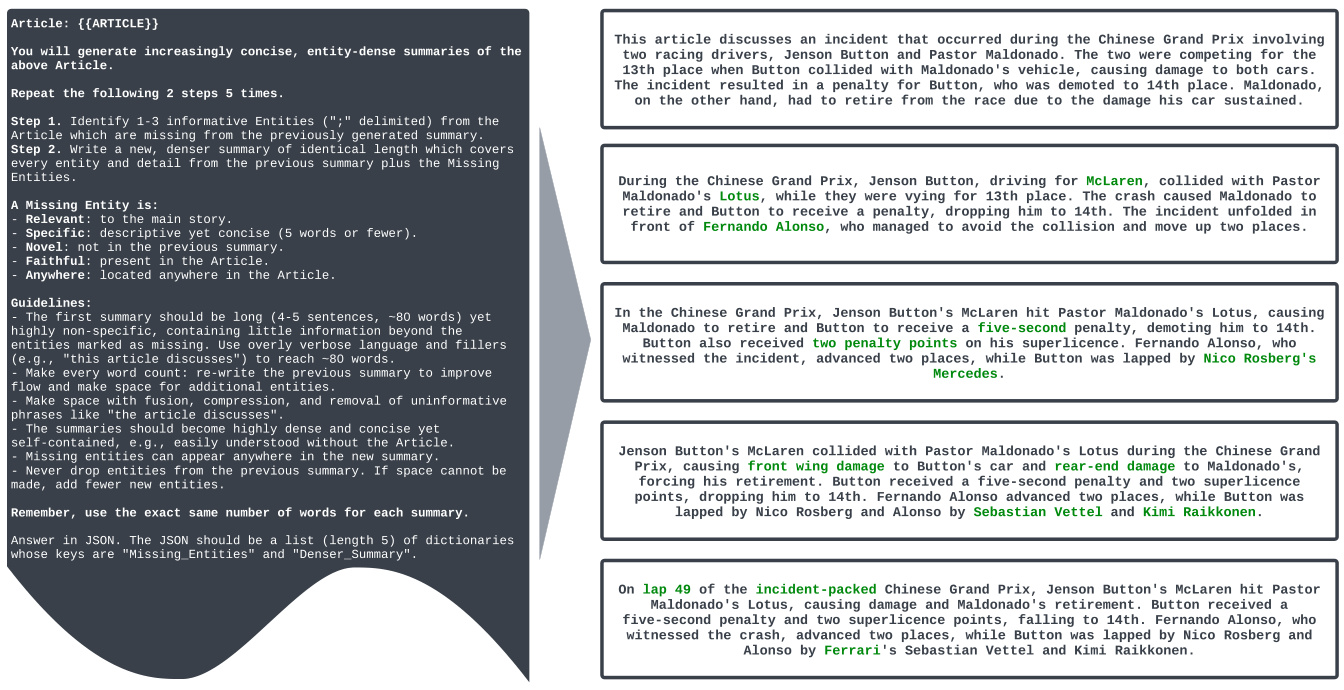

Figure 1: The Chain of Density (CoD) prompt and example output. At each step, 1-3 additional details (entities) are added to the previous summary without increasing its length. Existing content is rewritten (e.g., compressed, fused) to make room for the new entities. Half of the annotators (2/4) preferred the second-to-last summary, and the rest preferred the final one.

A worked example of using the CoD prompt:

Suppose we have a news article about President Biden's visit to Poland, and we want to use the CoD prompt to generate a series of summaries of increasing density.

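Step 1: Prepare the input article

First, prepare the text of the news article, for example:

U.S. President Joe Biden unexpectedly arrived in Poland for a visit and held talks with Polish President Andrzej Duda. The visit coincides with the first anniversary of the Russia-Ukraine conflict, and the two leaders are expected to discuss regional security, further support for Ukraine, and strengthening NATO's eastern flank. Biden's visit recognizes Poland's efforts in supporting Ukrainian refugees and providing military aid to Ukraine. In addition, Biden plans to deliver a major speech in Warsaw on the Russia-Ukraine conflict and to meet U.S. troops stationed in Poland.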

Step 2: Build the API request (using the OpenAI API as an example)

We send the request through the OpenAI API (or any other API that serves GPT-4). The request body contains our CoD prompt, with `{{ ARTICLE }}` replaced by the prepared article text.

```json
{
  "model": "gpt-4",
  "messages": [
    {
      "role": "user",
      "content": "Article: U.S. President Joe Biden unexpectedly arrived in Poland for a visit and held talks with Polish President Andrzej Duda. The visit coincides with the first anniversary of the Russia-Ukraine conflict, and the two leaders are expected to discuss regional security, further support for Ukraine, and strengthening NATO's eastern flank. Biden's visit recognizes Poland's efforts in supporting Ukrainian refugees and providing military aid to Ukraine. In addition, Biden plans to deliver a major speech in Warsaw on the Russia-Ukraine conflict and to meet U.S. troops stationed in Poland.\n\nYou will generate increasingly concise, entity-dense summaries of the above article.\n\nRepeat the following 2 steps 5 times.\n\nStep 1. Identify 1-3 informative entities (\";\" delimited) from the article which are missing from the previously generated summary.\nStep 2. Write a new, denser summary of identical length which covers every entity and detail from the previous summary plus the missing entities.\n\nA missing entity is:\n- relevant to the main story,\n- specific yet concise (5 words or fewer),\n- novel (not in the previous summary),\n- faithful (present in the article),\n- anywhere (can be located anywhere in the article).\n\nGuidelines:\n\n- The first summary should be long (4-5 sentences, ~80 words) yet highly non-specific, containing little information beyond the entities marked as missing. Use overly verbose language and fillers (e.g., \"this article discusses\") to reach ~80 words.\n- Make every word count: rewrite the previous summary to improve flow and make space for additional entities.\n- Make space with fusion, compression, and removal of uninformative phrases like \"the article discusses\".\n- The summaries should become highly dense and concise yet self-contained, i.e., easily understood without the article.\n- Missing entities can appear anywhere in the new summary.\n- Never drop entities from the previous summary. If space cannot be made, add fewer new entities.\n\nRemember, use the exact same number of words for each summary.\nAnswer in JSON. The JSON should be a list (length 5) of dictionaries whose keys are \"Missing_Entities\" and \"Denser_Summary\"."
    }
  ],
  "max_tokens": 1000,
  "temperature": 0.7
}
```

GPT-4 is a chat model, so the prompt goes in the `messages` array of the Chat Completions endpoint (`/v1/chat/completions`) rather than in a `prompt` field. JSON also does not allow comments: `max_tokens` is set to 1000 so the model has enough room to produce the complete JSON output, and `temperature` can be adjusted to control the randomness of the output.
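The same request can be built in code. This Python sketch uses only the standard library (`urllib.request`, `json`); the endpoint URL and the `Authorization: Bearer` header follow OpenAI's public HTTP API, while the function names and defaults are illustrative assumptions. `build_request` only constructs the request, so it can be inspected offline; `send` performs the network call.

```python
# Sketch: build (and optionally send) a Chat Completions request with the stdlib.
# Function names and defaults are illustrative, not an official client.
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(prompt: str, api_key: str, model: str = "gpt-4",
                  max_tokens: int = 1000,
                  temperature: float = 0.7) -> urllib.request.Request:
    body = {
        "model": model,
        # The whole CoD prompt is sent as a single user message.
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(req: urllib.request.Request, timeout: float = 120.0) -> dict:
    """Send the request and return the decoded JSON envelope (needs network access)."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

A call might look like `send(build_request(prompt, api_key=os.environ["OPENAI_API_KEY"]))`; the returned envelope carries the model's JSON answer as a string in `choices[0]["message"]["content"]`.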

Step 3: Send the API request and receive the JSON response

Send the request above to the OpenAI API endpoint. The API returns a JSON response; the assistant message inside it should contain the five summaries of increasing density, together with the missing entities added at each step.

Step 4: Parse the JSON response and use the summaries

Parse the JSON in the model's reply. You should get a list similar to the following:

```json
[
  {
    "Missing_Entities": "",
    "Denser_Summary": "This article discusses a visit. It is about a president visiting a country. The president is American. The country is Poland. This is related to international relations. The visit is happening during a time of conflict. This conflict involves Russia and Ukraine. The visit aims to show support and discuss important matters. More details will be provided."
  },
  {
    "Missing_Entities": "Joe Biden; Andrzej Duda",
    "Denser_Summary": "U.S. President Joe Biden visited Poland and met with Polish President Andrzej Duda. This visit is about international relations and is happening during the Russia-Ukraine conflict. They will discuss support and security. More details are expected."
  },
  {
    "Missing_Entities": "one-year anniversary of the Russia-Ukraine conflict; regional security; support for Ukraine",
    "Denser_Summary": "U.S. President Joe Biden met Polish President Andrzej Duda in Poland on the one-year anniversary of the Russia-Ukraine conflict. They discussed regional security and support for Ukraine, focusing on international relations."
  },
  {
    "Missing_Entities": "strengthening NATO's eastern flank; support for Ukrainian refugees; military aid to Ukraine",
    "Denser_Summary": "On the Russia-Ukraine conflict's one-year anniversary, U.S. President Joe Biden visited Poland, meeting President Andrzej Duda to discuss regional security, support for Ukraine (including refugees and military aid), and strengthening NATO's eastern flank."
  },
  {
    "Missing_Entities": "Warsaw; major speech; U.S. troops in Poland",
    "Denser_Summary": "To mark the Russia-Ukraine conflict's one-year mark, U.S. President Joe Biden visited Warsaw, Poland, meeting President Andrzej Duda to discuss regional security, Ukrainian refugee and military aid support, strengthening NATO's eastern flank, and Biden's planned major speech and meeting with U.S. troops in Poland."
  }
]
```
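In the Chat Completions response, this list arrives as a string inside the assistant message, so it needs its own `json.loads`. A minimal Python sketch, assuming the standard response envelope (`choices[0]["message"]["content"]`); the helper names are hypothetical, and the optional fence stripping is a defensive assumption for models that wrap their JSON in a Markdown code fence.

```python
# Extract the CoD list from a Chat Completions response and select a step.
# Assumes the standard envelope; helper names are illustrative.
import json

FENCE = "`" * 3  # a Markdown code fence, built indirectly


def extract_rounds(response: dict) -> list[dict]:
    content = response["choices"][0]["message"]["content"].strip()
    # Defensive: remove a surrounding Markdown fence (optionally tagged "json").
    if content.startswith(FENCE):
        content = content.strip("`").strip()
        content = content.removeprefix("json")
    rounds = json.loads(content)
    if not isinstance(rounds, list):
        raise ValueError("expected a JSON list of CoD rounds")
    return rounds


def pick_summary(rounds: list[dict], step: int) -> str:
    """Return the summary for a 1-based CoD step (1 = sparsest)."""
    if not 1 <= step <= len(rounds):
        raise IndexError(f"step must be between 1 and {len(rounds)}")
    return rounds[step - 1]["Denser_Summary"]
```

With the list in hand, `pick_summary(rounds, 3)` returns the step-3 summary, and any other step can be selected the same way.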

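Pick the summary whose density suits your needs. Because CoD aims to keep the length constant, the steps differ mainly in how much information they pack in: early steps are sparser and easier to read, while later steps (such as the fourth or fifth) are denser but can be harder to follow. In the paper's human study, the most-preferred steps fell in the middle (plurality at step 2, median at step 3).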

Practical application scenarios:

- News aggregation and content recommendation: provide summaries of different densities for different users or platforms to meet different information needs.

- Information retrieval: show summaries of different densities in search results to help users quickly judge an article's relevance.

- Reading comprehension aid: help readers build up their understanding of a text step by step by moving from sparser to denser summaries.

- Social media content generation: generate summaries of different densities for different social media platforms (e.g., Twitter, Facebook).

Following the steps above, you can use the CoD prompt with GPT-4 in practice to generate high-quality summaries at multiple densities.

3. Statistics

3.1 Direct statistics

In Table 1, we count tokens with NLTK, count unique entities with spaCy, and compute entity density as the ratio of the two. The CoD prompt largely adheres to a fixed token budget. In fact, the second step reduces length by 5 tokens on average (from 72 to 67) as unnecessary words are removed from the initially verbose summary. Entity density rises from an initial 0.089, lower than that of human-written and vanilla GPT-4 summaries (0.151 and 0.122, respectively), to 0.167 after 5 densification steps.

| CoD step | Tokens | Entities | Density (E/T) |
|---|---|---|---|
| 1 | 72 | 6.4 | 0.089 |
| 2 | 67 | 8.7 | 0.129 |
| 3 | 67 | 9.9 | 0.148 |
| 4 | 69 | 10.8 | 0.158 |
| 5 | 72 | 12.1 | 0.167 |
| Human | 60 | 8.8 | 0.151 |
| Vanilla GPT-4 | 70 | 8.5 | 0.122 |

Table 1: Direct statistics of CoD summaries by step, compared with human-written and vanilla GPT-4 summaries.
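The density statistic itself is simple: unique entities divided by tokens. The paper uses NLTK for tokens and spaCy for entities; to keep this Python sketch dependency-free, a regex tokenizer stands in for NLTK and the entity list is passed in by the caller (in practice it would come from an NER model). Counts from this approximation will not exactly match NLTK or spaCy output, and the function names are illustrative.

```python
# Sketch of entity density = unique entities / tokens.
# A regex tokenizer approximates NLTK; entities are supplied by the caller.
import re


def tokenize(text: str) -> list[str]:
    # Word runs and individual punctuation marks, roughly like a word tokenizer.
    return re.findall(r"\w+|[^\w\s]", text)


def entity_density(text: str, entities: list[str]) -> float:
    tokens = tokenize(text)
    # Count each entity once, ignoring case and surrounding whitespace.
    unique = {e.strip().lower() for e in entities if e.strip()}
    return len(unique) / len(tokens) if tokens else 0.0
```

For instance, "Joe Biden met Andrzej Duda in Warsaw." has 8 tokens under this tokenizer, so three unique entities give a density of 0.375.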

3.2 Indirect statistics

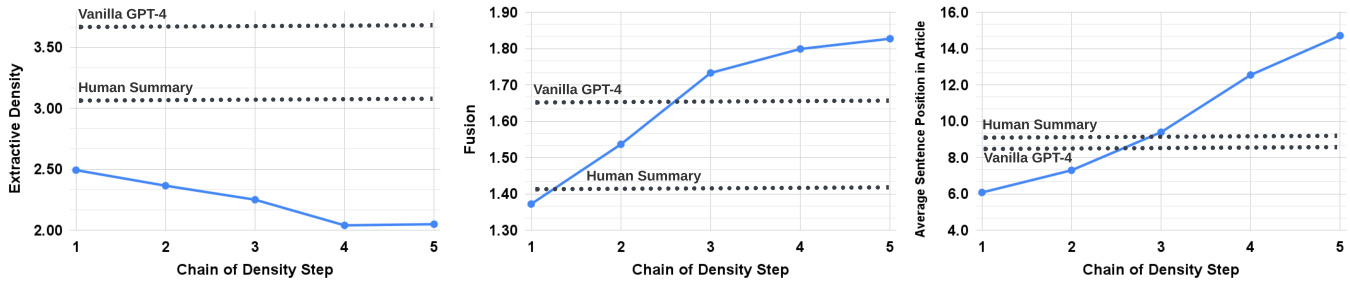

With each CoD step, summaries should become more abstractive, because each summary is repeatedly rewritten to make room for each additional entity. We measure abstractiveness with extractive density: the average squared length of extractive fragments (lower values mean a more abstractive summary). Similarly, fusion should increase monotonically as entities are added to a fixed-length summary. We use the average number of source sentences aligned to each summary sentence as a proxy for fusion. For alignment, we use the relative ROUGE gain method, which keeps aligning source sentences to a target sentence until the relative ROUGE gain of adding another sentence is no longer positive. We also expect the content distribution, that is, the positions in the article from which summary content is drawn, to shift. Specifically, we expect CoD summaries to start with a strong lead bias and then gradually pull in entities from the middle and end of the article. To measure this, we reuse the alignments from the fusion analysis and compute the average sentence rank of all aligned source sentences. Figure 2 confirms these hypotheses: as the number of rewriting steps grows, abstractiveness increases (left panel), fusion increases (middle panel), and summaries start to include content from the middle and end of the article (right panel). Interestingly, all CoD summaries are more abstractive than both the human-written and the baseline summaries.

Figure 2: CoD summaries grow increasingly abstractive while exhibiting more fusion and less lead bias.
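Extractive density comes from the fragment-matching procedure of Grusky et al. (2018): greedily find the longest token spans a summary shares with the article, then sum the squared span lengths and normalize by summary length (coverage uses unsquared lengths). A Python sketch, assuming both texts are already tokenized and normalized (for example, lowercased); higher density means a more extractive summary, lower means more abstractive. Function names are illustrative.

```python
# Greedy extractive fragments (after Grusky et al., 2018) plus the derived
# coverage and density statistics. Inputs are token lists.


def extractive_fragments(article: list[str], summary: list[str]) -> list[list[str]]:
    fragments = []
    i = 0
    while i < len(summary):
        best: list[str] = []
        j = 0
        while j < len(article):
            if summary[i] == article[j]:
                # Extend the match as far as both sequences agree.
                i_end, j_end = i, j
                while (i_end < len(summary) and j_end < len(article)
                       and summary[i_end] == article[j_end]):
                    i_end += 1
                    j_end += 1
                if i_end - i > len(best):
                    best = summary[i:i_end]
                j = j_end
            else:
                j += 1
        # Skip past the longest fragment found (or one token if none).
        i += max(len(best), 1)
        if best:
            fragments.append(best)
    return fragments


def coverage_and_density(article: list[str], summary: list[str]) -> tuple[float, float]:
    n = len(summary)
    if n == 0:
        return 0.0, 0.0
    frags = extractive_fragments(article, summary)
    coverage = sum(len(f) for f in frags) / n
    density = sum(len(f) ** 2 for f in frags) / n
    return coverage, density
```

For example, with article "the cat sat on the mat" and summary "the cat sat quietly", the only fragment is "the cat sat", giving coverage 3/4 = 0.75 and density 9/4 = 2.25.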

4. Results

4.1 Human preferences

To better understand the trade-offs presented by CoD summaries, we conducted a preference-based human study and a rating-based evaluation with GPT-4.

| CoD step | Annotator 1 (%) | Annotator 2 (%) | Annotator 3 (%) | Annotator 4 (%) | Aggregate (%) |
|---|---|---|---|---|---|
| 1 | 3.0 | 2.0 | 13.0 | 17.4 | 8.3 |
| 2 | 25.0 | 28.0 | 43.0 | 31.4 | 30.8 |
| 3 | 22.0 | 28.0 | 21.0 | 24.4 | 23.0 |
| 4 | 29.0 | 25.0 | 13.0 | 26.7 | 22.5 |
| 5 | 21.0 | 17.0 | 10.0 | 16.3 | 15.5 |

Table 2: Percentage of first-place votes for CoD summaries, by step. Based on aggregate preferences, the mode (plurality) CoD step is 2, the median is 3, and the expected value is 3.06.

First, we report a low Fleiss' kappa (Fleiss, 1971) of 0.112, which reflects the subtle differences between summaries and the subjective nature of the task. Recent work has likewise noted low instance-level agreement when judging GPT-based summaries (Goyal et al., 2022).
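Fleiss' kappa compares the observed agreement among raters with the agreement expected by chance. As a sketch, this study can be framed as 100 items, each rated by 4 annotators who choose one of 5 CoD steps, giving a 100 × 5 count matrix whose rows sum to 4; that framing is an assumption about how the statistic was computed, not a detail from the paper. Python, standard library only:

```python
# Fleiss' kappa: counts[i][j] is the number of raters who put item i in
# category j; every item must have the same number of ratings.


def fleiss_kappa(counts: list[list[int]]) -> float:
    n_items = len(counts)
    n_raters = sum(counts[0])
    if n_raters < 2 or any(sum(row) != n_raters for row in counts):
        raise ValueError("need the same number (>= 2) of ratings per item")
    # Observed agreement per item: share of rater pairs that agree.
    p_items = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_bar = sum(p_items) / n_items
    # Chance agreement from the overall category proportions.
    total = n_items * n_raters
    n_cats = len(counts[0])
    p_cats = [sum(row[j] for row in counts) / total for j in range(n_cats)]
    p_chance = sum(p * p for p in p_cats)
    return (p_bar - p_chance) / (1 - p_chance)
```

Kappa is 1 for perfect agreement, near 0 for chance-level agreement, and undefined (division by zero) if every rating falls into the same category.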

Yet at the system level, some trends start to emerge. For 3 of the 4 annotators, CoD step 2 received the largest share of first-place votes across the 100 examples (28%, 43%, and 31.4%, respectively). However, in aggregate, 61% of first-place summaries (23.0 + 22.5 + 15.5) involved three or more densification steps. The median preferred CoD step falls in the middle (3), and the expected step is 3.06.
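These aggregate figures can be reproduced from the aggregate column of the vote table. Because the rounded percentages add up to 100.1 rather than 100, this Python sketch normalizes by their total; `AGGREGATE` and `summarize_votes` are illustrative names.

```python
# Plurality, median, and expected CoD step from first-place vote shares.
# AGGREGATE copies the "Aggregate" column of the vote table (percent).

AGGREGATE = {1: 8.3, 2: 30.8, 3: 23.0, 4: 22.5, 5: 15.5}


def summarize_votes(shares: dict[int, float]) -> tuple[int, int, float, float]:
    total = sum(shares.values())  # 100.1 here because of rounding
    plurality = max(shares, key=shares.get)
    # Median: the first step at which the cumulative share reaches half.
    cumulative = 0.0
    median = max(shares)
    for step in sorted(shares):
        cumulative += shares[step]
        if cumulative >= total / 2:
            median = step
            break
    expected = sum(step * share for step, share in shares.items()) / total
    share_3_plus = sum(share for step, share in shares.items() if step >= 3)
    return plurality, median, expected, share_3_plus
```

Run on `AGGREGATE`, it yields a plurality of step 2, a median of step 3, an expected step of about 3.06, and a 61.0% share for steps 3 to 5, matching the figures in the text.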

Based on the average density of step-3 summaries, we can roughly infer a preferred entity density of about 0.15 among CoD candidates. As Table 1 shows, this density is in line with human-written summaries (0.151) but noticeably higher than summaries produced by a vanilla GPT-4 prompt (0.122).

4.2 Automatic metrics

As an evaluator, GPT-4 has been shown to align adequately with human judgments (Fu et al., 2023; Liu et al., 2023a) and may even outperform crowd workers on some annotation tasks (Gilardi et al., 2023). To complement our human evaluation, we prompt GPT-4 to rate CoD summaries from 1 to 5 along the following dimensions: Informative, Quality, Coherence, Attributable, and Overall. The definitions of Informative, Quality, and Attributable come from Aharoni et al. (2023), while Coherence comes from Fabbri et al. (2021). Overall aims to capture these qualities jointly. See Appendix A for the prompts used to elicit scores for each dimension. Table 3 shows that densification correlates with informativeness, but only up to a point: the score peaks at step 4 (4.74). The article-free dimensions, Quality and Coherence, decline after steps 2 and 1, respectively. All summaries are judged attributable to the source article. Overall scores skew toward denser and more informative summaries, with step 4 scoring highest. Averaged across dimensions, the first and last CoD steps are least favored, while the middle three steps score close to one another (4.78, 4.77, and 4.76, respectively).

In Appendix A, we report that the Overall metric has the highest summary-level correlation with human judgments (Pearson correlation of 0.31), but note that correlations remain low overall, a phenomenon also observed by Deutsch et al. (2022) when summaries are of similar quality.

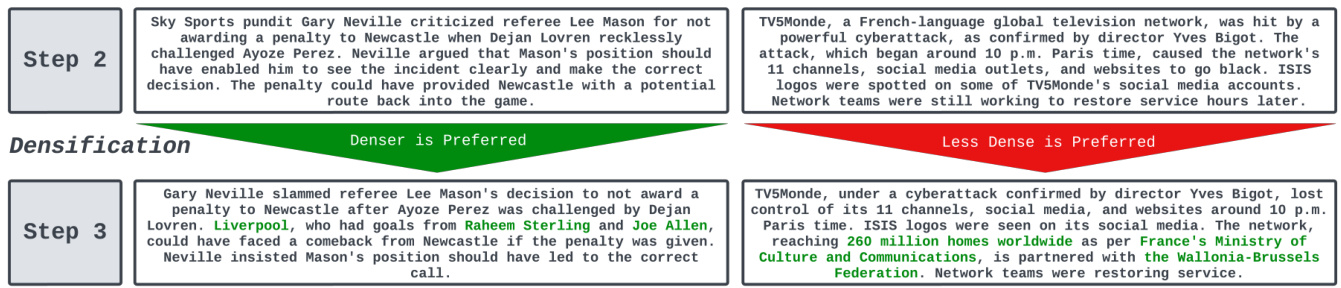

4.3 Qualitative analysis

There is a clear trade-off between the coherence/readability of summaries and their informativeness. To illustrate, Figure 4 shows two CoD steps: one in which the summary is improved by more detail and one in which it is compromised. On average, intermediate CoD summaries strike this balance best, but we leave it to future work to define and quantify this trade-off precisely.

Figure 4: An example of a human-preferred densification step (left) and one that is not preferred (right). On the left, the bottom summary is preferred because the added "Liverpool" and the goal-scorers are relevant to the story. The second summary also makes room through sensible compression, e.g., condensing "potential routes back into the game" into "counterattacks". On the right, adding details about "TV5Monde" does not make up for the awkward fusion of entities ("cyberattack" and "Yves Bigot"), a direct result of having to tighten the previous summary.

5. Related work

GPT summarization

Goyal et al. (2022) benchmarked GPT-3 on news article summarization and found that humans preferred GPT-3 summaries over previous supervised baselines, a preference not reflected by existing reference-based and reference-free metrics. Zhang et al. (2023) found that when high-quality reference summaries are elicited from freelance writers, zero-shot GPT-3 summaries perform on par with humans.

Entity-based summarization

Narayan et al. (2021) propose generating entity chains as a planning step for supervised fine-tuning of summarization models, in contrast to keywords (Li et al., 2020; Dou et al., 2021) or purely extractive units (Dou et al., 2021; Adams et al., 2023a). Entities have also been incorporated into summarization as a form of control (Liu and Chen, 2021; He et al., 2022; Maddela et al., 2022), to improve faithfulness (Nan et al., 2021; Adams et al., 2022), and as units of evaluation (Cao et al., 2022; Adams et al., 2023b).

6. Conclusion

We study the impact of summary densification on human preferences for overall quality. We find that a degree of densification is preferred, but when summaries contain too many entities, it is hard to maintain readability and coherence. We open-source the annotated test set as well as a larger unannotated training set for further research on fixed-length, variable-density summarization.

7. Limitations

We analyze CoD only for a single domain, news summarization. Annotations show low summary-level agreement but begin to reveal system-level trends, which is consistent with prior work on LLM-based evaluation (Goyal et al., 2022). Finally, GPT-4 is a closed-source model, so we cannot share model weights. We do, however, release all evaluation data, annotations, and 5,000 unannotated CoD summaries for downstream use cases, such as distilling density into open-source models like Llama 2 (Touvron et al., 2023).

References

(The reference list is omitted here.)

Appendix A: GPT-4 metrics

For the GPT-4 Likert-style evaluation, we use the following prompt template.

Summary: {{Summary}} {{Definition}}

Below are the definitions provided for each quality metric.

- Informative: An informative summary captures the important information in the article and presents it accurately and concisely.

- Quality: A high-quality summary is comprehensible and understandable.

- Coherence: A coherent summary is well-structured and well-organized.

- Attributable: Is all the information in the summary fully attributable to the article?

- Overall preference: A good summary should convey the main ideas of the article in a concise, logical, and coherent manner.

The Quality and Coherence prompts do not include the article. These definitions are adapted from prior work on summary annotation (Fabbri et al., 2021; Aharoni et al., 2023).
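A Python sketch of how the per-dimension prompts could be assembled. Only two things come from this appendix: the `Summary: {{Summary}} {{Definition}}` template and the rule that Quality and Coherence omit the article. The `Article:` prefix for the other dimensions and the 1-5 rating instruction (`RATING_INSTRUCTION`) are assumptions, and all names are hypothetical.

```python
# Sketch: one Likert-rating prompt per dimension. Wording beyond the
# "Summary: ... Definition" template is assumed, not quoted from the paper.

DEFINITIONS = {
    "Informative": "An informative summary captures the important information in the article and presents it accurately and concisely.",
    "Quality": "A high-quality summary is comprehensible and understandable.",
    "Coherence": "A coherent summary is well-structured and well-organized.",
    "Attributable": "Is all the information in the summary fully attributable to the article?",
    "Overall Preference": "A good summary should convey the main ideas of the article in a concise, logical, and coherent manner.",
}
ARTICLE_FREE = {"Quality", "Coherence"}  # these prompts omit the article
# Hypothetical wording for the 1-5 scale.
RATING_INSTRUCTION = "Rate the summary from 1 (worst) to 5 (best) for {dimension}."


def likert_prompts(article: str, summary: str) -> dict[str, str]:
    prompts = {}
    for dimension, definition in DEFINITIONS.items():
        parts = [] if dimension in ARTICLE_FREE else [f"Article: {article}"]
        parts.append(f"Summary: {summary}")
        parts.append(RATING_INSTRUCTION.format(dimension=dimension))
        parts.append(definition)
        prompts[dimension] = "\n\n".join(parts)
    return prompts
```

Each prompt would be sent separately, and the returned 1-5 score recorded per summary and dimension.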

| Dimension | Correlation |
|---|---|
| Informative | 0.215 |
| Quality | 0.120 |
| Coherence | 0.178 |
| Attributable | 0.245 |
| Overall | 0.311 |

Table 4: Summary-level Pearson correlation coefficients between human preferences and GPT-4 Likert scores.

Meta-evaluation

To compute summary-level correlations, we first convert the preference data into a vector indicating how many times each summary received a first-place vote. Table 4 shows, unsurprisingly, that the prompt designed to capture the overall summary rating has the highest summary-level Pearson correlation with overall preferences (0.31), but correlations remain low overall.
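The meta-evaluation reduces to counting votes and computing Pearson's r. A standard-library Python sketch; the string summary IDs and function names are illustrative, and `pearson` is the textbook formula (on Python 3.10+, `statistics.correlation` computes the same quantity).

```python
# Sketch of the meta-evaluation: turn first-place votes into per-summary
# counts, then correlate them with GPT-4 Likert scores (Pearson's r).
from collections import Counter
from math import sqrt


def vote_counts(first_place_picks: list[str], summary_ids: list[str]) -> list[int]:
    """first_place_picks holds the summary id chosen in each annotator vote."""
    tally = Counter(first_place_picks)
    return [tally[sid] for sid in summary_ids]


def pearson(xs: list[float], ys: list[float]) -> float:
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)
```

Each summary's vote count is then paired with its GPT-4 Likert score for a given dimension, and `pearson(votes, scores)` gives that dimension's summary-level correlation.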

Summary

This paper explores how Chain of Density (CoD) prompting can make GPT-4 generate increasingly dense summaries. It finds that a certain degree of densification improves summary quality, but too much density hurts readability and coherence. The authors support this finding with a human preference study and an automatic GPT-4 evaluation, and they open-source the annotated data and the unannotated summaries, a valuable resource for future research.

Summary of key points:

- Chain of Density (CoD) prompting: generate increasingly dense summaries by adding missing entities step by step without increasing length.

- Entity density: using entity density as a measure of summary informativeness, the study finds that the density humans prefer is close to that of human-written summaries.

- Abstractiveness and fusion: CoD summaries are more abstractive, exhibit more fusion, and have less lead bias.

- Human preference: humans prefer summaries denser than vanilla GPT-4 summaries, but too much density reduces readability.

- Automatic evaluation: GPT-4's automatic ratings are broadly consistent with human preferences, but the summary-level correlation is low.

Future Research Directions:

- More precise definition of the trade-off: future research could further define and quantify the trade-off between informativeness and readability.

- Other domains: apply CoD to other domains, such as academic papers and technical documentation, to test how well it generalizes.

- Model improvements: explore ways to improve readability while keeping informativeness high.

This paper shows the impressive progress AI has made in text processing and generation, as well as the remaining challenge of balancing informativeness with readability. As the technology advances, AI-generated summaries can be expected to become more intelligent and more human-like.

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
