The Chinchilla Moment and the o3 Moment: The Evolution of the Law of Scale for Large Language Models

Quick read of the article

This article provides a comprehensive and in-depth look at the past and present of the Scaling Law of Large Language Models (LLMs), as well as the future direction of AI research. With clear logic and abundant examples, author Cameron R. Wolfe takes readers from basic concepts to cutting-edge research, presenting an expansive picture of the AI field. The following is a summary and commentary on the core content of the article and the author's viewpoints:

1. Origins and development of the law of scale: from GPT to Chinchilla

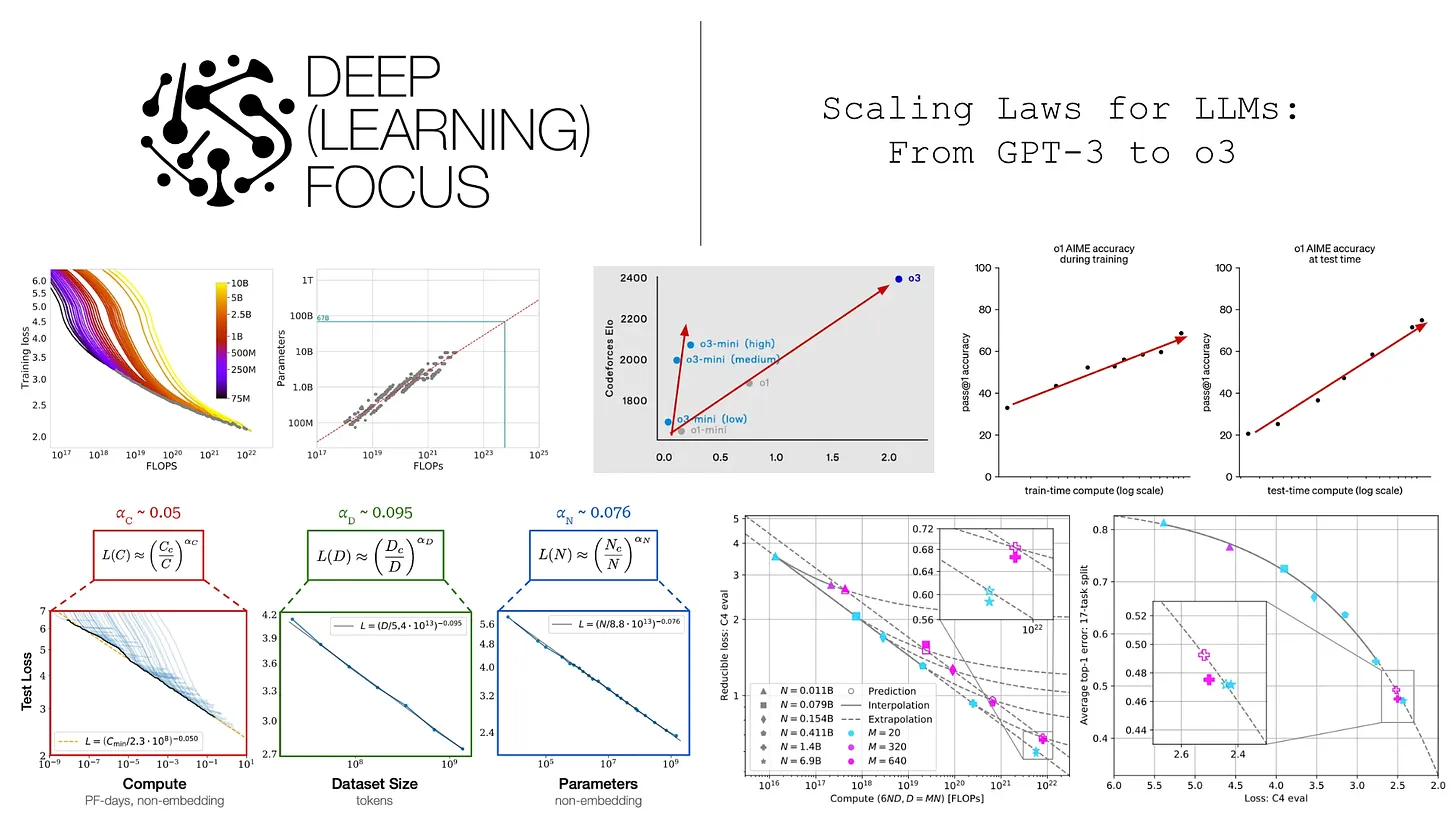

- The authors open by stating that the law of scale is the central driver of the development of large language models. By introducing the basic concept of the Power Law, the authors explain how the test loss of a large language model decreases as the model parameters, dataset size, and computational effort increase.

- The authors take a look at the evolution of the GPT family of models and vividly demonstrate the application of the laws of scale in practice. From the early GPT and GPT-2, to the landmark GPT-3, to the mysterious GPT-4, OpenAI has always adhered to the strategy of "making miracles out of great efforts", and has continuously refreshed the upper limit of the capabilities of the large language models. The article introduces the key innovations, experimental settings, and performance of each model in detail, and points out that the emergence of GPT-3 marks the transition from a specialized model to a generalized base model of the large language model, which opens up a new era of AI research.

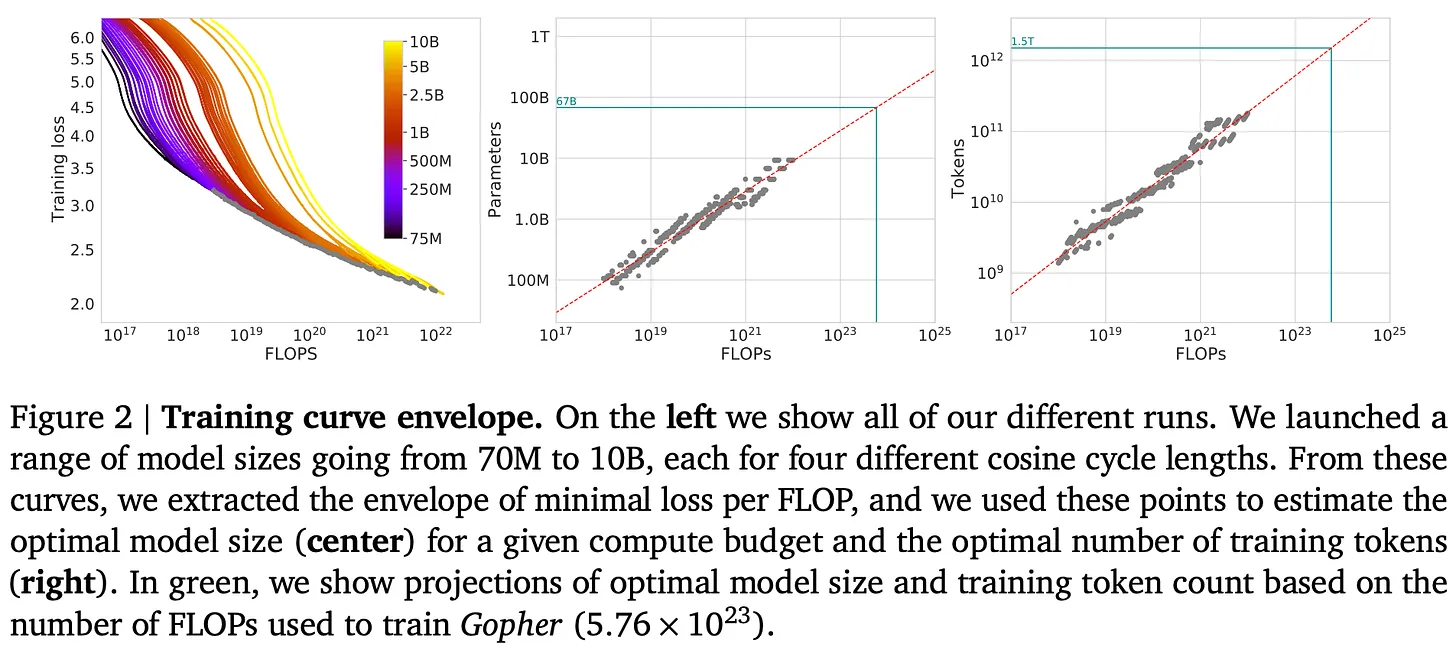

- The authors do not blindly advocate "bigger is better", but rationally analyze the "computationally optimal" size law proposed by the Chinchilla model. DeepMind's research has shown that previous models have generally been "under-trained", i.e., the dataset size has been too small relative to the model size, and the success of Chinchilla's model has demonstrated that increasing the dataset size appropriately is more effective than scaling up the model size for the same computational budget. This finding has had a profound impact on the training of subsequent large language models.

Comment: The authors provide an in-depth introduction to the Law of Scale, with both theoretical explanations and supporting examples, so that even non-specialized readers can understand its core ideas. By reviewing the evolution of the GPT series of models, the authors link the abstract law of scale to concrete model development, which enhances the readability and persuasiveness of the article. The analysis of Chinchilla's model demonstrates the author's discursive spirit, not blindly advocating scale, but guiding readers to think about how to utilize computing resources more effectively.

2. The "demise" of the law of size: questions and reflections

- The second part of the article focuses on the recent questioning of the law of scale in AI. The authors cite multiple media reports that suggest the industry is beginning to wonder if the law of scale has hit its ceiling as the rate of model improvement slows. At the same time, the authors cite industry insiders such as Dario Amodei and Sam Altman who argue the opposite, suggesting that scale is still an important force driving AI progress.

- The authors note that the "slowdown" in the law of scale was to some extent expected. The law of scale itself predicts that as scale increases, the difficulty of improving performance increases exponentially. In addition, the authors emphasize the importance of defining "performance". A decrease in test loss does not necessarily equate to an increase in the capabilities of the Big Language Model, and the industry's expectations of the Big Language Model vary widely.

- The "data bottleneck" is one of the issues the authors focus on. The Chinchilla model, as well as subsequent research, emphasizes the importance of data size, but the limited availability of high-quality data on the Internet may be a bottleneck to the further development of biglanguage models.

Comment: This section demonstrates the author's critical thinking and keen insight into the industry. Instead of avoiding the controversy, the authors objectively present the views of various parties and analyze the possible reasons for the "slowdown in scale" from a technical point of view. The author's emphasis on the "data bottleneck" is particularly important, which is not only a real challenge for the development of large language models, but also a direction for future research.

3. The future of AI research: beyond pre-training

- The final part of the article looks at future directions in AI research, focusing on large language models system/agent and inference models.

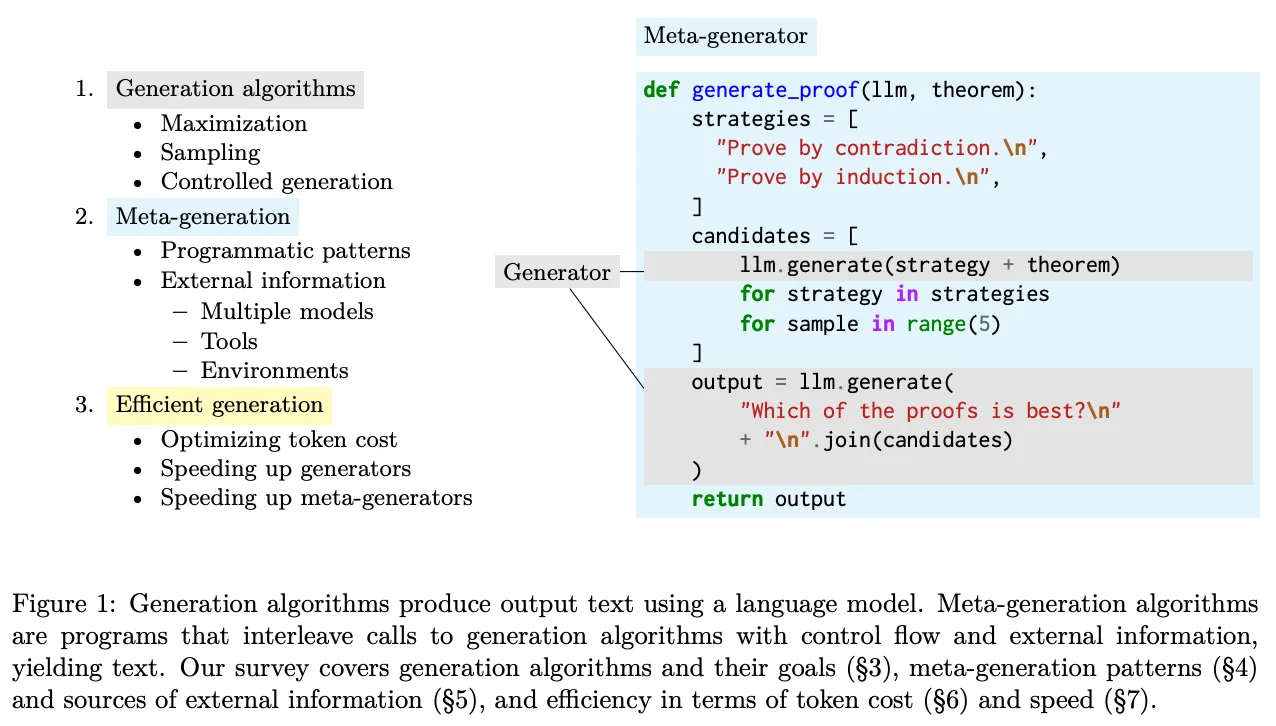

- The authors argue that even if the growth in the size of pre-trained models hits a bottleneck, we can still improve the capabilities of AI by building complex systems of large language models. The article describes the two main strategies of task decomposition and linking, and uses a book summary as an example of how to break down a complex task into sub-tasks that a large language model excels at handling.

- The authors also discuss the prospects for the application of big language modeling in product development, pointing out that building truly useful big language modeling products is an important direction for current AI research. In particular, the article emphasizes the notion of agent, which extends the application scenarios of large language models by giving them the ability to use tools. However, the authors also point out the robustness challenges of building complex large language modeling systems and present research directions to improve system reliability by improving meta-generation algorithms.

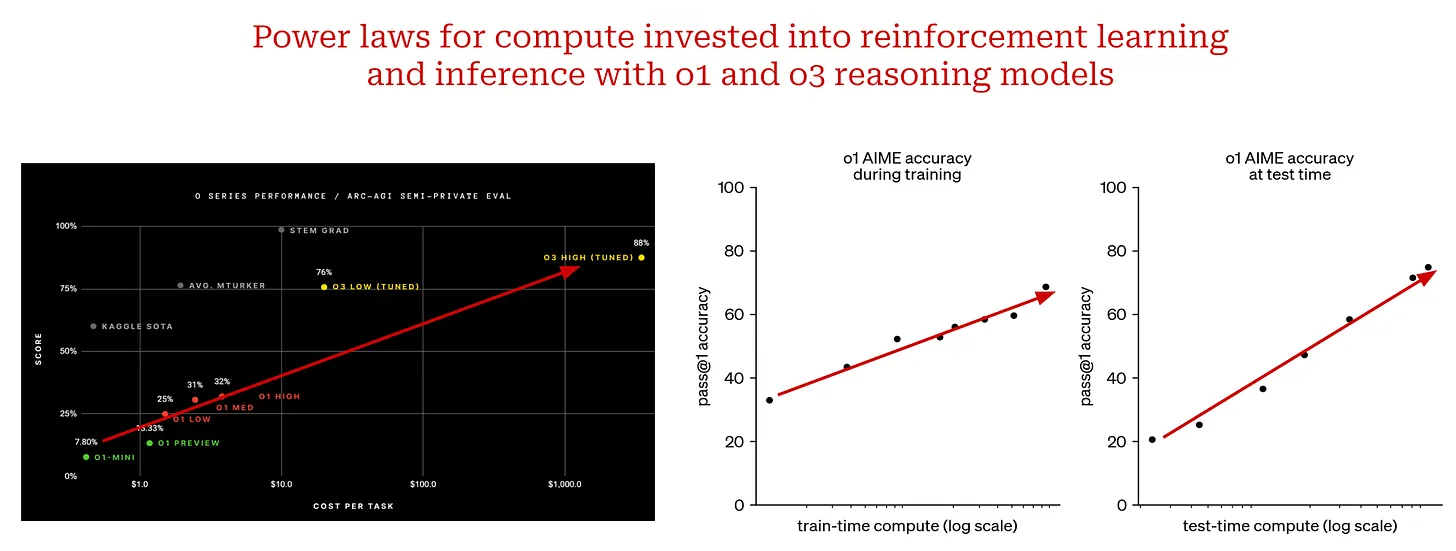

- In terms of inference models, the authors present OpenAI's o1 and o3 models, which have achieved impressive results on complex inference tasks. o3, in particular, outperformed human levels on several challenging benchmarks and even broke Terence Tao's predictions for some problems. The authors point out that the success of the o1 and o3 models shows that in addition to scaling up pre-training, the inference of models can also be significantly improved by increasing the computational input at the time of inference, which is a new paradigm for scaling.

Comment: This section is very forward-looking, and the authors' introduction to Big Language Model systems/agents and inference models opens up new horizons for readers. The authors' emphasis on building products for big language modeling is of great relevance, not only for academic research, but also for the application of AI technology on the ground. The introduction of the o1 and o3 models is encouraging, as they show the great potential of AI for complex reasoning tasks and indicate new directions for future AI research.

Summarize and reflect:

Cameron R. Wolfe's article is an excellent piece of work that combines breadth and depth, not only providing a systematic overview of the development of the Law of Scale for Large Language Models, but also offering an insightful outlook on the future of AI research. The author's viewpoint is objective and rational, affirming the important role of the law of scale, but also pointing out its limitations and challenges. The logic of the article is clear, and the arguments are sufficiently well-reasoned so that even readers who are not familiar with the AI field can benefit from it.

Notable highlights:

- An in-depth explanation: The author has a knack for explaining complex concepts in easy-to-understand language, such as the introduction of power laws, laws of scale, and task decomposition.

- Rich example support: The article lists a large number of models and experimental results, such as the GPT series, Chinchilla, Gopher, o1, o3, etc., to make abstract theories tangible and relatable.

- A comprehensive literature review: The article cites a large number of references covering classic papers and recent research results in the field of large language modeling, providing readers with resources for in-depth study.

- Open-minded thinking: The authors don't give a final answer about the future of the law of scale, but rather lead the reader to independent thinking, such as "What do we scale next?" This question will inspire more researchers to explore the frontiers of AI.

This is an article that deserves to be read carefully by all those who follow the development of AI. It not only summarizes the past, but also enlightens the future. The value of the article lies not only in the content itself, but also in its spirit of stimulating thinking and leading innovation. I believe that in future AI research, we will see more breakthroughs like o3, and ideas at scale will continue to drive the progress of AI technology in new forms.

Title:Laws of Scale for Large Language Models: From GPT-3 to o3.

Original text:https://cameronrwolfe.substack.com/p/llm-scaling-laws

Learn about the current state of big language modeling at scale and the future of AI research...

(Sources [1, 7, 10, 21])

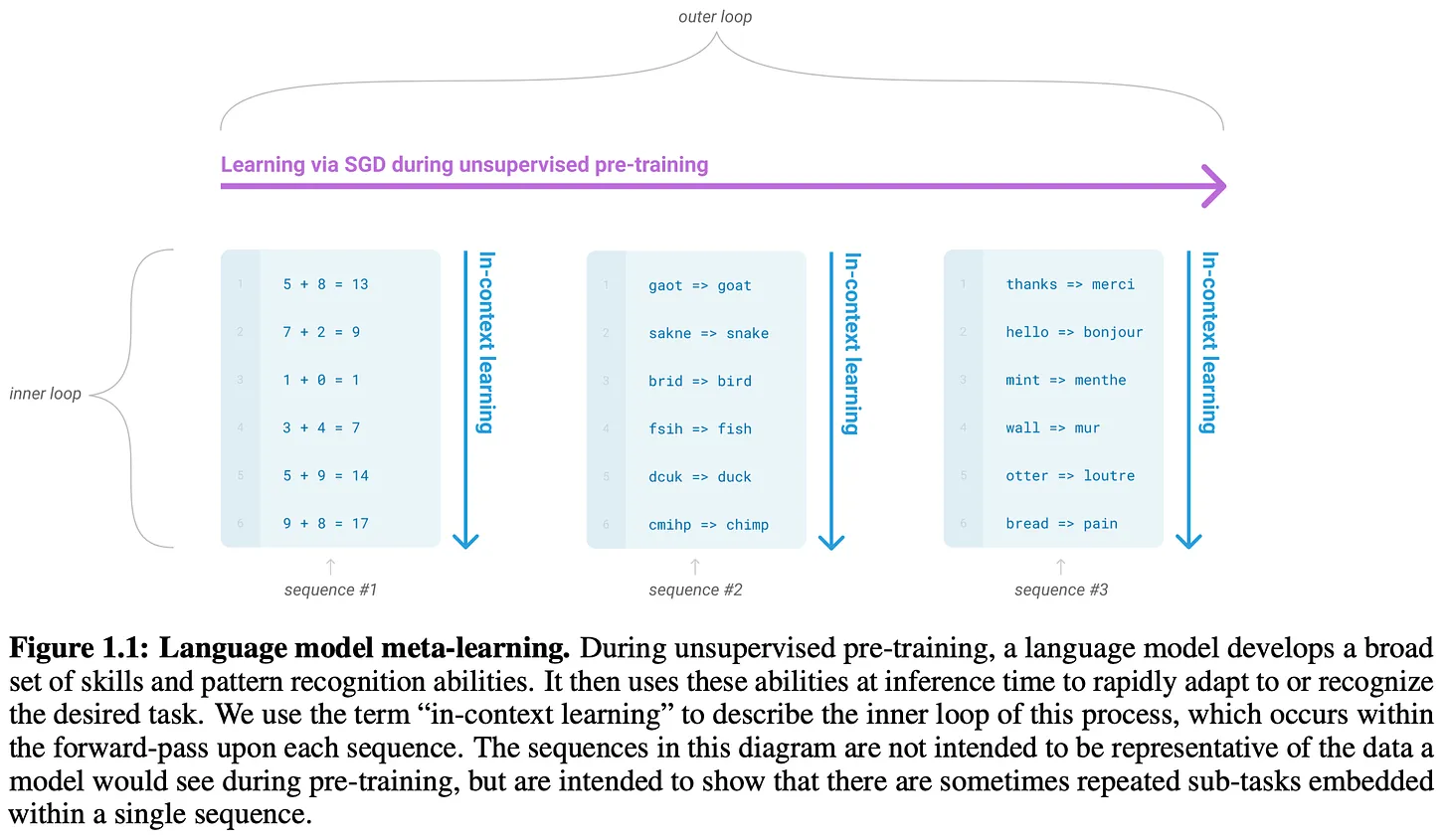

Most of the recent advances in AI research -In particular, Large Language Modeling (LLM)- - It's all driven by scale. If we train larger models with more data, we get better results. This relationship can be defined more rigorously by the law of scale, which is an equation that describes how the test loss of a large language model decreases as we increase some relevant quantity (e.g., the amount of training computation). The law of size helps us predict the results of larger, more expensive training runs, thus giving us the necessary confidence to continue investing in scaling.

"If you have a large dataset and you train a very large neural network, success is guaranteed!" - Ilya Sutskever

For years, the law of scale has been a predictable North Star for AI research. In fact, the success of early frontier labs like OpenAI has even been attributed to their adherence to the law of scale. However, the persistence of scaling has recently been questioned by1 reports that top research labs are working to create the next generation of better models of large languages. These claims may make us skeptical:Will there be a bottleneck at scale? If so, are there other ways forward?

This overview will answer these questions from the ground up, starting with an in-depth explanation of the Big Language Model Laws of Scale and the surrounding research. The concept of the Law of Scale is simple, but there are all sorts of public misconceptions around scaling - theThe science behind this study is actually very specificThe Law of Scale. Using this detailed understanding of scaling, we will discuss the latest trends in big language modeling research, as well as the factors that have caused the law of scale to "stall". Finally, we will use this information to provide a clearer picture of the future of AI research, focusing on a few key ideas -Includes scale--These ideas can continue to drive progress.

Basic Scaling Concepts for Large Language Models

To understand the scaling state of a large language model, we first need to establish a general understanding of the laws of scale. We will build this understanding from scratch, starting with the concept of power laws. We will then explore how power laws have been applied in the study of large language models to derive the scale laws we use today.

What is a power law?

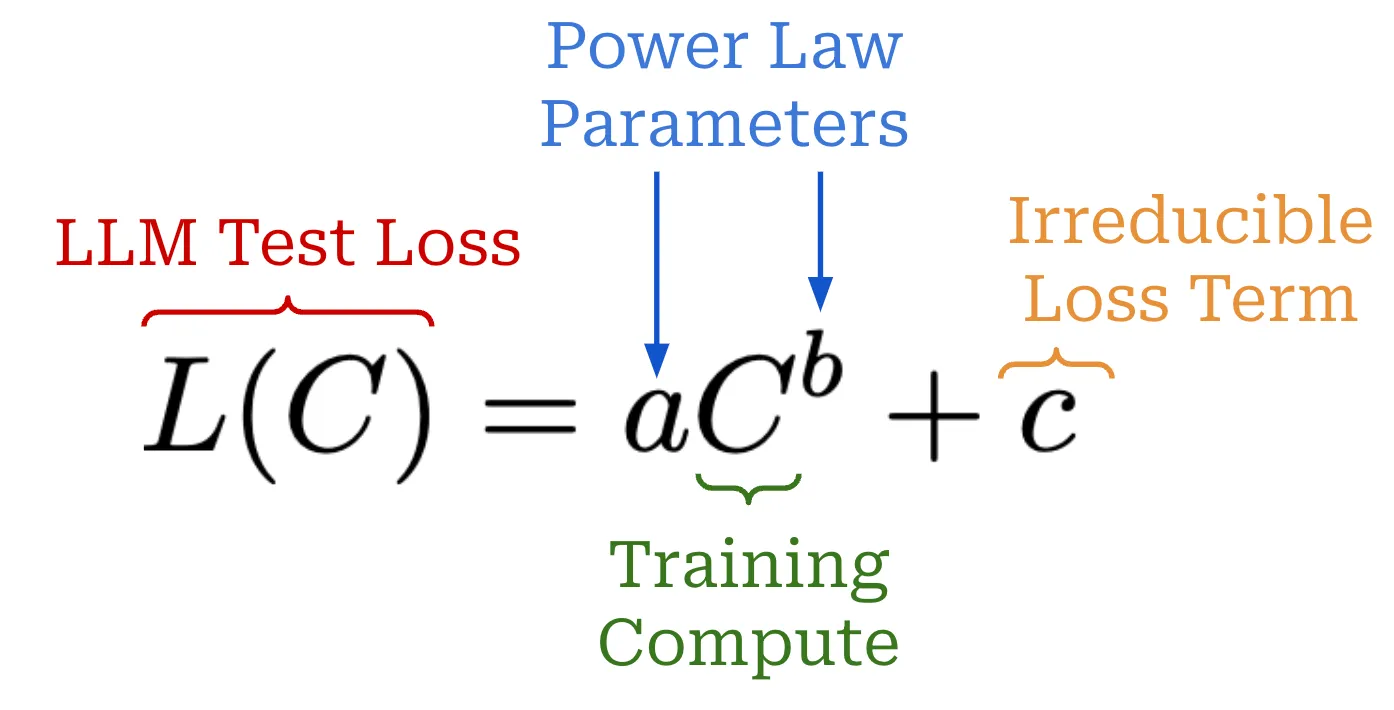

Power laws are a fundamental concept in the scaling of large language models. In short, a power law simply describes the relationship between two quantities. In the case of a large language model, the first quantity is the test loss of the large language model-or other relevant performance metrics (e.g., downstream task accuracy [7])- - The other is some setting that we are trying to scale, such as the number of model parameters. For example, when investigating the scaling properties of a large language model, we might see the following statement.

"If there is enough training data, the scaling of the validation loss should be an approximate smooth power-law function of the model size." - Source [4]

Such a statement tells us that there is a measurable relationship between the test loss of the model and the total number of model parameters. A change in one of these quantities will result in an invariant change in the relative scale of the other. In other words, we know from this relationship that an increase in the total number of model parameters--theAssuming other conditions are met (e.g., sufficient training data is available)--will cause test losses to be reduced by a predictable factor.

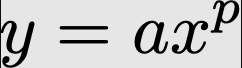

Power law formula. The basic power law is expressed through the following equation.

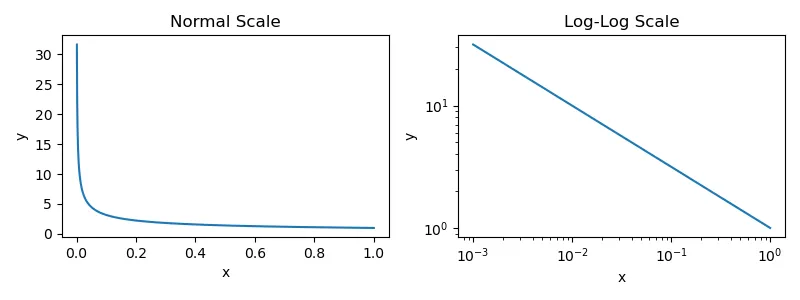

The two quantities studied here are x cap (a poem) ybut (not) a cap (a poem) p is a constant that describes the relationship between these quantities. If we plot this power law function2 , we get the following graph. We provide plots on both normal and logarithmic scales because most papers that study the scaling of large language models use logarithmic scales.

Graph of the fundamental power law between x and y

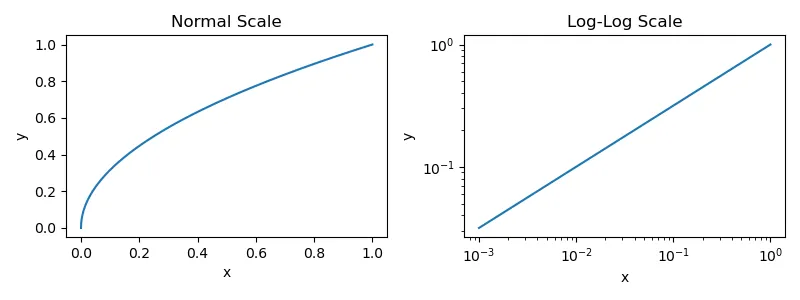

However, the diagrams provided for scaling large language models do not look like the diagrams shown above - theThey're usually upside down.See the example below.

(Source [1])

The equation for the inverse power law is almost the same as the equation for the standard power law, but we are interested in the p Use negative exponents. Making the exponent of a power law negative will turn the graph upside down; see the example below.

Graph of the inverse power law between x and y

When plotted using the logarithmic scale, this inverse power law produces the linear relationship that characterizes the size laws of most large language models. Almost every paper covered in this overview will generate such plots in order to investigate how scaling a variety of different factors (e.g., size, computation, data, etc.) affects the performance of a large language model. Now, let's take a more practical look at power laws by learning about one of the first papers to study them in the context of large language model scaling [1].

Scale laws for neurolinguistic modeling [1]

In the early days of language modeling, we did not understand the impact of scale on performance. Language modeling was a promising area of research, but the current generation of models at the time (e.g., the original GPT) had limited power. We had not yet discovered the power of larger models, and the path to creating better language models was not clear.Does the shape of the model (i.e., number of layers and size) matter? Does making the model bigger help it perform better? How much data is needed to train these larger models?

"As model size, dataset size, and the amount of computation used for training increase, the loss scales as a power law, with some of these trends spanning more than seven orders of magnitude." - Source [1]

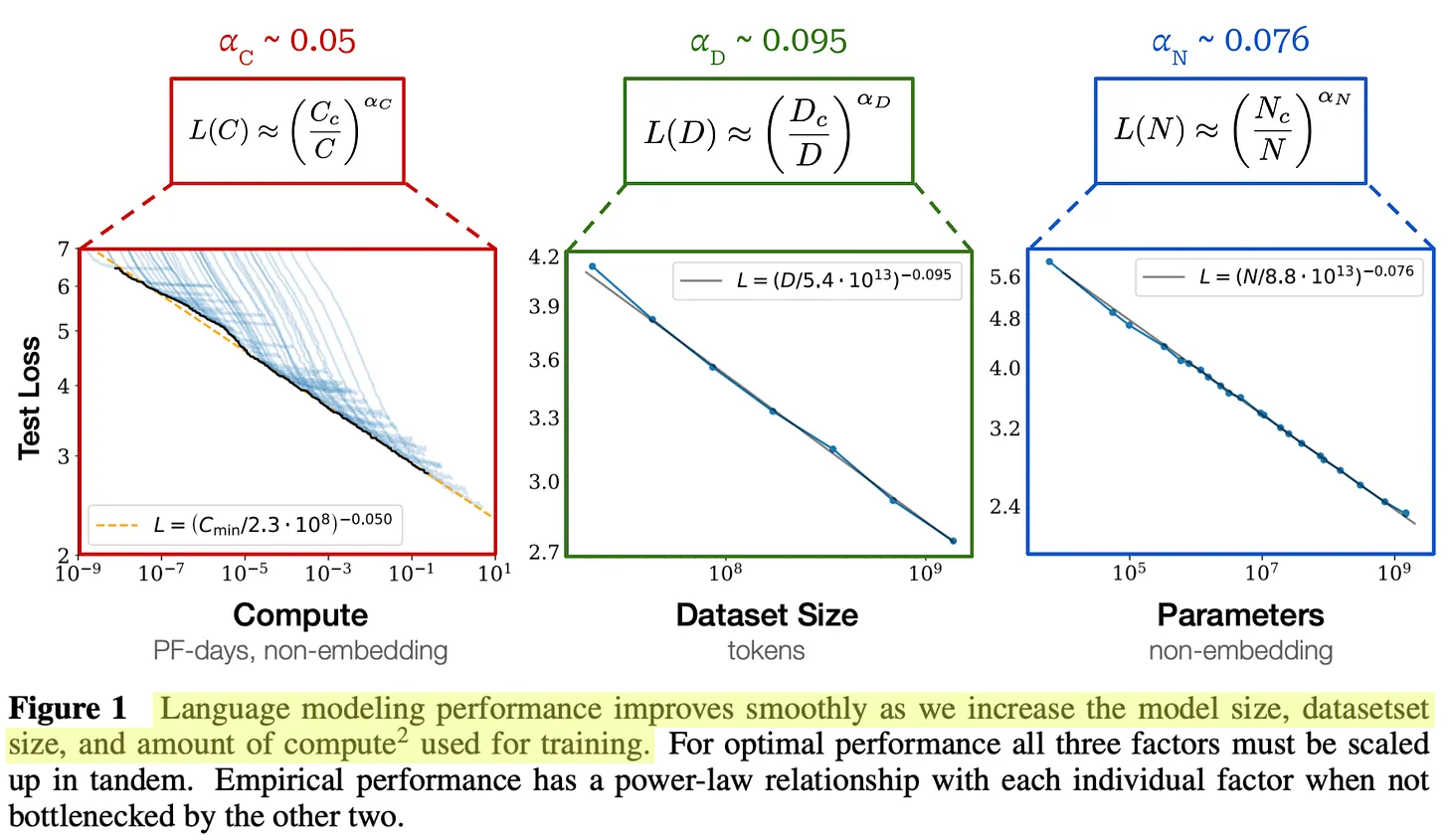

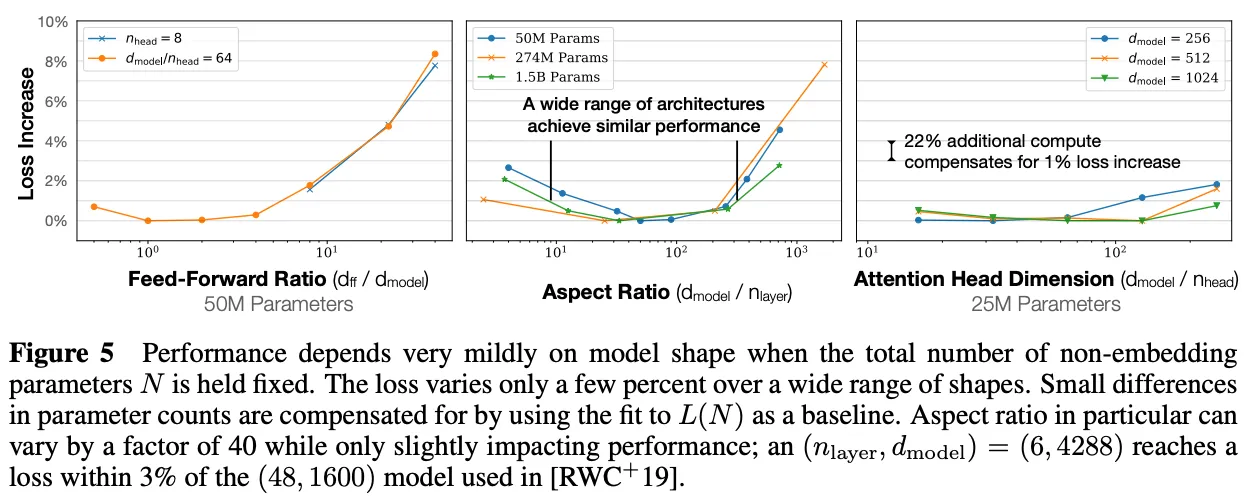

In [1], the authors aimed at analyzing several factors by-Examples include model size, model shape, dataset size, training computation and batch size- - on model performance to answer these questions. From this analysis, we learn that the performance of the large language model improves smoothly as we add more:

- The number of model parameters.

- The size of the dataset.

- The amount of computation used for training.

More specifically.When performance is not bottlenecked by the other two factors, a power-law relationship is observed between each factor and the test loss of the large language model.

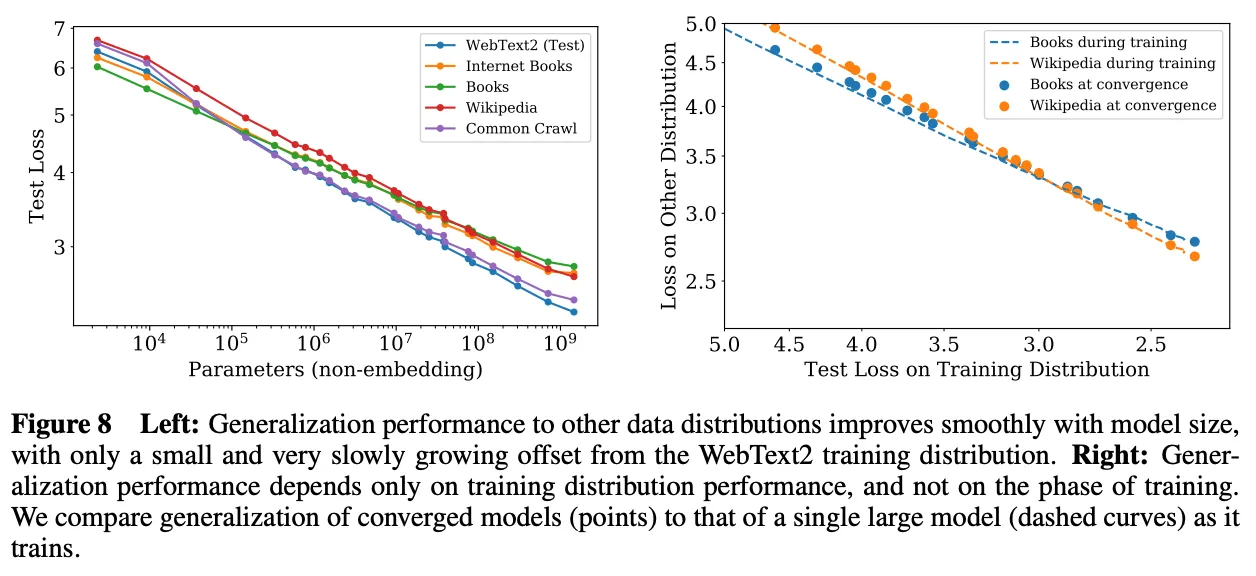

Experimental Setup.To fit their power laws, the authors pre-trained large language models on a subset of the WebText2 corpus, with a size of up to 1.5 billion parameters and containing from 22 to 23 billion tokens. all models were modeled using 1,024 Token of fixed context length and standard next Token prediction (cross-entropy) loss for training. The same loss is measured on a reserved test set and used as our main performance metric.This setting matches the standard pre-training settings for most large language modelsThe

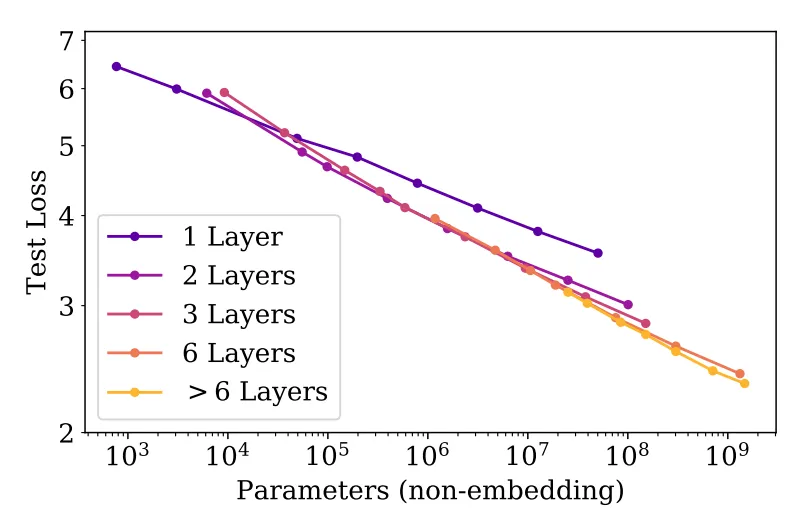

(Source [1])

Large Language Models Power Laws of Scaling.The performance of the large language model trained in [1] -In terms of their test losses on WebText2- - is shown to improve steadily with more parameters, data, and computation3 . These trends span eight orders of magnitude in computation, six orders of magnitude in model size, and two orders of magnitude in dataset size. The exact power law relationships and the equations fitted to each relationship are provided in the figure above. Each of the equations here is very similar to the inverse power law equations we have seen before. However, we set a = 1 and add an additional multiplication constant of 4 inside the parentheses.

(Source [1])

These power laws only apply when training is not bottlenecked by other factors. Thus, all three components - theModel size, data and calculations-should all be scaled up at the same time for optimal performance. If we expand any of these components in isolation, we will reach a point of diminishing returns.

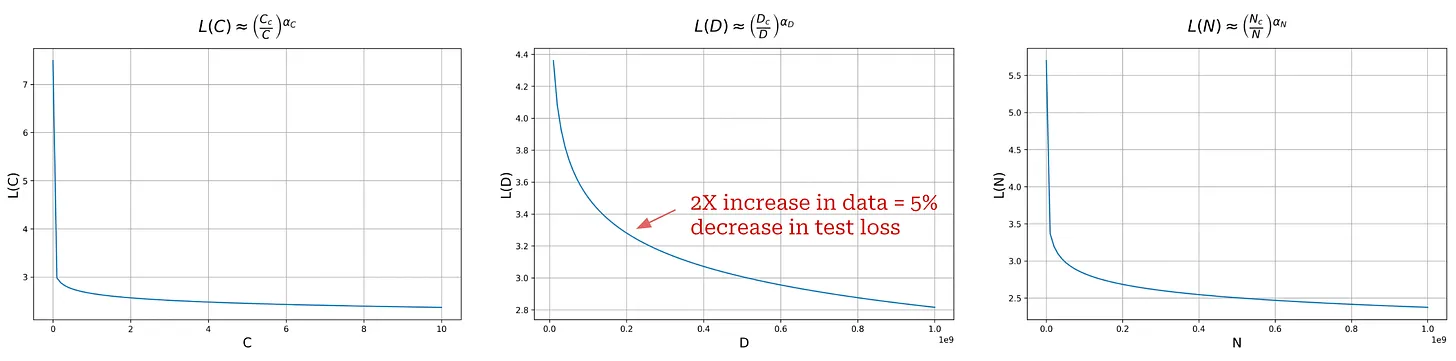

What does the power law tell us?Although the power law plots provided in [1] look promising, we should note that these plots were generated using a logarithmic scale. If we generate normal plots (i.e., without logarithmic scaling), we get the following figure, in which we see that the shape of the power law resembles exponential decay.

Power law graphs without logarithmic scales

Given much of the online discourse around scaling and AGI, such a finding seems counterintuitive. In many cases, the intuition we have been fed seems to be that the quality of large language models increases exponentially with the logarithmic growth of computation, but this is not the case. In fact, theImproving the quality of large language models becomes increasingly difficult as the scale increasesThe

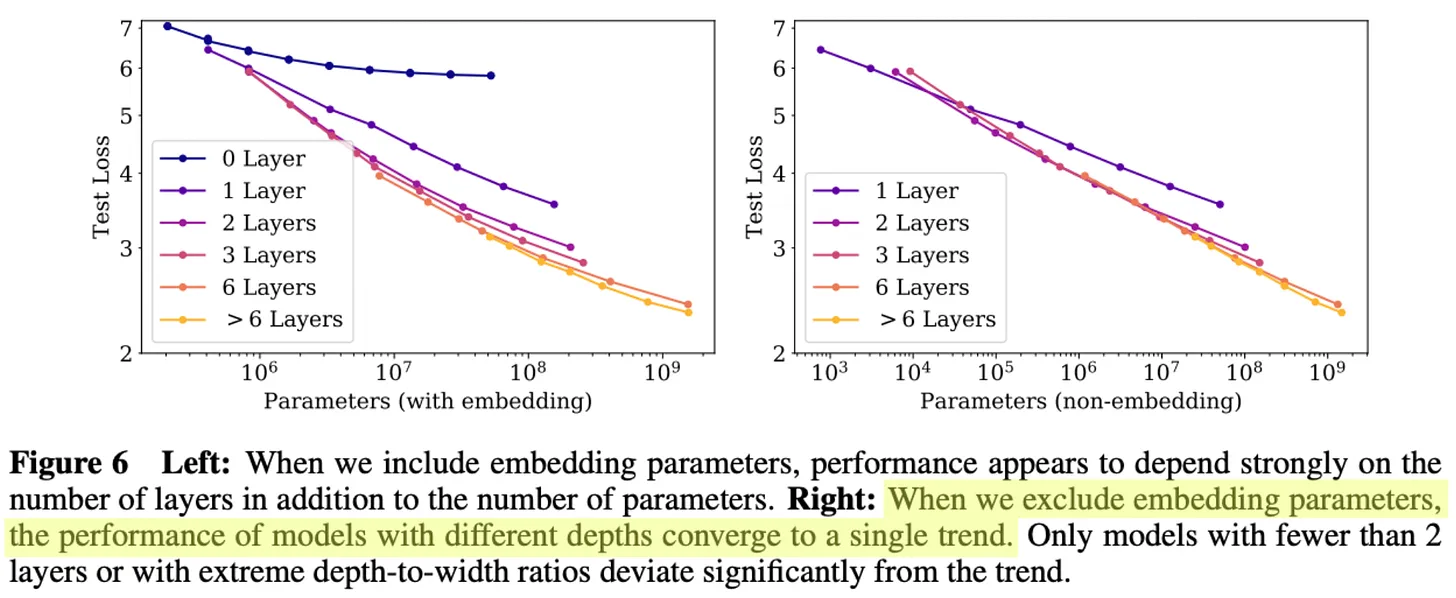

(Source [1])

Other useful findings.In addition to the power law observed in [1], we have seen that other factors considered, such as model shape or architectural settings, have little impact on model performance; see above. By far, scale is the largest contributing factor in creating better models for large languages - theMore data, computation and model parameters lead to a smooth increase in the performance of large language modelsThe

"Larger models have significantly higher sample efficiencies, so optimal computationally efficient training involves training very large models on relatively modest amounts of data and stopping significantly before convergence." - Source [1]

Interestingly, the empirical analysis in [1] shows that larger large language models tend to have higher sample efficiency, meaning that they use less data to achieve the same level of test loss compared to smaller models. For this reason, thePre-training a large language model to convergence is (arguably) sub-optimal.. Instead, we can train larger models on less data and stop the training process before convergence. This approach is optimal in terms of the amount of training computation used, but it does not take into account inference costs. In fact, we usually train smaller models on more data because smaller models are less expensive to host.

The authors also extensively analyzed the relationship between model size and the amount of data used for pre-training and found that the size of the dataset does not need to increase as quickly as the model size.Increase in model size by about 8 times requires an increase in training data volume by about 5 times to avoid overfittingThe

(Source [1])

"These results show that language modeling performance improves smoothly and predictably when we appropriately scale model size, data, and computation. We expect larger language models to perform better than current models and have higher sample efficiency." - Source [1]

Practical application of the law of size

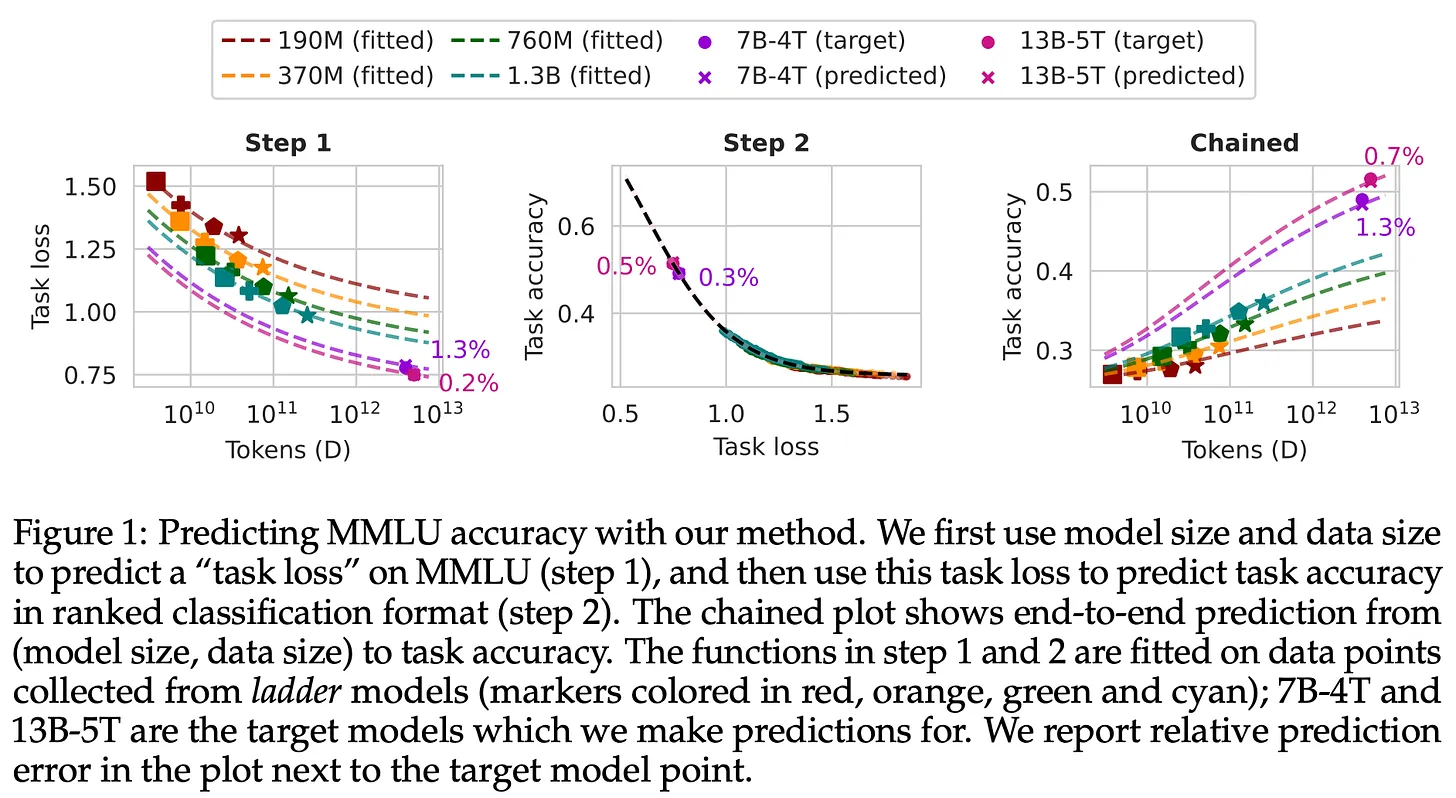

The fact that large-scale pre-training is so beneficial presents us with a small dilemma. The best results can be obtained by training large models on large amounts of data. However, these training runs are very expensive andThis means that they also take on a lot of riskWhat if we spend $10 million to train a model that fails to meet our expectations? What if we spend $10 million to train a model that fails to meet our expectations? Given the cost of pre-training, we can't perform any model-specific tuning, and we have to make sure that the models we train perform well. We need to develop a strategy to tune these models and predict their performance without spending too much money.

(Source [11])

- Train a bunch of smaller models using various training settings.

- Fit the scale law to the performance of smaller models.

- Use the law of size to infer the performance of a larger model.

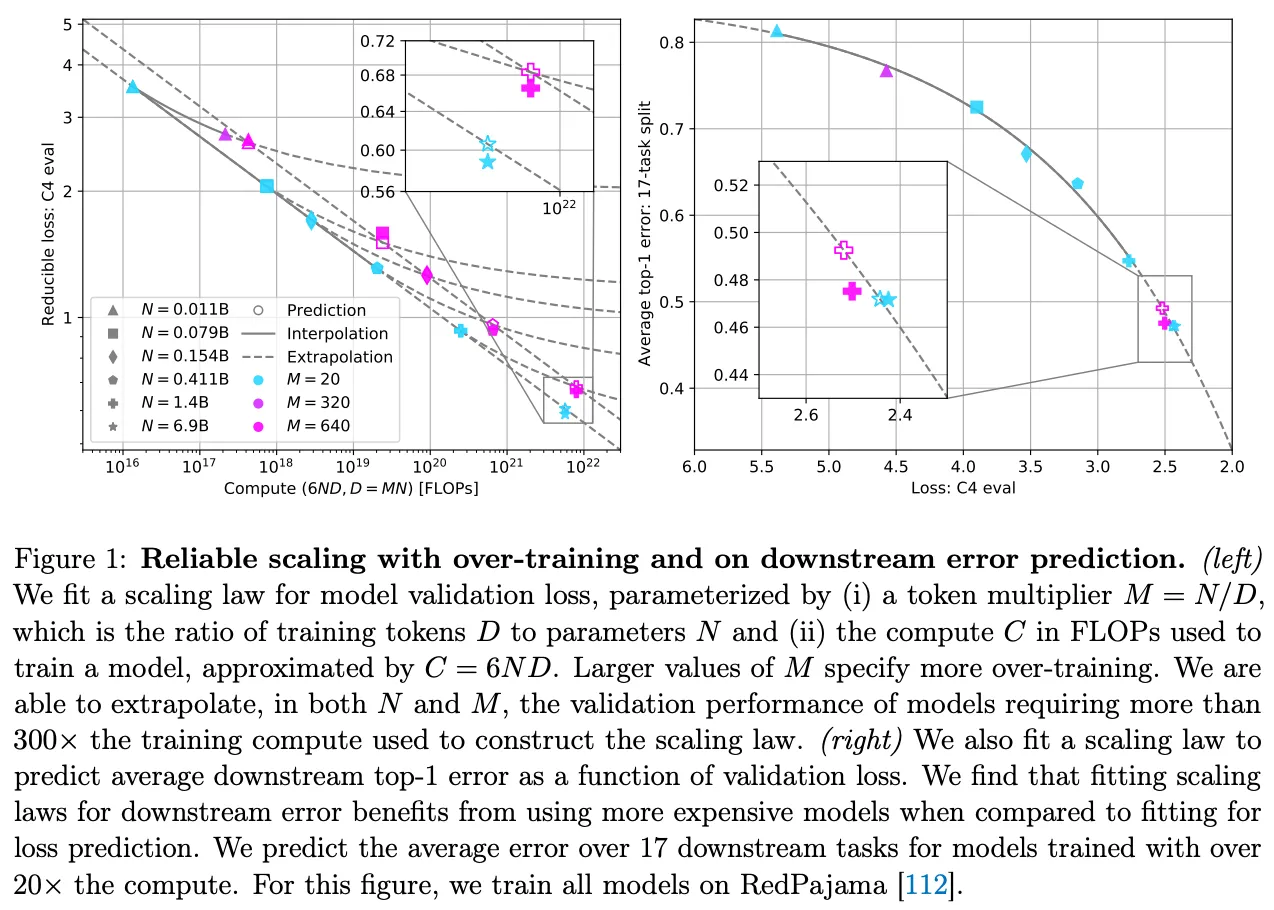

Of course, this approach has its limitations. Predicting the performance of larger models from smaller models is difficult and can be inaccurate. Models may behave differently depending on size. However, various methods have been proposed to make this more feasible, and size laws are now commonly used for this purpose. The ability to use scale laws to predict the performance of larger models gives us more confidence (and peace of mind) as researchers. In addition, the law of size provides a simple way to justify investments in AI research.

Scale up and pre-training era

"That's what's driving all the advances we're seeing today - mega neural networks trained on huge datasets." - Ilya Sutskever

The discovery of the law of size has catalyzed many recent advances in the study of large language models. To get better results, we simply need to train larger (and better!) datasets to train increasingly larger models. This strategy has been used to create several models in the GPT family, as well as most of the well-known models from teams outside of OpenAI. Here, we'll take a deeper look at the progress of this scaling research - theRecently described by Ilya Sutskever as "the age of pre-training." 5.

GPT series: GPT [2], GPT-2 [3], GPT-3 [4], and GPT-4 [5].

Large Language Models The most widely known and visible application of the Scale Laws is in the creation of OpenAI's GPT family of models. We will focus primarily on the earlier open models in the series - theUntil GPT-3-Because:

- Details of these models are being shared more openly.

- Later models have benefited from advances in post-training research in addition to expanding the pre-training process.

We will also present some known scaling results, such as models from GPT-4.

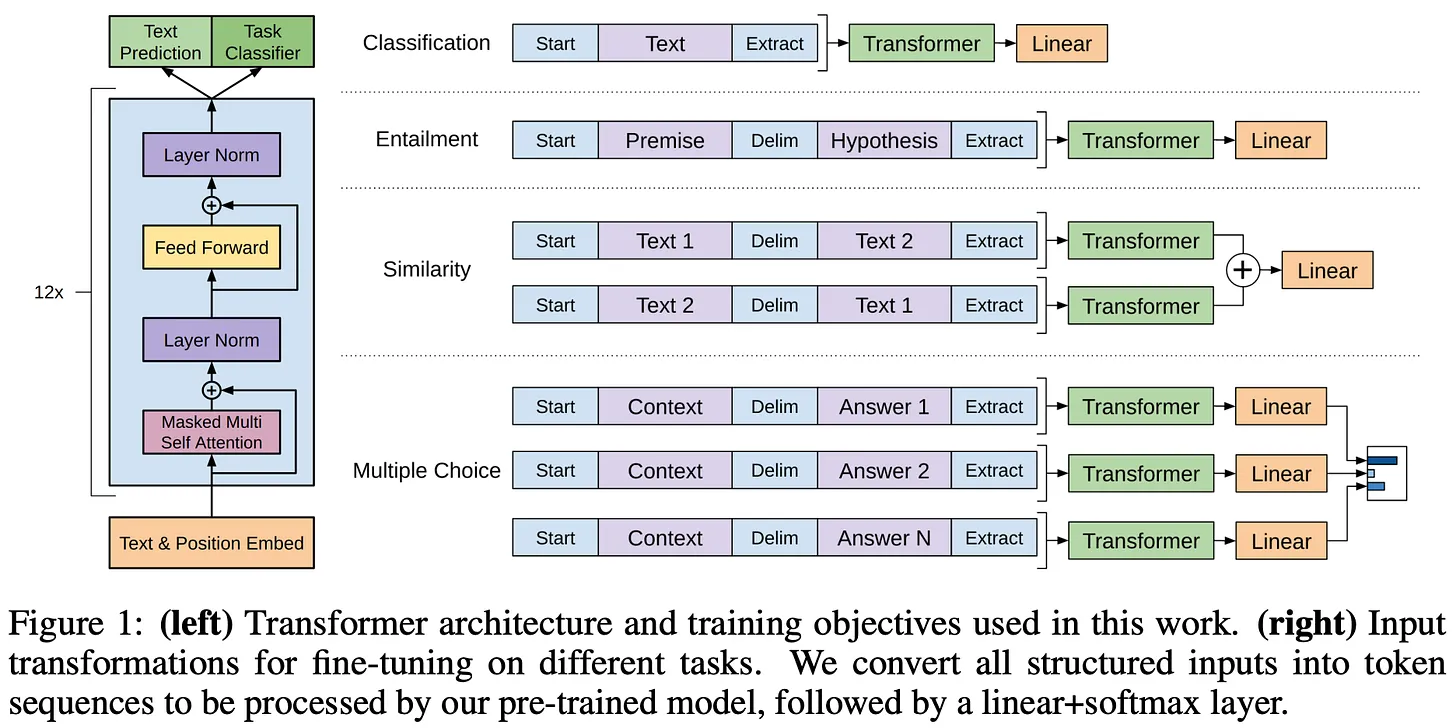

(Source [2])

- Self-supervised pretraining on flat text is very effective.

- It is important to use long, continuous spans of text for pre-training.

- When pretrained in this manner, individual models can be fine-tuned to solve a variety of different tasks with state-of-the-art accuracy6 .

Overall, GPT is not a particularly noteworthy model, but it laid some important groundwork for later work (i.e., decoder-only Transformer and self-supervised pretraining) that explored similar models on a larger scale.

(Source [3])

- These models are pretrained on WebText, which i) Much larger than BooksCorpus, and ii) Created by grabbing data from the internet.

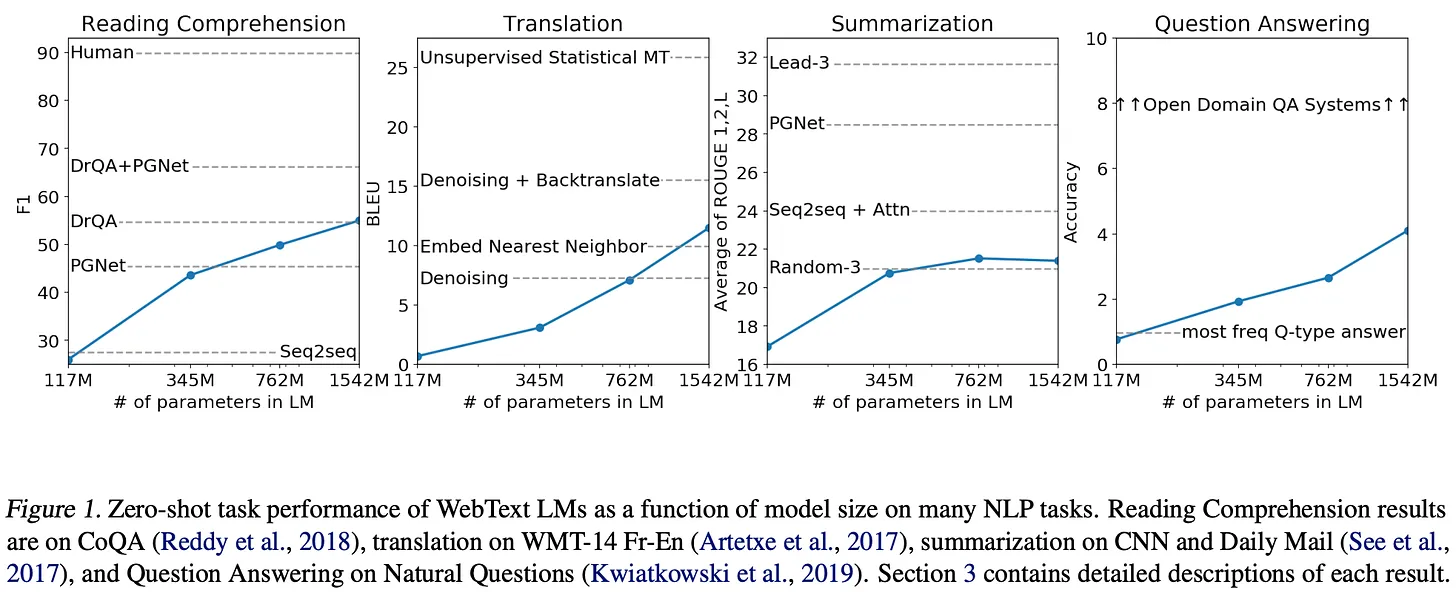

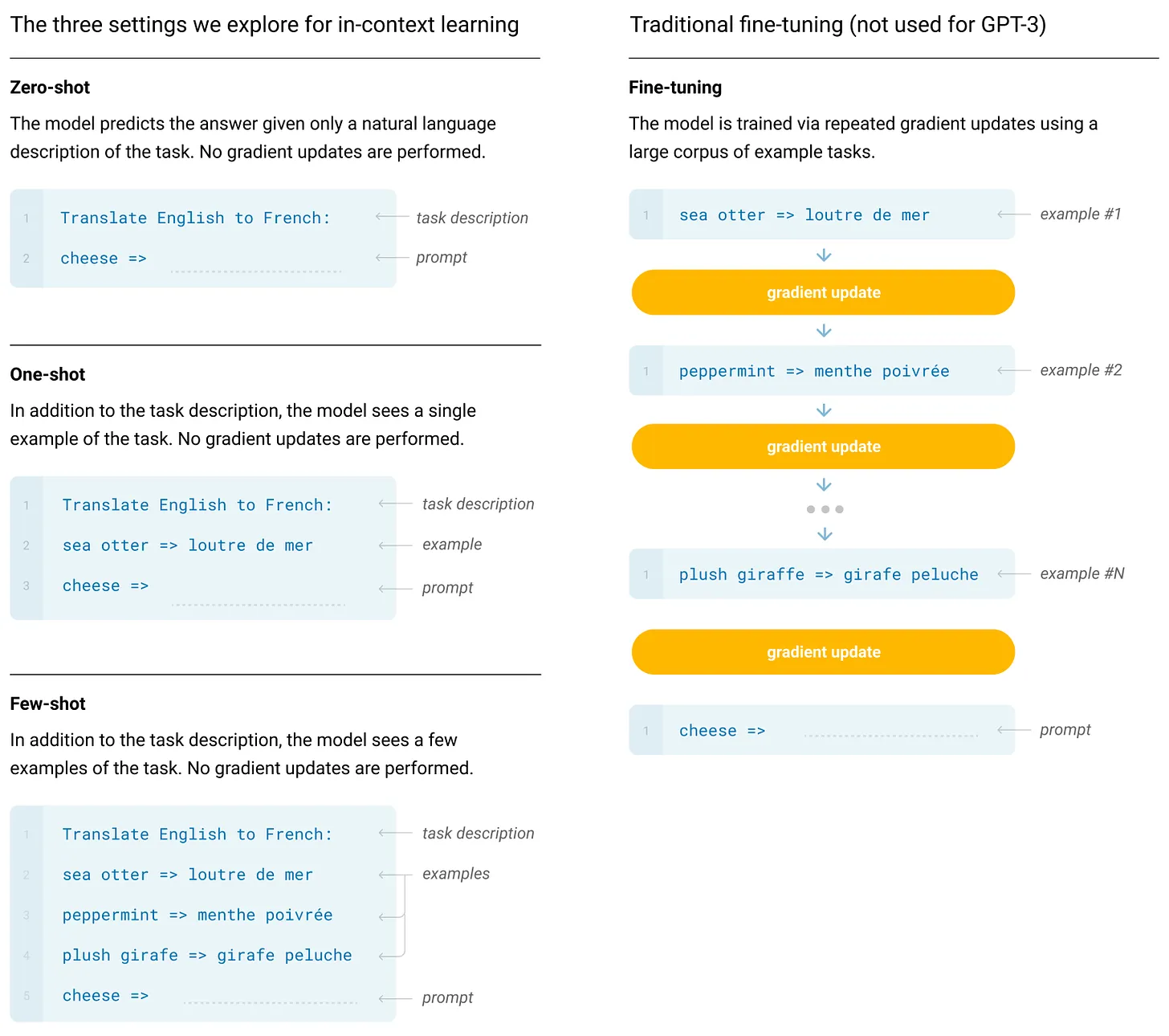

- These models are not fine-tuned for downstream tasks. Instead, we solve the task by performing zero-sample inference7 using pre-trained models.

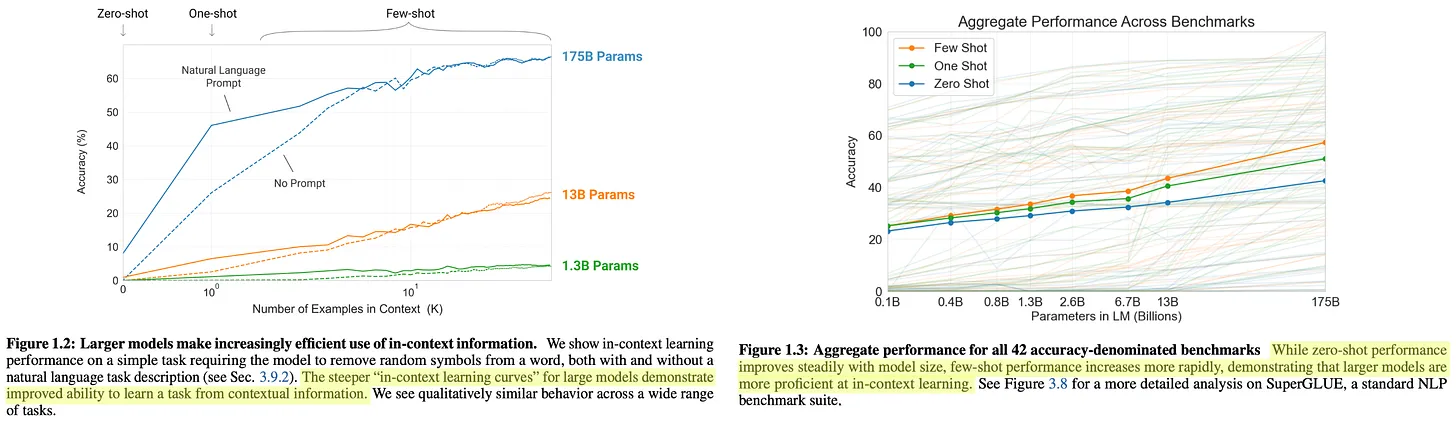

GPT-2 models do not reach state-of-the-art performance in most benchmarks8 , but their performance consistently improves with increasing model size - theExpanding the number of model parameters yields clear benefitsSee below.

(Source [3])

"Language models with sufficient capacity will begin to learn to infer and perform tasks demonstrated in natural language sequences in order to better predict them, regardless of their method of acquisition." - Source [3]

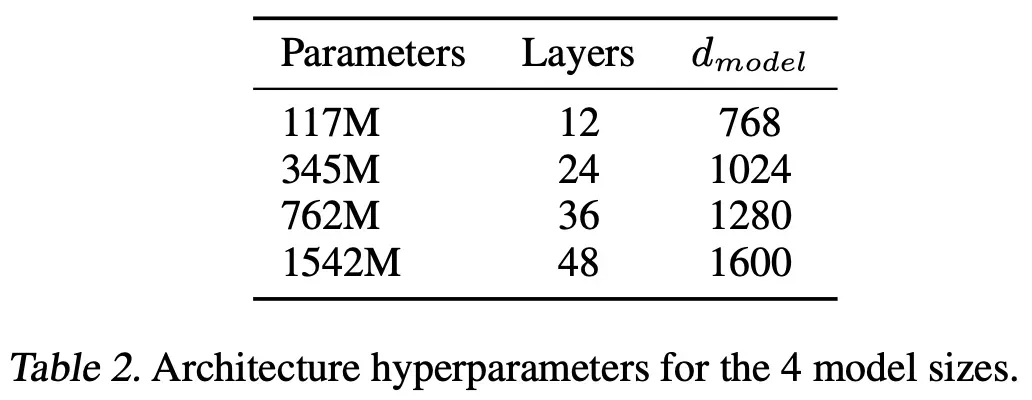

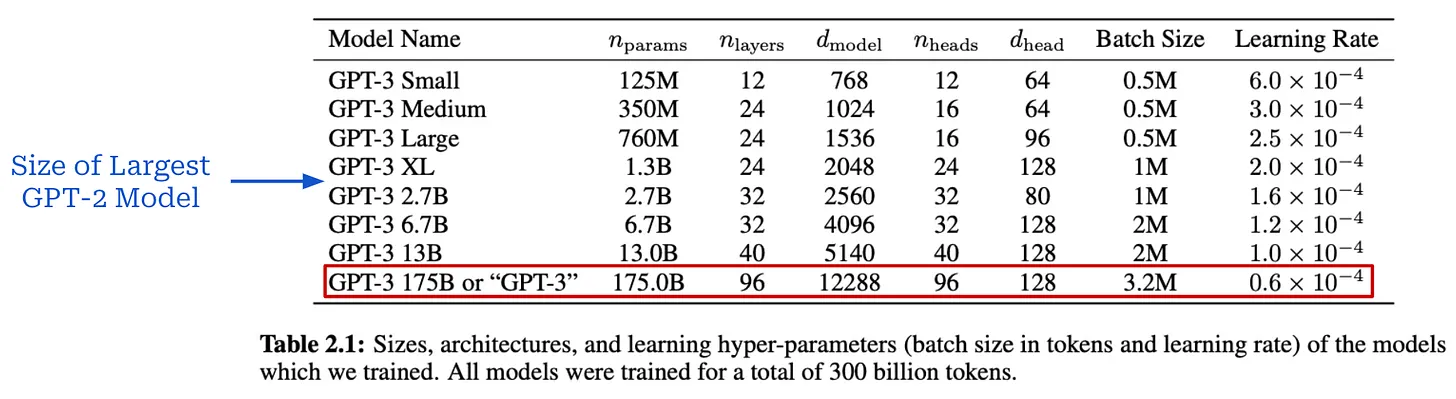

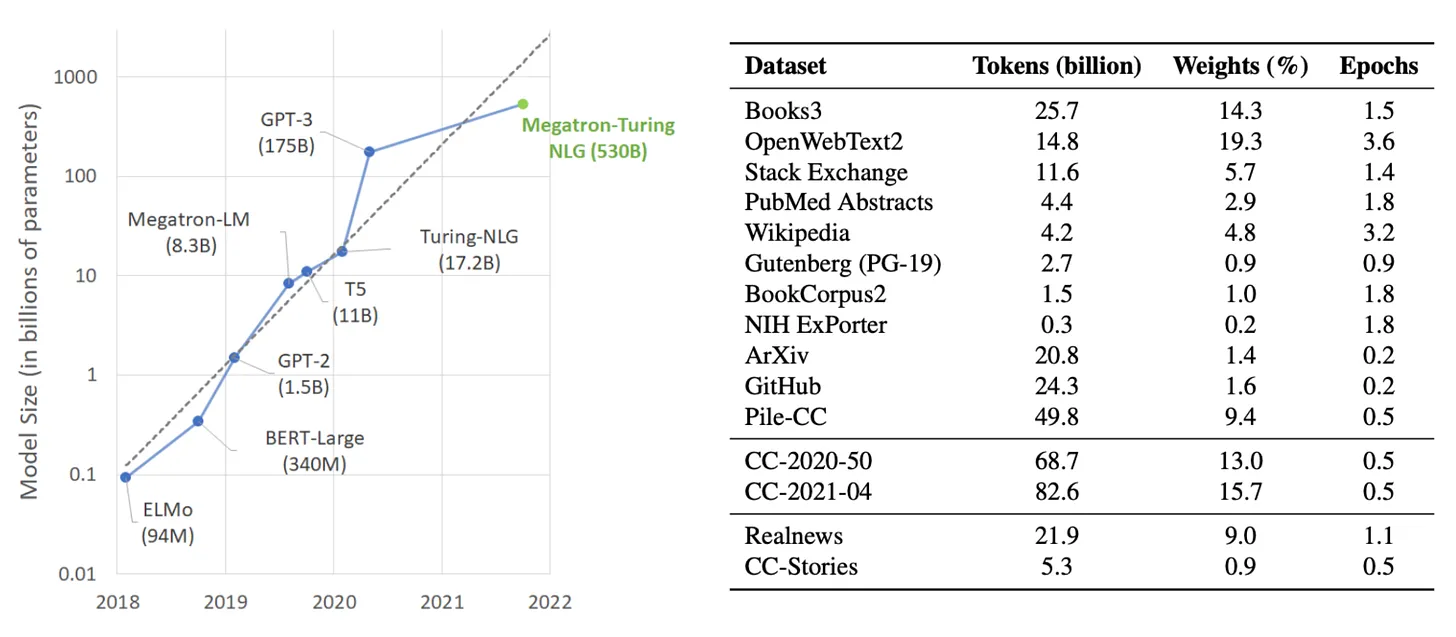

GPT-3 [4] was a watershed moment in AI research that clearly confirmed the benefits of large-scale pre-training of a large language model. With more than 175 billion parameters, this model is more than 100 times larger than the largest GPT-2 model; see below.

(Source [4])

(Source [4])

(Source [4])

(Source [4])

(Source [4])

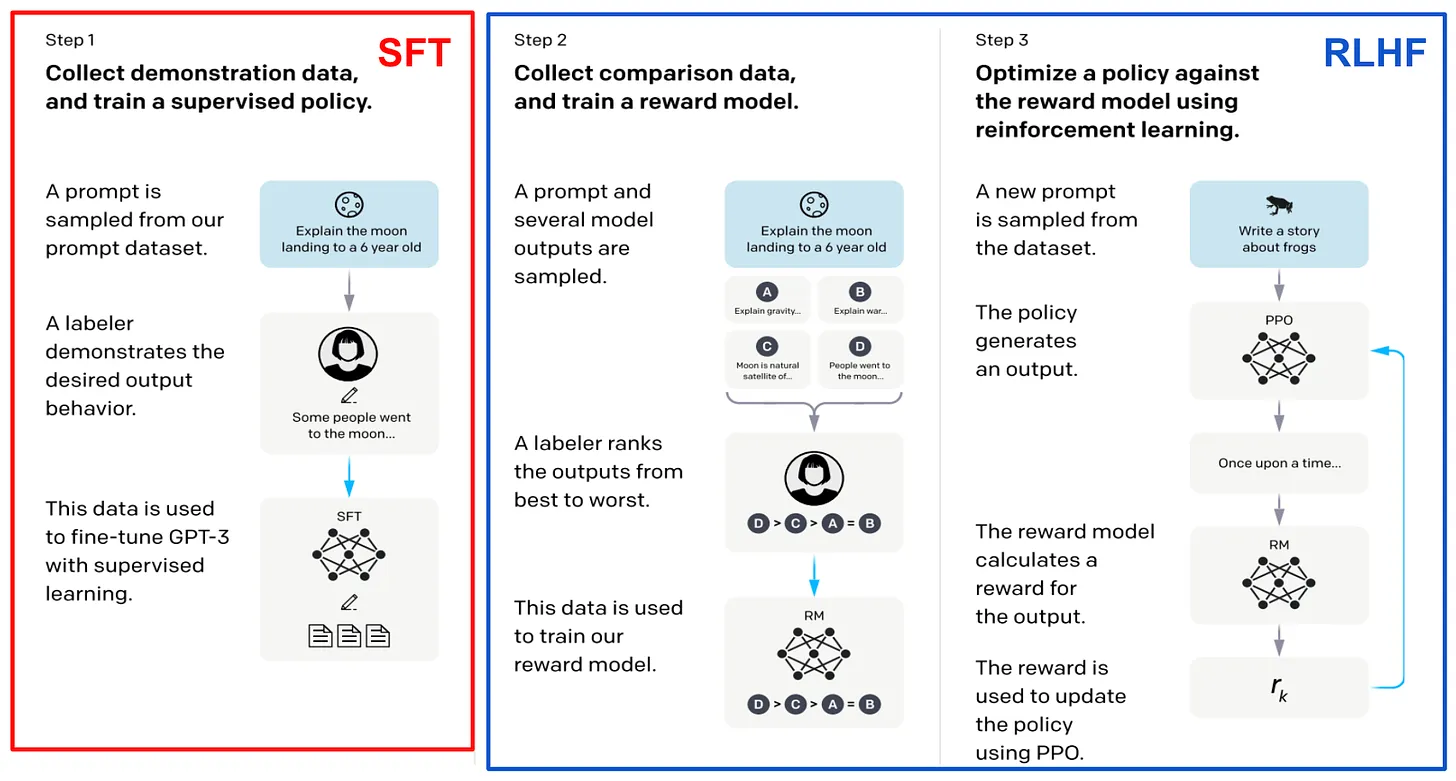

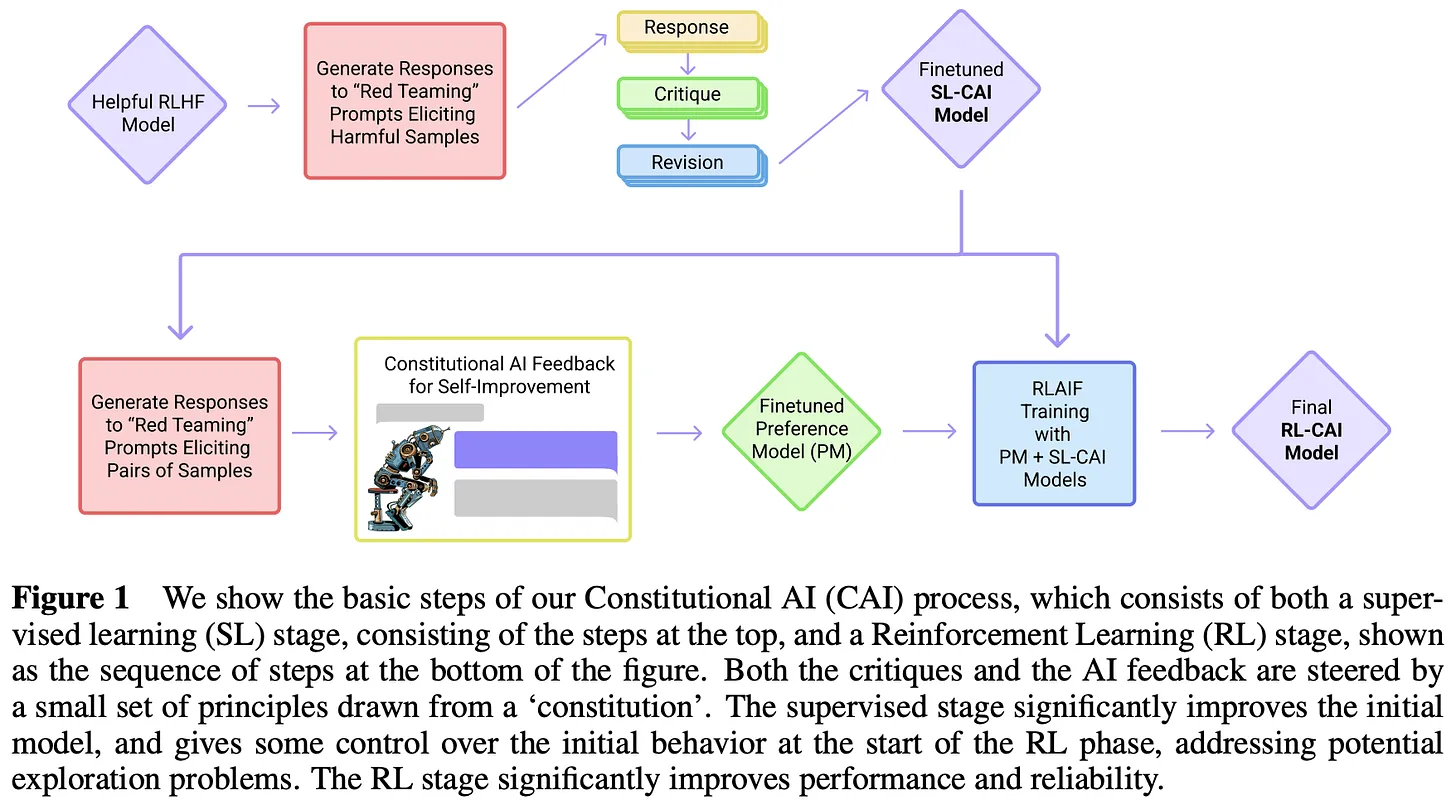

After GPT-3. The impressive performance of GPT-3 has sparked a great deal of interest in the study of large language models, focusing mainly on large-scale pre-training.The next few models released by OpenAI - theInstructGPT [8], ChatGPT, and GPT-4 [5]--The use of a combination of large-scale pre-training and new post-training techniques (i.e., supervised fine-tuning and reinforcement learning from human feedback) has dramatically improved the quality of large language models. These models are so impressive that they have even led to a surge in public interest in AI research.

"GPT-4 is a Transformer-based model that is pre-trained to predict the next Token in a document. the post-training alignment process improves the factoredness metric and adherence to the desired behavior." - Source [5]

At this point, OpenAI began releasing fewer details about their research. Instead, new models were only released through their API, which prevented the public from understanding how these models were created. Fortunately, some useful information can be gleaned from the materials OpenAI does release. For example, InstructGPT [8]-ChatGPT predecessors--There is a related paper that documents the model's post-training strategy in detail; see below. Given that this paper also states that GPT-3 is the model underlying InstructGPT, it is reasonable to infer that the model's improvement in performance is largely independent of the extended pre-training process.

(Source [8])

- GPT-4 is based on Transformer.

- The model is pretrained using the next Token prediction.

- Use of public and licensed third-party data.

- The model is fine-tuned using reinforcement learning from human feedback.

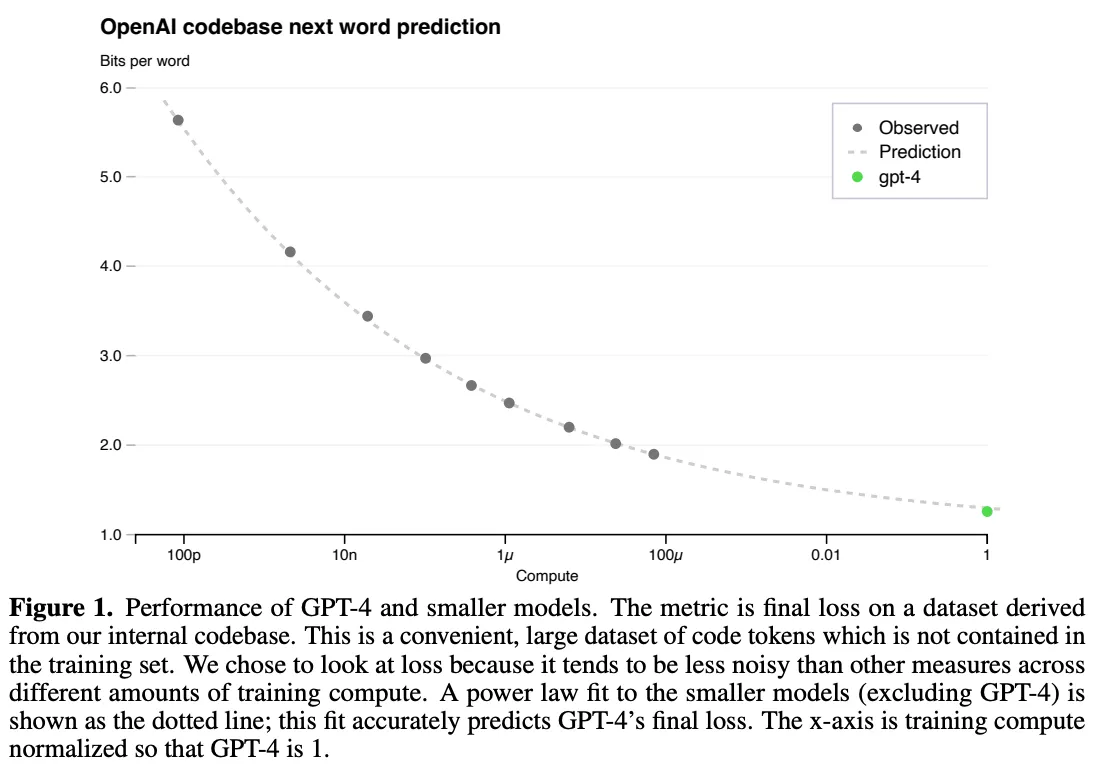

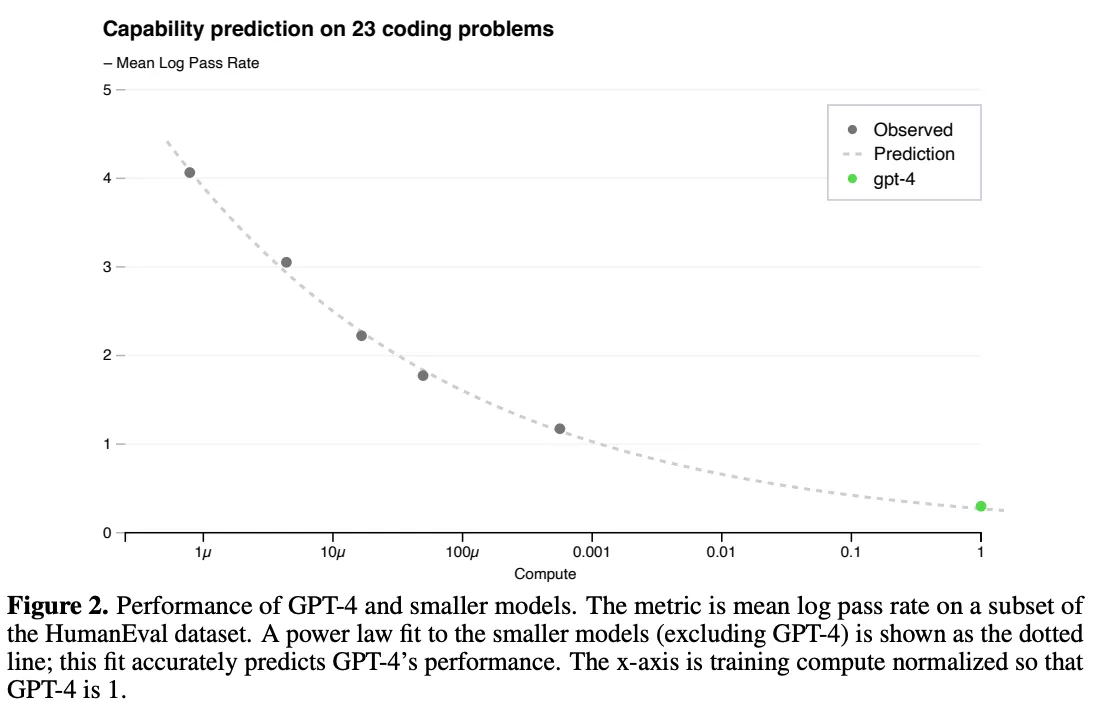

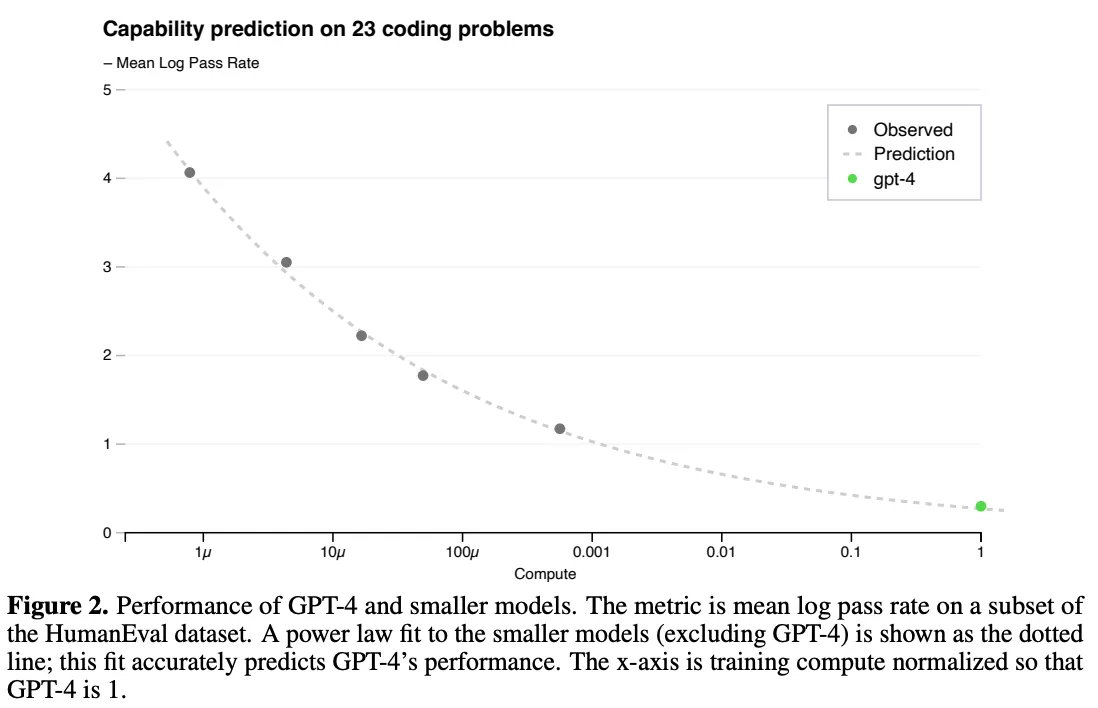

Nonetheless, the importance of scaling is very apparent in this technical report. The authors note that a key challenge in this work is to develop a scalable training architecture that performs predictably at different scales, allowing for the extrapolation of results from smaller runs and thus providing confidence for larger (and much more expensive!) training exercises that provide confidence.

"The final loss of a properly trained large language model ...... can be approximated by a power law of the amount of computation used to train the model." - Source [5]

Massive pre-training is very expensive, so we usually only get one chance to get it right-No room for model-specific adjustments. The law of size plays a key role in this process. We can train a model using 1,000-10,000 times fewer computations and use the results of these training runs to fit power laws. These power laws can then be used to predict the performance of larger models. In particular, we saw in [8] that the performance of GPT-4 is predicted using power laws that measure the relationship between computation and test loss; see below.

GPT-4 Scale law formula for training (Source [5])

(Source [5])

(Source [5])

Chinchilla. Training Computationally Optimal Large Language Models [5]

(Source [9])

(Source [10])

(Source [10])

"The amount of training data expected to be needed far exceeds the amount of data currently used to train large models." - Source [6]

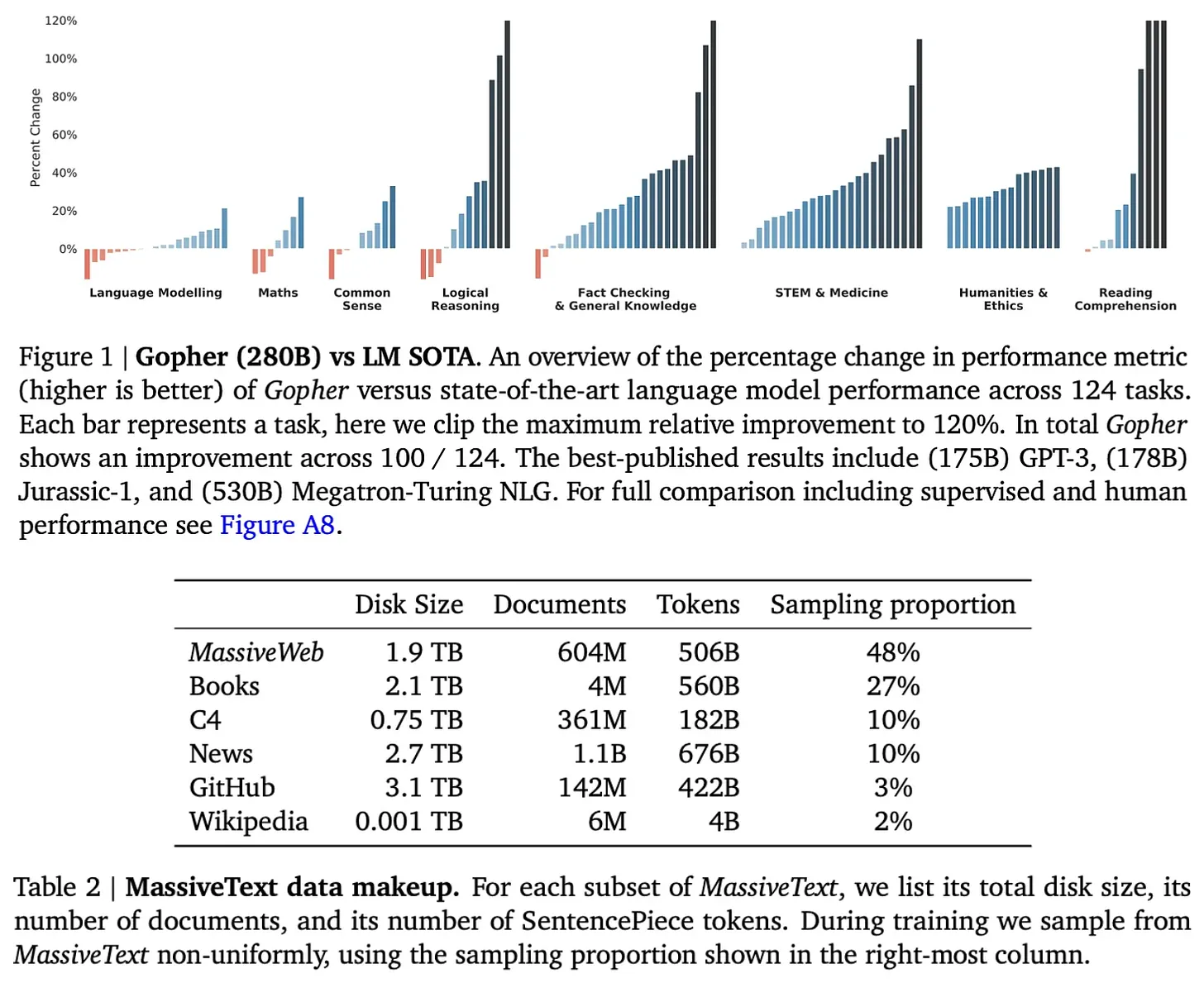

Chinchilla.The analysis provided in [6] emphasizes the importance of data size.Large models need to be trained on more data to achieve optimal performanceTo verify this finding, the authors trained a 70 billion parameter large language model called Chinchilla. To verify this finding, the authors trained a 70-billion-parameter large language model called Chinchilla. Compared to previous models, Chinchilla is smaller, but has a larger pre-training dataset - the1.4 trillion training tokens in totalChinchilla uses the same data and evaluation strategy as Gopher [10]. Although four times smaller than Gopher, Chinchilla consistently outperforms larger models; see below.

(Source [6])

The "demise" of the law of scale

The law of scale has recently become a hot (and controversial) topic in AI research. As we have seen in this overview, scale drove much of the improvement in AI during the pre-training era. However, as the pace of model releases and improvements slows in the second half of 2024,11 we are beginning to see widespread skepticism about model scaling, which seems to indicate that AI research - theIn particular, the law of scale--May be hitting a wall.

- OpenAI is shifting its product strategy because of bottlenecks in scaling its current approach, Reuters said.

- The Information says that improvements to the GPT model are starting to slow down.

- Bloomberg highlights the difficulties several cutting-edge labs are facing as they try to build more powerful AI.

- TechCrunch says that scale is starting to yield diminishing returns.

- Time Magazine published a nuanced article highlighting the various factors that are causing AI research to be slowing down.

- Ilya Sutskever in NeurIPS'24 said *"Pre-training as we know it will end "*.

At the same time, many experts hold the opposite view. For example, (Anthropic CEO) has said that scaling *"may ...... continue"And Sam Altman continues to preach"There are no walls "* argument. In this section, we will add more color to this discussion by providing an informed explanation of the current state of scale and the various problems that may exist.

Scaling down: what does it mean? Why is it happening?

"Both statements are probably true: on a technical level, scaling still works. For users, the rate of improvement is slowing." - Nathan Lambert

Then ......Is scaling up slowing down? The answer is complex and depends very much on our precise definition of "slowdown". So far, my most reasonable response to this question is that both answers are correct. For this reason, we will not attempt to answer this question. Instead, we will delve deeper into what is being said on this topic so that we can build a more nuanced understanding of the current (and future) state of large language model scaling.

What does the law of scale tell us?First, we need to review the technical definition of the law of size. The law of size defines the relationship between the training computation (or model/dataset size) and the testing loss of a large language model according to a power law. However, theThe nature of this relationship is often misunderstood. The idea of getting exponential performance gains from logarithmic growth of computation is a myth. The law of scale looks more like exponential decay, which means that over time we will have to work harder to get further performance gains; see below.

(Source [5])

"Practitioners typically use downstream benchmark accuracy as a proxy for model quality, rather than loss on the perplexity assessment set." - Source [7]

Define performance.How do we measure whether the Big Language Model is improving? From the perspective of the law of scale, the performance of a large language model is usually measured by the test loss of the model during pre-training, but the effect of lower test loss on the capability of a large language model is not clear.Does lower loss lead to higher accuracy in downstream tasks? Will lower losses lead to new capabilities for large language models? There is a disconnect between what the law of scale tells us and what we actually care about:

- The law of size tells us that increasing the size of pre-training will smoothly reduce the testing loss of large language models.

- Our concern is to obtain a "better" model of the larger language.

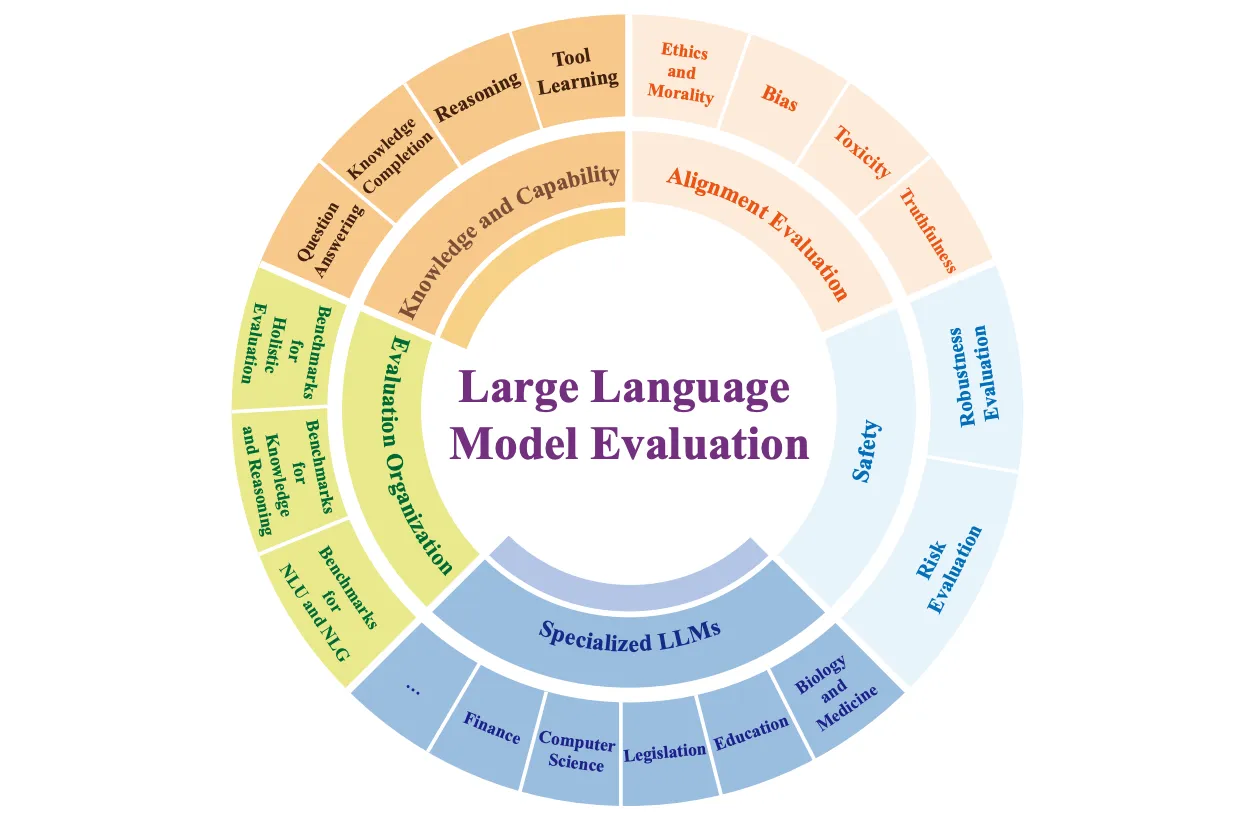

Depending on who you are and what you expect from a new AI system-and the methods you used to evaluate these new systems-will be very different. Ordinary AI users tend to focus on general-purpose chat applications, while practitioners often care about the performance of large language models on downstream tasks. In contrast, researchers in top cutting-edge labs seem to have high (and very specific) expectations of AI systems; for example, to write doctoral dissertations or solve advanced mathematical reasoning problems. Given the wide range of capabilities that are difficult to evaluate, there are many ways to look at the performance of big language models; see below.

(Source [15])

Data demise.In order to scale up large language modeling pretraining, we must increase both model and dataset size. Early research [1] seems to suggest that the amount of data is less important than the size of the model, but we see in Chinchilla [6] that the size of the dataset is equally important. In addition, recent work suggests that most researchers prefer to "over-train" their models - theor pre-train them on datasets whose size exceeds Chinchilla's optimality-to save inference costs [7].

"Scale-up studies often focus on computing optimal training mechanisms ...... Since larger models are more expensive to reason about, it is now common practice to over-train smaller models." - Source [7]

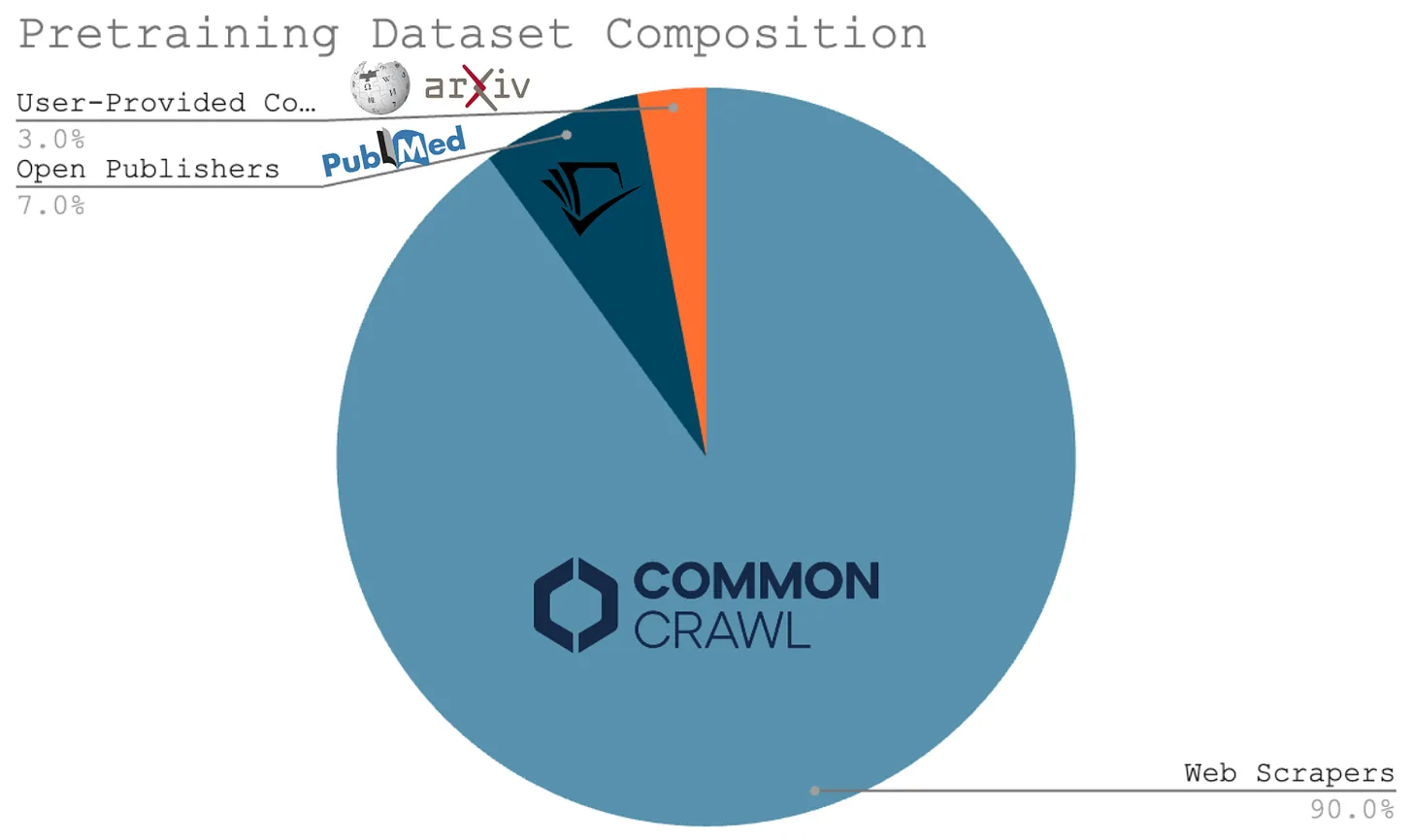

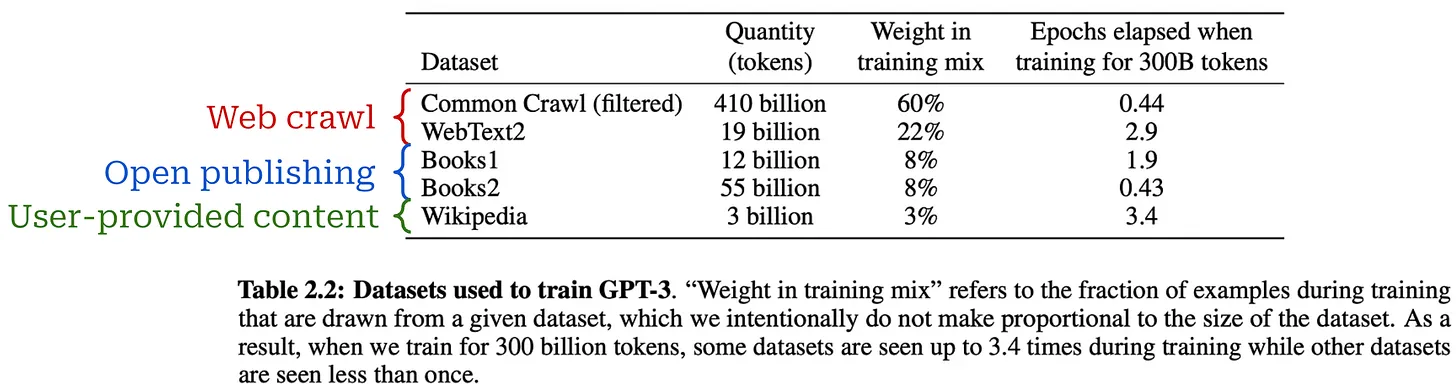

All of these studies lead us to a simple conclusion - theExpanding the Big Language Model Pre-training will require us to create larger pre-training datasetsThis fact constitutes one of the main criticisms of the scale law of large language models. This fact constitutes one of the main criticisms of the law of size for large language models. Many researchers have argued that there may not be enough data to continue to scale the pre-training process. By way of background, the vast majority of the pretraining data used for the current Big Language Model was obtained through web crawling; see below. Given that we only have one Internet, finding entirely new sources of large-scale, high-quality pretraining data may be difficult.

Even Ilya Sutskever has recently made this argument, claiming that i) Computing is growing rapidly, but ii) The data has not grown due to the reliance on web crawling. As a result, he argues that we cannot continue to expand the pre-training process forever. Pre-training as we know it will come to an end, and we must find new ways for AI research to progress. In other words."We've reached peak data."The

Next-generation pre-training scale

Scaling will eventually lead to diminishing returns, and the data-centric arguments against continued scaling are both reasonable and compelling. However, there are still several research directions that could improve the pre-training process.

Synthesized data.In order to scale up the pre-training process by several orders of magnitude, we may need to rely on synthetically generated data. Despite concerns that over-reliance on synthetic data can lead to diversity problems [14], we are seeing big language models increasingly-And it seems to have succeeded in--using synthetic data [12]. In addition, course learning [13] and continuous pretraining strategies have led to a variety of meaningful improvements by adapting pretraining data; for example, changing the data mix or adding instruction data at the end of pretraining.

(Source [7])

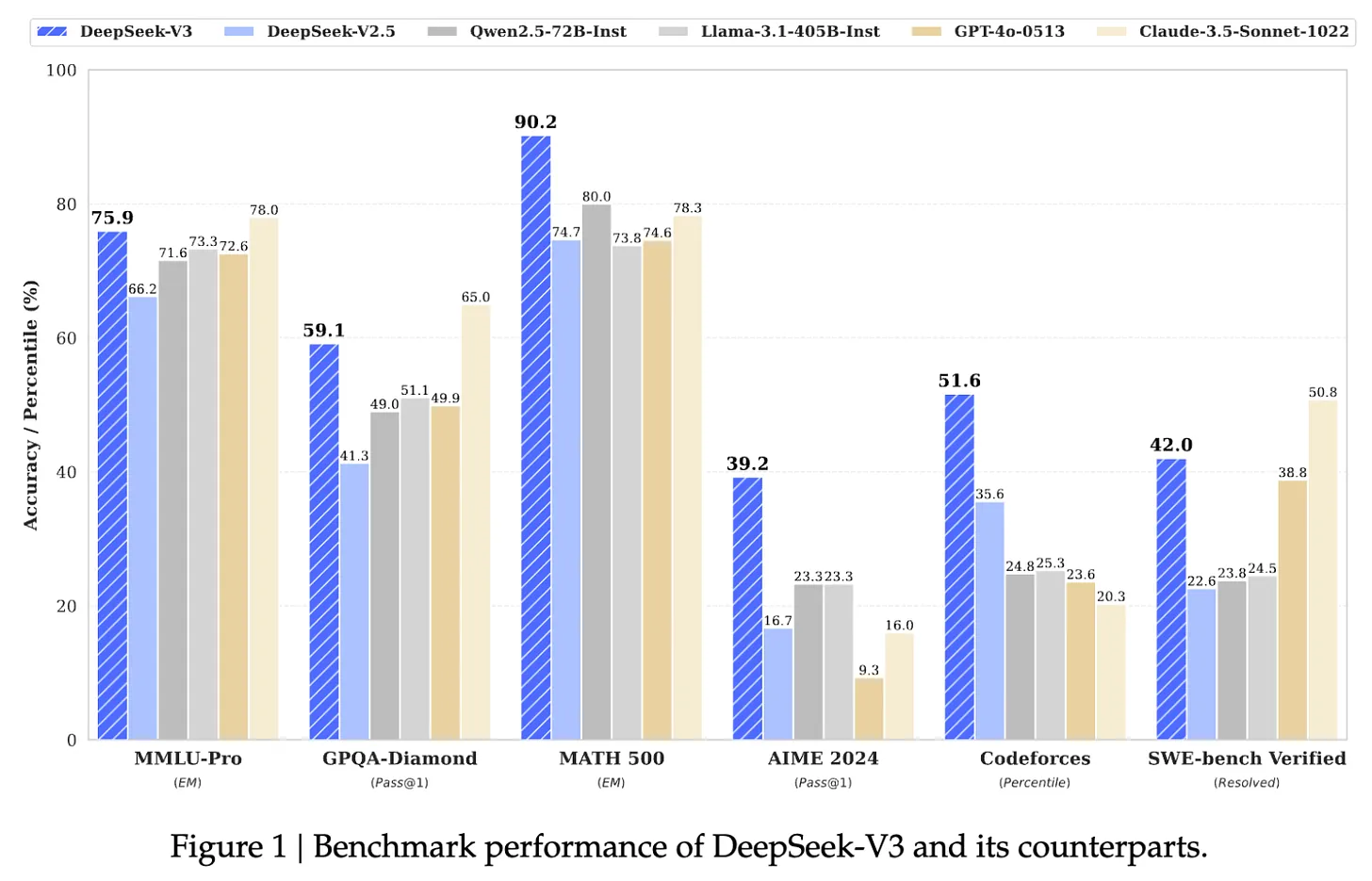

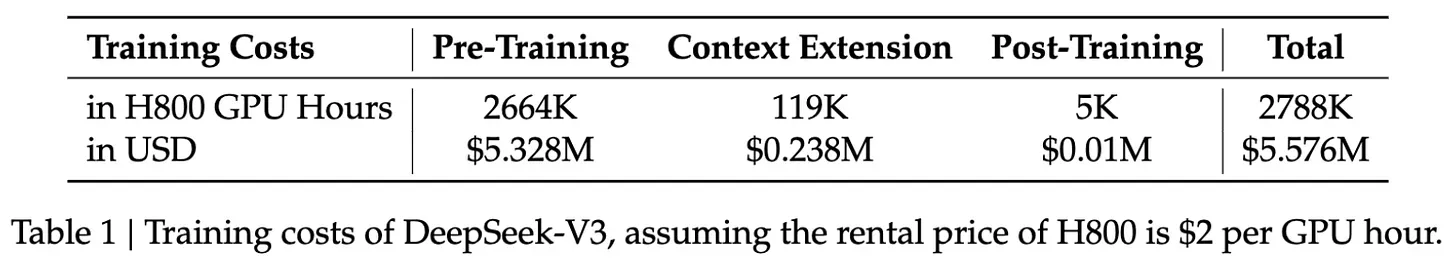

DeepSeek-v3.Despite recent controversies, we continue to see semi-frequent progress by extending the pre-training process for large language models. For example, the recent release of DeepSeek-v3 [18] -- theA 671 billion parameter12 The Mixed-Mode Expert (MoE) model. In addition to being open source, the model was pre-trained on 14.8 trillion Token of text and outperformed both GPT-4o and Claude-3.5-Sonnet; see the chart below for the model's performance and here for the license. For reference, the LLaMA-3 model was trained on over 15 trillion pieces of raw text data; see here for more details.

(Source [18])

- Optimized MoE architecture from DeepSeek-v2.

- A new unaided loss strategy for load balancing MoE.

- Multi-Token prediction of training goals.

- Refining reasoning capabilities from long thought chain models (i.e., similar to OpenAI's o1).

The model also underwent post-training, including supervised fine-tuning and reinforcement learning from human feedback, to align it with human preferences.

"We trained DeepSeek-V3 on 14.8 trillion high-quality and diverse Token. the pre-training process was very stable. We did not experience any unrecoverable loss spikes or have to roll back throughout the training process." - Source [8]

The biggest key to DeepSeek-v3's impressive performance, however, is the pre-training scale- theThis is a large model trained on an equally large data set! Training such a large model is difficult for a variety of reasons (e.g., GPU failures and loss spikes.) DeepSeek-v3 has an amazingly stable pre-training process and is trained at a reasonable cost according to the Large Language Model standard; see below.These results suggest that larger pre-training operations become more manageable and efficient over timeThe

(Source [18])

- Larger computing clusters13.

- More (and better) hardware.

- Lots of power.

- new algorithms (e.g., for larger-scale distributed training,).

Training next-generation models isn't just a matter of securing funding for more GPUs; it's a multidisciplinary engineering feat. Such a complex endeavor takes time. For reference, GPT-4 was released in March 2023, nearly three years after the release of GPT-3 - theIn particular, 33 monthsThe timeframe for unlocking another 10-100x increase in scale A similar timeline (if not longer) can reasonably be expected to unlock another 10-100x increase in scale.

"At every order of magnitude of scale growth, different innovations must be found." - Ege Erdil (Epoch AI)

The Future of AI Research

Now that we have a deeper understanding of the state of pre-training at scale, let's assume (purely for the purposes of this discussion) that pre-training research will suddenly hit a wall. Even if model capabilities don't improve at all in the near future, there are a number of ways in which AI research can continue to evolve rapidly. We have already discussed some of these topics (e.g., synthetic data). In this section, we will focus on two currently popular themes:

- Large Language Modeling System/Agent.

- Reasoning Models.

Building a Useful Large Language Modeling System

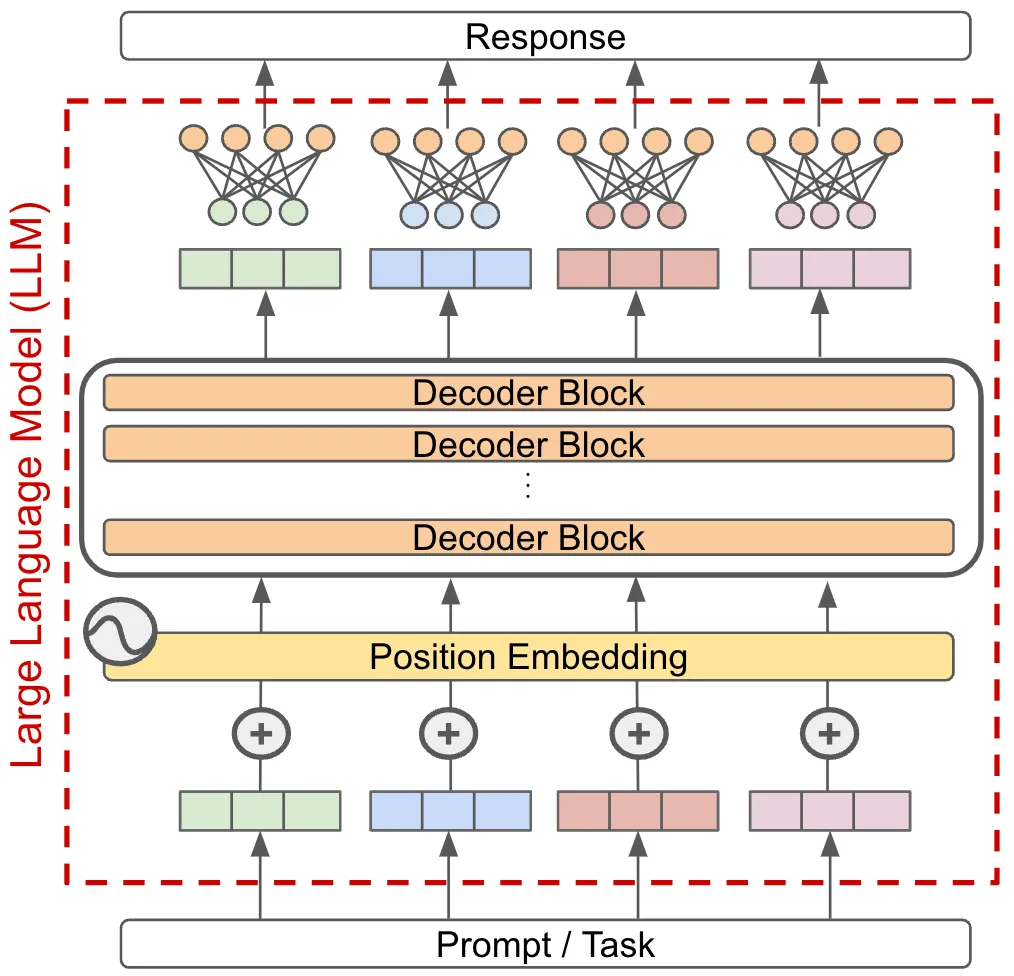

Most of today's Big Language Model-based applications run in a single-model paradigm. In other words, we solve a task by passing it to a single Big Language Model and directly using the model's output as the answer to that task; see below.

If we wanted to improve such a system (i.e., solve harder tasks with greater accuracy), we could simply improve the capabilities of the underlying model, but this approach relies on creating more powerful models. Instead, we can move beyond the single-model paradigm by building a system based on a large language model that combines multiple large language models- theor other component-- to solve complex tasks.

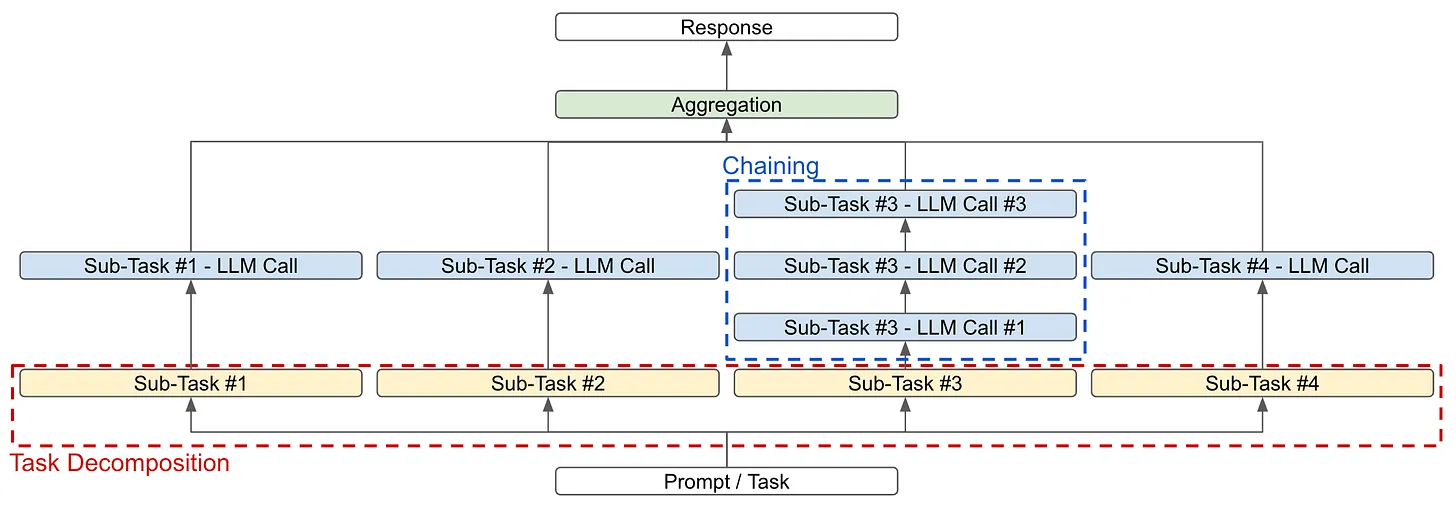

Large Language Modeling System Foundations. The goal of a Big Language Model system is to decompose complex tasks into smaller parts that can be more easily solved by the Big Language Model or other modules. There are two main strategies that can be used to achieve this goal (as shown in the figure above):

- Breakdown of tasks: Break down the task itself into smaller subtasks that can be solved individually and later aggregated14 to form the final answer.

- link (on a website): Solve tasks or subtasks by making multiple sequential calls to the large language model rather than a single call.

These strategies can be used individually or in tandem. For example, suppose we want to build a system for summarizing books. To do this, we can break down the task by first summarizing each chapter of the book. From here, we can:

- Summarize the task by further breaking it down into smaller chunks of text (i.e., similar to recursive/hierarchical decomposition).

- Link multiple LM calls together; for example, have one LM extract all the important facts or information in a chapter, and have another LM generate a chapter summary based on those key facts.

We can then aggregate these results by having the Big Language Model summarize the connected chapter summaries to form a summary of the entire novel. The fact that most complex tasks can be decomposed into simple parts that are easy to solve makes such big language modeling systems very powerful. As we perform more extensive decomposition and linking, these systems can become very complex, making them an interesting (and influential) area of applied AI research.

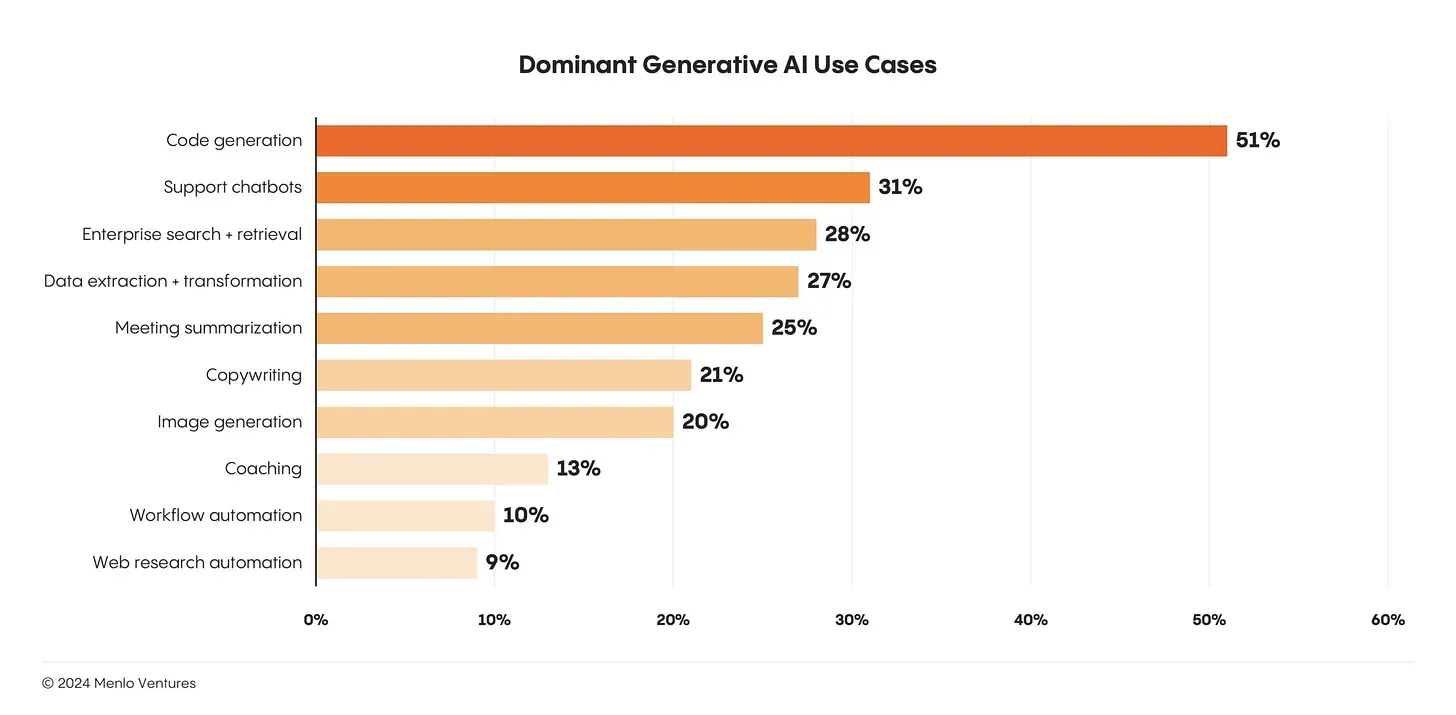

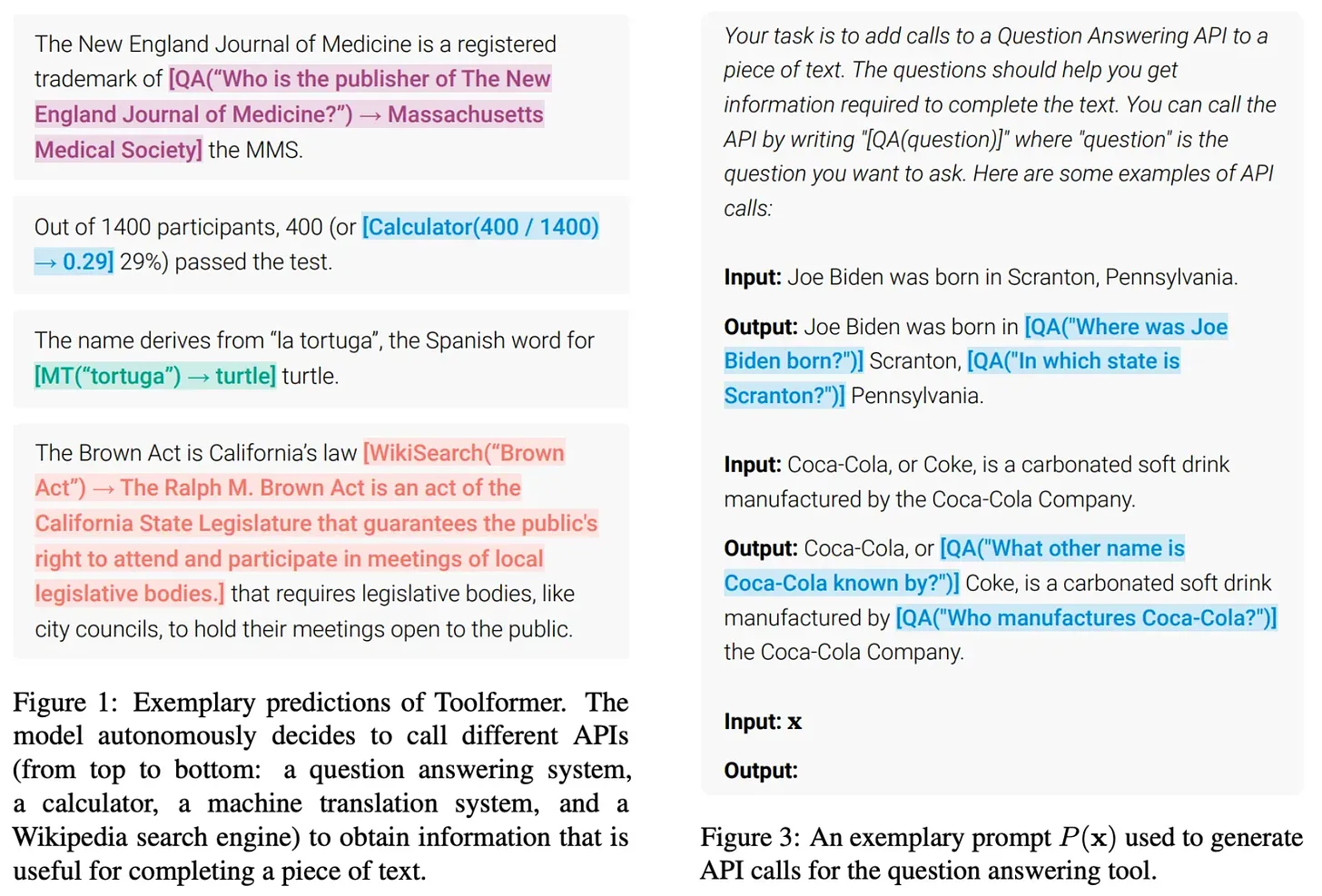

Building products based on the Big Language Model.Despite the success and popularity of the Big Language Model, the number of actual (and widely adopted) use cases for the Big Language Model is still very small. Today, the largest use cases of the Big Language Model are code generation and chat, both of which are relatively obvious applications of the Big Language Model15 ; see below.

Given that there are so many areas of application for large language modeling.Simply building more genuinely useful products based on large language models is an important area of applied AI research. Very powerful models are already available to us, but using them to build products worth using is an entirely different problem. Solving this problem requires learning how to build reliable and powerful large language modeling systems.

(Source [19])

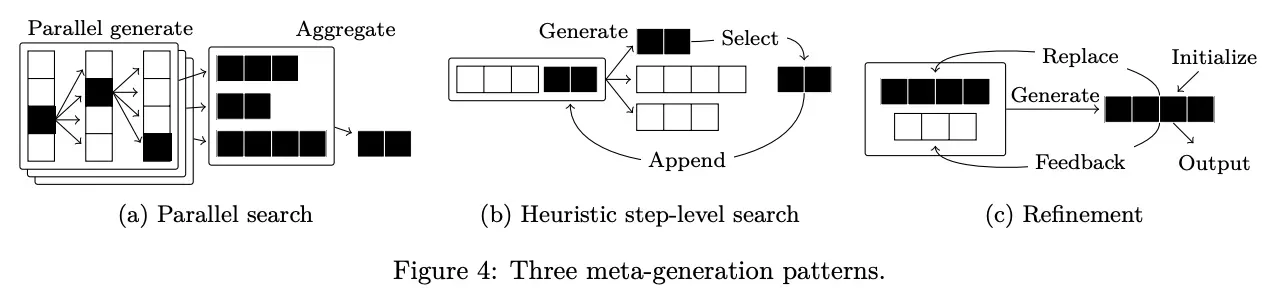

robustnessis one of the biggest obstacles to building more robust Big Language Model/agent systems. Suppose we have a Big Language Model system that makes ten different calls to the Big Language Model. Furthermore, let us assume that each call to the Big Language Model has a 95% probability of success, and that all calls need to succeed in order to generate the correct final output. Although the components of the system are reasonably accurate, theHowever, the success rate of the whole system is only 60%!

(Source [20])

(Source [20])

Reasoning models and new scaling paradigms

A common criticism of early big language models was that they simply memorized data and had little reasoning power. However, the claim that big language models are incapable of reasoning has largely been debunked over the past few years. We know from recent research that these models may have always had inherent reasoning power, but we had to use the right cues or training methods to elicit that power.

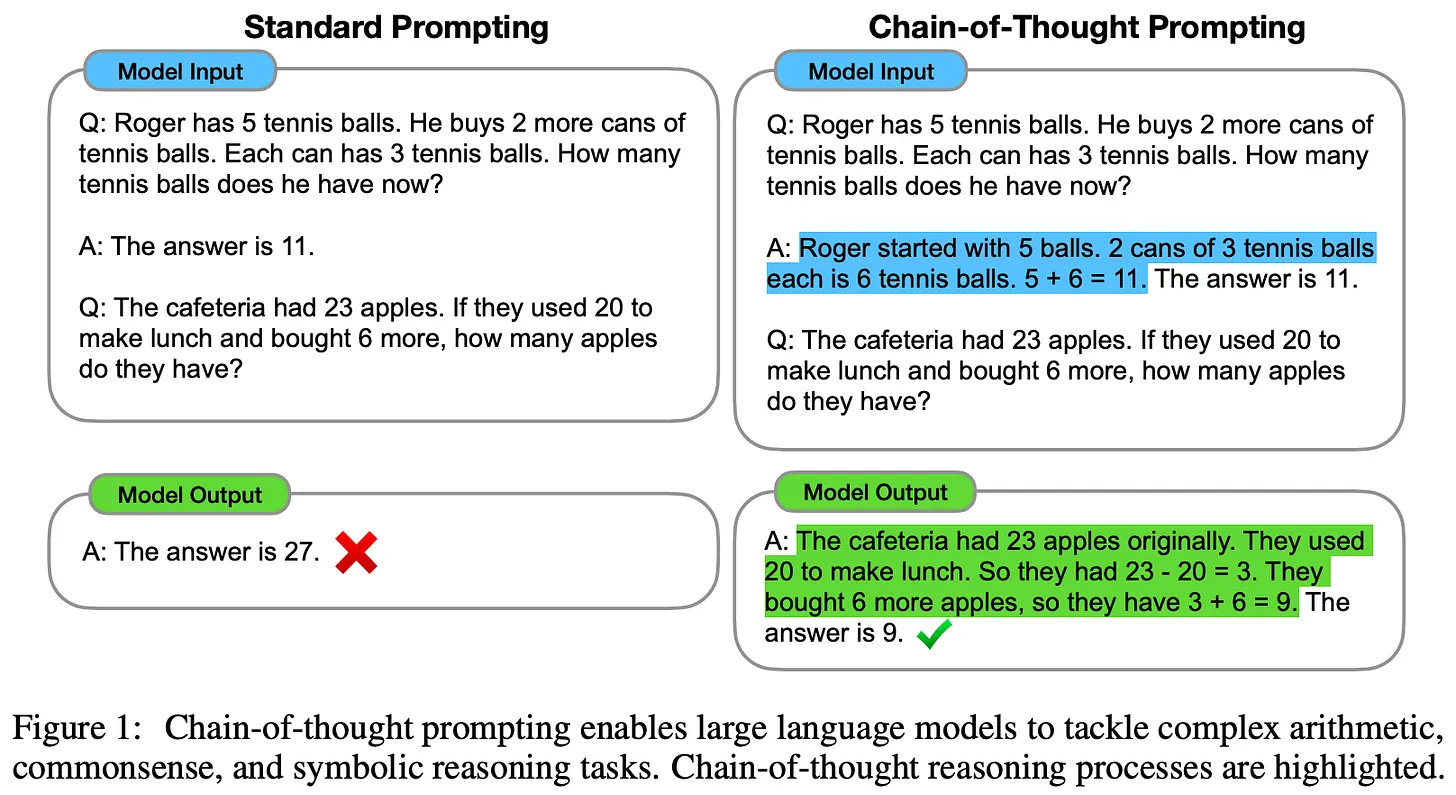

Chain of Thought (CoT) Tips [22] was one of the first techniques to demonstrate the reasoning power of large language models. The method is simple and cue-based. We simply ask the Big Language Model to provide an explanation of its response before generating the actual response; see here for more details. The reasoning power of the Big-Language Model improves significantly when it generates a rationale that summarizes the step-by-step process used to arrive at a response. In addition, the explanation is human-readable and makes the model's output more interpretable!

(Source [22])

- Class Large Language Model - Judge Evaluation Models usually provide the basis for scoring before generating the final evaluation results [23, 24].

- Supervised fine-tuning and instruction tuning strategies have been proposed for teaching smaller/open large language models to write better thought chains [25, 26].

- Large language models are often asked to reflect on and comment on or validate their own outputs, and then modify their outputs based on this information [12, 27].

Complex reasoning is an active research topic and is rapidly evolving. New training algorithms that teach large language models to incorporate (step-level) verification [28, 29] into their reasoning process have shown promising results, and we will likely continue to see improvements as new and better training strategies become available.

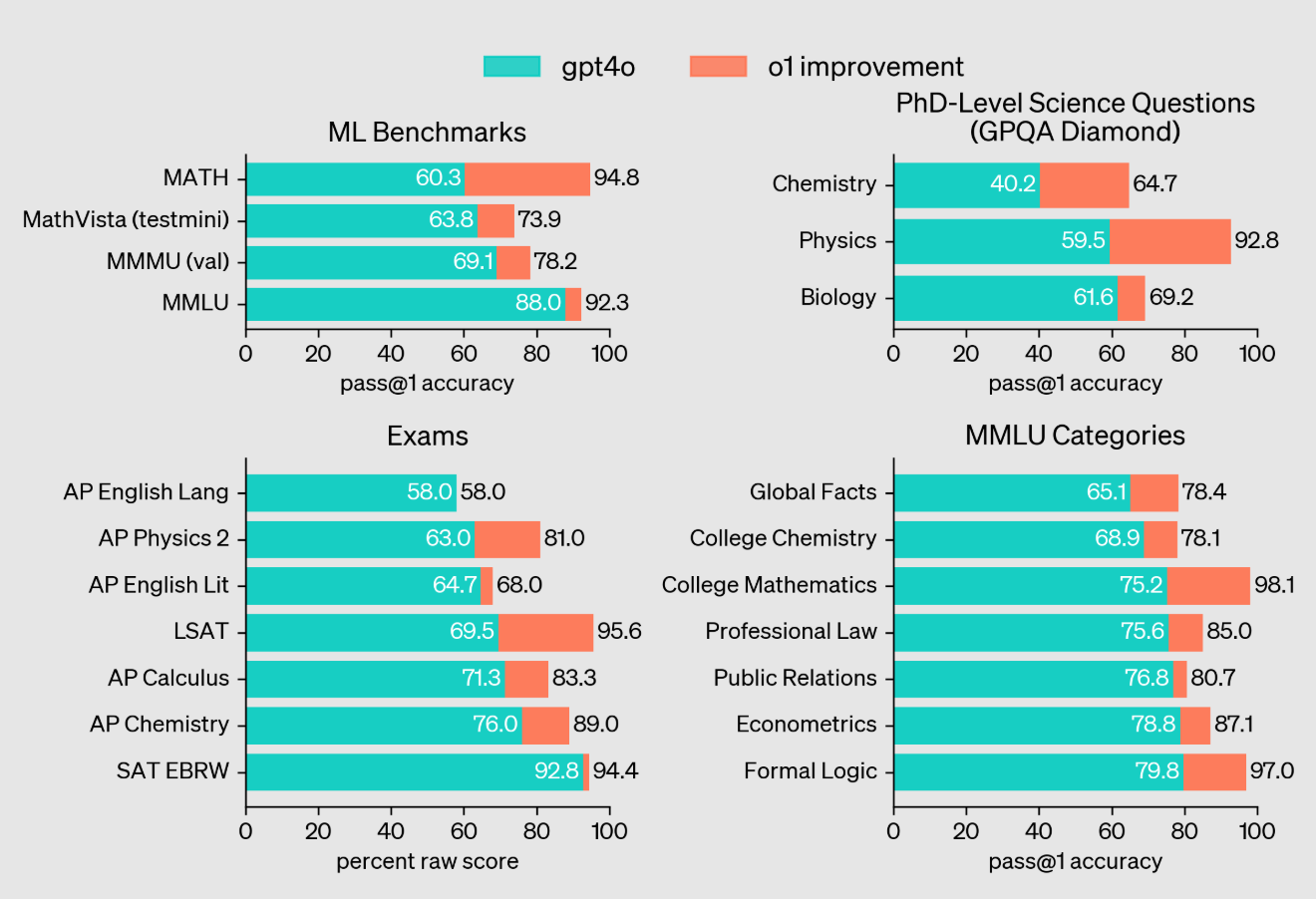

OpenAI's o1 inference model [21] marks a significant leap forward in the reasoning capabilities of the Big Language Model. o1 uses reasoning strategies that are heavily based on chains of thought. Similar to the way humans think before answering a question, o1 takes time to "think" before providing a response. In fact, the "thinking" that o1 generates is simply a long chain of thoughts that the model uses to think about the problem, break it down into simpler steps, try various approaches to solving the problem, and even correct its own mistakes16.

"OpenAI o1 [is] a new large-scale language model trained to perform complex reasoning using reinforcement learning. o1 thinks before it answers - it can generate a long internal chain of thought before responding to the user." - Source [21]

Details of o1's exact training strategy have not been shared publicly. However, we do know that o1 learns to reason using a "large-scale reinforcement learning" algorithm that "makes very efficient use of data" and focuses on improving the model's ability to generate useful chains of thought. Based on public comments from OpenAI researchers and recent statements about o1, it appears that the model was trained using pure reinforcement learning, which contradicts earlier suggestions that o1 may have used some form of tree search in its reasoning.

Comparison of GPT-4o and o1 on inference-heavy tasks (Source [21])

- Top 89% in Competitive Programming Problems at Codeforces.

- Ranked in the top 500 students in the United States in the qualifying rounds of the American Mathematical Olympiad (AIME).

- Exceeds the accuracy of human doctoral students on graduate level physics, biology, and chemistry questions (GPQA).

(Source [22])

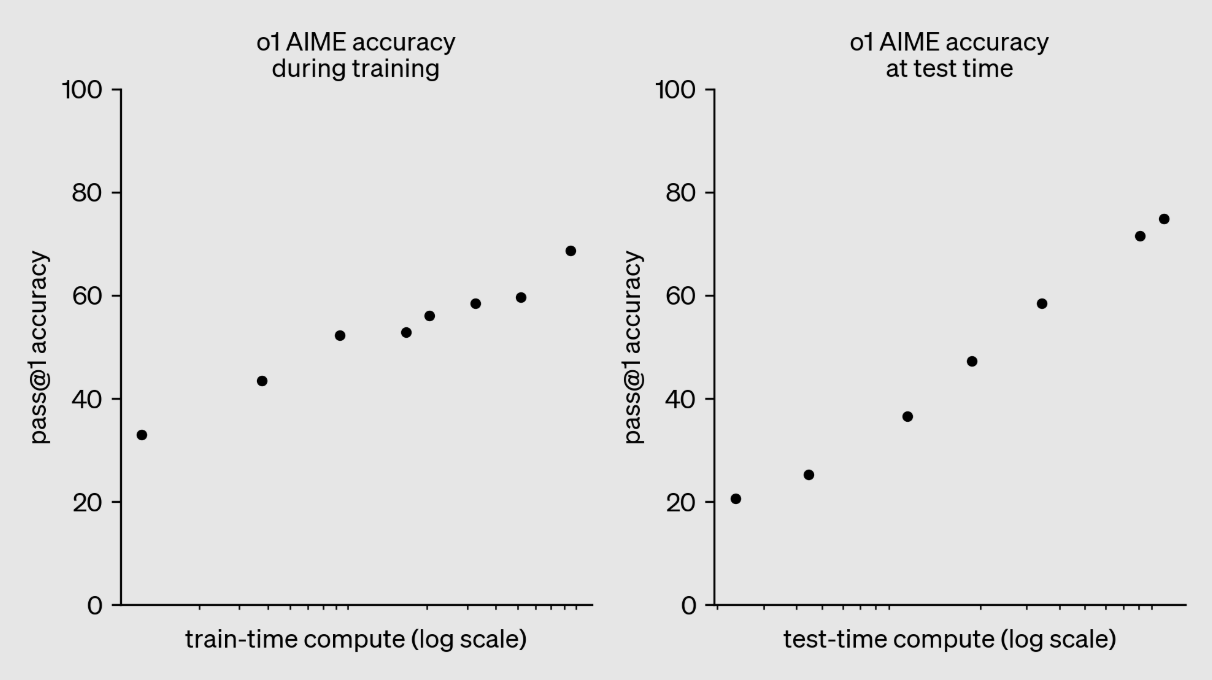

"We found that o1's performance continues to improve with more reinforcement learning (computed during training) and more think time (computed during testing)." - Source [22]

Similarly, we see in the figure above that the performance of o1 improves smoothly as we invest more computation in training through reinforcement learning. This is exactly the approach followed to create the o3 inference model. The model has been evaluated by OpenAI at the end of 2024 and very few details about o3 have been shared publicly. However, given that the model was released so quickly after o1 (i.e., three months later), it is likely that o3 is a "scaled-up" version of o1, with more computation invested in reinforcement learning.

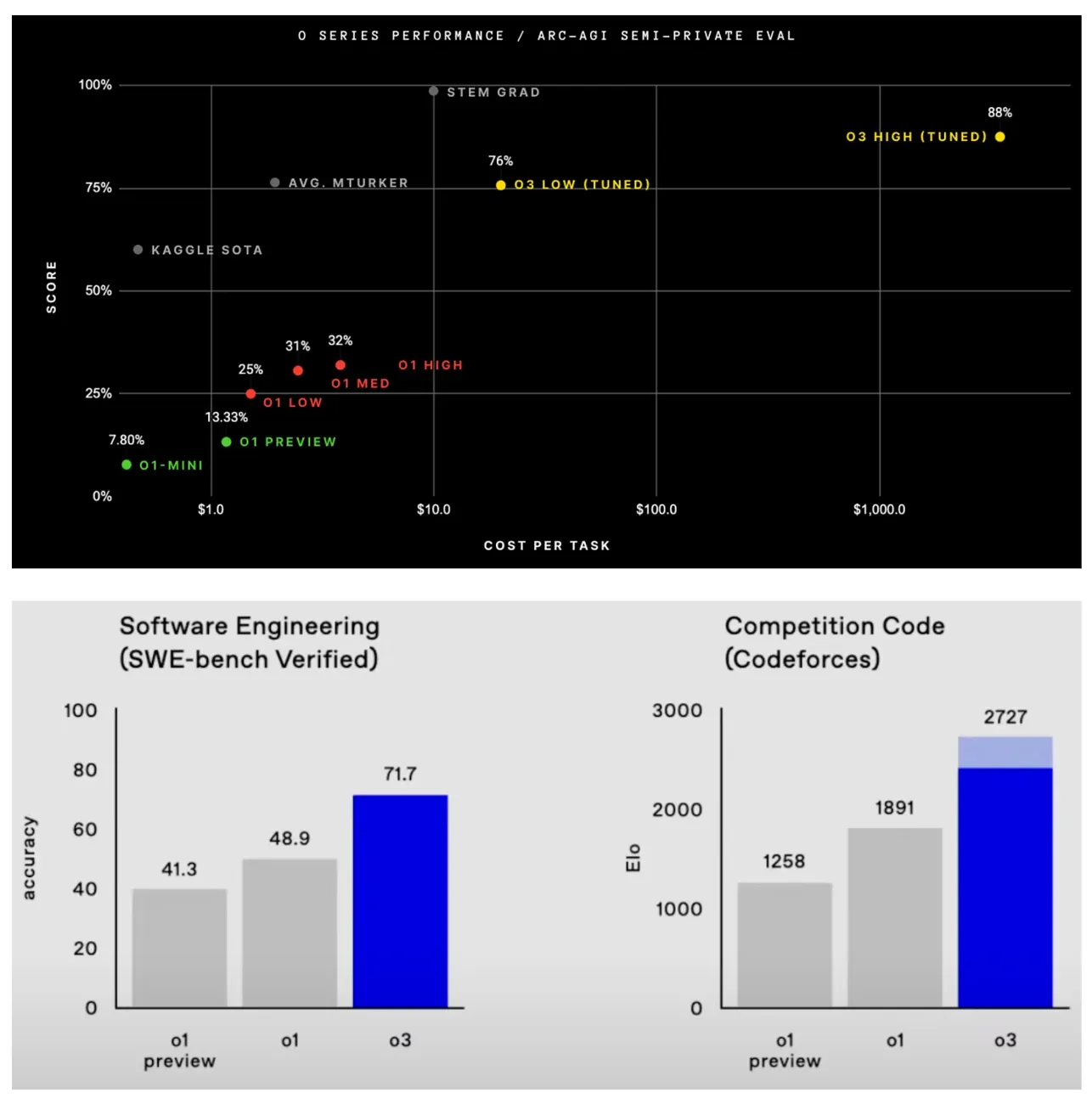

At the time of writing, the o3 model has not yet been released, but the results achieved by extending o1 are impressive (and in some cases shocking). The most notable achievements of o3 are listed below:

- scored 87.5% on the ARC-AGI benchmark, compared to GPT-4o's accuracy of 5%. o3 was the first model to exceed 85% of human-level performance on ARC-AGI. The benchmark has been described as the "North Star" to AGI and has been undefeated for over five years17 .

- An accuracy of 71.7% on SWE-Bench Verified and an Elo score of 2727 on Codeforces puts o3 among the top 200 human competitive programmers in the world.

- with an accuracy of 25.2% on EpochAI's FrontierMath benchmark.Improved state-of-the-art accuracy over the previous 2.0%The benchmark was described by Terence Tao as "extremely difficult" and likely not to be solved by AI systems for "at least a few years". The benchmark was described by Terence Tao as "extremely difficult" and probably unsolvable by AI systems "for at least a few years".

A lite version of o3, called o3-mini, was also previewed, which performs very well and offers significant improvements in computational efficiency.

(Source [21] and here are)

- Training time (intensive learning) Calculation.

- Calculate when reasoning.

Scaling o1 style models differs from the traditional law of size. Instead of scaling up the pre-training process, we scale up the amount of computation put into post-training and inference.It's a whole new paradigm of scaling, so far, the results obtained by extending the inference model have been very good. Such a finding suggests that other avenues for expansion beyond pre-training clearly exist. With the emergence of inference models, we have discovered the next mountain to climb. Although it may come in different forms, theScale will continue to drive advances in AI researchThe

concluding remarks

We now have a clearer picture of the laws of scale, their impact on large language models, and the future direction of AI research. As we have learned, there are many contributing factors to the recent criticisms of the laws of scale:

- The natural decay of the law of scale.

- Expectations for large language modeling capabilities varied widely.

- Delays in large-scale, interdisciplinary engineering efforts.

These are legitimate questions thatBut none of them indicate that scaling is still not working as expected. Investments in large-scale pretraining will (and should) continue, but improvements will become increasingly difficult over time. As a result, other directions of development (e.g., agents and inference) will become more important. However, the basic idea of scaling will continue to play a huge role as we invest in these new areas of research. Whether or not we continue to scale is not the issue.The real question is what we're going to expand on nextThe

bibliography

[1] Kaplan, Jared, et al. "Scaling laws for neural language models." arXiv preprint arXiv:2001.08361 (2020).[2] Radford, Alec. "Improving language understanding by generative pre-training." (2018).[3] Radford, Alec, et al. "Language models are unsupervised multitask learners." OpenAI blog 1.8 (2019): 9.[4] Brown, Tom, et al. "Language models are few-shot learners." Advances in neural information processing systems 33 (2020): 1877-1901.[5] Achiam, Josh, et al. "Gpt-4 technical report." arXiv preprint arXiv:2303.08774 (2023).[6] Hoffmann, Jordan, et al. "Training compute-optimal large language models." arXiv preprint arXiv:2203.15556 (2022).[7] Gadre, Samir Yitzhak, et al. "Language models scale reliably with over-training and on downstream tasks." arXiv preprint arXiv:2403.08540 (2024).[8] Ouyang, Long, et al. "Training language models to follow instructions with human feedback." Advances in neural information processing systems 35 (2022): 27730-27744.[9] Smith, Shaden, et al. "Using deepspeed and megatron to train megatron-turing nlg 530b, a large-scale generative language model." arXiv preprint arXiv:2201.11990 (2022).[10] Rae, Jack W., et al. "Scaling language models: methods, analysis & insights from training gopher." arXiv preprint arXiv:2112.11446 (2021).[11] Bhagia, Akshita, et al. "Establishing Task Scaling Laws via Compute-Efficient Model Ladders." arXiv preprint arXiv:2412.04403 (2024).[12] Bai, Yuntao, et al. "Constitutional ai: Harmlessness from ai feedback." arXiv preprint arXiv:2212.08073 (2022).[13] Blakeney. Cody., et al. "Does your data spark joy? Performance gains from domain upsampling at the end of training." arXiv preprint arXiv:2406.03476 (2024).[14] Chen, Hao, et al. "On the Diversity of Synthetic Data and its Impact on Training Large Language Models." arXiv preprint arXiv:2410.15226 (2024).[15] Guo, Zishan, et al. "Evaluating large language models: a comprehensive survey." arXiv preprint arXiv:2310.19736 (2023).[16] Xu, Zifei, et al. "Scaling laws for post-training quantized large language models." arXiv preprint arXiv:2410.12119 (2024).[17] Xiong, Yizhe, et al. "Temporal scaling law for large language models." arXiv preprint arXiv:2404.17785 (2024).[18] DeepSeek-AI et al. "DeepSeek-v3 Technical Report." https://github.com/deepseek-ai/DeepSeek-V3/blob/main/DeepSeek_V3.pdf (2024).[19] Schick, Timo, et al. "Toolformer: language models can teach themselves to use tools." arXiv preprint arXiv:2302.04761 (2023).[20] Welleck, Sean, et al. "From decoding to meta-generation: reference-time algorithms for large language models." arXiv preprint arXiv:2406.16838 (2024).[21] OpenAI et al. "Learning to Reason with LLMs." https://openai.com/index/learning-to-reason-with-llms/ (2024).[22] Wei, Jason, et al. "Chain-of-thought prompting elicits reasoning in large language models." Advances in neural information processing systems 35 (2022): 24824-24837.[23] Liu, Yang, et al. "G-eval: Nlg evaluation using gpt-4 with better human alignment." arXiv preprint arXiv:2303.16634 (2023).[24] Kim, Seungone, et al. "Prometheus: Inducing fine-grained evaluation capability in language models." The Twelfth International Conference on Learning Representations . 2023.[25] Ho, Namgyu, Laura Schmid, and Se-Young Yun. "Large language models are reasoning teachers." arXiv preprint arXiv:2212.10071 (2022).[26] Kim, Seungone, et al. "The cot collection: improving zero-shot and few-shot learning of language models via chain-of-thought fine-tuning." arXiv preprint arXiv:2305.14045 (2023).[27] Weng, Yixuan, et al. "Large language models are better reasoners with self-verification." arXiv preprint arXiv:2212.09561 (2022).[28] Lightman, Hunter, et al. "Let's verify step by step." arXiv preprint arXiv:2305.20050 (2023).[29] Zhang, Lunjun, et al. "Generative verifiers: reward modeling as next-token prediction." arXiv preprint arXiv:2408.15240 (2024).1 The two main reports are from The Information and Reuters.

2 We generated the drawing using the following settings:a = 1(math.) genusp = 0.5peace 0 < x < 1The

3 The calculation is defined in [1] as 6NBSwhich N is the number of model parameters thatB is the batch size used during training.S is the total number of training steps.

4 This extra multiplication constant does not change the behavior of the power law. To understand why this is so, we must understand the definition of scale invariance. Because power laws are scale invariant, the basic characteristics of a power law are the same even if we scale it up or down by some factor. The observed behavior is the same at any scale!

5 This description is from the Test of Time Award that Ilya received for this paper at NeurIPS'24.

6 While this may seem obvious now, we should keep in mind that at the time, most NLP tasks (e.g., summarization and Q&A) had research domains dedicated to them! Each of these tasks had associated task-specific architectures that were specialized to perform that task, and the GPT was a single general-purpose model that could outperform most of these architectures across multiple different tasks.

7 This means that we simply describe each task in the prompts of the big language model and use the same model to solve the different tasks - theOnly prompts change between tasksThe

8 This is to be expected since these models use zero-sample inference and are not fine-tuned on any downstream tasks at all.

9 By "emergent" capabilities, we mean skills that are only available to large language models once they reach a certain size (e.g., a sufficiently large model).

10 Here, we define "computationally optimal" as the training setup that yields the best performance (in terms of test loss) at a fixed training computational cost.

11 For example, Anthropic keeps delaying its release. Claude 3.5 Opus, Google has only released a flash version of Gemini-2, and OpenAI has only released GPT-4o in 2024 (until the release of o1 and o3 in December), which arguably isn't much more capable than GPT-4.

12 Only 37 billion parameters are active during the inference of a single Token.

13 For example, xAI recently built a new data center in Memphis with 100,000 NVIDIA GPUs, and Anthropic's leadership is increasing its compute spending 100-fold over the next few years.

14 The aggregation step can be implemented in a number of ways. For example, we can manually aggregate responses (e.g., through connections), use a large language model, or anything in between!

15 This is not because these tasks are simple. Code generation and chat are both hard to solve, but they are (arguably) fairly obvious applications of the Big Language Model.

16OpenAI has chosen to hide these long chains of thought from users of o1. The argument behind this choice is that these fundamentals provide insight into the model's thought processes that can be used to debug or monitor the model. However, models should be allowed to express their pure thoughts without any of the security filters necessary for user-oriented model output.

17 Currently, ARC-AGI remains technically undefeated because o3 exceeds the computational requirements for benchmarking. However, the model still achieves an accuracy of 75.7% using lower computational settings.

© Copyright notes

Article copyright AI Sharing Circle All, please do not reproduce without permission.

Related posts

No comments...