AssemblyAI: High-precision Speech-to-Text and Audio Intelligence Analysis Platform

General Introduction

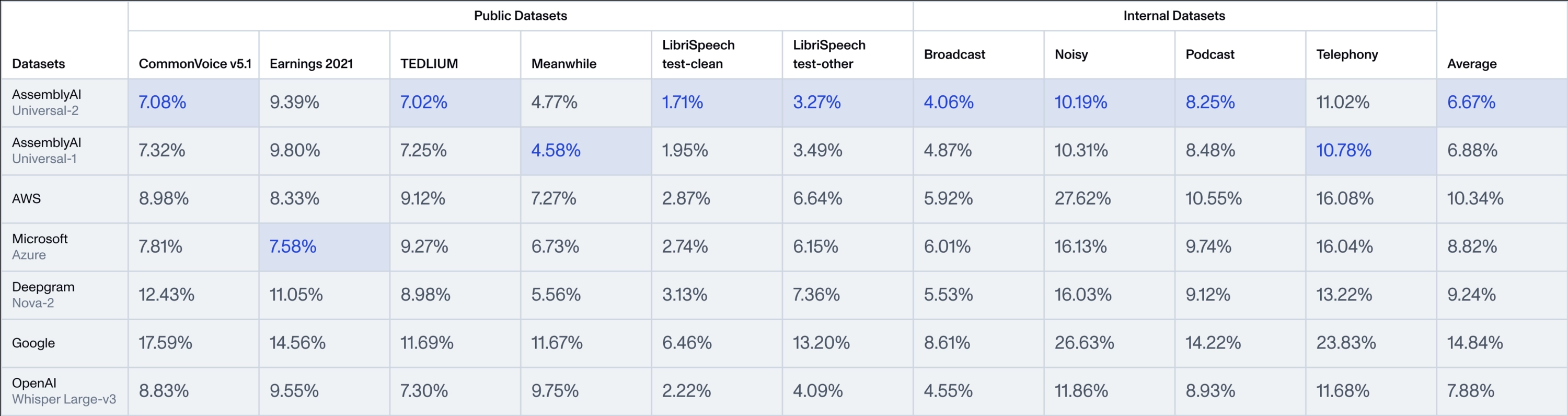

AssemblyAI is a speech AI platform that provides developers and enterprises with speech-to-text and audio analysis tools. Its core offering is the Universal series of models, in particular the newly released Universal-2, AssemblyAI's most advanced speech-to-text model to date. Universal-2 builds on Universal-1 and is trained on more than 12.5 million hours of multilingual audio, which allows it to capture the complexity of real conversations and produce highly accurate transcripts. Compared to Universal-1, Universal-2 improves by 24% on proper noun recognition (e.g., names, brands), 21% on mixed alphanumeric content (e.g., phone numbers, email addresses), and 15% on text formatting (e.g., punctuation, capitalization), significantly narrowing the "last mile" accuracy gap of traditional models. AssemblyAI makes these technologies available to users worldwide through easy-to-use APIs, which companies such as Spotify and Fireflies have used to build intelligent speech products in areas such as meeting recording and content analysis.

Feature List

- Speech-to-text: Converts audio files or live audio streams to high-accuracy text, with support for multiple languages and audio formats.

- Speaker detection: Automatically distinguishes and labels the different speakers in the audio, for multi-person conversation scenarios.

- Sentiment analysis: Analyzes the sentiment of speech (positive, negative, or neutral) to help improve the user experience.

- Real-time transcription: Provides low-latency real-time speech-to-text suitable for voice agents or live captioning.

- Audio Intelligence models: Include advanced features such as content moderation, topic detection, and keyword search.

- LeMUR framework: Processes transcribed text with large language models, with support for summary generation, Q&A, and more.

- Subtitle generation: Exports subtitle files in SRT or VTT format for easy video content creation.

- PII redaction: Automatically detects and redacts sensitive information in audio, such as names or phone numbers.

Usage Guide

AssemblyAI is a cloud-based API service that requires no local installation to access its powerful features. Here's a detailed guide to help you get started and dig deeper into its capabilities.

Registering and Getting API Keys

- Visit the official website: Open your browser and go to https://www.assemblyai.com/.

- Register for an account: Click "Sign Up" in the upper right corner and enter your email address and password to complete registration. After registering, you are taken to the Dashboard automatically.

- Get the key: Find the "API Key" area in the Dashboard and click "Copy" to copy the key. This is the only credential for calling the API and should be kept in a safe place.

- Free trial: New users receive free credits and do not need to add a payment method right away.
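
Since the key is the only credential for the API, one common way to keep it out of source code is to read it from an environment variable. The following is a minimal sketch; the variable name ASSEMBLYAI_API_KEY and the helper `load_api_key` are illustrative assumptions, not something the platform mandates.

```python
import os


def load_api_key(env_var: str = "ASSEMBLYAI_API_KEY") -> str:
    """Return the API key stored in the given environment variable.

    The variable name is an assumption for this sketch; use whatever
    name your own deployment defines.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable first.")
    return key
```

The returned value can then be used wherever the examples in this guide show a hardcoded key string.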

Core Function Operation

The core of AssemblyAI is its API integration. The following examples show how to use the Universal family of models from Python. Other languages (e.g., Java, Node.js) are also supported; refer to the documentation on the official website.

Speech to text (Universal-2)

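- Preparation: Make sure you have an audio file (e.g., sample.mp3) or a URL pointing to one.

- Install the SDK: Run the following in a terminal:

pip install assemblyai

- Code example:

import assemblyai as aai

aai.settings.api_key = "YOUR_API_KEY"  # replace with your own key

transcriber = aai.Transcriber()
transcript = transcriber.transcribe("sample.mp3")

# Check for failure before reading the text
if transcript.status == aai.TranscriptStatus.error:
    print(transcript.error)
else:
    print(transcript.text)  # prints the transcript, e.g. "The weather is nice today."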

- Universal-2 strengths: Universal-2 is used by default. It recognizes proper nouns (e.g., "Zhang Wei") and formatted numbers (e.g., "March 6, 2025") more accurately than Universal-1, and processing often completes within a few seconds.
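
Behind the SDK call, transcription is an asynchronous job: it is submitted and then polled until it finishes. The sketch below shows that flow against the REST API using only the standard library. The endpoint path and the `authorization` header follow AssemblyAI's public v2 API as documented at the time of writing and should be verified against the current reference; the helper names, the polling interval, and the injectable `fetch` parameter are assumptions made for illustration.

```python
import json
import time
import urllib.request

BASE_URL = "https://api.assemblyai.com/v2"


def build_submit_request(api_key: str, audio_url: str) -> urllib.request.Request:
    """Build (but do not send) the POST request that creates a transcript job."""
    body = json.dumps({"audio_url": audio_url}).encode("utf-8")
    return urllib.request.Request(
        f"{BASE_URL}/transcript",
        data=body,
        headers={"authorization": api_key, "content-type": "application/json"},
        method="POST",
    )


def http_get_json(api_key: str, url: str) -> dict:
    """Fetch a URL with the API key and decode the JSON response."""
    req = urllib.request.Request(url, headers={"authorization": api_key})
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def wait_for_transcript(api_key, transcript_id, fetch=http_get_json,
                        interval=3.0, max_polls=200):
    """Poll the transcript until its status is 'completed' or 'error'."""
    url = f"{BASE_URL}/transcript/{transcript_id}"
    for _ in range(max_polls):
        result = fetch(api_key, url)
        if result["status"] == "completed":
            return result
        if result["status"] == "error":
            raise RuntimeError(result.get("error", "transcription failed"))
        time.sleep(interval)
    raise TimeoutError("transcript did not finish in time")
```

Passing `fetch` as a parameter keeps the polling logic testable without network access; in normal use the default performs a real HTTP GET.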

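Real-time transcription

- Applicable scenarios: Live streaming, teleconferencing, and other real-time needs.

- Code example:

# Uses the SDK's callback-based RealtimeTranscriber; the streaming interface
# has changed across SDK versions, so check the current documentation.
import assemblyai as aai

aai.settings.api_key = "YOUR_API_KEY"  # replace with your own key

def on_data(transcript: aai.RealtimeTranscript):
    if transcript.text:
        print(transcript.text)  # print text as it arrives

def on_error(error: aai.RealtimeError):
    print("Error:", error)

transcriber = aai.RealtimeTranscriber(
    sample_rate=16000,
    on_data=on_data,
    on_error=on_error,
)
transcriber.connect()
# Microphone helper; requires: pip install "assemblyai[extras]"
microphone_stream = aai.extras.MicrophoneStream(sample_rate=16000)
transcriber.stream(microphone_stream)  # start sending the audio stream
transcriber.close()

- Workflow: After starting the script, speak into the microphone and the text is displayed in real time. Universal-2's low latency helps keep results fast and accurate.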
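
Real-time transcription depends on audio arriving as a steady sequence of small chunks at the declared sample rate. As a rough illustration, the sketch below splits raw PCM audio into fixed-duration chunks. The helper `pcm_chunks`, the 100 ms chunk length, and the assumption of 16-bit mono audio are illustrative choices and are not taken from the SDK.

```python
from typing import Iterator


def pcm_chunks(pcm: bytes, sample_rate: int = 16000,
               chunk_ms: int = 100, bytes_per_sample: int = 2) -> Iterator[bytes]:
    """Yield consecutive chunks of raw mono PCM, each covering chunk_ms of audio.

    The final chunk may be shorter if the input does not divide evenly.
    """
    chunk_size = sample_rate * chunk_ms // 1000 * bytes_per_sample
    if chunk_size <= 0:
        raise ValueError("chunk_ms is too small for this sample rate")
    for start in range(0, len(pcm), chunk_size):
        yield pcm[start:start + chunk_size]
```

At 16 kHz and 16 bits per sample, each 100 ms chunk is 3200 bytes, so one second of audio yields ten chunks that would be sent to the streaming connection in order.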

Speaker Detection

- How to enable:

config = aai.TranscriptionConfig(speaker_labels=True)
transcript = transcriber.transcribe("sample.mp3", config=config)
for utterance in transcript.utterances:
    print(f"Speaker {utterance.speaker}: {utterance.text}")

- Example results:

Speaker A: Hello, what time is today's meeting?
Speaker B: Two in the afternoon.

- Note: Universal-2 performs more consistently in multi-person conversations and reduces speaker confusion.
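
Once utterances carry speaker labels, they are straightforward to aggregate. The sketch below counts words per speaker. It uses a stand-in `Utterance` dataclass with the same `speaker` and `text` fields as the example above so that it runs without an API call; the class and the helper `words_per_speaker` are illustrative, not part of the SDK.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Utterance:
    """Stand-in for an SDK utterance; only the fields used here."""
    speaker: str
    text: str


def words_per_speaker(utterances):
    """Return a dict mapping each speaker label to their total word count."""
    totals = defaultdict(int)
    for u in utterances:
        totals[u.speaker] += len(u.text.split())
    return dict(totals)


sample = [
    Utterance("A", "Hello, what time is the meeting today?"),
    Utterance("B", "Two in the afternoon."),
    Utterance("A", "Thanks."),
]
print(words_per_speaker(sample))
```

Whitespace splitting is a rough word count that suits English; languages written without spaces, such as Chinese, would need a different tokenizer.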

Sentiment analysis

- How to enable:

config = aai.TranscriptionConfig(sentiment_analysis=True)
transcript = transcriber.transcribe("sample.mp3", config=config)
for result in transcript.sentiment_analysis:
    print(f"Text: {result.text}, Sentiment: {result.sentiment}")

- Example results:

Text: I really like this product, Sentiment: POSITIVE
Text: The service is a bit slow, Sentiment: NEGATIVE
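
Per-sentence labels are often more useful once summarized. As a small sketch, the function below computes the share of sentences carrying each sentiment label. `SentimentResult` is a stand-in dataclass with plain string labels, introduced here only so the example runs offline; it is not the SDK's own result type.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class SentimentResult:
    """Stand-in for one sentiment result; labels are plain strings here."""
    text: str
    sentiment: str  # "POSITIVE", "NEUTRAL", or "NEGATIVE"


def sentiment_share(results):
    """Return the fraction of sentences carrying each sentiment label."""
    counts = Counter(r.sentiment for r in results)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {label: n / total for label, n in counts.items()}
```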

Subtitle Generation

- Code:

transcript = transcriber.transcribe("sample.mp3")
with open("captions.srt", "w") as f:
    f.write(transcript.export_subtitles_srt())

- Result: Generates an .srt file that can be imported directly into video editing software.
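
For reference, an SRT file is a sequence of numbered cues, each with a start and end timestamp in `HH:MM:SS,mmm` form followed by the caption text. The sketch below formats one cue by hand to show what such a file contains; `srt_timestamp` and `srt_cue` are illustrative helpers, not SDK functions.

```python
def srt_timestamp(ms: int) -> str:
    """Convert milliseconds to the SRT timestamp form HH:MM:SS,mmm."""
    hours, rem = divmod(ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    seconds, millis = divmod(rem, 1_000)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d},{millis:03d}"


def srt_cue(index: int, start_ms: int, end_ms: int, text: str) -> str:
    """Format a single numbered SRT cue, terminated by a blank line."""
    return (
        f"{index}\n"
        f"{srt_timestamp(start_ms)} --> {srt_timestamp(end_ms)}\n"
        f"{text}\n\n"
    )
```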

Featured Function: LeMUR Framework

- Function introduction: LeMUR applies large language models to transcription results, for example to generate summaries.

- Procedure:

- Obtain a transcript and its ID:

transcript = transcriber.transcribe("sample.mp3")
transcript_id = transcript.id  # keep the ID if you need to reference the transcript later

- Generate a summary:

# In the Python SDK, LeMUR is reached through the transcript object;
# the API key is read from aai.settings.api_key.
summary = transcript.lemur.summarize()
print(summary.response)

- Sample output: "The meeting discussed project progress, which is scheduled for completion next week."


Notes

- Supported formats: Compatible with 33 audio/video formats, such as MP3 and WAV.

- Language settings: 99+ languages are supported; set language_code="zh" in the transcription config to specify Chinese.

- Billing: Billed per audio hour; see the official website for pricing.
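
Because billing is per audio hour, a rough cost estimate is a simple proportion of the audio duration. In the sketch below the rate is a placeholder argument rather than an actual AssemblyAI price, and pro rata charging for partial hours is an assumption; check the official pricing page for real figures and rounding rules.

```python
def estimate_cost(duration_seconds: float, rate_per_hour: float) -> float:
    """Estimate the charge for one file, assuming pro rata billing per audio hour."""
    if duration_seconds < 0 or rate_per_hour < 0:
        raise ValueError("duration and rate must be non-negative")
    return duration_seconds / 3600 * rate_per_hour
```

For example, a 30-minute recording at a hypothetical rate of 2.0 per hour would come to `estimate_cost(1800, 2.0)`, which is half of the hourly rate.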

By following the steps above, you can fully utilize the powerful features of Universal-2 to build efficient voice applications.

© Copyright notice

This article is copyrighted by AI Sharing Circle; please do not reproduce it without permission.
