Agent Leaderboard: AI Agent Performance Evaluation Rankings

General Introduction

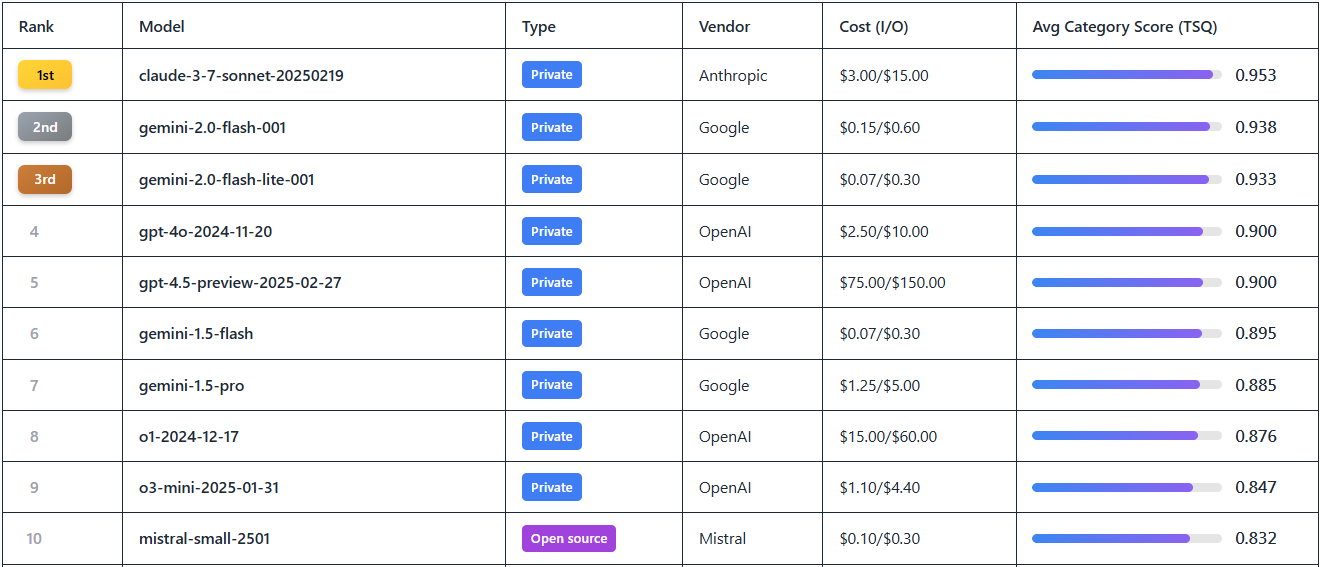

Agent Leaderboard is an online tool for evaluating AI agent performance, launched by Galileo AI on the Hugging Face platform. It tests 17 leading large language models (LLMs) on scenarios that range from simple API calls to complex multi-tool interactions. The test set draws on several established datasets, including BFCL, τ-bench, xLAM, and ToolACE. The site sets out to answer the question "How do AI agents perform in real business scenarios?" and to help developers and organizations choose the right model for their needs. The leaderboard is updated monthly. It shows each model's rank, score, cost, and other details, which is useful for teams building efficient AI agent systems. Users can compare open-source and private models side by side. For an analysis, see the related report: "Hugging Face Launches AI Agent Rankings: Who Leads in Tool Calling?"

Feature List

- Model Performance Ranking: Ranks 17 leading LLMs, such as Gemini-2.0 Flash and GPT-4o, by their Tool Selection Quality (TSQ) scores.

- Multidimensional assessment data: Provides cross-domain test results covering more than 390 scenarios in math, retail, aviation, API interactions, and more.

- Cost vs. efficiency: Shows each model's cost per million tokens (e.g., Gemini-2.0 Flash at $0.15 vs. GPT-4o at $2.50) to support price/performance analysis.

- Filtering and viewing tools: Lets you filter models by vendor, open-source/private status, score, and more, so you can quickly find the information you need.

- Open-source datasets: Provides download links to the test datasets so developers can study and validate the results.

- Regular updates: The leaderboard is updated monthly to reflect the latest model releases and performance data.

How to Use

How to access and use

Agent Leaderboard is an online tool that requires no installation. Open a browser and visit https://huggingface.co/spaces/galileo-ai/agent-leaderboard to start using it. Once the page loads, you see an intuitive leaderboard table. You can browse all publicly available data without registering or logging in. For deeper involvement, such as downloading datasets or making suggestions, you can sign up for a Hugging Face account.

Workflow

- Browse the leaderboard

- By default, the home page displays the current rankings of all 17 LLMs.

- The table columns include "Rank", "Model", "Vendor", "Score", "Cost", and "Type (Open Source/Private)".

- Example: Gemini-2.0 Flash ranks #1, with a score above 0.9 and a cost of $0.15 per million tokens.

- Filtering and Comparing Models

- Click on the filter boxes at the top of the table to select "Vendor" (e.g., Google, OpenAI), "Type" (open source or private), or "Score Range".

- For example, typing "OpenAI" narrows the table to models such as GPT-4o and o1, so you can easily compare their performance.

- To assess cost-effectiveness, sort by the "Cost" column to find the least expensive option.

- View detailed assessment data

- Clicking any model name (e.g., Gemini-1.5-Pro) opens a detailed performance report.

- The report shows the model's performance on each dataset, for example its retail-scenario scores on τ-bench and its API-interaction scores on ToolACE.

- The data is presented as charts that highlight the model's strengths and weaknesses in multi-tool tasks and long-context scenarios.

- Download Open Source Datasets

- At the bottom of the page there is a "Dataset" link. Click it to go to https://huggingface.co/datasets/galileo-ai/agent-leaderboard.

- There you can download the complete test datasets (e.g., the BFCL math question bank or xLAM's cross-domain data) for local analysis or further development.

- To download, sign in to your Hugging Face account. If you don't have one, click "Sign Up" in the upper-right corner of the page. Registration is simple and only requires email verification.


- Get Updates

- The leaderboard promises monthly updates. Follow Galileo AI's official blog (linked at the bottom of the page) to hear about newly added models.

- For example, community users recently asked for Claude 3.7 Sonnet and Grok 3 to be added. The team replied that these models will be added once their APIs are available.
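The vendor filter and cost sort from the workflow above are easy to reproduce offline if you copy a few rows of the table into a script. The following is a minimal sketch, not part of the leaderboard itself. The `ModelRow` structure and the helper names are hypothetical, and the rows use only the per-million-token prices quoted in this article.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ModelRow:
    """One leaderboard row copied by hand (hypothetical structure, not an official export)."""
    name: str
    vendor: str
    cost_per_m_tokens: float  # USD per million tokens, as shown in the "Cost" column


# Illustrative rows: only the prices quoted in this article are used.
ROWS = [
    ModelRow("Gemini-2.0 Flash", "Google", 0.15),
    ModelRow("GPT-4o", "OpenAI", 2.5),
    ModelRow("Mistral-small-2501", "Mistral AI", 0.5),
]


def filter_by_vendor(rows: list[ModelRow], vendor: str) -> list[ModelRow]:
    """Keep rows from one vendor, ignoring case, like typing "OpenAI" into the filter box."""
    wanted = vendor.casefold()
    return [row for row in rows if row.vendor.casefold() == wanted]


def sort_by_cost(rows: list[ModelRow]) -> list[ModelRow]:
    """Cheapest first, like an ascending sort on the "Cost" column."""
    return sorted(rows, key=lambda row: row.cost_per_m_tokens)


if __name__ == "__main__":
    print([row.name for row in filter_by_vendor(ROWS, "openai")])
    # ['GPT-4o']
    print([row.name for row in sort_by_cost(ROWS)])
    # ['Gemini-2.0 Flash', 'Mistral-small-2501', 'GPT-4o']
```

The case-insensitive match lets "openai" and "OpenAI" behave the same. Sorting returns a new list, so the original row order stays available for other comparisons.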

Key Features

- Interpreting Tool Selection Quality (TSQ) Scores

- TSQ is Agent Leaderboard's core evaluation metric. It measures how accurately a model uses tools.

- Example: select GPT-4o to see its TSQ score of 0.9. The breakdown shows that it performs well in multi-tool collaborative tasks but is slightly weaker in long-context scenarios.

- Usage advice: If your project involves complex workflows, choose a model with a TSQ higher than 0.85.

- Multi-Domain Test Results Analysis

- Click on "Evaluation Details" to see how the model performed in 14 benchmark tests.

- Example: Gemini-2.0 Flash scored 0.92 in BFCL (Math and Education) and 0.89 in ToolACE (API Interaction).

- Usage Scenario: Teams that need to process aviation data can refer to the τ-bench results to select models that specialize in that area.

- Cost Optimization Decision Making

- See the input/output price per million tokens in the "Cost" column of the table.

- Example: filtering for "Cost < $1" surfaces models such as Mistral-small-2501 ($0.50 per million tokens), which suit projects with a limited budget.

- Tip: Balance performance and expense by combining score and cost.
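The features above combine naturally into a shortlist. Keep models above the suggested 0.85 TSQ and apply a budget cap such as "Cost < $1". Then rank what remains by a simple TSQ-to-price ratio, and look up the best model on a specific benchmark. The sketch below shows one way to do this offline under stated assumptions:

- The `Candidate` structure and all function names are hypothetical.
- The TSQ-to-price ratio is a simple heuristic chosen for illustration, not a leaderboard metric.
- Apart from the figures quoted in this article, all scores are placeholders.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Candidate:
    """A model as read from the leaderboard (hypothetical structure)."""
    name: str
    tsq: float                 # overall Tool Selection Quality score
    cost_per_m_tokens: float   # USD per million tokens, from the "Cost" column
    benchmarks: dict[str, float] = field(default_factory=dict)  # per-dataset scores


def shortlist(models: list[Candidate], min_tsq: float = 0.85,
              max_cost: float = 1.0) -> list[Candidate]:
    """Keep models whose TSQ is above min_tsq and whose cost is below max_cost."""
    return [m for m in models if m.tsq > min_tsq and m.cost_per_m_tokens < max_cost]


def rank_by_value(models: list[Candidate]) -> list[tuple[str, float]]:
    """Rank by TSQ divided by cost per million tokens, highest ratio first."""
    ranked = []
    for m in models:
        if m.cost_per_m_tokens <= 0:
            raise ValueError(f"{m.name}: cost must be positive to compute a ratio")
        ranked.append((m.name, m.tsq / m.cost_per_m_tokens))
    return sorted(ranked, key=lambda item: item[1], reverse=True)


def best_for_benchmark(models: list[Candidate], benchmark: str) -> Optional[str]:
    """Model with the highest score on one benchmark, or None if no model reports it."""
    scored = [(m.name, m.benchmarks[benchmark]) for m in models if benchmark in m.benchmarks]
    if not scored:
        return None
    return max(scored, key=lambda item: item[1])[0]


# Prices, GPT-4o's TSQ and Gemini-2.0 Flash's BFCL/ToolACE scores come from this article
# (0.90 stands in for Gemini's "0.9+"); the placeholder models and their numbers are made up.
EXAMPLE_MODELS = [
    Candidate("Gemini-2.0 Flash", 0.90, 0.15, {"BFCL": 0.92, "ToolACE": 0.89}),
    Candidate("GPT-4o", 0.90, 2.5),
    Candidate("placeholder-model-a", 0.87, 0.5, {"ToolACE": 0.91}),
    Candidate("placeholder-model-b", 0.80, 0.3),
]

if __name__ == "__main__":
    picks = shortlist(EXAMPLE_MODELS)
    print([m.name for m in picks])  # ['Gemini-2.0 Flash', 'placeholder-model-a']
    for name, ratio in rank_by_value(picks):
        print(f"{name}: {ratio:.2f}")  # TSQ per dollar of per-million-token price
    print(best_for_benchmark(EXAMPLE_MODELS, "ToolACE"))  # placeholder-model-a
    print(best_for_benchmark(EXAMPLE_MODELS, "τ-bench"))  # None
```

Because the ratio rewards cheap models heavily, a very low price can outweigh a meaningful score gap. Applying the TSQ threshold first, as done here, keeps that from promoting weak models. `best_for_benchmark` returns `None` when no candidate reports the requested benchmark, rather than guessing.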

Caveats

- Data freshness: The current data is as of February 2025. Visit regularly for the latest rankings.

- Community feedback: If you want a new model added (e.g., Grok 3), leave a message on the Hugging Face page. The team responds based on API availability.

- Technical requirements: Browsing the page needs little bandwidth, but downloading the datasets requires a stable connection, so using a desktop computer is recommended.

With these steps, users can quickly get started with Agent Leaderboard. The tool offers practical support whether you are looking for a high-performance model or researching the technical details of AI agents.

© Copyright notes

Article copyright: AI Sharing Circle. All rights reserved. Please do not reproduce without permission.
