[Reprint] QwQ-32B's Tool Calling Capability and Agentic RAG Application

Background

A recent paper, "Search-R1: Training LLMs to Reason and Leverage Search Engines with Reinforcement Learning" (arxiv.org/pdf/2503.09516), has attracted a lot of attention. It proposes a new way to use reinforcement learning to train Large Language Models (LLMs) to reason and make use of search engines. Notably, some of its ideas coincide with what the Qwen team explored in the QwQ-32B model.

Alibaba's recently released QwQ-32B (qwenlm.github.io/zh/blog/qwq-32b/) integrates agent-related capabilities into a reasoning model. These capabilities let the model think critically while using tools and adjust its reasoning based on feedback from the environment. In the added_tokens.json file in the QwQ-32B model folder, you can see the special tokens added for tool calls and tool responses:

{
  "</think>": 151668,
  "</tool_call>": 151658,
  "</tool_response>": 151666,
  "<think>": 151667,
  "<tool_call>": 151657,
  "<tool_response>": 151665
}

This article uses Agentic RAG as an example to demonstrate the QwQ-32B model's tool-calling capabilities.

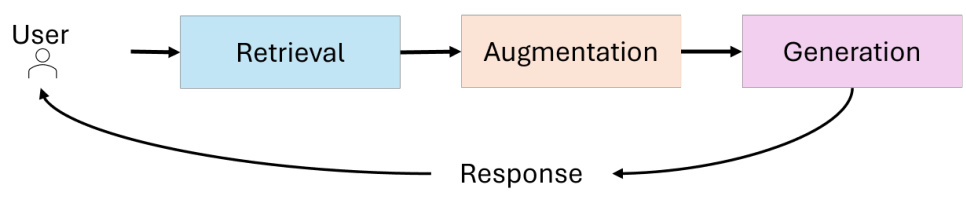

Agentic RAG vs. Traditional RAG

To better understand the benefits of Agentic RAG, we first need to distinguish it from the currently prevalent RAG paradigm:

- Traditional RAG: The vast majority of RAG projects today are essentially workflows, i.e., systems that orchestrate LLMs and tools through predefined code paths. Such a hand-built, hard-coded workflow consists of many interrelated but fragile parts such as routing, chunking, reranking, query interpretation, query expansion, source contextualization, and search engineering.

- Drawbacks: It is difficult to cover every corner case in a hand-orchestrated workflow. The approach is especially limited in complex scenarios that require multiple rounds of retrieval.

- Agentic RAG: Streamlines the process with an end-to-end approach. Simply equip the model with an API tool for web retrieval (this article uses the Tavily API, which comes with a certain amount of free credits), and the model handles the rest of the work itself, including but not limited to:

- Intent understanding (deciding whether a web search is needed)

- Rewriting or splitting the question

- API calls

- Process orchestration (including whether and how to conduct multi-step searches)

- Citing sources

- ...

Simply put, the core concept of Agentic RAG is: less structure, more intelligence. Less is more.

This matches Anthropic's definition of an agent: much like Deep Search, an agent must carry out the target task internally, and agents "dynamically direct their own processes and tool usage, maintaining control over how they accomplish tasks".

Overall process

The following figure illustrates the overall flow of Agentic RAG:

![QwQ-32B's Tool Calling Capability and Agentic RAG Application, image 1](https://aisharenet.com/wp-content/uploads/2025/03/b04be76812d1a15.jpg)

- Fill the user question into the prompt template.

- Call the model to generate new tokens. If no <tool_call> ... </tool_call> appears during generation, output the result directly.

- If <tool_call> ... </tool_call> appears, the model has issued a tool call request during its reasoning. Parse the request, execute web_search, wrap the result of the API call in the <tool_response> ... </tool_response> format, append it to the model's context, and call the model to generate again.

- Repeat the steps above until no more <tool_call> appears (or the request limit is reached) or <|im_end|> appears.
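The steps above can be sketched as a small driver loop. This is only a minimal sketch, not the article's notebook code: `agentic_loop`, `generate`, and `run_tool` are hypothetical names, `generate` is assumed to return only the newly generated text, and making one last generation call once the request limit is reached is a design choice of this sketch.

```python
import re
from typing import Callable

# Same pattern the article uses: a tool call at the very end of the text.
TOOL_CALL_RE = re.compile(r"<tool_call>(.*?)</tool_call>\s*$", re.DOTALL)


def agentic_loop(prompt: str,
                 generate: Callable[[str], str],
                 run_tool: Callable[[str], str],
                 max_calls: int = 5) -> str:
    """Alternate between generation and tool execution until an answer appears.

    generate(context) -> newly generated text only (hypothetical callable).
    run_tool(payload) -> tool result text for the raw <tool_call> payload.
    """
    context = prompt
    for _ in range(max_calls):
        new_text = generate(context)
        context += new_text
        match = TOOL_CALL_RE.search(new_text)
        if match is None:
            # No tool call: the model has produced its final answer.
            return context
        result = run_tool(match.group(1).strip())
        # Splice the tool output back into the context and generate again.
        context += f"\n<tool_response>\n{result}\n</tool_response>\n"
    # Request limit reached: let the model answer from what it has gathered.
    return context + generate(context)
```

In practice `generate` would wrap a call such as `model.generate(...)` with the stopping criteria described later, and `run_tool` would parse the payload and call the search API.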

The process is essentially the same as that described in the Search-R1 paper:

![QwQ-32B's Tool Calling Capability and Agentic RAG Application, image 2](https://aisharenet.com/wp-content/uploads/2025/03/32ec214ace69432.jpg)

Key Technology Points

- Prompt template:

from datetime import date

user_question = input('Please enter your question: ')
max_search_times = 5

prompt = f"""You are Qwen QwQ, a curious AI built for retrieval augmented generation.
You are at 2025 and current date is {date.today()}.
You have access to the web_search tool to retrieve relevant information to help answer user questions.
You can use web_search tool up to {max_search_times} times to answer a user's question, but try to be efficient and use as few as possible.
Below are some guidelines:
- Use web_search for general internet queries, like finding current events or factual information.
- Always provide a final answer in a clear and concise manner, with citations for any information obtained from the internet.
- If you think you need to use a tool, format your response as a tool call with the `action` and `action_input` within <tool_call>...</tool_call>, like this:\n<tool_call>\n{{ "action": "web_search", "action_input": {{ "query": "current stock price of Tesla" }} }}\n</tool_call>.
- After using a tool, continue your reasoning based on the web_search result in <tool_response>...</tool_response>.
- Remember that if you need multi-turn web_search to find relevant information, make sure you conduct all search tasks before you provide a final answer.
---
User Question:{user_question}"""

- Custom stopping criteria:

Autoregressive generation stops as soon as the generated text ends with a match for the regular expression <tool_call>(.*?)</tool_call>\s*$:

from transformers import (
    AutoModelForCausalLM,
    AutoTokenizer,
    StoppingCriteria,
    StoppingCriteriaList
)
import torch
import re

tool_call_regex = r"<tool_call>(.*?)</tool_call>\s*$"
end_regex = r"<\|im_end\|>\s*$"

# Watch for both: <tool_call> or <|im_end|>
class RegexStoppingCriteria(StoppingCriteria):
    def __init__(self, tokenizer, patterns):
        self.patterns = patterns
        self.tokenizer = tokenizer

    def __call__(self, input_ids: torch.LongTensor, scores: torch.FloatTensor, **kwargs) -> bool:
        # Decode the full sequence so far and check whether it ends with any pattern
        decoded_text = self.tokenizer.decode(input_ids[0])
        for pattern in self.patterns:
            if re.search(pattern, decoded_text, re.DOTALL):
                return True
        return False

# `tokenizer` is assumed to be loaded already, e.g. with AutoTokenizer.from_pretrained(...)
stopping_criteria = StoppingCriteriaList([
    RegexStoppingCriteria(
        tokenizer,
        patterns=[tool_call_regex, end_regex]
    )
])
# model.generate(..., stopping_criteria=stopping_criteria)  # pass in the stopping criteria
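Once generation stops on a tool call, the request still has to be parsed before web_search can run. The following is a minimal sketch of that step, assuming the JSON shape shown in the prompt template (`action` plus `action_input.query`). The function name `parse_tool_call` and its return format are hypothetical, and it should be applied to the newly generated text only, since the prompt itself contains an example tool call.

```python
import json
import re
from typing import Optional

TOOL_CALL_RE = re.compile(r"<tool_call>(.*?)</tool_call>\s*$", re.DOTALL)


def parse_tool_call(generated_text: str) -> Optional[dict]:
    """Return {"action": ..., "query": ...} for a trailing tool call, else None."""
    match = TOOL_CALL_RE.search(generated_text)
    if match is None:
        return None
    try:
        payload = json.loads(match.group(1))
    except json.JSONDecodeError:
        return None  # malformed JSON: treat it as no usable tool call
    if not isinstance(payload, dict):
        return None
    action_input = payload.get("action_input")
    if payload.get("action") != "web_search" or not isinstance(action_input, dict):
        return None
    query = action_input.get("query")
    if not isinstance(query, str) or not query.strip():
        return None
    return {"action": "web_search", "query": query.strip()}
```

Returning None for malformed requests keeps the caller simple: it can either end the loop or feed an error message back to the model as a tool response.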

- Web search API:

The search API used in this walkthrough is the Tavily API, which offers a certain amount of free credits to make experimentation and replication easier. The Tavily API lets developers integrate web search into their applications through simple API calls.
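As a rough illustration of how search results could be handed back to the model, here is a minimal sketch. The helper names `format_tool_response` and `web_search` are hypothetical, and the JSON layout inside the tags is an assumption rather than the notebook's format. The `client` argument is assumed to behave like `TavilyClient` from the tavily-python package, whose `search` call returns a dict with a `results` list of `title`/`url`/`content` entries.

```python
import json


def format_tool_response(results: list, max_results: int = 5) -> str:
    """Wrap search hits in <tool_response> tags as a JSON list."""
    trimmed = [
        {
            "title": r.get("title", ""),
            "url": r.get("url", ""),        # kept so the model can cite sources
            "content": r.get("content", ""),
        }
        for r in results[:max_results]
    ]
    body = json.dumps(trimmed, ensure_ascii=False, indent=2)
    return f"<tool_response>\n{body}\n</tool_response>"


def web_search(client, query: str, max_results: int = 5) -> str:
    """Run one search and return it in tool-response form.

    `client` is assumed to expose search(query=..., max_results=...) and to
    return a dict containing a "results" list.
    """
    response = client.search(query=query, max_results=max_results)
    return format_tool_response(response.get("results", []), max_results)
```

With the real client this would be called roughly as `web_search(TavilyClient(api_key=...), "QwQ-32B")`. Keeping only the title, URL, and content limits how much context each search consumes.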

Practice Code

For detailed practice code, please refer to the following link:

Test Cases

Test question: Please give me more information about the QwQ-32B model that Alibaba recently open-sourced.

Generated result: (see the notebook for the full output)

![QwQ-32B's Tool Calling Capability and Agentic RAG Application, image 3](https://aisharenet.com/wp-content/uploads/2025/03/7a838e98b513191.jpg)

![QwQ-32B's Tool Calling Capability and Agentic RAG Application, image 4](https://aisharenet.com/wp-content/uploads/2025/03/0a043ad18e517ec.jpg)

![QwQ-32B's Tool Calling Capability and Agentic RAG Application, image 5](https://aisharenet.com/wp-content/uploads/2025/03/ffccf8080820002.jpg)

The results show that the reasoning model autonomously performs intent understanding (deciding whether a web search is needed) and search keyword generation (rewriting or splitting the question). The model also considers potential multi-round search scenarios. After triggering a web search, the model generates a final report based on the search results.

In this case, the model made only one search API call. This may be because the test question is simple, or because the base model is not yet capable enough to trigger complex multi-round searches. It also suggests that fully unlocking the model's potential as an agent still requires post-training and targeted fine-tuning along the lines of Search-R1.

However, judging from the capabilities QwQ-32B already demonstrates, one retraining route looks likely to become the mainstream way to develop and deploy agents. It combines well-designed synthetic (or manually curated) training data with reinforcement learning or SFT for specific scenarios, and masks out the loss on the tokens of the output returned by the tool interface. Through retraining, the various actions and boundary cases can be accounted for in advance, which simplifies deployment and removes the need for hand-designed workflow orchestration. Section 3.1 of the Search-R1 paper describes the "Loss Masking for Retrieved Tokens" technique in detail: by loss-masking the retrieved tokens in PPO and GRPO, Search-R1 optimizes only the tokens the LLM itself generates, strengthening the model's ability to interact with search engines and reason.

In addition, Search-R1 supports multi-round retrieval and reasoning (Section 3.2 of the paper, "Text Generation with Interleaved Multi-turn Search Engine Call"): a search is triggered with <search> and </search>, the retrieved content is placed between <information> and </information>, and the final answer is output between <answer> and </answer>.
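To make the masking idea concrete, here is a minimal sketch of a token-level loss mask for QwQ-style tool responses. It is an illustration only, not Search-R1's implementation (which masks the content between its own <information> tags): the function name `build_loss_mask` is hypothetical, the token ids come from the added_tokens.json listing above, and masking the delimiter tokens themselves is an assumption, made because the harness rather than the model inserts them.

```python
from typing import List

TOOL_RESPONSE_START = 151665  # "<tool_response>" in added_tokens.json
TOOL_RESPONSE_END = 151666    # "</tool_response>" in added_tokens.json


def build_loss_mask(token_ids: List[int]) -> List[int]:
    """Return 1 for tokens that contribute to the loss, 0 for tool output."""
    mask = []
    inside = False
    for tok in token_ids:
        if tok == TOOL_RESPONSE_START:
            inside = True
        # Tokens inside the span (delimiters included) get no gradient signal.
        mask.append(0 if inside else 1)
        if tok == TOOL_RESPONSE_END:
            inside = False
    return mask


# Usage: ids 7 and 8 stand in for retrieved text between the delimiters.
example_ids = [1, 2, TOOL_RESPONSE_START, 7, 8, TOOL_RESPONSE_END, 3]
example_mask = build_loss_mask(example_ids)
```

During training, a mask like this would be multiplied into the per-token loss so that only model-generated tokens are optimized. Prompt tokens are usually masked as well, which this sketch does not handle.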

© Copyright notice

The copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
