Big news! The world's first decentrally trained 10B model has finished training and will be open-sourced within a week!

The world's first decentrally trained model with 10B parameters is here! The Prime Intellect team announced that it has completed a landmark effort: a decentralized training network spanning the US, Europe, and Asia has successfully trained a large model with 10B parameters. The team describes this as a major step forward for AI training.

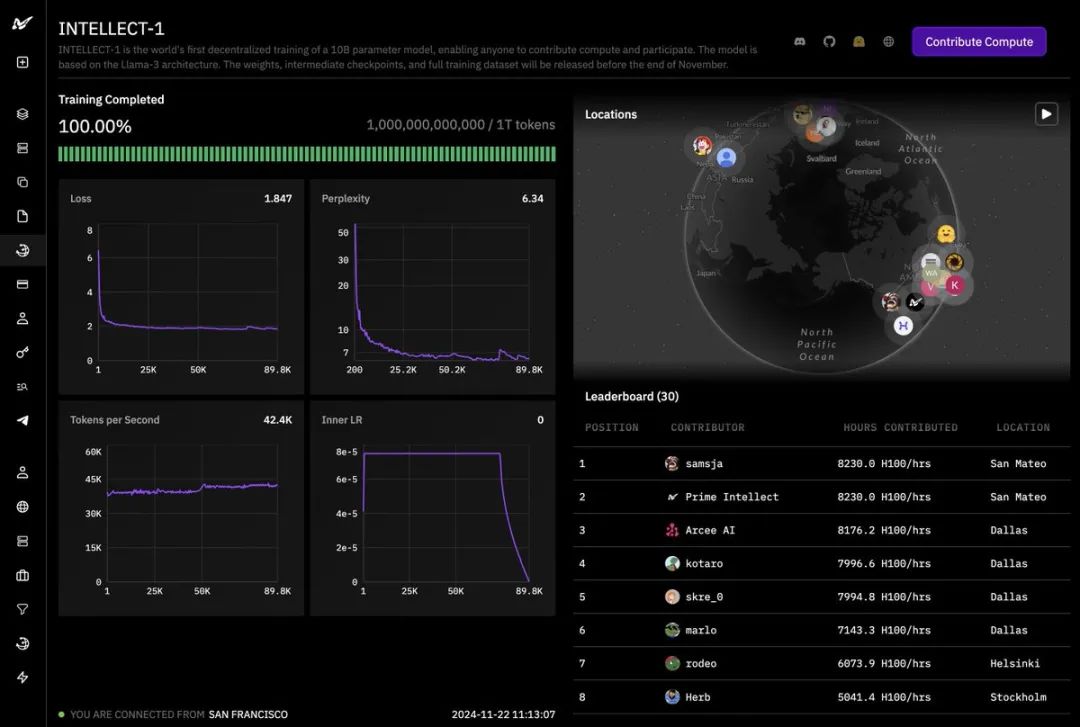

As the training dashboard shows, the project, called INTELLECT-1, has completed training on 1 trillion (1T) tokens.

Both the loss and perplexity curves trend steadily downward, and throughput (tokens processed per second) remains stable, indicating that the training run went smoothly.
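The two curves move together because perplexity is simply the exponential of the mean cross-entropy loss (measured in nats). A minimal sketch of that relationship follows; the loss values are made-up illustrations, not readings from the INTELLECT-1 dashboard, and the function name `perplexity` is an assumption.

```python
import math


def perplexity(mean_loss_nats: float) -> float:
    """Convert a mean cross-entropy loss (in nats) to perplexity."""
    return math.exp(mean_loss_nats)


# Hypothetical loss values, chosen only to show the trend.
for loss in (3.0, 2.5, 2.0):
    print(f"loss={loss:.2f} -> perplexity={perplexity(loss):.2f}")
```

A falling loss therefore always means a falling perplexity, and a loss of zero would correspond to a perplexity of exactly 1.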

This success would not have been possible without the support of many partners.

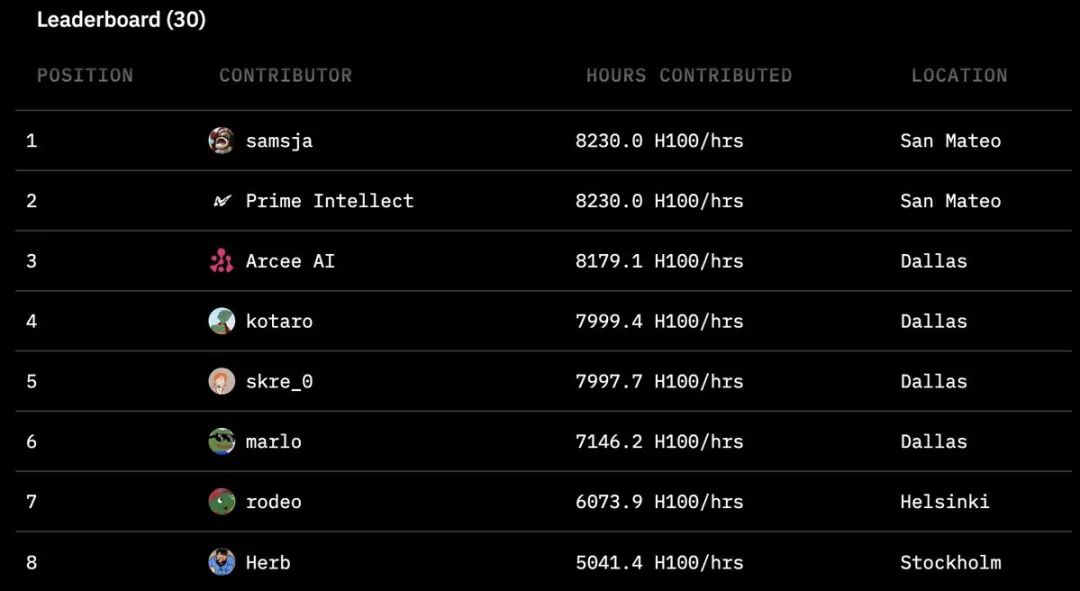

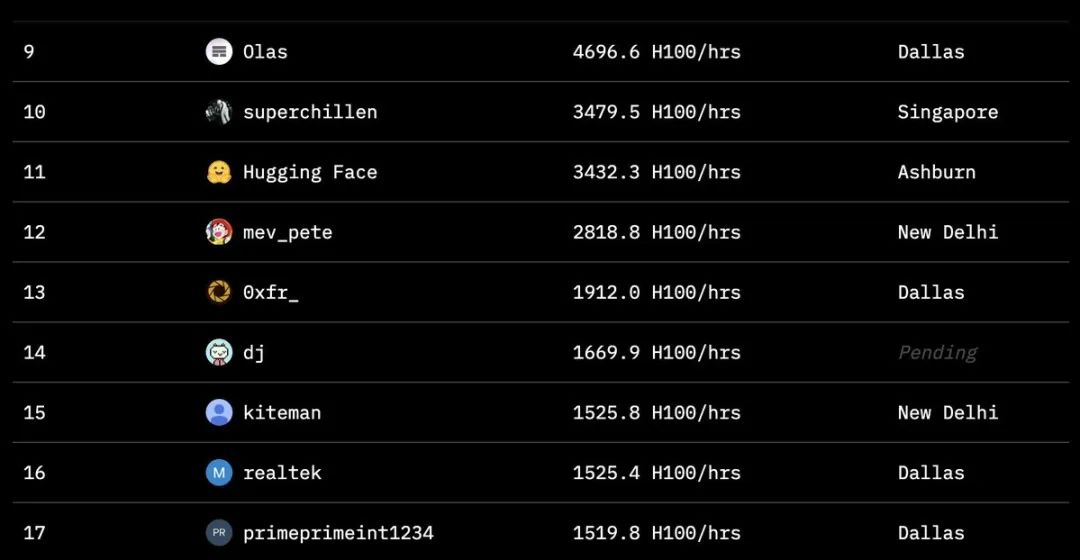

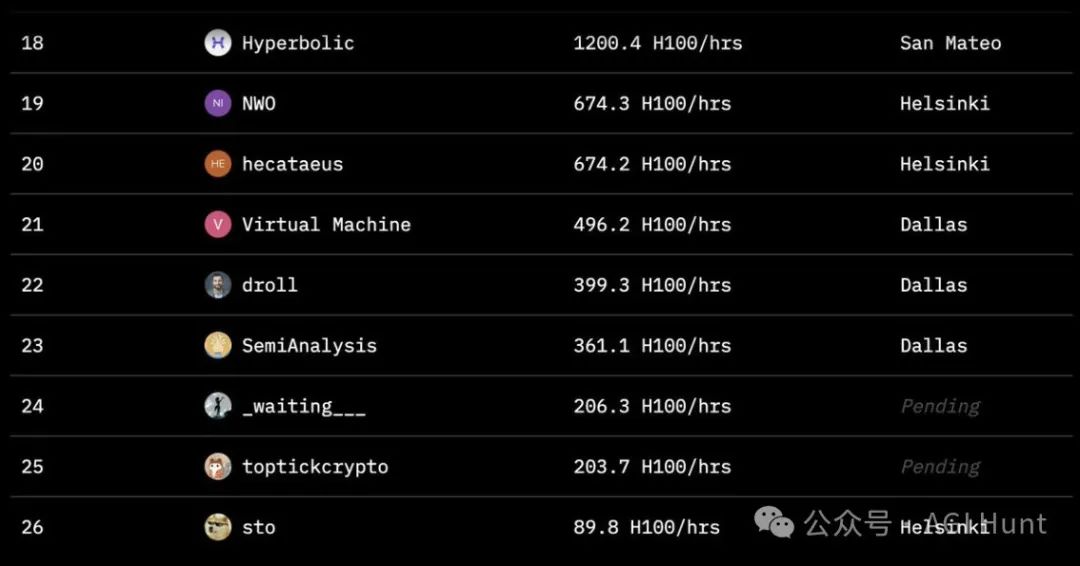

A number of organizations, including Hugging Face, SemiAnalysis, Arcee.ai, Hyperbolic Labs, Olas, Akash, Schelling AI, and others, contributed valuable compute resources to this training run. This model of cooperation demonstrates a new type of collaboration in AI.

As the project's leaderboard shows, contributors from around the globe provided a large amount of compute time. The top contributor logged 8,230 hours, and participants were spread across San Mateo, Dallas, Helsinki, and Stockholm. This globally distributed pooling of compute means AI training is no longer limited to the data centers of a handful of tech giants.

On a technical level, the project's innovations are equally impressive.

The team adopted the DiLoCo distributed training technique to address the challenges of cross-region training. To cope with the various challenges of a distributed environment, the research team also implemented fault-tolerant training and asynchronous distributed checkpointing.
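For readers unfamiliar with DiLoCo, the following is a minimal sketch of its general idea, not the INTELLECT-1 implementation. Each worker takes many local optimizer steps on its own data, and workers communicate only once per round to average their parameter changes (the "pseudo-gradient"), which an outer optimizer then applies. The toy objective, the function names (`local_gradient`, `diloco_train`), and all hyperparameters are assumptions chosen for illustration. The published DiLoCo method uses AdamW for the inner steps and Nesterov momentum for the outer step; this sketch keeps the Nesterov outer step but uses plain SGD inside to stay short.

```python
def local_gradient(theta, shard):
    """Gradient of the toy loss 0.5 * mean((theta - x)^2) over one worker's data."""
    return theta - sum(shard) / len(shard)


def diloco_train(shards, theta=0.0, outer_rounds=50, inner_steps=10,
                 inner_lr=0.1, outer_lr=0.7, momentum=0.9):
    """Toy DiLoCo-style loop on a single scalar parameter (illustrative only)."""
    velocity = 0.0
    for _ in range(outer_rounds):
        deltas = []
        # In a real system each worker runs this inner loop in parallel,
        # possibly on another continent, with no communication.
        for shard in shards:
            local = theta  # every worker starts from the shared parameters
            for _ in range(inner_steps):
                local -= inner_lr * local_gradient(local, shard)
            deltas.append(theta - local)  # this worker's pseudo-gradient

        # The only communication per round: average the pseudo-gradients.
        pseudo_grad = sum(deltas) / len(deltas)

        # Outer step: SGD with Nesterov momentum on the averaged pseudo-gradient.
        velocity = momentum * velocity + pseudo_grad
        theta -= outer_lr * (pseudo_grad + momentum * velocity)
    return theta


if __name__ == "__main__":
    # Three hypothetical workers, each holding a different data shard.
    example_shards = [[1.0, 2.0, 3.0], [5.0, 7.0], [10.0]]
    print(diloco_train(example_shards))
```

Because workers synchronize once per round rather than once per step, the number of communication rounds drops by roughly a factor of `inner_steps`, which is what makes training over slow cross-region links plausible.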

For memory optimization, the team upgraded to FSDP2, which resolved the memory allocation issues present in FSDP1.

Meanwhile, applying tensor parallelism significantly improved training efficiency.
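As a rough illustration of tensor parallelism in general (not the team's actual code), the sketch below splits a weight matrix by columns across hypothetical devices, lets each one compute its slice of the output, and concatenates the slices. The helper names and the tiny matrices are assumptions for illustration; real systems run the shards on separate GPUs and use collective communication instead of list concatenation.

```python
def matmul(x, w):
    """Plain matrix product: x is (rows x k), w is (k x cols)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*w)]
            for row in x]


def split_columns(w, parts):
    """Split w into `parts` equal column blocks, one per hypothetical device."""
    cols = len(w[0])
    assert cols % parts == 0, "column count must divide evenly in this sketch"
    step = cols // parts
    return [[row[i * step:(i + 1) * step] for row in w] for i in range(parts)]


def column_parallel_matmul(x, w, parts):
    """Column-parallel product: each shard yields a slice of the output columns."""
    shards = split_columns(w, parts)
    # Each partial product would run on its own device in practice.
    partials = [matmul(x, shard) for shard in shards]
    # Stand-in for an all-gather: concatenate the column slices row by row.
    return [sum((p[r] for p in partials), []) for r in range(len(x))]


if __name__ == "__main__":
    x = [[1, 2], [3, 4]]
    w = [[1, 0, 2, 1], [0, 1, 1, 3]]
    print(column_parallel_matmul(x, w, parts=2) == matmul(x, w))
```

Each device only stores and multiplies its own block of the weights, so both memory and compute per device shrink as more devices share one layer.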

Behind these technical innovations is a strong research team working quietly. The project leaders especially thanked Tristan Rice and Junjie Wang for their contributions to fault-tolerant training, and Chien-Chin Huang and Iris Zhang for their work on asynchronous distributed checkpointing. Yifu Wang is also credited for his advice on tensor parallelism.

Even more exciting, the team has announced that it will publish a full open-source release within a week, including the base model, checkpoints, post-trained model, and training dataset. Researchers and developers around the world will soon be able to build on this model.

Some developers are already experimenting. One demonstrated an attempt at model inference across two 4090 graphics cards, one on the US West Coast and one in Europe. Although the network connection between the two locations was not ideal, the experiment showed the flexibility and adaptability of the model.

The success of this project is not just a technological breakthrough but also an important milestone in the democratization of AI.

It shows that global collaboration can break through the limitations of traditional AI training and bring more organizations and individuals into the wave of AI development.

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce without permission.
