Agentic Retrieval-Augmented Generation: An Overview of Agentic RAG Technology

Abstract

Large Language Models (LLMs), such as OpenAI's GPT-4, Google's PaLM, and Meta's LLaMA, have dramatically changed Artificial Intelligence (AI) by enabling human-like text generation and natural language understanding. However, their reliance on static training data limits their ability to respond to dynamic, real-time queries, often resulting in outdated or inaccurate output. Retrieval-Augmented Generation (RAG) has emerged as a solution that augments LLMs with real-time data retrieval to provide contextually relevant and timely responses. Despite its promise, traditional RAG is constrained by static workflows and lacks the flexibility needed for multi-step reasoning and complex task management.

Agentic Retrieval-Augmented Generation (Agentic RAG) overcomes these limitations by embedding autonomous AI agents into the RAG pipeline. These agents use agentic design patterns (reflection, planning, tool use, and multi-agent collaboration) to dynamically manage retrieval strategies, iteratively refine contextual understanding, and adapt workflows to the requirements of complex tasks. This integration gives Agentic RAG systems unparalleled flexibility, scalability, and context-awareness across a wide range of applications.

This review comprehensively explores Agentic RAG, starting with its underlying principles and the evolution of the RAG paradigm. It details the categorization of Agentic RAG architectures, highlights their key applications in industries such as healthcare, finance and education, and explores practical implementation strategies. In addition, it discusses the challenges of scaling these systems, ensuring ethical decision-making, and optimizing the performance of real-world applications, while providing detailed insights into frameworks and tools for implementing Agentic RAG.

Keywords. Large Language Models (LLMs) - Artificial Intelligence (AI) - Natural Language Understanding - Retrieval Augmented Generation (RAG) - Agentic RAG - Autonomous AI Agents - Reflection - Planning - Tool Usage - Multi-Agent Collaboration - Agentic Patterns - Contextual Understanding - Dynamic Adaptation - Scalability - Real-Time Data Retrieval - Agentic RAG Classification - Healthcare Applications - Financial Applications - Education Applications - Ethical AI Decision Making - Performance Optimization - Multi-Step Reasoning

1 Introduction

Large Language Models (LLMs) [1, 2, 3], such as OpenAI's GPT-4, Google's PaLM, and Meta's LLaMA, have dramatically changed Artificial Intelligence (AI) by generating human-like text and performing complex natural language processing tasks. These models have driven innovation in areas such as conversational agents [4], automated content creation, and real-time translation. Recent advances have extended their capabilities to multimodal tasks such as text-to-image and text-to-video generation [5], enabling the creation and editing of images and videos from detailed prompts [6] and broadening the range of potential applications for generative AI.

Despite these advances, LLMs still face significant limitations due to their reliance on static pre-training data. This dependency often leads to outdated information, hallucinated responses [7], and an inability to adapt to dynamic real-world scenarios. These challenges underscore the need for systems that can integrate real-time data and dynamically refine responses to maintain contextual relevance and accuracy.

Retrieval-Augmented Generation (RAG) [8, 9] emerged as a promising solution to these challenges. RAG improves the relevance and timeliness of responses by combining the generative capabilities of LLMs with external retrieval mechanisms [10]. These systems retrieve real-time information from sources such as knowledge bases [11], APIs, or the Web, effectively bridging the gap between static training data and dynamic application requirements. However, traditional RAG workflows remain constrained by their linear, static design, which limits their ability to perform complex multi-step reasoning, integrate deep contextual understanding, and iteratively refine responses.

The evolution of agents [12] has further enhanced the capabilities of AI systems. Modern agents, including LLM-based and mobile agents [13], are intelligent entities capable of perceiving, reasoning, and performing tasks autonomously. These agents use agentic workflow patterns such as reflection [14], planning [15], tool use, and multi-agent collaboration [16], enabling them to manage dynamic workflows and solve complex problems.

The convergence of RAG and agentic intelligence has given rise to Agentic Retrieval-Augmented Generation (Agentic RAG) [17], a paradigm that integrates agents into the RAG pipeline. Agentic RAG employs dynamic retrieval strategies, contextual understanding, and iterative refinement [18], enabling adaptive and efficient information processing. Unlike traditional RAG, Agentic RAG uses autonomous agents to orchestrate retrieval, filter relevant information, and refine responses, and it excels in scenarios that demand both accuracy and adaptability.

This review explores the underlying principles, classification and applications of Agentic RAG. It provides a comprehensive overview of RAG paradigms such as Simple RAG, Modular RAG, and Graph RAG [19] and their evolution to Agentic RAG systems. Key contributions include a detailed categorization of Agentic RAG frameworks, applications in domains such as healthcare [20, 21], finance, and education [22], and insights into implementation strategies, benchmarking, and ethical considerations.

The paper is structured as follows: Section 2 introduces RAG and its evolution, emphasizing the limitations of traditional approaches. Section 3 details the principles of agentic intelligence and agentic patterns. Section 4 provides a categorization of Agentic RAG systems, including single-agent, multi-agent and graph-based frameworks. Section 5 examines applications of Agentic RAG, while Section 6 discusses implementation tools and frameworks. Section 7 focuses on benchmarks and datasets, and Section 8 concludes with future directions for Agentic RAG systems.

2 Foundations of Retrieval-Augmented Generation

2.1 Overview of Retrieval Augmented Generation (RAG)

Retrieval-Augmented Generation (RAG) represents a major advance in artificial intelligence, combining the generative power of large language models (LLMs) with real-time data retrieval. While LLMs have demonstrated exceptional capabilities in natural language processing, their dependence on static pre-training data often results in outdated or incomplete responses. RAG addresses this limitation by dynamically retrieving relevant information from external sources and incorporating it into the generation process, producing outputs that are contextually accurate and up to date.

2.2 Core components of RAG

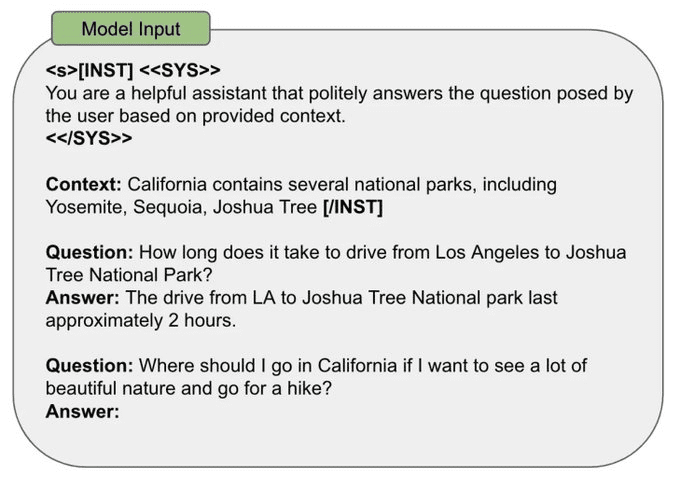

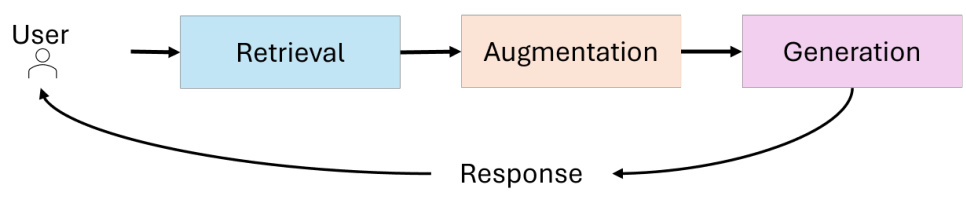

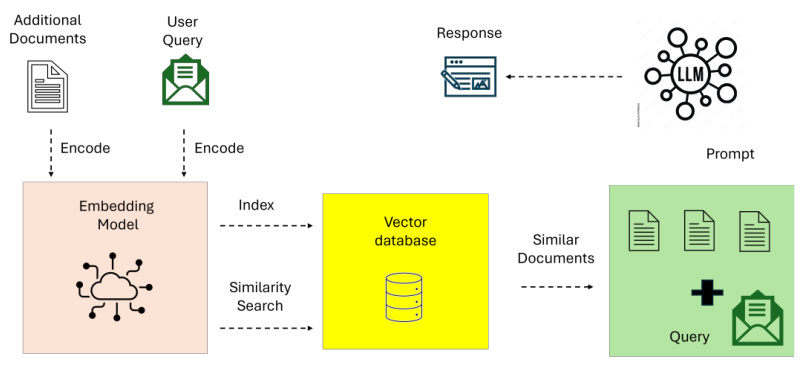

The architecture of the RAG system integrates three main components (see Figure 1):

- Retrieval: Responsible for querying external data sources such as knowledge bases, APIs, or vector databases. Advanced retrievers use dense vector search and Transformer-based models to improve retrieval accuracy and semantic relevance.

- Augmentation: Processes the retrieved data, extracting and summarizing the information most relevant to the query context.

- Generation: Combines the retrieved information with the LLM's pre-trained knowledge to produce coherent, contextually appropriate responses.

Figure 1: Core Components of RAG
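The three components above (retrieval, augmentation, generation) can be sketched as a minimal pipeline. This is an illustrative sketch under stated assumptions, not a reference implementation: `retrieve` uses naive word overlap as a stand-in for a real retriever, `augment` simply packs the top passages into a prompt, and `generate` is a hypothetical placeholder for any LLM call that maps a prompt to text.

```python
from typing import Callable, List


def retrieve(query: str, corpus: List[str], k: int = 2) -> List[str]:
    """Retrieval: rank documents by word overlap with the query (a toy stand-in retriever)."""
    query_terms = set(query.lower().split())
    scored = [(len(query_terms & set(doc.lower().split())), doc) for doc in corpus]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


def augment(query: str, passages: List[str]) -> str:
    """Augmentation: pack the retrieved passages into a grounded prompt for the LLM."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"


def rag_answer(query: str, corpus: List[str], generate: Callable[[str], str]) -> str:
    """Generation: pass the augmented prompt to any prompt -> text callable (hypothetical LLM)."""
    return generate(augment(query, retrieve(query, corpus)))
```

Keeping the three stages as separate functions mirrors the component split in Figure 1 and makes each stage independently replaceable, which is the property later paradigms build on.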

2.3 Evolution of the RAG paradigm

The field of Retrieval Augmented Generation (RAG) has made significant advances to cope with the increasing complexity of real-world applications where contextual accuracy, scalability and multi-step reasoning are critical. Starting from simple keyword-based retrieval, it has transformed into complex, modular and adaptive systems capable of integrating various data sources and autonomous decision-making processes. This evolution highlights the growing need for RAG systems to efficiently and effectively handle complex queries.

This section examines the evolution of the RAG paradigm through its key stages of development (Simple RAG, Advanced RAG, Modular RAG, Graph RAG, and Agentic RAG), together with their defining characteristics, strengths, and limitations. Understanding this evolution helps the reader appreciate the advances made in retrieval and generation capabilities and their applications across domains.

2.3.1 Simple RAG

Simple (naïve) RAG [23] is the most basic implementation of retrieval-augmented generation. As Figure 2 illustrates, it follows a straightforward retrieve-then-read workflow over static datasets. These systems rely on keyword-based retrieval techniques such as TF-IDF and BM25 to fetch documents, which are then used to augment the language model's generation.

Figure 2: An Overview of Naive RAG.
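To make the keyword-based retrieval step concrete, here is a compact BM25 scorer in plain Python. It is a sketch for illustration only: whitespace tokenization and the commonly used defaults k1 = 1.5 and b = 0.75 are assumptions, and production systems typically use an inverted index rather than scanning every document.

```python
import math
from collections import Counter
from typing import List, Tuple


def bm25_rank(query: str, docs: List[str], k1: float = 1.5, b: float = 0.75) -> List[Tuple[int, float]]:
    """Return (doc_index, score) pairs sorted by BM25 score, highest first."""
    if not docs:
        return []
    tokenized = [d.lower().split() for d in docs]
    n_docs = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n_docs
    # Document frequency: in how many documents each term appears.
    df = Counter(term for toks in tokenized for term in set(toks))
    scores = []
    for i, toks in enumerate(tokenized):
        tf = Counter(toks)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue  # purely lexical: no match, no contribution
            # Smoothed IDF (the "+1" variant keeps it non-negative).
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1)
            # Term-frequency saturation (k1) and document-length normalization (b).
            norm = tf[term] + k1 * (1 - b + b * len(toks) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append((i, score))
    return sorted(scores, key=lambda s: s[1], reverse=True)
```

Because matching is purely lexical, a query for "automobile" scores zero against a document that only says "car", and "cats" does not match "cat"; this is the lack-of-contextualization limitation listed below.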

Simple RAG is characterized by its simplicity and ease of implementation, and is suitable for tasks involving fact-based queries with minimal contextual complexity. However, it suffers from several limitations:

- Lack of contextualization: Retrieved documents often fail to capture the semantic nuances of a query because they rely on lexical matching rather than semantic understanding.

- Output fragmentation: Lack of advanced preprocessing or contextual integration often leads to incoherent or overly generic responses.

- Scalability issues: Keyword-based retrieval techniques tend to perform poorly on large datasets and often fail to identify the most relevant information.

Despite these limitations, the simple RAG system provides a critical proof of concept for combining retrieval with generation, laying the groundwork for more complex paradigms.

2.3.2 Advanced RAG

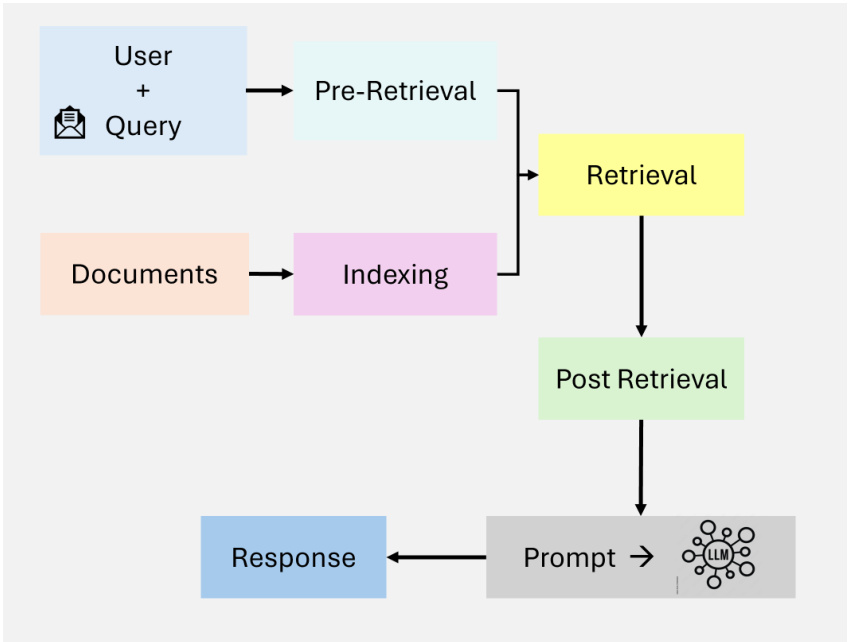

Advanced RAG [23] systems address the limitations of simple RAG by incorporating semantic understanding and enhanced retrieval techniques. Figure 3 highlights the semantic enhancements and the iterative, context-aware retrieval process of Advanced RAG. These systems use dense retrieval models such as Dense Passage Retrieval (DPR) and neural ranking algorithms to improve retrieval accuracy.

Figure 3: Overview of Advanced RAG

Key features of Advanced RAG include:

- Dense vector search: Queries and documents are represented in a high-dimensional vector space, yielding better semantic alignment between user queries and retrieved documents.

- Contextual re-ranking: Neural models re-rank the retrieved documents to prioritize the most contextually relevant information.

- Iterative retrieval: Advanced RAG introduces multi-hop retrieval mechanisms that enable reasoning over complex queries spanning multiple documents.
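Dense search followed by re-ranking is usually arranged as a two-stage pipeline: a cheap vector-similarity pass for recall, then a finer scorer applied only to a short list. The sketch below shows that control flow under explicit assumptions: `embed` and `rerank_score` are hypothetical callables (in practice a bi-encoder such as a DPR model would supply embeddings and a cross-encoder would score query-document pairs), and the toy versions used in testing carry no real semantics.

```python
import math
from typing import Callable, List, Sequence

Vector = Sequence[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def dense_retrieve_and_rerank(
    query: str,
    docs: List[str],
    embed: Callable[[str], Vector],
    rerank_score: Callable[[str, str], float],
    first_stage_k: int = 10,
    final_k: int = 3,
) -> List[str]:
    """Two-stage retrieval: embedding similarity for recall, then a finer scorer for precision."""
    q_vec = embed(query)
    # Stage 1: vector similarity over the whole collection.
    candidates = sorted(docs, key=lambda d: cosine(q_vec, embed(d)), reverse=True)[:first_stage_k]
    # Stage 2: re-rank only the short list with a (typically more expensive) pairwise scorer.
    return sorted(candidates, key=lambda d: rerank_score(query, d), reverse=True)[:final_k]
```

Restricting the expensive scorer to `first_stage_k` candidates is what keeps re-ranking affordable, although, as noted below, computational overhead remains a concern at scale.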

These advances make advanced RAG suitable for applications that require high precision and nuanced understanding, such as research synthesis and personalized recommendations. However, issues of computational overhead and limited scalability remain, especially when dealing with large datasets or multi-step queries.

2.3.3 Modular RAG

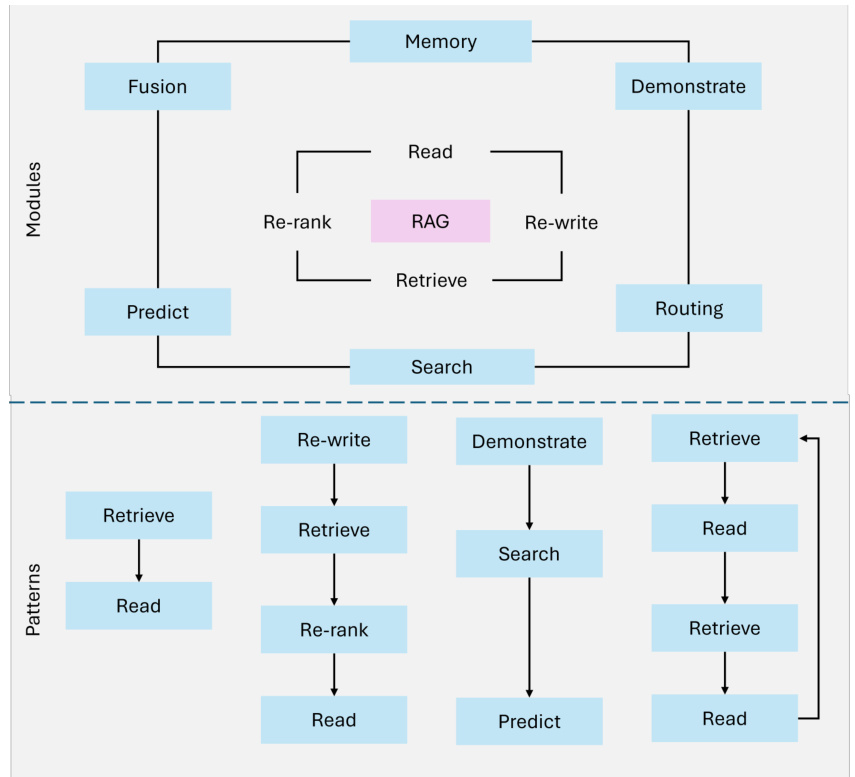

Modular RAG [23] represents a further evolution of the RAG paradigm, emphasizing flexibility and customization. These systems decompose the retrieval and generation processes into independent, reusable components, enabling domain-specific optimization and task adaptability. Figure 4 illustrates the modular architecture, showing hybrid retrieval strategies, composable pipelines, and external tool integration.

Key innovations of Modular RAG include:

- Hybrid retrieval strategies: Combine sparse retrieval methods (e.g., BM25) with dense retrieval techniques (e.g., Dense Passage Retrieval, DPR) to maximize accuracy across different query types.

- Tool integration: Incorporate external APIs, databases, or computational tools to handle specific tasks, such as real-time data analysis or domain-specific computations.

- Composable pipelines: Modular RAG allows retrievers, generators, and other components to be replaced, augmented, or reconfigured independently, enabling a high degree of adaptation to specific use cases.
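One common way to merge sparse and dense result lists in a hybrid retriever is reciprocal rank fusion (RRF), which combines rankings without calibrating their raw scores against each other. RRF is named here as one option, not as the method this survey prescribes. The sketch assumes each retriever returns an ordered list of document IDs; the constant k = 60 is the value conventionally used with RRF.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Fuse ranked lists of doc IDs: score(d) = sum over lists of 1 / (k + rank of d)."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by doc ID for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))


# Hypothetical outputs of a sparse (BM25) and a dense retriever for the same query.
bm25_hits = ["d3", "d1", "d7"]
dense_hits = ["d1", "d4", "d3"]
print(reciprocal_rank_fusion([bm25_hits, dense_hits]))  # ['d1', 'd3', 'd4', 'd7']
```

Documents that both retrievers rank reasonably well (`d1`, `d3`) rise to the top, while items found by only one retriever are kept but ranked lower.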

For example, a Modular RAG system designed for financial analytics might retrieve real-time stock prices through an API, analyze historical trends using dense retrieval, and generate actionable investment insights through a customized language model. This modularity and customization make Modular RAG well suited to complex, multi-domain tasks, providing both scalability and accuracy.

Figure 4: Overview of Modular RAG

2.3.4 Graph RAG

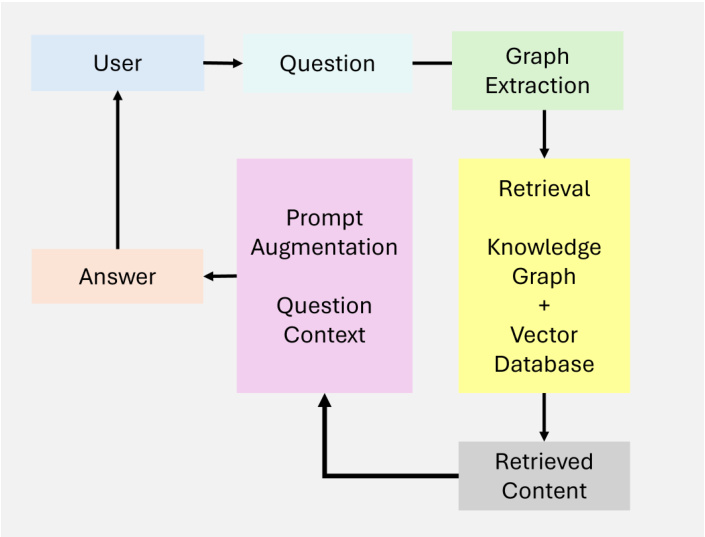

Graph RAG [19] extends traditional retrieval-augmented generation systems by integrating graph-based data structures, as shown in Figure 5. These systems exploit the relationships and hierarchies in graph data to enhance multi-hop reasoning and contextual enrichment. By incorporating graph-based retrieval, Graph RAG can produce richer and more accurate outputs, especially for tasks that require relational understanding.

Graph RAG is characterized by:

- Node connectivity: Captures and reasons about relationships between entities.

- Hierarchical knowledge management: Handles structured and unstructured data through graph hierarchies.

- Contextual enrichment: Adds relational understanding by leveraging graph paths.
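Multi-hop reasoning over a graph can be illustrated with a breadth-first traversal that collects relation paths from a query entity; the paths can then be passed to the generator as textual context. This is a minimal sketch: the in-memory adjacency dictionary and the example triples are hypothetical, and real Graph RAG systems typically query a graph store and rank or prune paths rather than returning all of them.

```python
from collections import deque
from typing import Dict, List, Tuple

# Knowledge graph as adjacency lists of (relation, target) edges.
Graph = Dict[str, List[Tuple[str, str]]]


def multi_hop_paths(graph: Graph, start: str, max_hops: int = 2) -> List[str]:
    """Breadth-first traversal returning every acyclic relation path of up to max_hops edges."""
    paths: List[str] = []
    # Each queue entry: (current node, nodes already on this path, path rendered as text).
    queue = deque([(start, (start,), start)])
    while queue:
        node, on_path, text = queue.popleft()
        if len(on_path) - 1 >= max_hops:  # hop budget for this path is used up
            continue
        for relation, target in graph.get(node, []):
            if target in on_path:  # skip cycles along this path
                continue
            hop_text = f"{text} -[{relation}]-> {target}"
            paths.append(hop_text)
            queue.append((target, on_path + (target,), hop_text))
    return paths


# Illustrative triples only; a real system would query a graph database.
kg: Graph = {
    "Marie Curie": [("born_in", "Warsaw"), ("won", "Nobel Prize in Physics")],
    "Warsaw": [("capital_of", "Poland")],
}
for path in multi_hop_paths(kg, "Marie Curie"):
    print(path)
```

The two-hop path linking Marie Curie to Poland through Warsaw is exactly the kind of relational context that flat passage retrieval can miss.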

However, Graph RAG has some limitations:

- Limited scalability: Reliance on graph structures can limit scalability, especially when many diverse data sources are involved.

- Data dependency: High-quality graph data is essential for meaningful output, which limits its application to unstructured or poorly annotated datasets.

- Integration complexity: Integrating graph data with unstructured retrieval systems increases the complexity of design and implementation.

Graph RAG is well suited for applications in medical diagnostics, legal research, and other fields where reasoning about structured relationships is critical.

Figure 5: Overview of Graph RAG

2.3.5 Agentic RAG

Agentic RAG represents a paradigm shift by introducing autonomous agents capable of dynamic decision-making and workflow optimization. Unlike static systems, Agentic RAG employs iterative refinement and adaptive retrieval strategies to handle complex, real-time, and multi-domain queries. This paradigm retains the modularity of the retrieval and generation process while adding agent-driven autonomy.

Key features of Agentic RAG include:

- Autonomous decision-making: Agents independently evaluate and manage retrieval strategies based on query complexity.

- Iterative refinement: Feedback loops improve retrieval accuracy and response relevance.

- Workflow optimization: Tasks are orchestrated dynamically to improve efficiency in real-time applications.
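Autonomous decision-making and iterative refinement can be sketched as a control loop in which the agent retrieves, judges whether the evidence is sufficient, and reformulates the query if it is not. All four callables (`retrieve`, `is_sufficient`, `reformulate`, `generate`) are hypothetical placeholders for a retriever and LLM prompts, and the fixed iteration budget is an assumption added to bound cost and latency.

```python
from typing import Callable, List


def agentic_answer(
    query: str,
    retrieve: Callable[[str], List[str]],
    is_sufficient: Callable[[str, List[str]], bool],
    reformulate: Callable[[str, List[str]], str],
    generate: Callable[[str, List[str]], str],
    max_iterations: int = 3,
) -> str:
    """Retrieve, self-evaluate, and refine the search query until evidence suffices or budget runs out."""
    evidence: List[str] = []
    current_query = query
    for _ in range(max_iterations):
        for passage in retrieve(current_query):
            if passage not in evidence:  # accumulate new evidence across rounds
                evidence.append(passage)
        if is_sufficient(query, evidence):
            break
        # Feedback loop: the agent rewrites the query based on what is still missing.
        current_query = reformulate(query, evidence)
    return generate(query, evidence)
```

The iteration cap is one simple answer to the computational-overhead and scalability concerns listed below: each extra round improves recall but adds retrieval and LLM calls.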

Despite these advances, Agentic RAG faces several challenges:

- Coordination complexity: Managing interactions between agents requires sophisticated coordination mechanisms.

- Computational overhead: Using multiple agents increases resource requirements for complex workflows.

- Scalability constraints: Although the architecture is scalable, its dynamic nature can strain resources under high query volumes.

Agentic RAG has excelled in areas such as customer support, financial analytics, and adaptive learning platforms, where dynamic adaptability and contextual accuracy are critical.

2.4 Challenges and limitations of traditional RAG systems

Traditional Retrieval Augmented Generation (RAG) systems have greatly extended the capabilities of large language models (LLMs) by integrating real-time data retrieval. However, these systems still face some key challenges that hinder their effectiveness in complex real-world applications. The most notable limitations center on context integration, multi-step reasoning, and scalability and latency issues.

2.4.1 Contextual integration

Even when RAG systems successfully retrieve relevant information, they often struggle to integrate it seamlessly into the generated response. The static nature of the retrieval process and limited context-awareness lead to fragmented, inconsistent, or overly generic output.

Example: A query such as "Recent advances in Alzheimer's disease research and their implications for early treatment" may retrieve relevant scientific papers and medical guidelines. However, traditional RAG systems often fail to synthesize these findings into a coherent explanation that links new treatments to specific patient scenarios. Similarly, for a query such as "What are the best sustainable practices for small-scale agriculture in arid regions?", a traditional system may retrieve papers on general agricultural methods but omit the sustainable practices that are critical for arid environments.

Table 1. Comparative Analysis of RAG Paradigms

| Paradigm | Key Features | Strengths |
|---|---|---|
| Naïve RAG | Keyword-based retrieval (e.g., TF-IDF, BM25) | Simple and easy to implement; suitable for fact-based queries |
| Advanced RAG | Dense retrieval models (e.g., DPR); neural ranking and re-ranking; multi-hop retrieval | High-precision retrieval; improved contextual relevance |
| Modular RAG | Hybrid retrieval (sparse and dense); tool and API integration; composable, domain-specific pipelines | High flexibility and customization; suitable for diverse applications; scalable |
| Graph RAG | Integration of graph-based structures; multi-hop reasoning; contextual enrichment via nodes | Relational reasoning capabilities; mitigates hallucinations; ideal for structured data tasks |
| Agentic RAG | Autonomous agents; dynamic decision-making; iterative refinement and workflow optimization | Adaptable to real-time changes; scalable for multi-domain tasks; high accuracy |

2.4.2 Multi-step reasoning

Many real-world queries require iterative or multi-hop reasoning - retrieving and synthesizing information from multiple steps. Traditional RAG systems are often ill-prepared to refine retrieval based on intermediate insights or user feedback, resulting in incomplete or disjointed responses.

Example: A complex query such as "What lessons from European renewable energy policies can be applied to developing countries, and what are the potential economic impacts?" requires reconciling multiple pieces of information, including policy data, their adaptation to the context of developing regions, and economic analysis. Traditional RAG systems often cannot connect these disparate elements into a coherent response.

2.4.3 Scalability and latency issues

As the number of external data sources increases, querying and ranking large data sets becomes increasingly computationally intensive. This leads to significant latency, which undermines the system's ability to provide timely responses in real-time applications.

Example: In time-sensitive settings such as financial analytics or real-time customer support, delays caused by querying multiple databases or processing large document sets can diminish the system's overall utility. In high-frequency trading, for instance, delays in retrieving market trends can lead to missed opportunities.

2.5 Agentic RAG: A Paradigm Shift

Traditional RAG systems, with their static workflows and limited adaptability, often struggle with dynamic, multi-step reasoning and complex real-world tasks. These limitations have motivated the integration of agentic intelligence, giving rise to Agentic RAG. By incorporating autonomous agents capable of dynamic decision-making, iterative reasoning, and adaptive retrieval strategies, Agentic RAG overcomes the inherent limitations of traditional RAG while preserving the modularity of earlier paradigms. This evolution enables more complex, multi-domain tasks to be solved with greater precision and contextual understanding, positioning Agentic RAG as a foundation for next-generation AI applications. In particular, Agentic RAG systems reduce latency through optimized workflows and incrementally refine their outputs, addressing challenges that have long limited the scalability and effectiveness of traditional RAG.

3 Core Principles and Background of Agentic Intelligence

Agentic intelligence forms the foundation of Agentic Retrieval-Augmented Generation (RAG) systems, enabling them to go beyond the static, reactive nature of traditional RAG. By integrating autonomous agents capable of dynamic decision-making, iterative reasoning, and collaborative workflows, Agentic RAG systems achieve greater adaptability and accuracy. This section explores the core principles underpinning agentic intelligence.

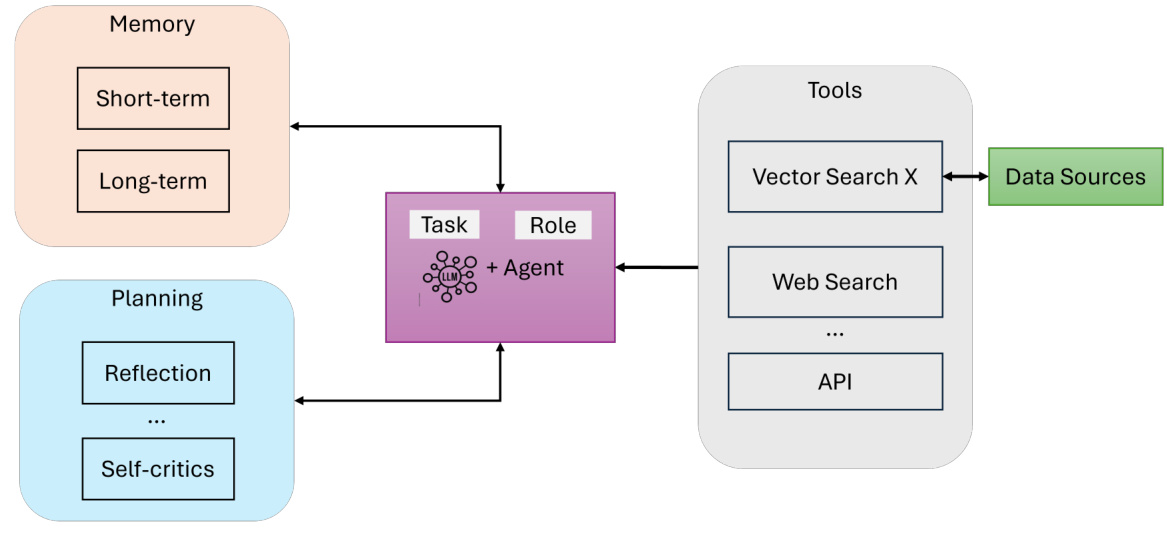

Components of an AI agent. Essentially, an AI agent consists of (see Figure 6):

- LLM (with defined roles and tasks): Serves as the agent's primary reasoning engine and dialog interface. It interprets user queries, generates responses, and maintains coherence.

- Memory (short-term and long-term): Captures context and relevant data across an interaction. Short-term memory [25] tracks the immediate dialog state, while long-term memory [25] stores accumulated knowledge and agent experience.

- Planning (reflection and self-critique): Guides the agent's iterative reasoning through reflection, query routing, or self-critique [26], ensuring that complex tasks are decomposed efficiently [15].

- Tools (vector search, web search, APIs, etc.): Extend the agent's capabilities beyond text generation by providing access to external resources, real-time data, or specialized computation.

Figure 6: An Overview of AI Agents
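The four components can be mapped onto a small data structure. This is a hypothetical sketch, not the interface of any particular agent framework: the LLM is any function from prompt to text, short-term memory is a bounded deque of recent turns (the size of 5 is an arbitrary assumption), long-term memory is a plain dictionary, planning is a prompt that asks for one step per line, and tools are looked up by name.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque, Dict, List


@dataclass
class Agent:
    role: str                    # defined role/task given to the LLM
    llm: Callable[[str], str]    # reasoning engine: prompt -> text
    tools: Dict[str, Callable[[str], str]] = field(default_factory=dict)
    short_term: Deque[str] = field(default_factory=lambda: deque(maxlen=5))  # recent turns only
    long_term: Dict[str, str] = field(default_factory=dict)                  # persistent knowledge

    def plan(self, task: str) -> List[str]:
        """Planning: ask the LLM to decompose a task, one sub-step per line."""
        reply = self.llm(f"Role: {self.role}\nBreak this task into steps, one per line:\n{task}")
        return [line.strip() for line in reply.splitlines() if line.strip()]

    def use_tool(self, name: str, arg: str) -> str:
        """Tools: reach beyond text generation (search, APIs, computation)."""
        if name not in self.tools:
            raise KeyError(f"unknown tool: {name}")
        return self.tools[name](arg)

    def step(self, user_message: str) -> str:
        """One dialog turn: build a prompt from role and both memories, call the LLM, record it."""
        self.short_term.append(f"user: {user_message}")
        facts = "; ".join(f"{k}={v}" for k, v in self.long_term.items())
        history = "\n".join(self.short_term)
        reply = self.llm(f"Role: {self.role}\nKnown facts: {facts}\nHistory:\n{history}")
        self.short_term.append(f"agent: {reply}")
        return reply
```

The bounded deque makes the short-term/long-term split explicit: old turns fall out automatically, while anything the agent should retain must be written to `long_term` deliberately.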

3.1 Agentic Patterns

Agentic patterns [27, 28] provide structured methods for guiding agent behavior in Agentic Retrieval-Augmented Generation (RAG) systems. These patterns enable agents to dynamically adapt, plan, and collaborate, ensuring that the system can handle complex real-world tasks accurately and at scale. Four key patterns form the basis of agentic workflows:

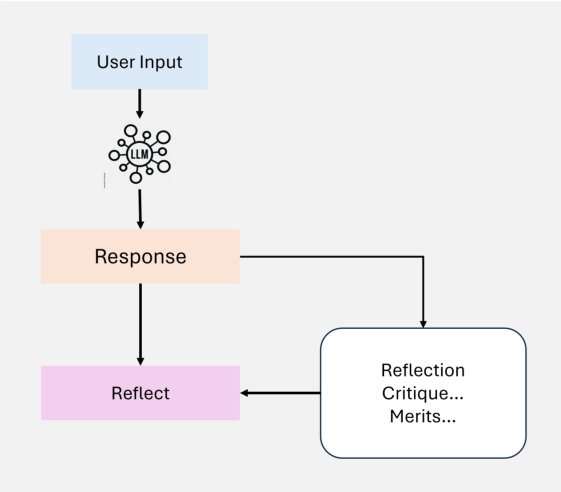

3.1.1 Reflection

Reflection is a foundational design pattern in the agent's workflow that enables the agent to iteratively evaluate and refine its output. By integrating a self-feedback mechanism, agents can identify and address errors, inconsistencies, and areas for improvement, thereby improving the performance of tasks such as code generation, text generation, and Q&A (as shown in Figure 7). In practice, reflection involves prompting the agent to critique its output in terms of correctness, style, and efficiency, and then incorporating this feedback into subsequent iterations. External tools, such as unit tests or web searches, can further enhance this process by validating results and highlighting gaps.

Figure 7: An Overview of Agentic Self-Reflection

In multi-agent systems, reflection can involve different roles, e.g., one agent generates the output while another agent critiques it, thus facilitating collaborative improvement. For example, in legal research, agents can iteratively refine responses to ensure accuracy and comprehensiveness by reassessing retrieved case law. Reflection has shown significant performance gains in studies such as Self-Refine [29], Reflexion [30] and CRITIC [26].
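The reflection pattern reduces to a generate-critique-revise loop. In this sketch `generate` and `revise` are hypothetical stand-ins for LLM calls, and `critique` is any checker that returns a list of problems (for example, failed unit tests or missing citations), with an empty list meaning the output is accepted. The round limit is an assumption to keep the loop bounded.

```python
from typing import Callable, List, Tuple


def reflect_and_refine(
    task: str,
    generate: Callable[[str], str],
    critique: Callable[[str], List[str]],
    revise: Callable[[str, str, List[str]], str],
    max_rounds: int = 3,
) -> Tuple[str, int]:
    """Iteratively critique and revise a draft; returns (final_output, revisions_made)."""
    draft = generate(task)
    for round_no in range(max_rounds):
        issues = critique(draft)  # e.g. failed tests, factual gaps, style problems
        if not issues:
            return draft, round_no
        draft = revise(task, draft, issues)  # feed the critique into the next attempt
    return draft, max_rounds
```

Because `critique` is just a function, it can equally be a second agent acting as reviewer, which matches the generator/critic split described above for multi-agent reflection.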

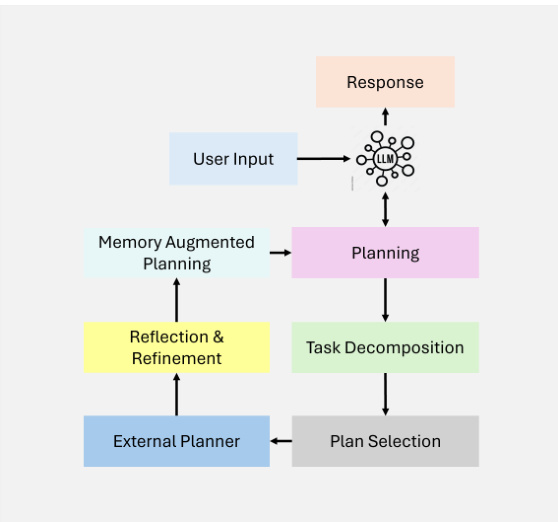

3.1.2 Planning

Planning [15] is a key design pattern in agent workflows that enables agents to autonomously decompose complex tasks into smaller, more manageable subtasks. This capability is critical for multi-hop reasoning and iterative problem solving in dynamic and uncertain scenarios (as shown in Figure 8).

Figure 8: An Overview of Agentic Planning

By utilizing planning, agents can dynamically determine the sequence of steps required to accomplish larger goals. This adaptability allows agents to handle tasks that cannot be pre-defined, ensuring flexibility in decision-making. While powerful, planning can produce less predictable results than deterministic workflows such as reflection. Planning is particularly suited to tasks that require dynamic adaptation, where predefined workflows are not sufficient. As the technology matures, its potential to drive innovative applications across domains will continue to grow.
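Planning can be illustrated as decomposing a goal into subtasks with dependencies and executing them in a valid order. The sketch below hard-codes a plan that an LLM planner might produce for the renewable-energy question from Section 2.4.2; the subtask names and the `run_subtask` executor are hypothetical, and the ordering comes from Python's standard `graphlib.TopologicalSorter` (Python 3.9+).

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, Set


def execute_plan(plan: Dict[str, Set[str]], run_subtask: Callable[[str, Dict[str, str]], str]) -> Dict[str, str]:
    """Run each subtask after its prerequisites, passing their results along as context."""
    results: Dict[str, str] = {}
    for subtask in TopologicalSorter(plan).static_order():
        deps = {d: results[d] for d in plan.get(subtask, set())}
        results[subtask] = run_subtask(subtask, deps)
    return results


# Hypothetical plan: each subtask maps to the set of subtasks it depends on.
plan = {
    "retrieve EU renewable policies": set(),
    "retrieve developing-country energy context": set(),
    "identify transferable lessons": {"retrieve EU renewable policies",
                                      "retrieve developing-country energy context"},
    "estimate economic impacts": {"identify transferable lessons"},
}

if __name__ == "__main__":
    outputs = execute_plan(plan, lambda name, deps: f"done: {name} (using {len(deps)} inputs)")
    for result in outputs.values():
        print(result)
```

A fixed plan keeps the example deterministic; an agent that plans dynamically would regenerate or edit `plan` after inspecting intermediate results, which is where the lower predictability mentioned above comes from.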

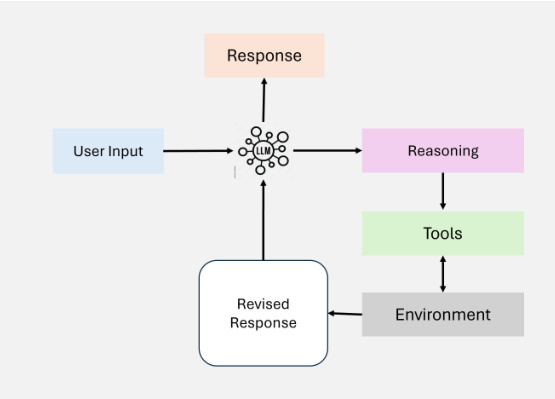

3.1.3 Tool use

Tool use enables agents to extend their capabilities by interacting with external tools, APIs, or computational resources, as shown in Figure 9. This pattern allows the agent to gather information, perform computations, and manipulate data beyond its pre-trained knowledge. By dynamically integrating tools into the workflow, agents can adapt to complex tasks and provide more accurate and contextually relevant output.

Figure 9: An Overview of Tool Use

Modern agentic workflows integrate tool use into a variety of applications, including information retrieval, computational reasoning, and interfacing with external systems. The implementation of this pattern has evolved significantly with the development of GPT-4's function-calling capabilities and of systems that can manage access to numerous tools. These developments have enabled complex agentic workflows in which agents autonomously select and execute the most relevant tools for a given task.

Although tool use has greatly enhanced agentic workflows, optimizing tool selection remains challenging, especially when many tools are available. Techniques inspired by retrieval-augmented generation (RAG), such as heuristic-based selection, have been proposed to address this issue.
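In the spirit of the retrieval-inspired selection mentioned above, a large tool inventory can be narrowed by "retrieving" tools whose descriptions match the request and exposing only the top matches to the model. In this hypothetical sketch the registry, tool names, stub implementations, and keyword-overlap heuristic are all illustrative assumptions; embedding the tool descriptions is a common alternative.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Tool:
    name: str
    description: str
    run: Callable[[str], str]


def select_tools(request: str, tools: List[Tool], top_k: int = 2) -> List[Tool]:
    """Rank tools by word overlap between the request and each description; keep the top_k."""
    words = set(request.lower().split())
    scored = [(len(words & set(t.description.lower().split())), t) for t in tools]
    scored = [pair for pair in scored if pair[0] > 0]  # drop tools with no overlap at all
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [t for _, t in scored[:top_k]]


# Hypothetical registry with stub implementations.
registry = [
    Tool("web_search", "search the web for recent news and pages", lambda q: f"[web results for {q}]"),
    Tool("stock_price", "get the latest stock price for a ticker symbol", lambda t: f"[price of {t}]"),
    Tool("sql_query", "run a sql query against the orders database", lambda q: f"[rows for {q}]"),
]

chosen = select_tools("what is the latest stock price of ACME", registry)
print([t.name for t in chosen])  # ['stock_price', 'web_search']
```

The second-place match here comes only from the shared word "the", which shows why lexical heuristics are a starting point rather than a robust selector.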

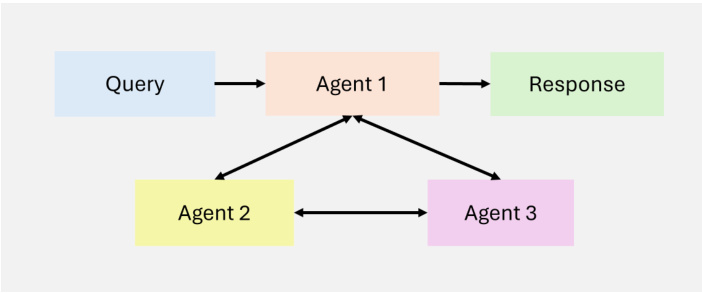

3.1.4 Multi-agent

Multi-agent collaboration [16] is a key design pattern in agent workflows that enables task specialization and parallel processing. Agents communicate with each other and share intermediate results, ensuring that the overall workflow remains efficient and coherent. By assigning subtasks to specialized agents, this pattern increases the scalability and adaptability of complex workflows. Multi-agent systems allow developers to break down complex tasks into smaller, more manageable subtasks that are assigned to different agents. This approach not only improves task performance, but also provides a powerful framework for managing complex interactions. Each agent has its own memory and workflow, which can include the use of tools, reflection, or planning, enabling dynamic and collaborative problem solving (see Figure 10).

Figure 10: An Overview of Multi-Agent

While multi-agent collaboration offers great potential, it is a far less predictable design pattern than more mature workflows such as reflection and tool use. That said, emerging frameworks such as AutoGen, CrewAI, and LangGraph provide new ways to implement effective multi-agent solutions.

These patterns are the cornerstone of the success of agent-based RAG systems, enabling them to dynamically adapt retrieval and generation workflows to meet the demands of diverse, dynamic environments. By utilizing these patterns, agents can handle iterative, context-aware tasks beyond the capabilities of traditional RAG systems.

4 Classification of Agentic RAG systems

Agentic Retrieval-Augmented Generation (RAG) systems can be categorized into distinct architectural frameworks based on their complexity and design principles. These include single-agent architectures, multi-agent systems, and hierarchical agentic architectures. Each framework addresses specific challenges and optimizes performance for different applications. This section provides a detailed categorization of these architectures, highlighting their features, benefits, and limitations.

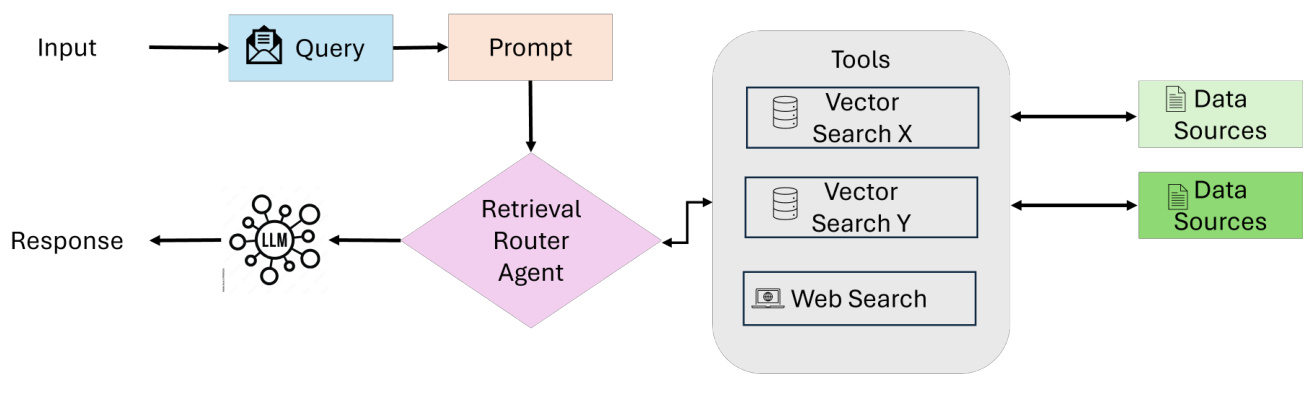

4.1 Single-Agent Agentic RAG: Router

Single-agent Agentic RAG [31] acts as a centralized decision-making system in which one agent manages retrieval, routing, and information integration (as shown in Figure 11). This architecture simplifies the system by consolidating these tasks in a single unified agent, making it particularly suitable for environments with a limited number of tools or data sources.

Workflow

- Query submission and evaluation: The process starts when the user submits a query. A coordinating agent (or master retrieval agent) receives the query and analyzes it to determine the most appropriate source of information.

- Selection of knowledge sources: Depending on the type of query, the coordinating agent chooses from a variety of search options:

  - Structured database: For queries that require access to tabular data, the system can use a Text-to-SQL engine that interacts with databases such as PostgreSQL or MySQL.

  - Semantic search: When dealing with unstructured information, it uses vector-based search to retrieve relevant documents (e.g., PDFs, books, organizational records).

  - Internet search: For real-time or broad contextual information, the system utilizes web search tools to access the latest online data.

  - Recommender system: For personalized or contextual queries, the system uses a recommendation engine to provide tailored suggestions.

- Data integration and LLM synthesis: Once relevant data has been retrieved from the selected source, it is passed to the Large Language Model (LLM), which brings together the collected information, integrating insights from multiple sources into a coherent and contextually relevant response.

- Output generation: Finally, the system generates a comprehensive, user-oriented answer to the original query. The response is presented in an actionable, concise format and optionally includes references or citations to the sources used.
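The routing decision at the heart of this workflow can be sketched as a function that maps a query to one of the four knowledge sources and then hands the retrieved context to the LLM. In a real system the coordinating agent would usually ask the LLM itself to classify the query; the keyword rules and handler stubs below are hypothetical placeholders that only illustrate the control flow.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical handlers standing in for Text-to-SQL, vector search, web search, and a recommender.
HANDLERS: Dict[str, Callable[[str], str]] = {
    "structured_db": lambda q: f"[SQL rows for: {q}]",
    "semantic_search": lambda q: f"[document passages for: {q}]",
    "web_search": lambda q: f"[web results for: {q}]",
    "recommender": lambda q: f"[recommendations for: {q}]",
}

# Keyword cues per route, checked in order: a stand-in for LLM-based query classification.
ROUTING_RULES: List[Tuple[str, Tuple[str, ...]]] = [
    ("structured_db", ("order", "invoice", "how many", "total")),
    ("web_search", ("latest", "today", "news", "current")),
    ("recommender", ("recommend", "suggest", "similar")),
]


def route(query: str) -> str:
    """Pick a knowledge source for the query; fall back to semantic search over documents."""
    q = query.lower()
    for source, cues in ROUTING_RULES:
        if any(cue in q for cue in cues):
            return source
    return "semantic_search"


def single_agent_rag(query: str, llm: Callable[[str], str]) -> str:
    """Route, retrieve from the chosen source, and let the LLM synthesize the answer."""
    source = route(query)
    context = HANDLERS[source](query)
    return llm(f"Context ({source}): {context}\nQuestion: {query}\nAnswer concisely and cite sources.")
```

Substring matching is deliberately crude (for instance, "border" would match the cue "order"), which is one reason LLM-based routing is preferred once the number of sources grows.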

Main features and advantages

- Centralized simplicity: A single agent handles all retrieval and routing tasks, making the architecture easy to design, implement and maintain.

- Efficiency and resource optimization: With fewer agents and simpler coordination, the system requires fewer computational resources and can process queries faster.

- Dynamic routing: The agent evaluates each query in real time and selects the most appropriate knowledge source (e.g., structured databases, semantic search, web search).

- Cross-tool versatility: Supports multiple data sources and external APIs, enabling both structured and unstructured workflows.

- Suitable for simple systems: Well suited to applications with well-defined tasks or limited integration requirements (e.g., file retrieval, SQL-based workflows).

Figure 11: An Overview of Single Agentic RAGs

Use Case: Customer Support

Prompt: Can you tell me the delivery status of my order?

System process (single-agent workflow):

- Query submission and evaluation:

  - The user submits the query, which is received by the coordinating agent.

  - The coordinating agent analyzes the query and determines the most appropriate sources of information.

- Selection of knowledge sources:

  - Retrieve tracking details from the order management database.

  - Fetch real-time updates from the courier's API.

  - Optionally, run a web search to identify local conditions affecting delivery, such as weather or logistical delays.

- Data integration and LLM synthesis:

  - Pass the relevant data to the LLM, which consolidates the information into a coherent response.

- Output generation:

  - The system generates an actionable, concise response that provides real-time tracking updates and possible alternatives.

Response:

Integrated response: "Your package is currently in transit and is expected to arrive tomorrow evening. Real-time tracking from UPS shows it is at a regional distribution center."

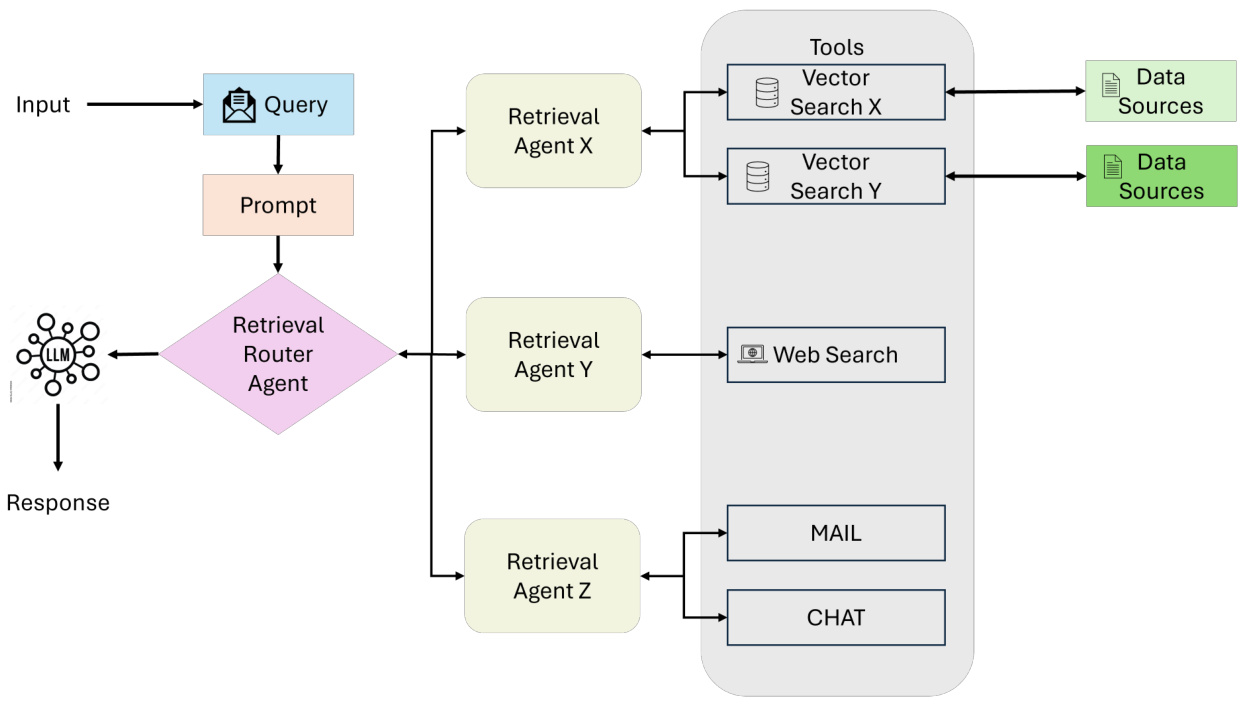

4.2 Multi-agent RAG system

Multi-agent RAG [31] represents a modular and scalable evolution of the single-agent architecture, designed to handle complex workflows and diverse query types by leveraging multiple specialized agents (as shown in Figure 12). Rather than relying on a single agent to manage all tasks (reasoning, retrieval, and response generation), the system distributes responsibilities across multiple agents, each optimized for a specific role or data source.

Workflow

- Query submission: The process starts with a user query, which is received by a coordinating agent or master retrieval agent. This agent acts as the central coordinator and delegates the query to specialized retrieval agents based on the query's requirements.

Figure 12: An Overview of Multi-Agent Agentic RAG Systems

- Specialized retrieval agents: The query is distributed across multiple retrieval agents, each focusing on a specific type of data source or task. For example:

  - Agent 1: Handles structured queries, such as interacting with SQL-based databases like PostgreSQL or MySQL.

  - Agent 2: Manages semantic search to retrieve unstructured data from sources such as PDFs, books, or internal records.

  - Agent 3: Focuses on retrieving real-time public information from web searches or APIs.

  - Agent 4: Specializes in recommender systems that provide contextually relevant suggestions based on user behavior or profiles.

- Tool access and data retrieval: Each agent routes the query to the appropriate tool or data source within its domain, for example:

  - Vector search: For semantic relevance.

  - Text-to-SQL: For structured data.

  - Web search: For real-time public information.

  - APIs: For accessing external services or proprietary systems.

  Retrieval runs in parallel across agents, enabling efficient processing of diverse query types.

- Data integration and LLM synthesis: Once retrieval is complete, the data from all agents is passed to the Large Language Model (LLM). The LLM consolidates the retrieved information into a coherent, contextually relevant response, integrating insights from multiple sources.

- Output generation: The system generates a comprehensive response that is delivered back to the user in an actionable, concise format.
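The parallel fan-out and merge steps can be sketched with Python's standard `concurrent.futures` module. The three agent functions are hypothetical stubs for the SQL, semantic-search, and web agents; the sketch shows only the coordination pattern (parallel delegation, then merging evidence into a single LLM context) and is not a production orchestrator.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

# Hypothetical specialized agents; each returns a list of evidence strings.
def structured_agent(query: str) -> List[str]:
    return [f"SQL result for '{query}'"]

def semantic_agent(query: str) -> List[str]:
    return [f"Paper excerpt about '{query}'"]

def web_agent(query: str) -> List[str]:
    return [f"News item about '{query}'"]

AGENTS: Dict[str, Callable[[str], List[str]]] = {
    "structured": structured_agent,
    "semantic": semantic_agent,
    "web": web_agent,
}

def coordinate(query: str,
               agents: Dict[str, Callable[[str], List[str]]] = AGENTS) -> Dict[str, List[str]]:
    """Send the query to every agent in parallel and collect results by agent name."""
    with ThreadPoolExecutor(max_workers=max(1, len(agents))) as pool:
        futures = {name: pool.submit(fn, query) for name, fn in agents.items()}
        return {name: fut.result() for name, fut in futures.items()}

def build_prompt(query: str, results: Dict[str, List[str]]) -> str:
    """Merge per-agent evidence into one context block for the LLM."""
    lines = [f"Question: {query}", "Evidence:"]
    for name in sorted(results):
        for item in results[name]:
            lines.append(f"- ({name}) {item}")
    return "\n".join(lines)

if __name__ == "__main__":
    q = "renewable energy adoption in Europe"
    print(build_prompt(q, coordinate(q)))
```

Threads suit I/O-bound agents such as database queries and HTTP calls. CPU-heavy agents would be better served by a process pool.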

Main features and advantages

- Modularity: Each agent operates independently, allowing agents to be added or removed as system requirements change.

- Scalability: Parallel processing across multiple agents enables the system to handle high query volumes efficiently.

- Task specialization: Each agent is optimized for a specific type of query or data source, improving accuracy and retrieval relevance.

- Efficiency: By assigning tasks to specialized agents, the system minimizes bottlenecks and improves performance on complex workflows.

- Versatility: Suitable for applications across multiple domains, including research, analytics, decision-making, and customer support.

Challenges

- Coordination complexity: Managing inter-agent communication and task delegation requires sophisticated coordination mechanisms.

- Computational overhead: Running multiple agents in parallel can increase resource usage.

- Data integration: Synthesizing outputs from diverse sources into a coherent response is challenging and requires advanced LLM capabilities.

Use Case: Multidisciplinary Research Assistant

Prompt: What are the economic and environmental impacts of renewable energy adoption in Europe?

System Process (Multi-Agent Workflow):

- Agent 1: Retrieves statistical data from economic databases using SQL queries.

- Agent 2: Searches for relevant academic papers using semantic search tools.

- Agent 3: Performs a web search for recent news and policy updates on renewable energy.

- Agent 4: Consults the recommender system to suggest relevant content, such as reports or expert commentary.

Response:

Integrated response: "Over the past decade, renewable energy adoption in Europe has led to a 20% reduction in greenhouse gas emissions, according to EU policy reports. Economically, renewable energy investments have created approximately 1.2 million jobs, with significant growth in the solar and wind sectors. Recent academic studies also highlight potential trade-offs in grid stability and energy storage costs."

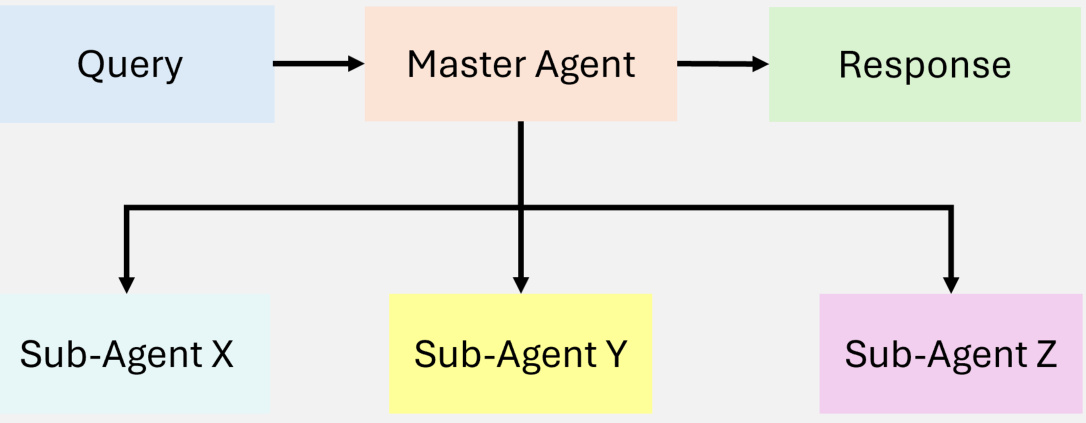

4.3 Hierarchical Agent-based RAG System

The hierarchical agent-based RAG [17] system uses a structured, multilevel approach to information retrieval and processing, which improves efficiency and strategic decision-making (as shown in Figure 13). Agents are organized into a hierarchical structure, with higher-level agents supervising and guiding lower-level agents. This structure enables multi-level decision making and ensures that queries are handled by the most appropriate resources.

Figure 13: An illustration of Hierarchical Agentic RAG

Workflow

- Query reception: The user submits a query, which is received by the top-level agent, responsible for initial assessment and delegation.

- Strategic decision-making: The top-level agent evaluates the complexity of the query and decides which subordinate agents or data sources to prioritize. Depending on the query's domain, certain databases, APIs, or search tools may be deemed more reliable or relevant.

- Delegation to lower-level agents: The top-level agent assigns tasks to lower-level agents that specialize in particular retrieval methods (e.g., SQL databases, web searches, or proprietary systems). These agents carry out their assigned tasks independently.

- Aggregation and synthesis: Higher-level agents collect and integrate the results from subordinate agents, consolidating the information into a coherent response.

- Response delivery: The final, synthesized answer is returned to the user, ensuring that the response is both comprehensive and contextually relevant.
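A toy version of this top-down delegation is sketched below in Python. Everything in it is an assumption for illustration: each lower-level agent carries a hypothetical `reliability` score, a crude clause count stands in for the top-level agent's complexity assessment, and aggregation is simply collecting the delegated results that an LLM would then synthesize.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class SubAgent:
    name: str
    reliability: float          # assumed prior trust in the source, 0..1
    run: Callable[[str], str]   # retrieval call owned by this lower-level agent

def plan_budget(query: str) -> int:
    """Toy complexity estimate: one sub-agent per clause joined by 'and' or a comma."""
    return 1 + query.count(" and ") + query.count(",")

def top_level_agent(query: str, subagents: List[SubAgent],
                    min_reliability: float = 0.5) -> List[str]:
    """Filter and rank subordinates, delegate to the top ones, aggregate results."""
    # Strategic decision-making: drop sources below the trust threshold.
    eligible = [a for a in subagents if a.reliability >= min_reliability]
    ranked = sorted(eligible, key=lambda a: a.reliability, reverse=True)
    # More complex queries are delegated to more subordinates.
    chosen = ranked[: plan_budget(query)]
    # Aggregation: a real system would pass these results to an LLM for synthesis.
    return [f"{a.name}: {a.run(query)}" for a in chosen]

if __name__ == "__main__":
    agents = [
        SubAgent("market_db", 0.9, lambda q: "sector prices"),
        SubAgent("news_search", 0.6, lambda q: "policy headlines"),
        SubAgent("forum_scrape", 0.3, lambda q: "unverified rumors"),
    ]
    print(top_level_agent("market trends and policy outlook", agents))
```

Filtering before ranking mirrors the strategic-prioritization idea: low-trust sources never reach the aggregation step, regardless of how complex the query is.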

Main features and advantages

- Strategic prioritization: Top-level agents can prioritize data sources or tasks based on query complexity, reliability, or context.

- Scalability: Distributing tasks across multiple agent levels enables the system to handle highly complex or multifaceted queries.

- Enhanced decision-making: Higher-level agents apply strategic oversight to improve the overall accuracy and coherence of responses.

Challenges

- Coordination complexity: Maintaining robust inter-agent communication across multiple levels can increase coordination overhead.

- Resource allocation: Distributing tasks efficiently across levels while avoiding bottlenecks is non-trivial.

Use Case: Financial Analysis System

Prompt: What are the investment options in renewable energy based on current market trends?

System Process (Hierarchical Agent Workflow):

- Top-level agent: Assesses the complexity of the query and prioritizes reliable financial databases and economic indicators over less validated data sources.

- Mid-level agents: Retrieve real-time market data (e.g., stock prices, sector performance) from proprietary APIs and structured SQL databases.

- Lower-level agents: Conduct web searches for recent announcements and policies, and consult recommender systems that track expert opinions and news analysis.

- Aggregation and synthesis: The top-level agent aggregates the results, integrating quantitative data with policy insights.

Response:

Integrated response: "Based on current market data, renewable energy stocks have grown 15% over the past quarter, driven primarily by supportive government policies and strong investor interest. Analysts expect the wind and solar sectors to continue gaining momentum, while emerging technologies such as green hydrogen carry moderate risk but may offer high returns."

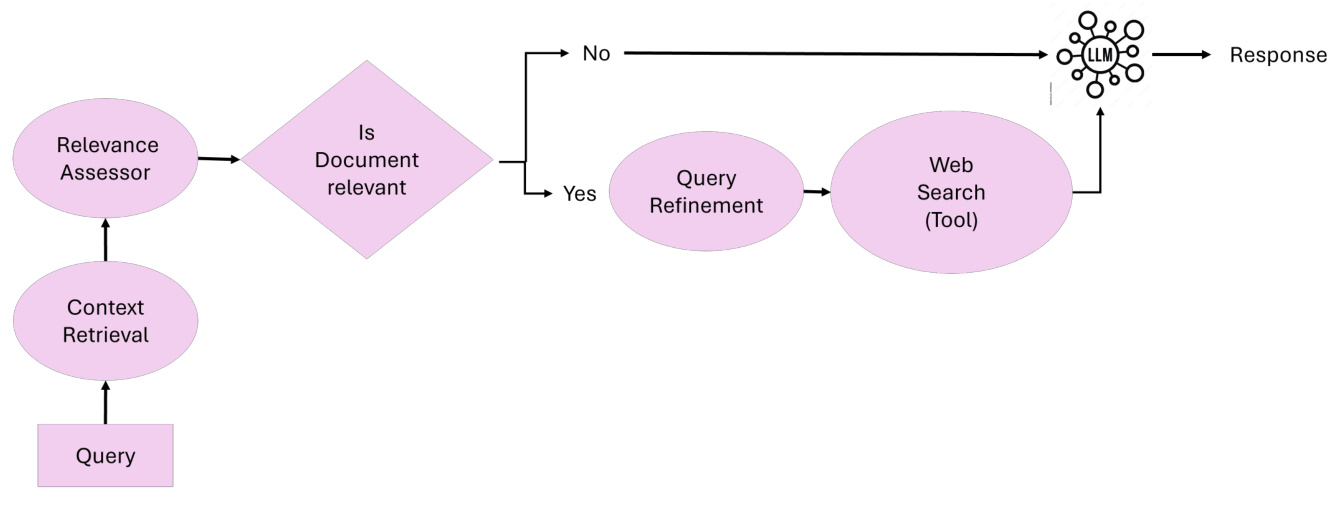

4.4 Agentic Corrective RAG

Corrective RAG [32] [33] introduces the ability to self-correct retrieval results, which enhances document utilization and improves the quality of response generation (as shown in Figure 14). By embedding intelligent agents into the workflow, Corrective RAG [32] [33] ensures iterative refinement of contextual documents and responses to minimize errors and maximize relevance.

Figure 14: Overview of Agentic Corrective RAG

Core idea of Corrective RAG: The core principle of Corrective RAG lies in its ability to dynamically evaluate retrieved documents, perform corrective actions, and refine queries to improve the quality of the generated response. Corrective RAG adjusts its approach as follows:

- Document relevance assessment: Retrieved documents are evaluated by a relevance assessment agent. Documents that fall below a relevance threshold trigger a correction step.

- Query refinement and enhancement: Queries are refined by a query refinement agent that uses semantic understanding to optimize retrieval.

- Dynamic retrieval from external sources: When the context is insufficient, an external knowledge retrieval agent performs a web search or accesses alternative data sources to supplement the retrieved documents.

- Response synthesis: All validated and refined information is passed to a response synthesis agent for final response generation.

Workflow: The Corrective RAG system is built on five key agents:

- Context Retrieval Agent: Retrieves the initial context documents from a vector database.

- Relevance Assessment Agent: Evaluates the relevance of retrieved documents and flags any irrelevant or ambiguous documents for corrective action.

- Query Refinement Agent: Rewrites queries to improve retrieval, using semantic understanding to optimize results.

- External Knowledge Retrieval Agent: Performs web searches or accesses alternative data sources when the context documents are insufficient.

- Response Synthesis Agent: Integrates all validated information into a coherent and accurate response.
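The correction loop can be sketched as a single Python function in which each agent is a plain callable. The keyword-overlap `grade` function is an assumed stand-in for an LLM or cross-encoder relevance grader, the 0.5 threshold is arbitrary, and `retrieve`, `refine`, and `web_search` are hypothetical callables supplied by the caller.

```python
from typing import Callable, List, Tuple

def grade(query: str, doc: str) -> float:
    """Relevance in [0, 1]: the share of query terms that appear in the document.

    Keyword overlap is a stand-in for an LLM or cross-encoder relevance grader.
    """
    terms = set(query.lower().split())
    if not terms:
        return 0.0
    return len(terms & set(doc.lower().split())) / len(terms)

def corrective_rag(
    query: str,
    retrieve: Callable[[str], List[str]],
    refine: Callable[[str], str],
    web_search: Callable[[str], List[str]],
    threshold: float = 0.5,
) -> Tuple[List[str], str]:
    """Return (validated documents, query actually used) for the synthesis agent."""
    docs = retrieve(query)                                        # context retrieval
    relevant = [d for d in docs if grade(query, d) >= threshold]  # relevance assessment
    used_query = query
    # Any document below the threshold (or no relevant documents) triggers correction.
    if not relevant or len(relevant) < len(docs):
        used_query = refine(query)                                # query refinement
        extra = web_search(used_query)                            # external knowledge
        relevant += [d for d in extra if grade(used_query, d) >= threshold]
    return relevant, used_query
```

Returning the query that was actually used lets the synthesis step cite whether the answer came from the original or the refined retrieval.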

Main features and advantages:

- Iterative correction: Ensures high response accuracy by dynamically identifying and correcting irrelevant or ambiguous retrieval results.

- Dynamic adaptation: Incorporates real-time web search and query refinement to improve retrieval precision.

- Agentic modularity: Each agent performs a specialized task, enabling efficient and scalable operation.

- Factual assurance: By validating all retrieved and generated content, Corrective RAG reduces the risk of hallucinations and misinformation.

Use Case: Academic Research Assistant

Prompt: What are the latest findings in generative AI research?

System Process (Corrective RAG Workflow):

- Query submission: The user submits the query to the system.

- Context retrieval:

  - Context Retrieval Agent: Retrieves initial documents from a database of published papers on generative AI.

  - The retrieved documents are passed to the next step for evaluation.

- Relevance assessment:

  - Relevance Assessment Agent: Evaluates how well each document matches the query.

  - Classifies documents as relevant, ambiguous, or irrelevant. Irrelevant documents are flagged for corrective action.

- Corrective action (if needed):

  - Query Refinement Agent: Rewrites the query to improve specificity.

  - External Knowledge Retrieval Agent: Performs web searches to obtain additional papers and reports from external sources.

- Response synthesis:

  - Response Synthesis Agent: Integrates the validated documents into a comprehensive, detailed summary.

Response:

Integrated response: "Recent findings in generative AI include advances in diffusion models, reinforcement learning for text-to-video tasks, and optimization techniques for large-scale model training. For more details, see research presented at NeurIPS 2024 and AAAI 2025."

4.5 Adaptive Agent-based RAG

Adaptive Retrieval Augmented Generation (Adaptive RAG) [34] enhances the flexibility and efficiency of Large Language Models (LLMs) by dynamically adapting the query processing strategy to the complexity of each incoming query. Unlike static retrieval workflows, Adaptive RAG [35] employs a classifier to assess query complexity and select the most appropriate approach, ranging from single-step retrieval to multi-step reasoning, or even bypassing retrieval altogether for straightforward queries, as shown in Figure 15.

Figure 15: An Overview of Adaptive Agentic RAG

Core idea of Adaptive RAG: The core principle of Adaptive RAG is its ability to dynamically adjust the retrieval strategy according to query complexity. Adaptive RAG adjusts its approach as follows:

- Straightforward queries: For fact-based questions that require no additional retrieval (e.g., "What is the boiling point of water?"), the system generates an answer directly from pre-existing knowledge.

- Simple queries: For moderately complex tasks that require minimal context (e.g., "What's the status of my latest electric bill?"), the system performs a single-step retrieval to obtain the relevant details.

- Complex queries: For multi-layered queries that require iterative reasoning (e.g., "How has the population of city X changed over the past decade, and what were the contributing factors?"), the system uses multi-step retrieval, progressively refining intermediate results to provide a comprehensive answer.

Workflow: The Adaptive RAG system is built on three main components:

- Classifier role:

  - A smaller language model analyzes each query to predict its complexity.

  - The classifier is trained on automatically labeled datasets derived from past model outcomes and query patterns.

- Dynamic strategy selection:

  - For straightforward queries, the system skips unnecessary retrieval and uses the LLM to generate a response directly.

  - For simple queries, it uses a single-step retrieval process to obtain the relevant context.

  - For complex queries, it activates multi-step retrieval to enable iterative refinement and enhanced reasoning.

- LLM integration:

  - The LLM integrates the retrieved information into a coherent response.

  - Iterative interaction between the LLM and the classifier enables refinement for complex queries.
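The three-way dispatch can be sketched in Python. This is an illustrative sketch, not the Adaptive RAG implementation: a hand-written rule set replaces the trained smaller language model, and `llm` and `retrieve` are hypothetical callables that the caller would wire to a real model and index. The multi-step branch feeds each intermediate answer back in as the next sub-query.

```python
from enum import Enum
from typing import Callable, List

class Complexity(Enum):
    STRAIGHTFORWARD = "no retrieval"
    SIMPLE = "single-step retrieval"
    COMPLEX = "multi-step retrieval"

def classify(query: str) -> Complexity:
    """Toy rule-based classifier standing in for the trained smaller language model."""
    q = query.lower()
    if " and " in q or q.startswith(("why", "how has")):
        return Complexity.COMPLEX
    if "my " in q:
        return Complexity.SIMPLE
    return Complexity.STRAIGHTFORWARD

LLM = Callable[[str, List[str]], str]      # (question, context) -> answer
Retriever = Callable[[str], List[str]]     # query -> documents

def adaptive_answer(query: str, llm: LLM, retrieve: Retriever, max_steps: int = 3) -> str:
    level = classify(query)
    if level is Complexity.STRAIGHTFORWARD:
        return llm(query, [])                   # answer from parametric knowledge
    if level is Complexity.SIMPLE:
        return llm(query, retrieve(query))      # one retrieval step
    context: List[str] = []
    sub_query = query
    for _ in range(max_steps):                  # iterative retrieve-then-reason loop
        context += retrieve(sub_query)
        sub_query = llm(sub_query, context)     # intermediate answer drives next retrieval
    return llm(query, context)
```

The example prompts from the list above map to the three branches: the boiling-point question skips retrieval, the electric-bill question takes one step, and the population question enters the loop.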

Main features and benefits:

- Dynamic adaptation: Adjusts retrieval strategies based on query complexity to optimize both computational efficiency and response accuracy.

- Resource efficiency: Avoids unnecessary overhead for simple queries while ensuring thorough processing of complex ones.

- Enhanced accuracy: Iterative refinement helps resolve complex queries with high accuracy.

- Flexibility: Can be extended to incorporate additional pathways, such as domain-specific tools or external APIs.

Use Case: Customer Support Assistant

Prompt: Why is my package delayed, and what are my options?

System Process (Adaptive RAG Workflow):

- Query classification:

  - The classifier analyzes the query and determines that it is a complex query requiring multi-step reasoning.

- Dynamic strategy selection:

  - Based on this classification, the system activates a multi-step retrieval process.

- Multi-step retrieval:

  - Retrieves tracking details from the order database.

  - Fetches real-time status updates from the courier API.

  - Performs a web search for external factors such as weather conditions or local disruptions.

- Response synthesis:

  - The LLM integrates all retrieved information and synthesizes a comprehensive, actionable response.

Response:

Integrated response: "Your package has been delayed due to severe weather conditions in your area. It is currently at a local distribution center and is expected to arrive within 2 days. Alternatively, you can choose to pick it up from the facility."

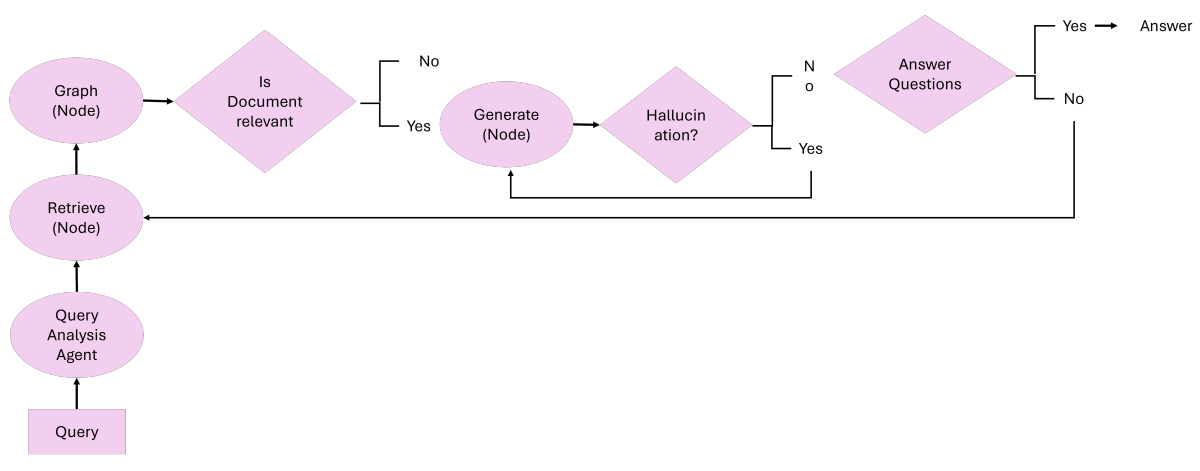

4.6 Graph-based agentic RAG

4.6.1 Agent-G: An agentic framework for graph RAG

Agent-G [8] introduces a novel agentic architecture that combines graph knowledge bases with unstructured document retrieval. By combining structured and unstructured data sources, this framework enhances the reasoning and retrieval accuracy of Retrieval Augmented Generation (RAG) systems. It employs modular retriever libraries, dynamic agent interactions, and feedback loops to ensure high-quality output, as shown in Figure 16.

Figure 16: An Overview of Agent-G: Agentic Framework for Graph RAG

Core idea of Agent-G: The core principle of Agent-G lies in its ability to dynamically assign retrieval tasks to specialized agents that draw on both graph knowledge bases and text documents. Agent-G adapts its retrieval strategy as follows:

- Graph knowledge bases: Structured data is used to extract relationships, hierarchies, and connections (e.g., disease-to-symptom mappings in the medical domain).

- Unstructured documents: Traditional text retrieval systems provide contextual information that supplements the graph data.

- Critic module: Assesses the relevance and quality of the retrieved information to ensure alignment with the query.

- Feedback loops: Refine retrieval and synthesis through iterative validation and re-querying.

Workflow: The Agent-G system is built on four main components:

- Retriever library:

  - A set of modular agents specialized in retrieving graph-based or unstructured data.

  - Agents dynamically select relevant sources based on the query's requirements.

- Critic module:

  - Validates the relevance and quality of the retrieved data.

  - Flags low-confidence results for re-retrieval or refinement.

- Dynamic agent interaction:

  - Task-specific agents collaborate to integrate different types of data.

  - Ensures coordinated retrieval and synthesis across graph and text sources.

- LLM integration:

  - Synthesizes the validated data into a coherent response.

  - Iterative feedback from the critic module ensures alignment with the query intent.
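The interplay between the retrievers, the critic, and the feedback loop can be sketched as follows. This is a hypothetical Python sketch of the general pattern, not Agent-G's published code: the graph retriever, document retriever, critic, and query rewriter are caller-supplied callables, and the 0.6 confidence threshold and two-round limit are arbitrary assumptions.

```python
from typing import Callable, Dict, List, Set

Evidence = Dict[str, str]   # e.g. {"source": "graph", "text": "..."}

def agent_g(
    query: str,
    graph_retriever: Callable[[str], List[Evidence]],
    doc_retriever: Callable[[str], List[Evidence]],
    critic: Callable[[str, Evidence], float],
    rewrite: Callable[[str], str],
    min_confidence: float = 0.6,
    max_rounds: int = 2,
) -> List[Evidence]:
    """Retrieve from graph and text, let a critic filter, and re-query on flags."""
    accepted: List[Evidence] = []
    seen: Set[str] = set()
    current = query
    for _ in range(max_rounds):
        flagged = False
        for ev in graph_retriever(current) + doc_retriever(current):
            if ev["text"] in seen:
                continue                      # already accepted in an earlier round
            if critic(query, ev) >= min_confidence:
                accepted.append(ev)
                seen.add(ev["text"])
            else:
                flagged = True                # low confidence: triggers the feedback loop
        if not flagged:
            break
        current = rewrite(current)            # re-query with a refined formulation
    return accepted                           # validated evidence for LLM synthesis
```

The critic always scores evidence against the original query rather than the rewritten one, so re-querying can broaden retrieval without drifting away from the user's intent.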

Main features and benefits:

- Enhanced reasoning: Combines structured relationships from graphs with contextual information from unstructured documents.

- Dynamic adaptation: Adjusts retrieval strategies to the requirements of each query.

- Improved accuracy: The critic module reduces the risk of irrelevant or low-quality data in the response.

- Scalable modularity: Supports adding new agents for specialized tasks, improving scalability.

Use Case: Medical Diagnostics

Prompt: What are the common symptoms of type 2 diabetes, and how are they related to heart disease?

System Process (Agent-G Workflow):

- Query reception and assignment: The system receives the query and determines that both graph-structured and unstructured data are needed to answer it fully.

- Graph retriever:

  - Extracts the relationship between type 2 diabetes and heart disease from a medical knowledge graph.

  - Identifies shared risk factors such as obesity and hypertension by exploring graph hierarchies and relationships.

- Document retriever:

  - Retrieves descriptions of type 2 diabetes symptoms (e.g., increased thirst, frequent urination, fatigue) from the medical literature.

  - Adds contextual information to supplement the graph-based insights.

- Critic module:

  - Evaluates the relevance and quality of the retrieved graph data and document data.

  - Flags low-confidence results for refinement or re-querying.

- Response synthesis: The LLM integrates the validated data from the graph retriever and document retriever into a coherent response, ensuring alignment with the query intent.

Response:

Integrated response: "Symptoms of type 2 diabetes include increased thirst, frequent urination, and fatigue. Studies have shown a 50% correlation between diabetes and heart disease, primarily through shared risk factors such as obesity and high blood pressure."

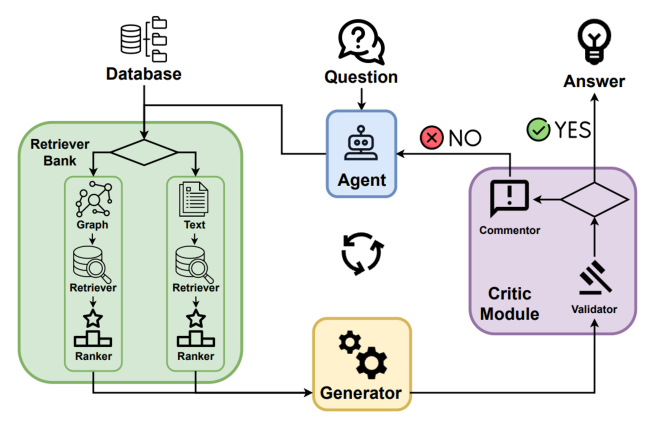

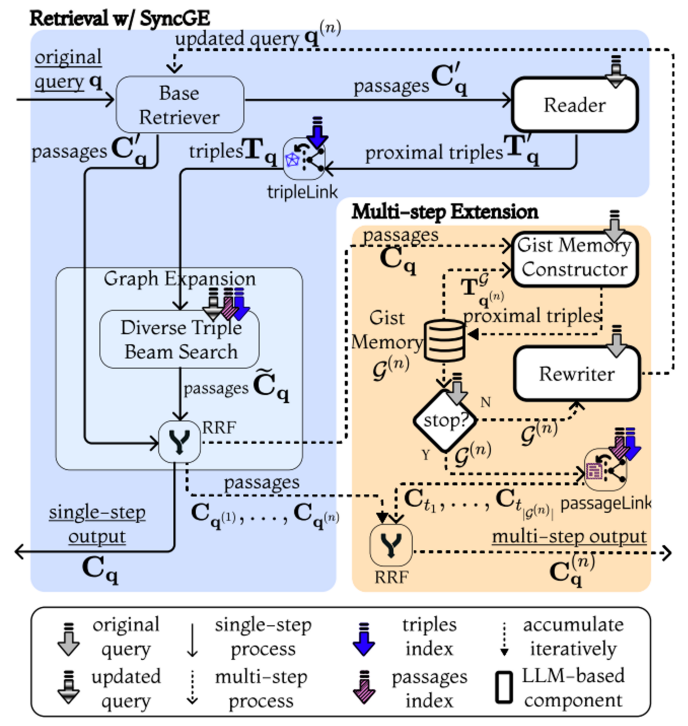

4.6.2 GeAR: Graph-Enhanced Agent for Retrieval-Augmented Generation

GeAR [36] introduces an agentic framework that enhances traditional Retrieval Augmented Generation (RAG) systems by integrating graph-based retrieval mechanisms. By leveraging graph expansion techniques and an agent-based architecture, GeAR addresses challenges in multi-hop retrieval scenarios and improves the system's ability to handle complex queries, as shown in Figure 17.

Figure 17: An Overview of GeAR: Graph-Enhanced Agent for Retrieval-Augmented Generation[36]

Core idea of GeAR: GeAR builds on two main ideas:

- Graph expansion: Enhances conventional baseline retrievers (e.g., BM25) by extending the retrieval process to include graph-structured data, enabling the system to capture complex relationships and dependencies between entities.

- Agentic framework: Incorporates an agent-based architecture that uses graph expansion to manage retrieval tasks more efficiently, allowing dynamic and autonomous decision-making during retrieval.

Workflow: The GeAR system operates through the following components:

- Graph expansion module:

  - Integrates graph-structured data into retrieval, allowing the system to take relationships between entities into account.

  - Strengthens the baseline retriever's handling of multi-hop queries by expanding the search space to include connected entities.

- Agent-based retrieval:

  - An agent framework manages the retrieval process, enabling agents to dynamically select and combine retrieval strategies based on query complexity.

  - Agents can autonomously choose retrieval paths that use graph expansion to improve the relevance and accuracy of the retrieved information.

- LLM integration:

  - Combines the graph-expanded retrieval results with the capabilities of a Large Language Model (LLM) to generate coherent, contextually relevant responses.

  - This integration ensures that generation is informed by both unstructured documents and structured graph data.
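To make the graph expansion step concrete, here is a generic breadth-first expansion over (head, relation, tail) triples. It starts from seed entities that a baseline retriever such as BM25 is assumed to have returned. This illustrates multi-hop expansion in general, not GeAR's own expansion algorithm, and the entity names in the usage example are hypothetical placeholders.

```python
from collections import deque
from typing import Deque, Dict, List, Set, Tuple

Triple = Tuple[str, str, str]   # (head entity, relation, tail entity)

def build_adjacency(triples: List[Triple]) -> Dict[str, List[Tuple[str, str]]]:
    """Index outgoing (relation, tail) edges by head entity."""
    adj: Dict[str, List[Tuple[str, str]]] = {}
    for head, rel, tail in triples:
        adj.setdefault(head, []).append((rel, tail))
    return adj

def expand(seeds: List[str], triples: List[Triple], max_hops: int = 2) -> List[Triple]:
    """Breadth-first expansion from seed entities, up to `max_hops` edges away.

    Returns the traversed edges, which can be verbalized and added to the
    retrieved context alongside the baseline retriever's passages.
    """
    adj = build_adjacency(triples)
    seen: Set[str] = set(seeds)
    frontier: Deque[Tuple[str, int]] = deque((s, 0) for s in seeds)
    paths: List[Triple] = []
    while frontier:
        node, depth = frontier.popleft()
        if depth == max_hops:
            continue                      # hop budget exhausted for this branch
        for rel, nxt in adj.get(node, []):
            paths.append((node, rel, nxt))
            if nxt not in seen:           # avoid revisiting entities (cycles)
                seen.add(nxt)
                frontier.append((nxt, depth + 1))
    return paths

if __name__ == "__main__":
    kg = [
        ("Author", "mentored_by", "Mentor"),
        ("Mentor", "influenced_by", "Predecessor"),
    ]
    for edge in expand(["Author"], kg):
        print(edge)
```

For a two-hop question like the one in the use case that follows, a two-hop expansion returns both the author-to-mentor edge and the mentor-to-influence edge, which is the chain an LLM would need to reason over.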

Main features and benefits:

- Enhanced multi-hop retrieval: GeAR's graph expansion enables the system to handle complex queries that require reasoning over multiple interrelated pieces of information.

- Agentic decision-making: The agentic framework enables dynamic, autonomous selection of retrieval strategies, improving efficiency and relevance.

- Improved accuracy: By incorporating structured graph data, GeAR improves the precision of retrieved information, leading to more accurate and contextually appropriate responses.

- Scalability: The modular nature of the agentic framework allows additional retrieval strategies and data sources to be integrated as needed.

Use Case: Multi-Hop Question Answering

Prompt: Who influenced J.K. Rowling's mentor?

System Process (GeAR Workflow):

- Top-tier agent: Evaluates the multi-hop nature of the query and determines that graph expansion and document retrieval must be combined to answer it.

- Graph expansion module:

  - Identifies J.K. Rowling's mentor as the key entity in the query.

  - Traces the mentor's literary influences by exploring structured data in a literary relationship graph.

- Agent-based retrieval:

  - An agent autonomously selects a graph-expanded retrieval path to gather information about the mentor's influences.

  - Adds context by querying text data sources for unstructured details about the mentor and their influences.

- Response synthesis: The LLM combines insights from the graph and document retrieval processes to generate a response that accurately reflects the complex relationships in the query.

Response:

Integrated response: "J.K. Rowling's mentor, [mentor's name], was heavily influenced by [author's name], known for [famous work or genre]. This connection highlights the layered relationships in literary history, where influential ideas are often passed down through multiple generations of writers."

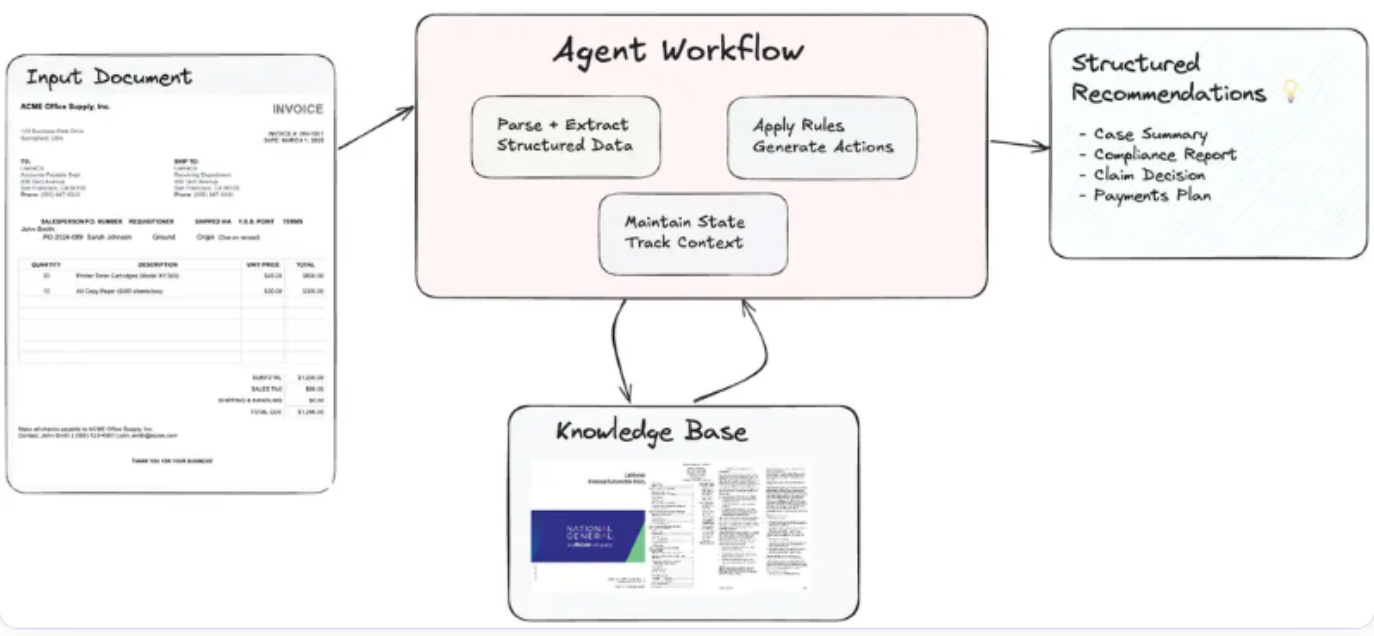

4.7 Agentic document workflows in agentic RAG

Agentic Document Workflows (ADW) [37] extend the traditional Retrieval Augmented Generation (RAG) paradigm by automating end-to-end knowledge work. These workflows use intelligent agents to orchestrate complex document-centric processes that integrate document parsing, retrieval, reasoning, and structured output (see Figure 18). ADW systems address the limitations of Intelligent Document Processing (IDP) and RAG by maintaining state, orchestrating multi-step workflows, and applying domain-specific logic to documents.

Workflow

- Document parsing and information structuring:

  - Enterprise-grade tools (e.g., LlamaParse) parse documents to extract relevant data fields such as invoice numbers, dates, vendor information, line items, and payment terms.

  - The structured data is organized for downstream processing.

- State maintenance across processes:

  - The system maintains state about the document context, ensuring consistency and relevance across multi-step workflows.

  - It tracks the document's progress through the various processing stages.

- Knowledge retrieval:

  - Retrieves relevant references from external knowledge bases (e.g., LlamaCloud) or vector indexes.

  - Retrieves real-time, domain-specific guidance to support decision-making.

- Agentic orchestration:

  - Intelligent agents apply business rules, perform multi-hop reasoning, and generate actionable recommendations.

  - Agents orchestrate components such as parsers, retrievers, and external APIs for seamless integration.

- Actionable output generation:

  - The output is presented in a structured format tailored to the specific use case.

  - Recommendations and extracted insights are synthesized into concise, actionable reports.
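A stripped-down version of this stateful workflow is sketched below, applied to the invoice use case that follows. Several assumptions apply. The dictionaries stand in for parser output and retrieved contract terms; they do not represent the LlamaParse or LlamaCloud APIs. The vendor name `Acme` and the field names are hypothetical. Only the early-payment rule from the example is modeled (the $15,000.00 amount, the 2% rate, and the 2025-04-10 deadline), not the bulk-order discount. `Decimal` is used so the currency arithmetic is exact.

```python
from dataclasses import dataclass, field
from datetime import date
from decimal import Decimal, ROUND_HALF_UP
from typing import Any, Dict, List

@dataclass
class WorkflowState:
    """State carried across workflow stages (the state maintenance step)."""
    stage: str = "received"
    invoice: Dict[str, Any] = field(default_factory=dict)
    contract: Dict[str, Any] = field(default_factory=dict)
    history: List[str] = field(default_factory=list)

    def advance(self, stage: str) -> None:
        self.history.append(self.stage)
        self.stage = stage

def parse_invoice(raw: Dict[str, Any], state: WorkflowState) -> None:
    # Stand-in for a document parser: `raw` is assumed to hold pre-extracted fields.
    state.invoice = {k: raw[k] for k in ("number", "vendor", "amount")}
    state.advance("parsed")

def retrieve_contract(contracts: Dict[str, Dict[str, Any]], state: WorkflowState) -> None:
    # Stand-in for knowledge retrieval: look up the vendor's contract terms.
    state.contract = contracts[state.invoice["vendor"]]
    state.advance("contract_retrieved")

def recommend(state: WorkflowState, pay_date: date) -> Dict[str, Any]:
    """Business rule: apply the contract's early-payment discount if eligible."""
    amount: Decimal = state.invoice["amount"]
    due = amount
    if pay_date <= state.contract["early_pay_by"]:
        rate: Decimal = state.contract["early_pay_rate"]
        due = (amount * (1 - rate)).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
    state.advance("recommended")
    return {"invoice": state.invoice["number"], "original": amount,
            "due": due, "savings": amount - due}
```

Paying $15,000.00 on or before the deadline yields $14,700.00 due, a $300.00 saving. The `history` list records each stage the document passed through, which is the kind of cross-step state that distinguishes ADW from single-shot RAG.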

Figure 18: An Overview of Agentic Document Workflows (ADW) [37]

Use Case: Invoice Payment Workflow

Prompt: Generate a payment recommendation report based on the submitted invoice and the relevant vendor contract terms.

System Process (ADW Workflow):

- Parses the invoice to extract key details such as invoice number, date, vendor information, line items, and payment terms.

- Retrieves the corresponding vendor contract to validate payment terms and identify any applicable discounts or compliance requirements.

- Generates a payment recommendation report that includes the original amount due, potential early payment discounts, budget impact analysis, and strategic payment actions.

Response:

Integrated response: "Invoice INV-2025-045 in the amount of $15,000.00 has been processed. If payment is made by 2025-04-10, an early payment discount of 2% is available, reducing the amount due to $14,700.00. Since the subtotal exceeds $10,000.00, a bulk order discount of 5% has been applied. It is recommended that early payment be approved to save 2% and ensure timely allocation of funds for upcoming project phases."

Main features and advantages

- State maintenance: Tracks document context and workflow stages to ensure consistency across processes.

- Multi-step orchestration: Handles complex workflows involving multiple components and external tools.

- Domain-specific intelligence: Applies tailored business rules and guidelines for precise recommendations.

- Scalability: Supports large-scale document processing through modular, dynamic agent integration.

- Enhanced productivity: Automates repetitive tasks while augmenting human expertise in decision-making.

4.8 Comparative Analysis of Agent-based RAG Frameworks

Table 2 provides a comprehensive comparative analysis of three architectural frameworks: traditional RAG, agent-based RAG, and agent-based document workflow (ADW). The analysis highlights their respective strengths, weaknesses, and best-fit scenarios, providing valuable insights for application in different use cases.

Table 2: Comparative analysis: traditional RAG vs. agent-based RAG vs. agent-based document workflow (ADW)

| Feature | Traditional RAG | Agent-based RAG | Agent-based Document Workflow (ADW) |
|---|---|---|---|
| Focus | Isolated retrieval and generation tasks | Multi-agent collaboration and reasoning | Document-centric end-to-end workflows |
| Context maintenance | Limited | Enabled through memory modules | Maintains state across multi-step workflows |
| Dynamic adaptability | Minimal | High | Tailored to document workflows |
| Workflow orchestration | Absent | Orchestrates multi-agent tasks | Integrates multi-step document processing |
| Use of external tools/APIs | Basic integration (e.g., search tools) | Extends via tools (e.g., APIs and knowledge bases) | Deep integration with business rules and domain-specific tools |
| Scalability | Limited to small datasets or queries | Scalable multi-agent systems | Scales to multi-domain enterprise workflows |
| Complex reasoning | Basic (e.g., simple Q&A) | Multi-step reasoning with agents | Structured reasoning across documents |
| Primary applications | Q&A systems, knowledge retrieval | Multi-domain knowledge and reasoning | Contract review, invoice processing, claims analysis |
| Strengths | Simple, quick setup | High accuracy, collaborative reasoning | End-to-end automation, domain-specific intelligence |
| Challenges | Poor contextual understanding | Coordination complexity | Resource overhead, domain standardization |

The comparative analysis highlights the evolutionary trajectory from traditional RAG to agent-based RAG to agent-based document workflow (ADW). While traditional RAG offers simplicity and ease of deployment for basic tasks, agent-based RAG introduces enhanced reasoning capabilities and scalability through multi-agent collaboration. ADW builds on these advances by providing robust, document-centric workflows that facilitate end-to-end automation and integration with domain-specific processes. Understanding the strengths and limitations of each framework is critical to selecting the architecture that best suits specific application needs and operational requirements.

5 Applications of Agentic RAG

Agent-based Retrieval Augmented Generation (RAG) systems demonstrate transformative potential in a variety of domains. By combining real-time data retrieval, generative capabilities, and autonomous decision-making, these systems address complex, dynamic, and multimodal challenges. This section explores key applications of agent-based RAG, detailing how these systems are shaping domains such as customer support, healthcare, finance, education, legal workflows, and creative industries.

5.1 Customer support and virtual assistants

Agent-based RAG systems are revolutionizing customer support by enabling real-time, context-aware query resolution. Traditional chatbots and virtual assistants often rely on static knowledge bases, resulting in generic or outdated responses. In contrast, agent-based RAG systems dynamically retrieve the most relevant information, adapt to the user's context, and generate personalized responses.

Use Case: Twitch Ad Sales Enhancement [38]

For example, Twitch uses an agentic workflow with RAG on Amazon Bedrock to streamline ad sales. The system dynamically retrieves advertiser data, historical campaign performance, and audience demographics to generate detailed ad proposals, significantly improving operational efficiency.

Key Benefits:

- Improved response quality: Personalized, contextually relevant responses increase user engagement.

- Operational efficiency: Reduces the workload of human support agents by automating complex queries.

- Real-time adaptability: Dynamically integrates evolving data, such as live service outages or pricing updates.

5.2 Healthcare and personalized medicine

In healthcare, combining patient-specific data with the latest medical research is critical to making informed decisions. Agent-based RAG systems accomplish this by retrieving real-time clinical guidelines, medical literature, and patient histories to assist clinicians in diagnosis and treatment planning.

Use Case: Patient Case Summarization [39]

Agent-based RAG systems have been used to generate patient case summaries. For example, by integrating electronic health records (EHRs) and the latest medical literature, the system generates comprehensive summaries for clinicians so they can make faster, more informed decisions.

Key Benefits:

- Personalized care: Adapts recommendations to the needs of individual patients.

- Time efficiency: Streamlines the retrieval of relevant studies, saving healthcare providers valuable time.

- Accuracy: Ensures that recommendations are based on the latest evidence and patient-specific parameters.
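To make the evidence-retrieval step concrete, the sketch below ranks candidate studies by how many of a patient's recorded conditions they mention, breaking ties in favor of more recent publications, and returns the top results with labels a generated summary could cite. This is an illustrative assumption, not the method of [39]; the `Study` type, `rank_evidence` function, and sample data are hypothetical, and a real system would use semantic retrieval over EHRs and literature databases rather than exact keyword overlap.

```python
from typing import NamedTuple


class Study(NamedTuple):
    ref: str                  # citation label used in the generated summary
    year: int
    keywords: frozenset[str]  # conditions the study addresses (hypothetical tagging)


def rank_evidence(conditions: set[str], studies: list[Study], k: int = 2) -> list[Study]:
    """Rank studies by overlap with the patient's conditions, then by recency."""
    relevant = [s for s in studies if conditions & s.keywords]
    relevant.sort(key=lambda s: (len(conditions & s.keywords), s.year), reverse=True)
    return relevant[:k]


patient_conditions = {"type 2 diabetes", "hypertension"}
library = [
    Study("Study A", 2019, frozenset({"type 2 diabetes"})),
    Study("Study B", 2023, frozenset({"type 2 diabetes", "hypertension"})),
    Study("Study C", 2024, frozenset({"hypertension"})),
    Study("Study D", 2024, frozenset({"asthma"})),
]

top = rank_evidence(patient_conditions, library)
print([s.ref for s in top])
```

Ranking on patient-specific overlap first and recency second mirrors the two goals in the bullets above: personalization and grounding in the latest evidence.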

5.3 Legal and contractual analysis

Agent-based RAG systems are redefining the way legal workflows are executed, providing tools for rapid document analysis and decision-making.

Use Case: Contract Review [40]

A legal agent-based RAG system analyzes contracts, extracts key terms, and identifies potential risks. By combining semantic search with legal knowledge graphs, it automates the tedious process of contract review, supporting compliance and mitigating risk.

Key Benefits:

- Risk identification: Automatically flags clauses that deviate from standard terms.

- Efficiency: Reduces the time spent on contract review.

- Scalability: Handles large numbers of contracts simultaneously.
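One simple way to realize the risk-identification step is to compare each extracted clause with a library of standard clause templates and flag clauses whose best match falls below a similarity threshold. The sketch below uses Python's `difflib.SequenceMatcher` as a lexical stand-in for the semantic search described above; the template library, the 0.8 threshold, and the `best_match`/`flag_deviations` helpers are illustrative assumptions, not the approach of [40].

```python
from difflib import SequenceMatcher

# Hypothetical library of approved clause wording, keyed by clause type
STANDARD_CLAUSES = {
    "termination": "Either party may terminate this agreement with 30 days written notice.",
    "liability": "Liability is limited to the fees paid in the preceding 12 months.",
}


def best_match(clause: str) -> tuple[str, float]:
    """Return the closest standard clause type and its similarity ratio in [0, 1]."""
    scores = {
        name: SequenceMatcher(None, clause.lower(), template.lower()).ratio()
        for name, template in STANDARD_CLAUSES.items()
    }
    name = max(scores, key=scores.get)
    return name, scores[name]


def flag_deviations(clauses: list[str], threshold: float = 0.8) -> list[tuple[str, str, float]]:
    """Flag clauses that do not closely match any standard template."""
    flagged = []
    for clause in clauses:
        name, score = best_match(clause)
        if score < threshold:
            flagged.append((clause, name, round(score, 2)))
    return flagged


contract = [
    "Either party may terminate this agreement with 30 days written notice.",
    "Liability is unlimited for all claims arising under this agreement.",
]
for clause, nearest, score in flag_deviations(contract):
    print(f"REVIEW ({nearest}, similarity {score}): {clause}")
```

Only the second clause is flagged for human review. In an agentic pipeline the flagged clause and its nearest template would typically be handed to an LLM to explain the deviation and its risk.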

5.4 Financial and risk analysis

Agent-based RAG systems are transforming the financial industry by providing real-time insights for investment decisions, market analysis and risk management. These systems integrate real-time data streams, historical trends and predictive modeling to generate actionable outputs.

Use Case: Auto Insurance Claims Processing [41]

In auto insurance, agent-based RAG automates claims processing. For example, by retrieving policy details and combining them with accident data, it generates claim recommendations while ensuring compliance with regulatory requirements.

Key Benefits:

- Real-time analytics: Provides insights based on live market data.

- Risk mitigation: Identifies potential risks using predictive analytics and multi-step reasoning.

- Enhanced decision-making: Combines historical and real-time data to develop comprehensive strategies.
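The claims workflow described above retrieves policy details and combines them with accident data before producing a recommendation. A rule-based sketch of that multi-step check follows; the `Policy` and `Accident` fields, the rules, and the `recommend_claim` function are hypothetical and deliberately simplified. In an agentic RAG system the policy record would come from retrieval, and the rationale trail would be passed to an LLM to draft the final advice.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Policy:              # would be retrieved from a policy store in practice
    policy_id: str
    start: date
    end: date
    covers_collision: bool
    deductible: float


@dataclass
class Accident:            # structured accident data submitted with the claim
    when: date
    kind: str              # e.g. "collision" or "theft"
    damage: float


def recommend_claim(policy: Policy, accident: Accident) -> tuple[str, list[str]]:
    """Apply coverage checks in order, keeping a rationale trail for auditing."""
    rationale = []
    if not (policy.start <= accident.when <= policy.end):
        rationale.append("Accident date outside policy period.")
        return "deny", rationale
    rationale.append("Accident within policy period.")
    if accident.kind == "collision" and not policy.covers_collision:
        rationale.append("Collision not covered by this policy.")
        return "deny", rationale
    payout = max(accident.damage - policy.deductible, 0.0)
    rationale.append(f"Estimated payout after deductible: {payout:.2f}.")
    return ("approve" if payout > 0 else "deny"), rationale


policy = Policy("P-001", date(2024, 1, 1), date(2024, 12, 31), True, 500.0)
decision, why = recommend_claim(policy, Accident(date(2024, 6, 15), "collision", 2300.0))
print(decision, why)
```

Recording each step in `rationale` is a design choice aimed at the compliance requirement: reviewers can see which rule produced the outcome.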

5.5 Education

Education is another area where agent-based RAG systems have made significant progress. These systems enable adaptive learning by generating explanations, learning materials, and feedback that adapt to the learner's progress and preferences.

Use Case: Research Paper Generation [42]

In higher education, agent-based RAG has been used to assist researchers by synthesizing key findings from multiple sources. For example, a researcher asking "What are the latest advances in quantum computing?" receives a concise summary with references, improving the quality and efficiency of their work.

Key Benefits:

- Tailored learning paths: Adapts content to the needs and performance levels of individual students.

- Engaging interactions: Provides interactive explanations and personalized feedback.

- Scalability: Supports large-scale deployments across diverse educational settings.
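The research-synthesis use case depends on first ranking candidate sources for the query. The sketch below scores a toy corpus with Okapi BM25, a standard sparse ranking function, using typical parameter values k1 = 1.5 and b = 0.75 and the non-negative IDF variant used by Lucene, then prints the top passages with numbered reference markers that a generator could cite. The corpus and the `tokenize`/`bm25_rank` helpers are hypothetical, and [42] does not state that BM25 is used.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def bm25_rank(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75) -> list[tuple[int, float]]:
    """Return (doc_index, score) pairs sorted by descending Okapi BM25 score."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(docs)
    avgdl = sum(len(t) for t in tokenized) / n_docs
    df = Counter(term for toks in tokenized for term in set(toks))  # document frequency
    scores = []
    for i, toks in enumerate(tokenized):
        tf = Counter(toks)
        score = 0.0
        for term in set(tokenize(query)):
            if term not in tf:
                continue
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1)
            norm = tf[term] + k1 * (1 - b + b * len(toks) / avgdl)  # length normalization
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append((i, score))
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


corpus = [
    "Error correction advances improve logical qubit lifetimes in quantum computing.",
    "Eco-friendly packaging trends among consumers.",
    "New quantum computing hardware scales superconducting qubits.",
]
query = "latest advances in quantum computing"
top = [(i, s) for i, s in bm25_rank(query, corpus) if s > 0][:2]
for rank, (i, _) in enumerate(top, start=1):
    print(f"[{rank}] {corpus[i]}")
```

The generator would then be prompted to summarize only the numbered passages and cite them as [1], [2], which keeps every claim in the summary traceable to a source.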

5.6 Graph-enhanced applications in multimodal workflows

Graph-Enhanced Agent-based RAG (GEAR) combines graph structures with retrieval mechanisms and is particularly effective in multimodal workflows where interconnected data sources are critical.

Use Case: Market Research Generation

GEAR can synthesize text, images, and video for use in marketing campaigns. For example, the query "What are the emerging trends in eco-friendly products?" yields a detailed report covering customer preferences, competitor analysis, and multimedia content.

Key Benefits:

- Multimodal capability: Integrates text, image, and video data for comprehensive output.

- Enhanced creativity: Generates innovative ideas and solutions for marketing and entertainment.

- Dynamic adaptation: Adapts to changing market trends and customer needs.
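Graph-enhanced retrieval of this kind typically starts from entities mentioned in the query and expands along graph edges to collect related facts. The sketch below performs a bounded breadth-first expansion over a small adjacency-list knowledge graph; the graph contents, the `expand` function, and the default two-hop limit are illustrative assumptions, not GEAR's published algorithm.

```python
from collections import deque

# Hypothetical knowledge graph: entity -> list of (relation, neighbor)
GRAPH = {
    "eco-friendly products": [("has_trend", "refillable packaging"),
                              ("has_segment", "gen z shoppers")],
    "refillable packaging": [("adopted_by", "competitor x")],
    "gen z shoppers": [("prefers", "short-form video")],
    "competitor x": [("runs_campaign", "zero-waste campaign")],
}


def expand(seeds: list[str], max_hops: int = 2) -> list[tuple[str, str, str]]:
    """Collect (head, relation, tail) triples reachable within max_hops of the seeds."""
    triples = []
    unique_seeds = list(dict.fromkeys(seeds))   # drop duplicate seeds, keep order
    visited = set(unique_seeds)
    frontier = deque((s, 0) for s in unique_seeds)
    while frontier:
        node, depth = frontier.popleft()
        if depth == max_hops:
            continue  # do not expand beyond the hop budget
        for relation, neighbor in GRAPH.get(node, []):
            triples.append((node, relation, neighbor))
            if neighbor not in visited:
                visited.add(neighbor)
                frontier.append((neighbor, depth + 1))
    return triples


for head, rel, tail in expand(["eco-friendly products"]):
    print(f"{head} --{rel}--> {tail}")
```

The collected triples can be serialized into the prompt alongside passages from vector search, so the generator sees both relational context and text; the hop budget trades recall against prompt length.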

Agent-based RAG systems are used in a wide range of applications, demonstrating their versatility and transformative potential. From personalized customer support to adaptive education and graph-enhanced multimodal workflows, these systems address complex, dynamic, and knowledge-intensive challenges. By integrating retrieval, generation, and agentic intelligence, agent-based RAG systems are paving the way for the next generation of AI applications.

6 Tools and frameworks for agent-based RAG

Agent-based Retrieval Augmented Generation (RAG) systems represent a significant evolution in combining retrieval, generation, and agentic intelligence. These systems extend the capabilities of traditional RAG by integrating decision-making, query reformulation, and adaptive workflows. The following tools and frameworks provide robust support for developing agent-based RAG systems that meet the complex requirements of real-world applications.
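The decision-making and query-reformulation behavior these frameworks orchestrate can be reduced to a small control loop: retrieve, judge whether the evidence is sufficient, and if not, rewrite the query and try again within a fixed budget. The sketch below is framework-agnostic plain Python; `retrieve`, `is_sufficient`, and `reformulate` are hypothetical stand-ins for a vector search call, an LLM-based relevance grader, and an LLM query rewriter.

```python
from typing import Callable


def agentic_retrieve(
    question: str,
    retrieve: Callable[[str], list[str]],
    is_sufficient: Callable[[str, list[str]], bool],
    reformulate: Callable[[str, list[str]], str],
    max_steps: int = 3,
) -> tuple[list[str], list[str]]:
    """Retrieve, grade, and rewrite the query until the evidence is judged sufficient.

    Returns the last retrieved documents and the list of queries tried.
    """
    query = question
    trace: list[str] = []
    docs: list[str] = []
    for _ in range(max_steps):
        trace.append(query)
        docs = retrieve(query)
        if is_sufficient(question, docs):
            break                           # evidence accepted; hand off to generation
        query = reformulate(query, docs)    # self-correction: try a rewritten query
    return docs, trace


# Toy stand-ins so the loop runs end to end (all hypothetical).
INDEX = {
    "refund rules": ["Refunds are possible."],
    "refund policy annual plan": ["Annual plans: refund within 30 days.",
                                  "Prorated refunds after 30 days."],
}

docs, trace = agentic_retrieve(
    "refund rules",
    retrieve=lambda q: INDEX.get(q, []),
    is_sufficient=lambda q, d: len(d) >= 2,       # a real grader would be an LLM call
    reformulate=lambda q, d: "refund policy annual plan",
)
print(trace)
print(docs)
```

Graph-based orchestrators such as LangGraph can express the same control flow as nodes joined by a conditional edge that loops back to retrieval; the explicit `max_steps` budget keeps the loop from running indefinitely.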

Key tools and frameworks:

- LangChain and LangGraph: LangChain [43] provides modular components for building RAG pipelines, integrating retrievers, generators, and external tools. LangGraph complements it with graph-based workflows that support loops, state persistence, and human-in-the-loop interaction, enabling sophisticated orchestration and self-correction mechanisms in agent systems.

- LlamaIndex: LlamaIndex's [44] Agentic Document Workflows (ADW) automate end-to-end document processing, retrieval, and structured reasoning. ADW introduces a meta-agent architecture in which sub-agents each manage a smaller set of documents, while a top-level agent coordinates tasks such as compliance analysis and contextual understanding.

- Hugging Face Transformers and Qdrant: Hugging Face [45] provides pre-trained models for embedding and generative tasks, while Qdrant [46] enhances the retrieval workflow with adaptive vector search capabilities, enabling agents to optimize performance by dynamically switching between sparse and dense vector methods.

- CrewAI and AutoGen: These frameworks emphasize multi-agent architectures. CrewAI [47] supports hierarchical and sequential processes, robust memory systems, and tool integration. AG2 [48] (formerly AutoGen [49, 50]) excels at multi-agent collaboration, with advanced support for code generation, tool execution, and decision-making.

- OpenAI Swarm Framework: An educational framework designed for ergonomic, lightweight multi-agent orchestration [51], with an emphasis on agent autonomy and structured collaboration.

- Agent-based RAG with Vertex AI: Vertex AI [52], developed by Google, integrates with agent-based Retrieval Augmented Generation (RAG), providing a platform for building, deploying, and scaling machine learning models while leveraging advanced AI capabilities for context-aware retrieval and decision-making workflows.

- Amazon Bedrock for agent-based RAG: Amazon Bedrock [38] provides a robust platform for implementing agent-based Retrieval Augmented Generation (RAG) workflows.

- IBM Watson and Agent-Based RAG: IBM's watsonx.ai [53] supports the construction of agent-based RAG systems, e.g., using the Granite-3-8B-Instruct model to answer complex queries and improve response accuracy by integrating external information.

- Neo4j and vector databases: Neo4j, a prominent open-source graph database, excels at handling complex relational and semantic queries. Alongside Neo4j, vector databases such as Weaviate, Pinecone, Milvus, and Qdrant provide efficient similarity search and retrieval, forming the backbone of high-performance agent-based Retrieval Augmented Generation (RAG) workflows.
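The meta-agent architecture described for LlamaIndex above, in which sub-agents each own a smaller document set and a top-level agent coordinates them, can be sketched without any framework. In the code below, routing is by word overlap with each sub-agent's topic description and each "answer" is simply the matching passages; the `SubAgent` and `MetaAgent` classes, the `words` helper, and the sample documents are hypothetical and do not reflect LlamaIndex's API.

```python
import re
from dataclasses import dataclass, field


def words(text: str) -> set[str]:
    """Lowercased alphanumeric tokens; a crude stand-in for semantic matching."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class SubAgent:
    name: str
    topic: str                                  # short description used for routing
    docs: list[str] = field(default_factory=list)

    def answer(self, query: str) -> list[str]:
        """Return passages from this agent's own shard that share a term with the query."""
        q = words(query)
        return [d for d in self.docs if q & words(d)]


@dataclass
class MetaAgent:
    agents: list[SubAgent]

    def route(self, query: str) -> list[SubAgent]:
        """Select the sub-agents whose topic overlaps the query."""
        q = words(query)
        return [a for a in self.agents if q & words(a.topic)]

    def run(self, query: str) -> dict[str, list[str]]:
        """Dispatch to the selected sub-agents and collect findings by agent name."""
        return {a.name: a.answer(query) for a in self.route(query)}


compliance = SubAgent("compliance", "compliance regulation gdpr",
                      ["GDPR requires a data processing agreement.", "Retention limit is five years."])
finance = SubAgent("finance", "invoice payment terms",
                   ["Payment terms are net 30.", "Late fees apply after 45 days."])
meta = MetaAgent([compliance, finance])
print(meta.run("gdpr retention compliance"))
```

In a real system the top-level agent would be an LLM that decides which sub-agents to call and synthesizes their outputs; sharding is intended to keep each sub-agent's working context small.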

7 Benchmarks and datasets

Current benchmarks and datasets provide valuable insights for evaluating Retrieval Augmented Generation (RAG) systems, including agent-based and graph-enhanced variants. While some were designed specifically for RAG, others have been adapted to test retrieval, reasoning, and generation capabilities across a variety of scenarios. Datasets are critical for testing each of these components of a RAG system. Table 3 lists key datasets for RAG evaluation, organized by downstream task.

Benchmarks play a key role in standardizing the evaluation of RAG systems by providing structured tasks and metrics. The following benchmarks are particularly relevant:

- BEIR (Benchmarking Information Retrieval): A versatile benchmark for evaluating embedding models on a variety of information retrieval tasks, covering 17 datasets across domains such as bioinformatics, finance, and question answering [54].

- MS MARCO (Microsoft Machine Reading Comprehension): Focused on passage ranking and question answering, this benchmark is widely used for dense retrieval tasks in RAG systems [55].

- TREC (Text Retrieval Conference, Deep Learning Track): Provides datasets for passage and document retrieval, emphasizing the quality of ranking models in retrieval pipelines [56].

- MuSiQue (Multihop Questions via Single-hop Question Composition): A benchmark for multi-hop reasoning across multiple documents, emphasizing the importance of retrieving and synthesizing information from disconnected contexts [57].

- 2WikiMultihopQA: A dataset for multi-hop QA across two Wikipedia articles, focusing on the ability to connect knowledge from multiple sources [58].

- AgentG (agent-based RAG for knowledge fusion): A benchmark tailored to agent-based RAG tasks, assessing dynamic information synthesis across multiple knowledge bases [8].

- HotpotQA: A multi-hop QA benchmark requiring retrieval and reasoning over interconnected contexts, suitable for evaluating complex RAG workflows [59].

- RAGBench: A large-scale, explainable benchmark of 100,000 examples spanning industry domains, featuring the TRACe evaluation framework for actionable RAG metrics [60].

- BERGEN (Benchmarking Retrieval-Augmented Generation): A library for systematically benchmarking RAG systems with standardized experiments [61].

- FlashRAG Toolkit: Implements 12 RAG methods and includes 32 benchmark datasets to support efficient, standardized RAG evaluation [62].

- GNN-RAG: This benchmark evaluates graph-based RAG systems on node-level and edge-level prediction tasks, focusing on retrieval quality and reasoning performance in Knowledge Graph Question Answering (KGQA) [63].
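Retrieval-oriented benchmarks such as BEIR, MS MARCO, and TREC are typically scored with rank-based metrics (for example, nDCG@10 is the headline metric in BEIR and MRR@10 in MS MARCO passage ranking). The sketch below implements three common ones for a single query: Recall@k, reciprocal rank, and binary-relevance nDCG@k. The document IDs and judgments are hypothetical, and each benchmark's official tooling should be treated as authoritative, for instance on graded relevance.

```python
import math


def recall_at_k(ranked: list[str], relevant: set[str], k: int) -> float:
    """Fraction of relevant documents that appear in the top k results."""
    if not relevant:
        return 0.0
    return len(set(ranked[:k]) & relevant) / len(relevant)


def reciprocal_rank(ranked: list[str], relevant: set[str]) -> float:
    """1 / rank of the first relevant document (0 if none is retrieved)."""
    for rank, doc_id in enumerate(ranked, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0


def ndcg_at_k(ranked: list[str], relevant: set[str], k: int) -> float:
    """Binary-relevance nDCG@k: DCG of the ranking divided by the ideal DCG."""
    dcg = sum(1.0 / math.log2(i + 2) for i, d in enumerate(ranked[:k]) if d in relevant)
    ideal_hits = min(len(relevant), k)
    idcg = sum(1.0 / math.log2(i + 2) for i in range(ideal_hits))
    return dcg / idcg if idcg > 0 else 0.0


ranked = ["d3", "d1", "d7", "d2"]   # system output for one query
relevant = {"d1", "d2"}             # gold judgments (hypothetical)
print(recall_at_k(ranked, relevant, 3),
      reciprocal_rank(ranked, relevant),
      round(ndcg_at_k(ranked, relevant, 3), 3))
```

Averaging these per-query values over a query set gives the mean scores (such as MRR) that benchmark leaderboards report.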

Table 3: Downstream tasks and datasets for RAG evaluation (adapted from [23])

| Category | Task type | Datasets and references |
|---|---|---|
| Question answering (QA) | Single-hop QA | Natural Questions (NQ) [64], TriviaQA [65], SQuAD [66], Web Questions (WebQ) [67], PopQA [68], MS MARCO [55] |
| | Multi-hop QA | HotpotQA [59], 2WikiMultiHopQA [58], MuSiQue [57] |
| | Long-form QA | ELI5 [69], NarrativeQA (NQA) [70], ASQA [71], QMSum [72] |
| | Domain-specific QA | Qasper [73], COVID-QA [74], CMB/MMCU Medical [75] |
| | Multiple-choice QA | QuALITY [76], ARC (no reference), CommonSenseQA [77] |
| Graph-based QA | Graph QA | GraphQA [78] |
| | Event argument extraction | WikiEvent [79], RAMS [80] |
| Dialogue | Open-domain dialogue | Wizard of Wikipedia (WoW) [81] |
| | Personalized dialogue | KBP [82], DuleMon [83] |
| | Task-oriented dialogue | CamRest [84] |
| Recommendation | Personalized content | Amazon datasets (Toys, Sports, Beauty) [85] |
| Reasoning | Commonsense reasoning | HellaSwag [86], CommonSenseQA [77] |
| | CoT reasoning | CoT Reasoning [87], CSQA [88] |
| Others | Complex reasoning / language understanding | MMLU (no reference), WikiText-103 [64] |
| | Fact checking/verification | FEVER [89], PubHealth [90] |
| | Strategy QA | StrategyQA [91] |
| Summarization | Text summarization | WikiASP [92], XSum [93] |
| | Long-form summarization | NarrativeQA (NQA) [70], QMSum [72] |
| Text generation | Biography | Biography dataset (no reference) |
| Text classification | Sentiment analysis | SST-2 [94] |
| | General classification | VioLens [95], TREC [56] |
| Code search | Programming search | CodeSearchNet [96] |
| Robustness | Retrieval robustness | NoMIRACL [97] |
| | Language modeling robustness | WikiText-103 [98] |
| Math | Mathematical reasoning | GSM8K [99] |
| Machine translation | Translation | JRC-Acquis [100] |
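Many of the QA datasets in Table 3 (for example Natural Questions, TriviaQA, SQuAD, and HotpotQA) are commonly scored with exact match (EM) and token-level F1 against reference answers. The sketch below follows the widely used SQuAD-style normalization (lowercasing, stripping punctuation and the articles a/an/the, collapsing whitespace) and takes the best score over multiple gold answers. Individual benchmarks may differ in details, so their official evaluation scripts remain authoritative; the helper names are illustrative.

```python
import re
import string
from collections import Counter
from typing import Callable

PUNCT = set(string.punctuation)


def normalize(text: str) -> str:
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in PUNCT)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, gold: str) -> float:
    return float(normalize(prediction) == normalize(gold))


def token_f1(prediction: str, gold: str) -> float:
    pred_tokens = normalize(prediction).split()
    gold_tokens = normalize(gold).split()
    common = Counter(pred_tokens) & Counter(gold_tokens)  # multiset overlap
    overlap = sum(common.values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


def best_score(metric: Callable[[str, str], float], prediction: str, golds: list[str]) -> float:
    """Score against every reference answer and keep the maximum."""
    return max(metric(prediction, g) for g in golds)


print(best_score(exact_match, "The Eiffel Tower!", ["Eiffel Tower", "La Tour Eiffel"]))
print(round(token_f1("Paris, France", "Paris"), 3))
```

EM rewards only fully correct strings, while F1 gives partial credit, which is why the two are usually reported together for generative RAG answers.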

8 Conclusion

Agent-based Retrieval Augmented Generation (RAG) represents a transformative advancement in artificial intelligence that overcomes the limitations of traditional RAG systems by integrating autonomous agents. By leveraging agentic intelligence, these systems introduce dynamic decision-making, iterative reasoning, and collaborative workflows, enabling them to solve complex real-world tasks with greater accuracy and adaptability.

This review explores the evolution of RAG systems from initial implementations to advanced paradigms such as modular RAG, highlighting the contributions and limitations of each paradigm. The integration of agents into the RAG pipeline has become a key development, giving rise to agent-based RAG systems that overcome static workflows and limited contextual adaptation. Applications across healthcare, finance, education, and creative industries demonstrate the transformative potential of these systems and their ability to deliver personalized, real-time, context-aware solutions.

Despite their promise, agent-based RAG systems face challenges that require further research and innovation. Coordination complexity, scalability and latency issues in multi-agent architectures, as well as ethical considerations, must be addressed to ensure robust and responsible deployment. In addition, the lack of benchmarks and datasets specifically designed to evaluate agent capabilities poses a significant barrier. The development of evaluation methods to capture unique aspects of agent-based RAG, such as multi-agent collaboration and dynamic adaptability, is critical to advancing the field.