Yuxi-Know: A Knowledge Graph-based Intelligent Q&A Platform

General Introduction

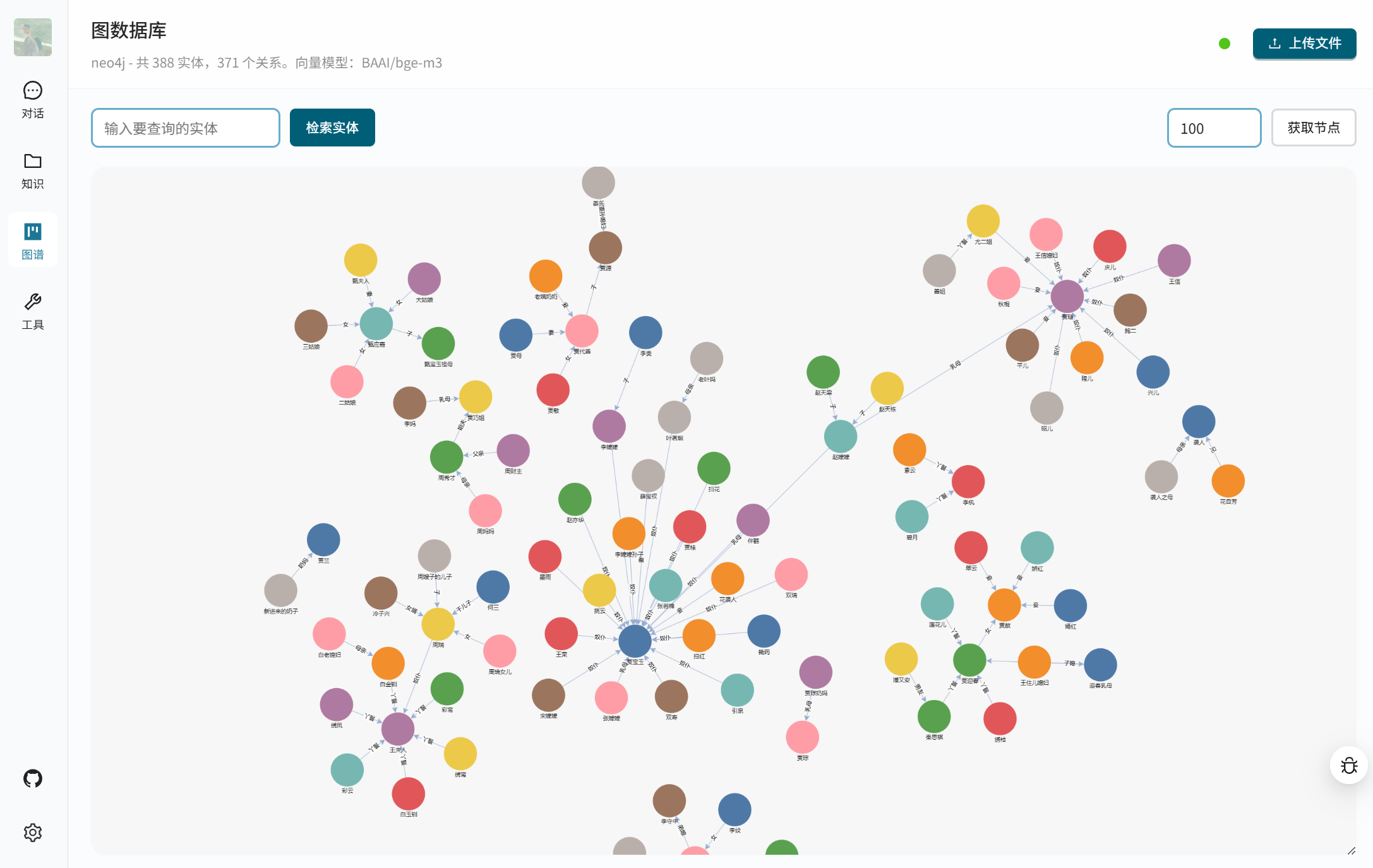

Yuxi-Know is an open-source intelligent Q&A platform that combines knowledge graphs with RAG (Retrieval-Augmented Generation) to help users get accurate answers quickly. It stores the knowledge graph in Neo4j, builds the back end with FastAPI and the front end with VueJS, and supports a variety of large models, such as OpenAI, DeepSeek, and Doubao. The system integrates the Milvus vector database and also provides web search, the DeepSeek-R1 reasoning model, and tool calling, which makes it suitable for developers building knowledge management or intelligent customer service systems. The project is open source on GitHub and is easy to deploy and extend.

Feature List

- Supports calls to a variety of large models, including OpenAI, DeepSeek, Doubao, Zhipu Qingyan, and more.

- Provides knowledge graph queries, with structured data stored in Neo4j.

- Supports RAG, combining the knowledge base with web search to generate accurate answers.

- Integrates the DeepSeek-R1 reasoning model to handle complex logic problems.

- Provides tool call functionality to perform external tasks via APIs.

- Supports multiple file formats (PDF, TXT, MD, Docx) to build a knowledge base.

- Uses the Milvus vector database to store and retrieve document vectors.

- Provides a simple, intuitive web interface built with VueJS.

- Supports local model deployment, with vLLM or Ollama providing the API service.

- Allows users to customize the chat model and embedding (vector) model configurations to suit different needs.

Usage Guide

Installation process

Yuxi-Know uses Docker deployment to simplify the installation process. Here are the detailed steps:

- Preparing the environment

Make sure Docker and Docker Compose are installed. Linux or macOS is recommended; Windows users need to enable WSL2. Check that Docker is installed correctly:

    docker --version

Also make sure Git is installed for cloning the code.

- Cloning the project

Run the following command in a terminal to clone the Yuxi-Know repository:

    git clone https://github.com/xerrors/Yuxi-Know.git

Go to the project directory:

    cd Yuxi-Know

- Configuring environment variables

Yuxi-Know needs API keys and model parameters. Copy the template file:

    cp src/.env.template src/.env

Open src/.env in a text editor and fill in the necessary keys, for example:

    SILICONFLOW_API_KEY=sk-your-key
    DEEPSEEK_API_KEY=your-key
    TAVILY_API_KEY=your-key

If you use other models (e.g., OpenAI or Doubao), add the corresponding keys:

    OPENAI_API_KEY=your-key
    ARK_API_KEY=your-key

SiliconFlow is used by default, so SILICONFLOW_API_KEY must be configured. If you use a local model, configure the model path:

    MODEL_DIR=/path/to/your/models

- Starting services

Start all services by running the following command from the project root directory:

    docker compose -f docker/docker-compose.dev.yml --env-file src/.env up --build

This starts Neo4j, Milvus, the FastAPI back end, and the VueJS front end. The first launch may take a few minutes. On success, the terminal displays:

    [+] Running 7/7
     ✔ Network docker_app-network       Created
     ✔ Container graph-dev              Started
     ✔ Container milvus-etcd-dev        Started
     ✔ Container milvus-minio-dev       Started
     ✔ Container milvus-standalone-dev  Started
     ✔ Container api-dev                Started
     ✔ Container web-dev                Started

To run in the background, add the -d parameter:

    docker compose -f docker/docker-compose.dev.yml --env-file src/.env up --build -d

- Accessing the system

Open http://localhost:5173/ in your browser to access the Yuxi-Know interface. If it is not accessible, check the Docker container status:

    docker ps

Make sure all containers are running. If there is a port conflict, modify the port configuration in docker-compose.dev.yml.

- Shutting down services

Stop the services and remove the containers:

    docker compose -f docker/docker-compose.dev.yml --env-file src/.env down
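Before running the compose command, it can help to confirm that src/.env actually contains the keys the configuration step calls for. The sketch below is a hypothetical pre-flight check, not part of Yuxi-Know: it assumes simple KEY=VALUE lines and treats only SILICONFLOW_API_KEY as required, as the article states for the default setup.

```python
from pathlib import Path

# The article says SILICONFLOW_API_KEY is mandatory for the default setup.
REQUIRED_KEYS = ["SILICONFLOW_API_KEY"]
# Keys the article mentions for optional features (other models, web search).
OPTIONAL_KEYS = ["DEEPSEEK_API_KEY", "TAVILY_API_KEY", "OPENAI_API_KEY", "ARK_API_KEY"]


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding quotes, which .env files often use.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(values: dict[str, str], keys: list[str]) -> list[str]:
    """Return the keys that are absent or set to an empty value."""
    return [k for k in keys if not values.get(k)]


if __name__ == "__main__":
    env_file = Path("src/.env")  # path used in the installation steps
    env = parse_env(env_file.read_text(encoding="utf-8")) if env_file.exists() else {}
    print("missing required keys:", missing_keys(env, REQUIRED_KEYS) or "none")
    print("unset optional keys:", missing_keys(env, OPTIONAL_KEYS) or "none")
```

An empty value counts as missing here, since a blank key would fail at request time just like an absent one.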

Using the Main Features

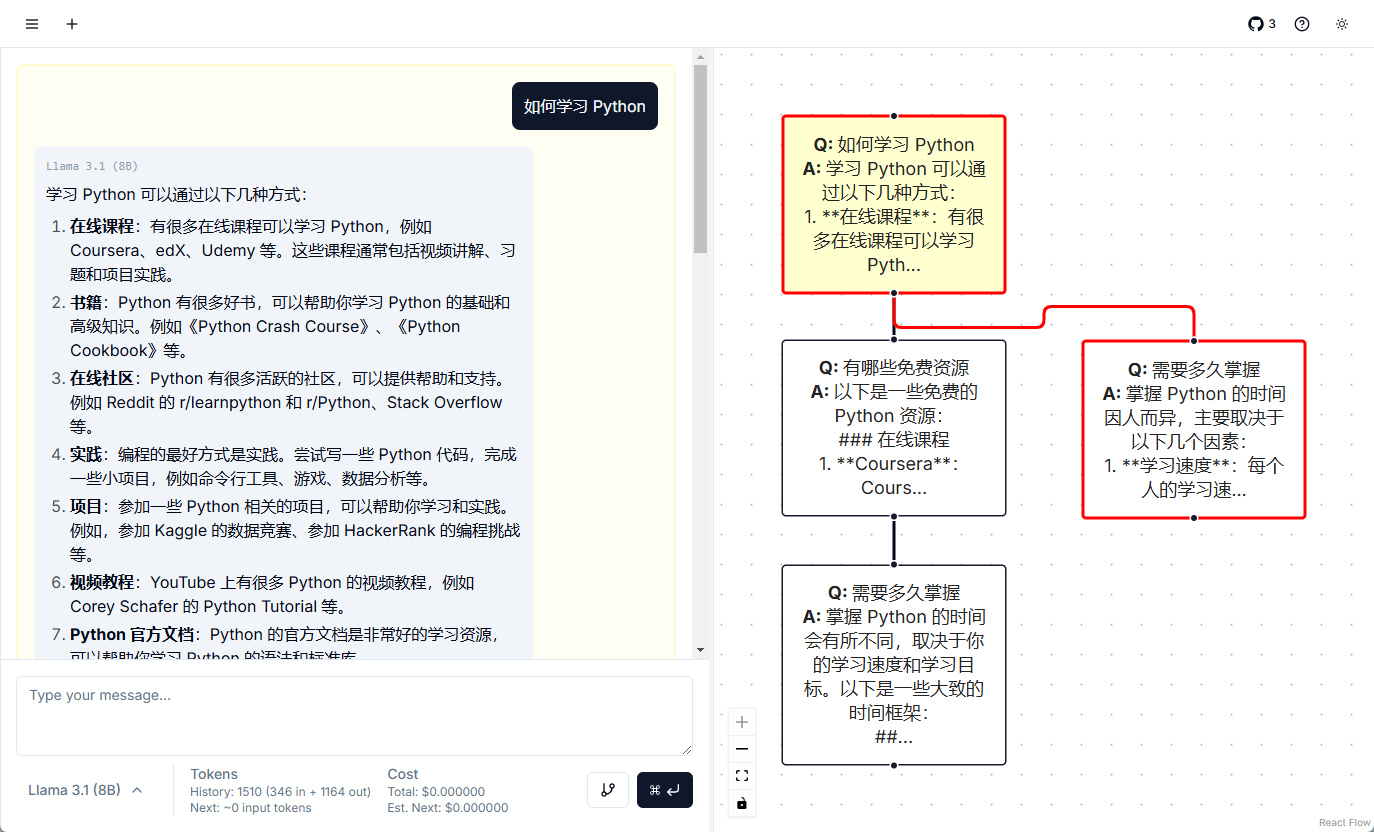

Yuxi-Know provides knowledge graph queries, knowledge base search, web search, and tool calling. Detailed usage is as follows:

- Knowledge Graph Query

Yuxi-Know stores knowledge graphs in Neo4j, which suits queries over structured data. For example, for the query "What is the capital of China?" the system extracts the answer from the knowledge graph.

- Importing the knowledge graph: Prepare a JSONL file in which each line contains a head node, a tail node, and a relationship. For example, the following line records head "Beijing", tail "China", and relation "capital":

    {"h": "北京", "t": "中国", "r": "首都"}

Navigate to "Graph Management" in the interface and upload the JSONL file. It will be loaded into Neo4j automatically.

- Accessing Neo4j: After startup, open http://localhost:7474/ to reach the Neo4j panel. The default username is neo4j and the password is 0123456789.
- Note: Nodes must carry the Entity label; otherwise the index is not triggered.
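Since a malformed JSONL line is a common cause of failed imports, a file can be checked locally before uploading it. The sketch below parses each line into an (h, t, r) triple and reports bad lines by number. The MERGE_TRIPLE Cypher template is a hypothetical illustration of how a triple could become Entity-labelled nodes; the relationship type RELATION and the name/type properties are assumptions, not Yuxi-Know's actual import query.

```python
import json

REQUIRED_FIELDS = ("h", "t", "r")

# Hypothetical Cypher template: only the Entity label comes from the article;
# the RELATION type and the name/type properties are assumptions.
MERGE_TRIPLE = (
    "MERGE (h:Entity {name: $h}) "
    "MERGE (t:Entity {name: $t}) "
    "MERGE (h)-[:RELATION {type: $r}]->(t)"
)


def load_triples(lines):
    """Parse JSONL lines into (h, t, r) tuples and collect per-line errors."""
    triples, errors = [], []
    for lineno, line in enumerate(lines, start=1):
        if not line.strip():
            continue  # blank lines are ignored
        try:
            record = json.loads(line)
        except json.JSONDecodeError as exc:
            errors.append(f"line {lineno}: invalid JSON ({exc.msg})")
            continue
        if not isinstance(record, dict):
            errors.append(f"line {lineno}: expected a JSON object")
            continue
        missing = [f for f in REQUIRED_FIELDS if not record.get(f)]
        if missing:
            errors.append(f"line {lineno}: missing {', '.join(missing)}")
            continue
        triples.append((record["h"], record["t"], record["r"]))
    return triples, errors
```

With the official neo4j Python driver, each triple could then be written with something like `session.run(MERGE_TRIPLE, {"h": h, "t": t, "r": r})`; MERGE avoids creating duplicate nodes when the same entity appears in several lines.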


- Knowledge Base Search

The system supports uploading PDF, TXT, MD, and Docx files, which are automatically converted to vectors and stored in the Milvus database. Steps:

- Navigate to "Knowledge Base Management" and click "Upload Files".
- Select a file. The system converts the content to plain text, generates vectors with an embedding model (e.g., BAAI/bge-m3), and stores them.
- To query, enter a question such as "What AI trends are mentioned in the document?" The system retrieves the relevant content and generates an answer.

- Note: Large files may be slow to process; uploading them in smaller segments is recommended.
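The retrieval steps above (split text, embed the pieces, find the closest ones to the question) can be sketched in a few lines. This is a toy illustration, not Yuxi-Know's pipeline: the real system embeds with a model such as BAAI/bge-m3 and searches in Milvus, whereas here the vectors are hand-made and the search is brute-force cosine similarity. The chunk size and overlap values are assumptions.

```python
import math


def chunk_text(text: str, size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into fixed-size character windows that overlap by `overlap`."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    step = size - overlap
    # Stop before a window would contain only already-covered characters.
    return [text[i:i + size] for i in range(0, max(len(text) - overlap, 1), step)]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: list[float], chunk_vecs: list[list[float]], k: int = 3):
    """Return (score, chunk_index) pairs for the k most similar chunks."""
    scored = [(cosine(query_vec, v), i) for i, v in enumerate(chunk_vecs)]
    scored.sort(reverse=True)
    return scored[:k]
```

The overlap keeps a sentence that straddles a chunk boundary retrievable from at least one chunk; a vector database like Milvus replaces the brute-force loop with an index so the search stays fast on large collections.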

- Web Search

When the local knowledge base cannot answer a question, the system falls back to web search. Make sure TAVILY_API_KEY is configured. Steps:

- Enter a question in the interface, such as "What is the latest AI technology in 2025?"

- The system retrieves information from the web through the Tavily API and combines it with a large model to generate an answer.

- The results will show a link to the source, making it easy to verify the information.
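The article does not say how the system decides that the local knowledge base "cannot answer". One plausible rule is a similarity threshold on the local retrieval results, sketched below together with the numbered source list that makes answers verifiable. The Hit type, the 0.6 threshold, and both function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Hit:
    """One retrieved passage (hypothetical shape)."""
    text: str
    score: float  # similarity score from the retriever, higher is better
    source: str   # file name for local hits, URL for web hits


def needs_web_search(local_hits: list[Hit], threshold: float = 0.6) -> bool:
    """Fall back to web search when no local hit reaches the threshold."""
    return not any(hit.score >= threshold for hit in local_hits)


def format_sources(hits: list[Hit]) -> str:
    """Render numbered source links to show under the generated answer."""
    return "\n".join(f"[{i}] {hit.source}" for i, hit in enumerate(hits, start=1))
```

A threshold keeps the rule simple and tunable; a real system might instead ask the model itself whether the retrieved context answers the question.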

- Tool Calling

Yuxi-Know supports calling external tools via APIs, for example to check the weather or run scripts. Steps:

- In src/static/models.yaml, add the tool configuration:

    tools:
      - name: weather
        url: https://api.weather.com/v3
        api_key: your-key

- Enter in the interface: "What is the weather in Shanghai today?" The system calls the tool and returns the result.
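Once the tools entry is loaded (for example with a YAML parser), calling a tool comes down to finding it by name and building a request from its url and api_key. The sketch below only constructs the URL and sends nothing. How Yuxi-Know actually passes parameters and keys to a tool is not described in the article; the location and apiKey query parameter names are hypothetical, and a real weather API defines its own.

```python
from urllib.parse import urlencode

# Mirrors the tools entry from src/static/models.yaml in the steps above.
TOOLS = [
    {"name": "weather", "url": "https://api.weather.com/v3", "api_key": "your-key"},
]


def find_tool(name: str, tools: list[dict] = TOOLS) -> dict:
    """Look up a configured tool by its name."""
    for tool in tools:
        if tool["name"] == name:
            return tool
    raise KeyError(f"no tool named {name!r}")


def build_tool_url(tool: dict, **params: str) -> str:
    """Append query parameters plus the configured key to the tool URL.

    The parameter names (including apiKey) are hypothetical placeholders.
    """
    query = urlencode({**params, "apiKey": tool["api_key"]})
    return f"{tool['url']}?{query}"
```

For example, `build_tool_url(find_tool("weather"), location="Shanghai")` yields a URL ending in `?location=Shanghai&apiKey=your-key`, which an HTTP client would then fetch.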


Featured Functions

- DeepSeek-R1 Reasoning Model

DeepSeek-R1 is a highlight of Yuxi-Know, intended for complex reasoning tasks. Steps:

- Make sure DEEPSEEK_API_KEY or SILICONFLOW_API_KEY is configured.
- In the interface's model selector, switch to deepseek-r1-250120.
- Enter a question such as "An apple costs $2 more than an orange, and three apples plus two oranges cost $16 in total. How much does an orange cost?" The system reasons through the problem and answers "An orange costs $2."

- Advantage: It can handle multi-step logic problems and gives more reliable answers.
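The expected answer to the example question can be checked independently. With a = o + 2 and 3a + 2o = 16, substitution gives 5o + 6 = 16, so o = 2 and a = 4. The short sketch below performs that substitution with exact fractions; it verifies the arithmetic only and says nothing about how DeepSeek-R1 reasons internally.

```python
from fractions import Fraction


def solve_fruit_prices(diff: int, n_apples: int, n_oranges: int, total: int):
    """Solve a = o + diff and n_apples*a + n_oranges*o = total for (a, o).

    Substituting a gives (n_apples + n_oranges)*o + n_apples*diff = total.
    """
    orange = Fraction(total - n_apples * diff, n_apples + n_oranges)
    apple = orange + diff
    return apple, orange


apple, orange = solve_fruit_prices(diff=2, n_apples=3, n_oranges=2, total=16)
print(f"apple = ${apple}, orange = ${orange}")  # apple = $4, orange = $2
```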


- Multi-model support

The system supports switching between models to suit different scenarios. Steps:

- Select a model from the drop-down menu in the upper-right corner of the interface, such as Qwen2.5-7B-Instruct (SiliconFlow), gpt-4o (OpenAI), or doubao-1-5-pro (Doubao).
- Each model has different strengths; for example, Doubao is good at Chinese semantic understanding, while OpenAI models suit complex tasks.

- Adding a new model: Edit src/static/models.yaml and add a vendor configuration, for example:

    zhipu:
      name: 智谱清言
      url: https://api.zhipuai.com/v1
      default: glm-4-flash
      env:
        - ZHIPUAI_API_KEY

After restarting the service, the new model is ready to use.
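Each vendor entry lists the environment variables it needs under env, so a vendor is only usable once those variables are set. The sketch below checks that condition, assuming the YAML has already been loaded into a dict (for example with PyYAML). How Yuxi-Know itself filters vendors is not described in the article; the function name is hypothetical.

```python
import os
from typing import Mapping, Optional

# Mirrors the zhipu vendor entry from src/static/models.yaml above.
VENDORS = {
    "zhipu": {
        "name": "智谱清言",
        "url": "https://api.zhipuai.com/v1",
        "default": "glm-4-flash",
        "env": ["ZHIPUAI_API_KEY"],
    },
}


def available_vendors(vendors: dict, environ: Optional[Mapping[str, str]] = None) -> list[str]:
    """Return vendor keys whose required environment variables are all non-empty."""
    environ = os.environ if environ is None else environ
    return [
        key
        for key, cfg in vendors.items()
        if all(environ.get(var) for var in cfg.get("env", []))
    ]
```

Passing the environment in explicitly keeps the check easy to test; by default it reads the real process environment, which is where the variables from src/.env end up inside the container.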


- Local Model Deployment

To use a local model (e.g., LLaMA), deploy it with vLLM or Ollama. Steps:

- Start the vLLM service:

    vllm serve /path/to/model --host 0.0.0.0 --port 8000

- In the interface's "Settings", add a local model and enter its URL (e.g., http://localhost:8000/v1).
- After saving, the system will prioritize the local model, which suits environments without network access.
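Before adding the URL in Settings, it can help to confirm that the vLLM server answers on its OpenAI-compatible API. The sketch below uses only the standard library. It assumes the base URL http://localhost:8000/v1 from the example above and that the model name is the path passed to vllm serve, which is vLLM's default when --served-model-name is not set.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000/v1"  # URL entered in the interface's Settings
MODEL = "/path/to/model"  # vLLM's default served name is the path given to `vllm serve`


def build_chat_request(prompt: str, base_url: str = BASE_URL, model: str = MODEL):
    """Build a POST request for the OpenAI-compatible chat completions endpoint."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str) -> str:
    """Send the request and return the first choice's message text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server running, `print(chat("Hello"))` should print a reply. A connection error at this point means the problem lies with the vLLM service itself rather than with Yuxi-Know's configuration.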


- Vector Model Configuration

By default, BAAI/bge-m3 is used to generate vectors. To replace it, edit src/static/models.yaml:

    local/nomic-embed-text:
      name: nomic-embed-text
      dimension: 768

The local model is downloaded automatically; if the download fails, it can be obtained through the HF-Mirror mirror site.
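The dimension field matters because a Milvus vector field has a fixed dimension, and different embedding models produce vectors of different lengths (BAAI/bge-m3 produces 1024-dimensional dense vectors, nomic-embed-text 768). It seems likely, though the article does not say so, that existing knowledge bases need re-indexing after a switch. The sketch below is a hypothetical guard that rejects vectors whose length does not match the configured dimension.

```python
# Mirrors the local/nomic-embed-text entry from src/static/models.yaml above.
EMBED_MODELS = {
    "local/nomic-embed-text": {"name": "nomic-embed-text", "dimension": 768},
}


def check_dimension(model_key: str, vector: list[float], models: dict = EMBED_MODELS) -> list[float]:
    """Raise ValueError if the vector length differs from the configured dimension."""
    expected = models[model_key]["dimension"]
    if len(vector) != expected:
        raise ValueError(f"{model_key} expects {expected}-dim vectors, got {len(vector)}")
    return vector
```

Failing early with a clear message is easier to debug than a dimension-mismatch error raised later by the vector database during insertion.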

Notes

- Network requirements: Web search and model calls require a stable network connection; it is also recommended to check the keys in the .env file.
- Neo4j configuration: If you already run a Neo4j service, you can modify NEO4J_URI in docker-compose.yml to avoid a duplicate deployment.
- Image download: If pulling a Docker image fails, you can use the DaoCloud mirror:

    docker pull m.daocloud.io/docker.io/library/neo4j:latest
    docker tag m.daocloud.io/docker.io/library/neo4j:latest neo4j:latest

- Viewing logs: If a service behaves abnormally, check the container logs:

    docker logs api-dev

Application Scenarios

- Enterprise Knowledge Management

Enterprises can upload internal documents (e.g., operation manuals and technical specifications) to the knowledge base or organize them into a knowledge graph. When employees ask Yuxi-Know questions such as "How do I configure a server?", the system quickly returns answers, reducing training time.

- Academic research support

Researchers can upload papers or organize subject knowledge into knowledge graphs. For example, they can build a graph of relationships between chemical molecules and ask "What chemical bonds can carbon atoms form?" The system combines the graph and documents to return detailed answers and can use web search to add the latest research.

- Intelligent Customer Service System

Merchants can enter product information and frequently asked questions into the system. When a customer asks "How do I return a product?", Yuxi-Know pulls the answer from the knowledge base, or searches online for the latest policy, and provides an accurate response.

FAQ

- How do I upload a knowledge base file?

Navigate to "Knowledge Base Management" in the interface, click "Upload", and select a PDF, TXT, MD, or Docx file. The system processes it automatically and stores it in the Milvus database.

- What configuration does web search require?

Configure TAVILY_API_KEY in the .env file. If you don't have a key, you can sign up for one on the SiliconFlow or Tavily website.

- How do I debug a local model?

Make sure the vLLM or Ollama service listens on 0.0.0.0. After it starts, add the correct URL in the "Settings" section of the interface, then check the connection status with:

    docker logs api-dev

- What should I do if the knowledge graph import fails?

Check the JSONL file format to make sure every line contains the h, t, and r fields. After uploading, restart the Neo4j service and confirm that the nodes carry the Entity label.

© Copyright Notice

The copyright of this article belongs to AI Sharing Circle; please do not reproduce it without permission.
