NVIDIA unveils personal AI supercomputer: NVIDIA Project DIGITS, capable of running big models with 200 billion parameters

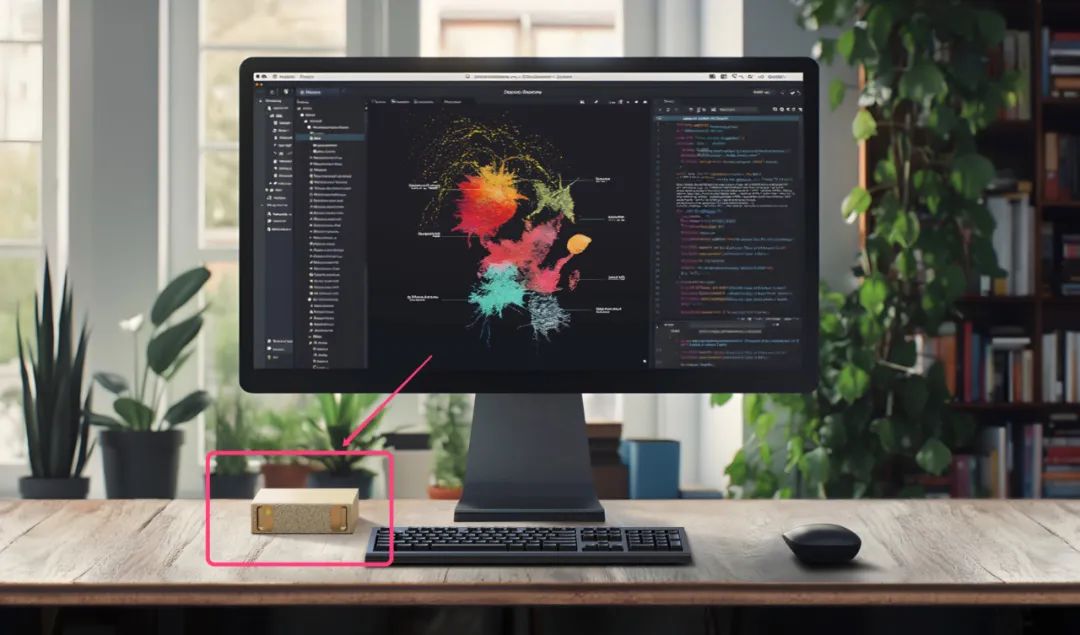

In a nutshell: a personal AI supercomputer that can sit on your desktop

NVIDIA today unveiled Project DIGITS at CES 2025, a personal AI supercomputer that can sit on your desktop.

- Brings AI computing, which has traditionally required large data centers, to everyone's desktop.

- Provides cost-effective computing solutions that support the entire development process, from small-scale experiments to large-scale production.

What does this thing do?

Project DIGITS puts a powerful AI computing tool within reach of ordinary users at their own desks:

Project DIGITS utilizes the new NVIDIA GB10 Grace Blackwell Superchip, which delivers up to 1 PFLOP (one thousand trillion floating-point operations per second) of AI computing performance.

Designed for prototyping, fine-tuning, and running large AI models, it enables users to develop and run inference models on a local desktop system and then deploy them seamlessly to the cloud or data center.

That is, it can run very large AI models locally: large language models with up to 200 billion parameters (for example...).

Models developed and tested on a local machine can then be deployed quickly to the cloud or a data center. In a nutshell: it's like having a pocket-sized AI supercomputer for every developer!

That's all it is. It's smaller than a Mac mini.
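To put the 1 PFLOP figure in perspective, here is a rough sketch of the compute-bound ceiling on token generation. It assumes a common rule of thumb that is not from NVIDIA: a dense transformer needs about 2 floating-point operations per parameter for each generated token. Real decoding speed is usually limited by memory bandwidth and will be far lower, so treat the result as an upper bound only.

```python
# Back-of-the-envelope, compute-bound ceiling on token throughput.
# Assumption (not from NVIDIA): a dense transformer needs roughly
# 2 floating-point operations per parameter for each generated token.

PEAK_FLOPS = 1e15               # 1 PFLOP = 10**15 operations per second (FP4 peak)
FLOPS_PER_PARAM_PER_TOKEN = 2   # rule-of-thumb assumption


def max_tokens_per_second(num_params: float, peak_flops: float = PEAK_FLOPS) -> float:
    """Upper bound on tokens/s if compute were the only limit."""
    flops_per_token = FLOPS_PER_PARAM_PER_TOKEN * num_params
    return peak_flops / flops_per_token


if __name__ == "__main__":
    for params in (8e9, 70e9, 200e9):
        print(f"{params / 1e9:>5.0f}B parameters: "
              f"at most {max_tokens_per_second(params):,.0f} tokens/s")
```

Under these assumptions, a 200-billion-parameter model tops out at about 2,500 tokens per second of pure compute; the helper name and constants are illustrative only.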

What is its core technology?

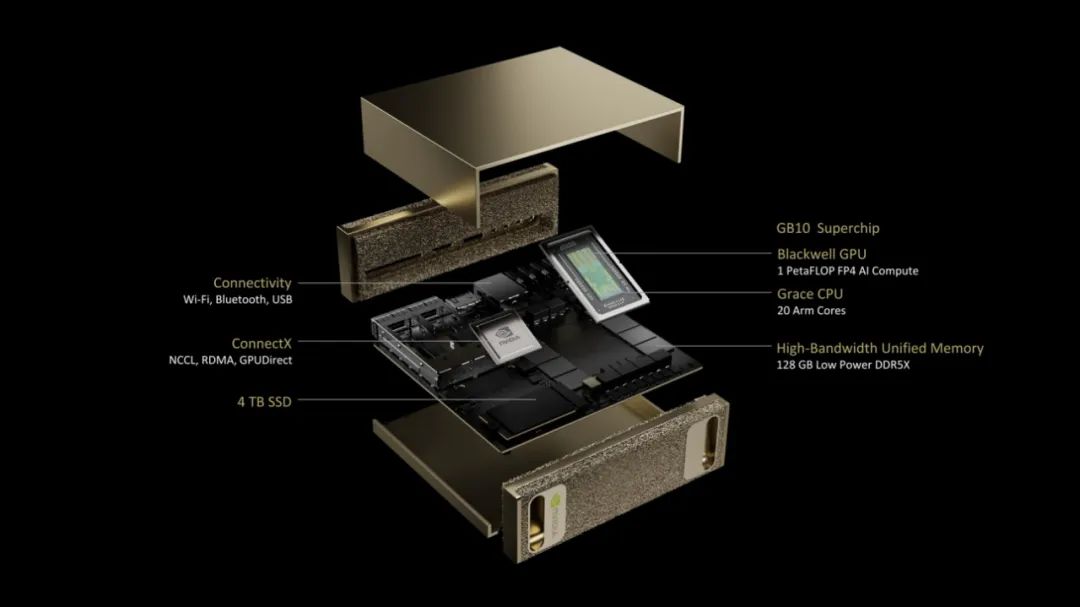

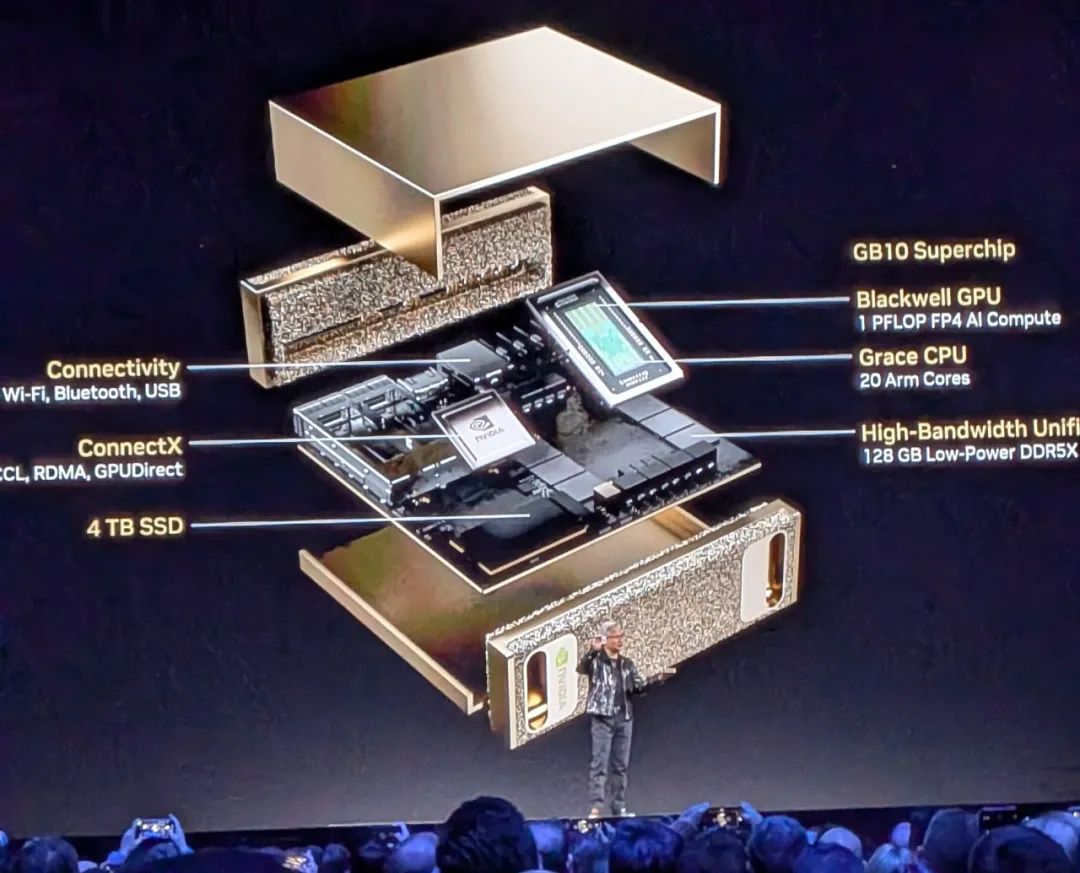

At the heart of Project DIGITS is a chip called the GB10 Superchip:

SoC design: Based on the NVIDIA Grace Blackwell architecture, it integrates an NVIDIA Blackwell GPU (with the latest generation of CUDA cores and 5th-generation Tensor Cores) with a high-performance NVIDIA Grace CPU, in a design optimized for deep learning.

Efficient performance: Delivers 1 PFLOP (one thousand trillion floating-point operations per second) of compute at FP4 precision. The CPU has 20 Arm-based cores that combine low power consumption with high performance.

Energy consumption and storage:

- Runs from a standard power outlet; no special equipment is required

- 128GB of unified memory and 4TB of NVMe storage

Performance: Can run very complex AI workloads, including large language models with up to 200 billion parameters.

Scalability: Using NVIDIA ConnectX networking, two Project DIGITS supercomputers can be linked to run models with up to 405 billion parameters.

Connection Performance: High-speed interconnection of GPU and CPU through NVLink-C2C.
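The 200-billion and 405-billion parameter figures line up with the 128GB of unified memory once FP4 precision is taken into account. The following is a minimal sketch of that arithmetic, assuming 4 bits per weight and 1 GB = 10^9 bytes, and ignoring the KV cache, activations, and runtime overhead (all of which need extra room in practice). The function names are illustrative.

```python
# Rough weight-memory estimate for FP4-quantized models.
# Assumptions: 4 bits per weight, 1 GB = 10**9 bytes, and no allowance for
# the KV cache, activations, or runtime overhead.

BITS_PER_WEIGHT_FP4 = 4
UNIFIED_MEMORY_GB = 128  # per Project DIGITS unit, as stated in the article


def weight_memory_gb(num_params: float, bits_per_weight: int = BITS_PER_WEIGHT_FP4) -> float:
    """Gigabytes needed to hold the model weights alone."""
    return num_params * bits_per_weight / 8 / 1e9


def fits(num_params: float, units: int = 1) -> bool:
    """True if the weights fit in the combined memory of `units` machines."""
    return weight_memory_gb(num_params) <= units * UNIFIED_MEMORY_GB


if __name__ == "__main__":
    for params, units in ((200e9, 1), (405e9, 1), (405e9, 2)):
        print(f"{params / 1e9:.0f}B params on {units} unit(s): "
              f"{weight_memory_gb(params):.1f} GB of weights, fits={fits(params, units)}")
```

Under these assumptions, a 200-billion-parameter model needs about 100 GB for its weights and fits on one unit, while a 405-billion-parameter model needs about 202.5 GB, which exceeds one unit but fits within the 256 GB of two linked units.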

What is it for, and who is it for?

What is it for?

Local Development and Testing: Allows developers to rapidly prototype and experiment with AI models in a local environment.

Cloud Extension: Locally developed models can be migrated directly to the cloud to accelerate deployment.

Efficient Performance and Cost: Provides computing power comparable to large data centers, but at a fraction of the cost and energy consumption.

Diversified application scenarios: supports AI applications in multiple fields such as deep learning, natural language processing, computer vision, and so on.

Who is it for?

- Ideal for AI researchers, data scientists, students, and startups.

- Easy to get started: it needs nothing beyond an ordinary computer setup.

- Flexibility: Seamless from local development to cloud deployment.

Typical Application Scenarios

AI Research and Innovation

Develop and test complex AI models such as large-scale language models (LLM) or generative AI applications.

Perform prototype development, model fine-tuning and experimentation.

Data Science

Use RAPIDS and other tools to quickly process and analyze large data sets.

Accelerate data cleaning, feature engineering and modeling.

Teaching and learning

Affordable AI computing resources for colleges and universities and students to support AI teaching and learning practices.

Software and Ecosystem Support

NVIDIA offers a rich software ecosystem that enables users to quickly begin developing and deploying AI models:

(1) Development tools

NVIDIA NGC: Provides a comprehensive software library of development kits (SDKs), frameworks and pre-trained models.

NVIDIA NeMo: a framework for fine-tuning large language models (LLMs).

NVIDIA RAPIDS: for accelerating data science workflows.

Compatible with popular frameworks: supports popular tools such as PyTorch, Python and Jupyter Notebook.

(2) Deployment support

Users can develop models locally and later deploy them directly to the NVIDIA DGX Cloud or other accelerated cloud and datacenter architectures with no code changes.

(3) Enterprise-level support

Provides NVIDIA AI Enterprise software for enterprise-grade security, support, and production environment releases.

Why is it important?

AI computing power that used to be affordable only for big enterprises or labs is now becoming an affordable tool for the average developer. What this means:

Small teams or individuals can develop and test their own AI models.

The barriers to AI research and innovation are greatly reduced.

Popularization of AI Computing: Driving the popularization of AI technology by lowering the cost of hardware and the difficulty of deployment.

Driving Innovation: Providing individuals and small teams with unprecedented computing power to inspire more innovation.

Ecosystem Integration: Seamlessly integrating NVIDIA's hardware and software ecosystems to provide users with a one-stop solution.

How much? When will it be available?

Release date: May 2025

Price: Starting at $3,000. It will be available from NVIDIA and top-tier partners.

Sign up for notifications on the NVIDIA website.

Meanwhile

NVIDIA has also launched the new GeForce RTX 50 Series of graphics cards and laptops.

These products are based on the Blackwell RTX architecture, which delivers revolutionary performance improvements and AI-driven neural rendering technology.

Delivering up to 8x performance improvements (via DLSS 4) and up to 75% latency reductions (via Reflex 2), the RTX 50 Series opens up a whole new world of possibilities for gamers and content creators.

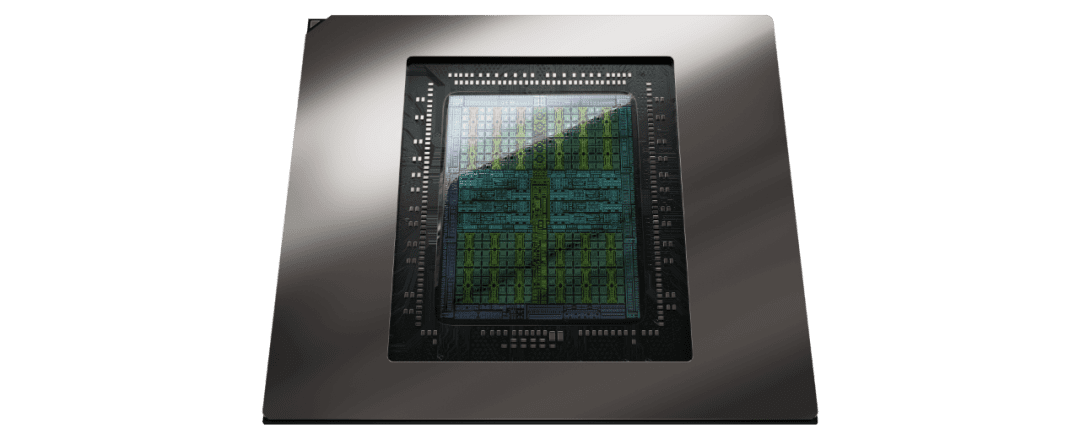

Powerful Blackwell Architecture

Containing 92 billion transistors, it utilizes the latest GDDR7 memory (at speeds up to 30Gbps) to deliver up to 1.8TB/s of memory bandwidth.

A new generation of Tensor cores and Ray Tracing (RT) cores to support real-time rendering and more efficient AI model processing.

DLSS 4 and Reflex 2 support to improve game smoothness and responsiveness.

GeForce RTX 5090

Specification Parameters:

Number of CUDA cores: 21,760

Memory: 32GB GDDR7

Memory bandwidth: 1792 GB/s

Tensor Cores: 680 (5th generation)

RT Cores: 170 (4th generation)

Major performance enhancements:

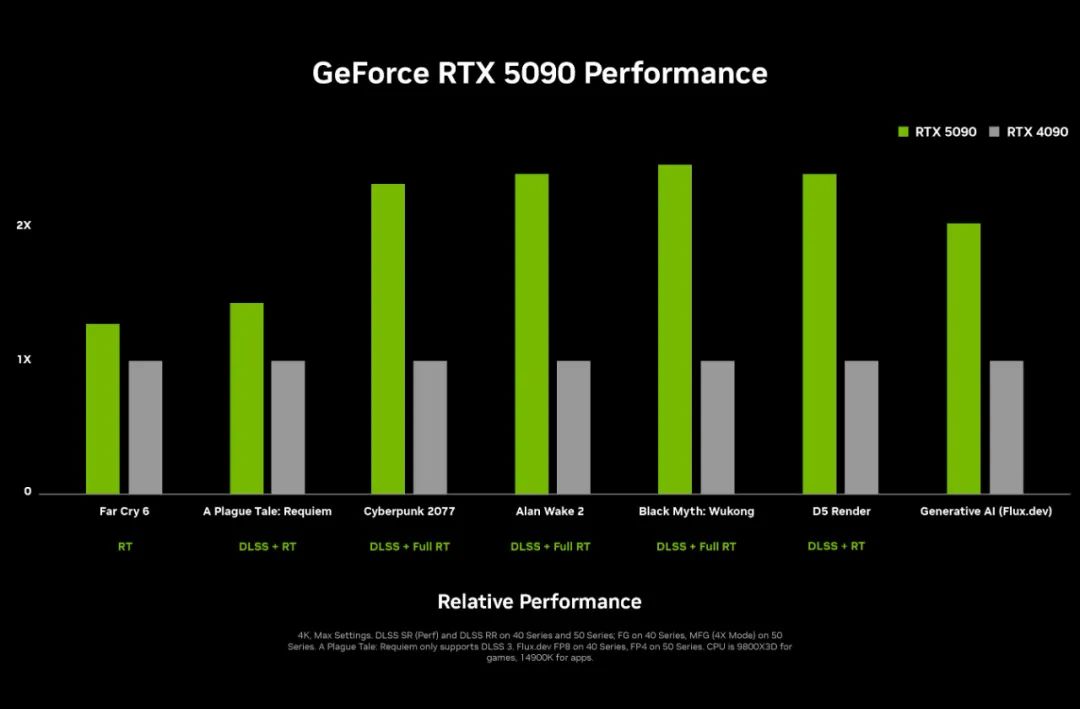

Twice the performance of the RTX 4090.

Supports fully ray-traced gaming at 4K resolution and 240 FPS with DLSS 4 and Multi Frame Generation. Supports generative AI applications with up to 2x faster image generation and a reduced memory footprint (FP4 mode).

Price: Starting price $1,999

Launch date: January 30, 2025

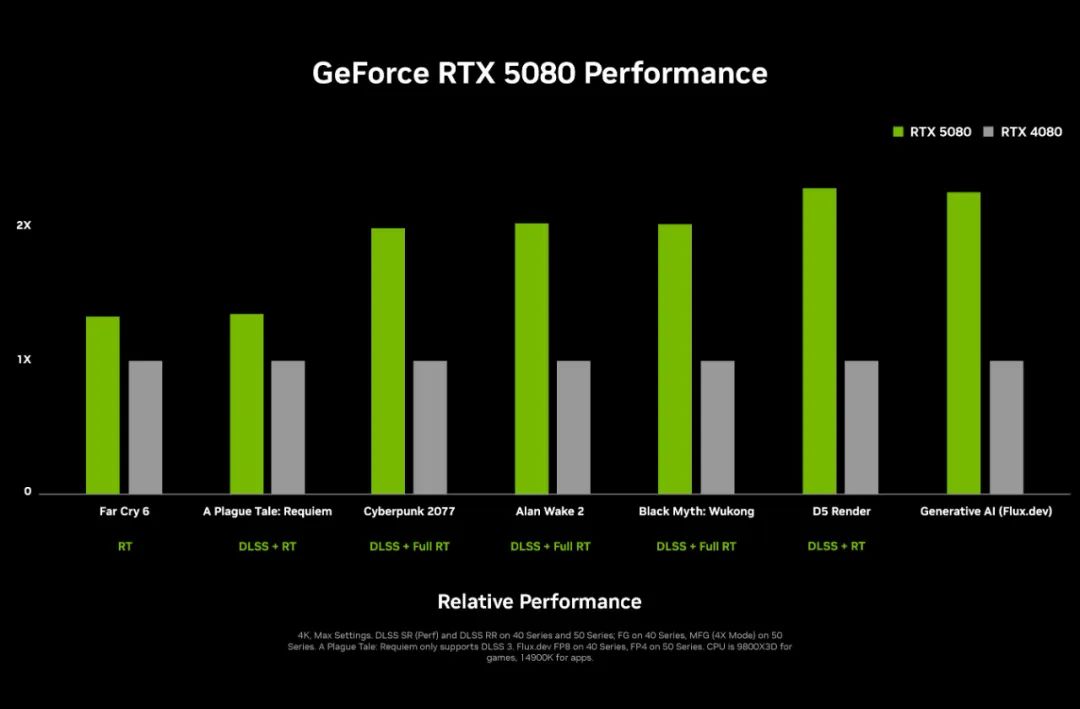

GeForce RTX 5080

Specification Parameters:

Number of CUDA cores: 10,752

Memory: 16GB GDDR7

Memory bandwidth: 960 GB/s

Major performance enhancements:

Twice the performance of the RTX 4080.

Plays ray-traced games such as Cyberpunk 2077, Alan Wake 2, and Black Myth: Wukong at 4K resolution, and meets creators' heavy demands for 3D rendering and video editing.

Price: Starting price $999

Launch date: January 30, 2025

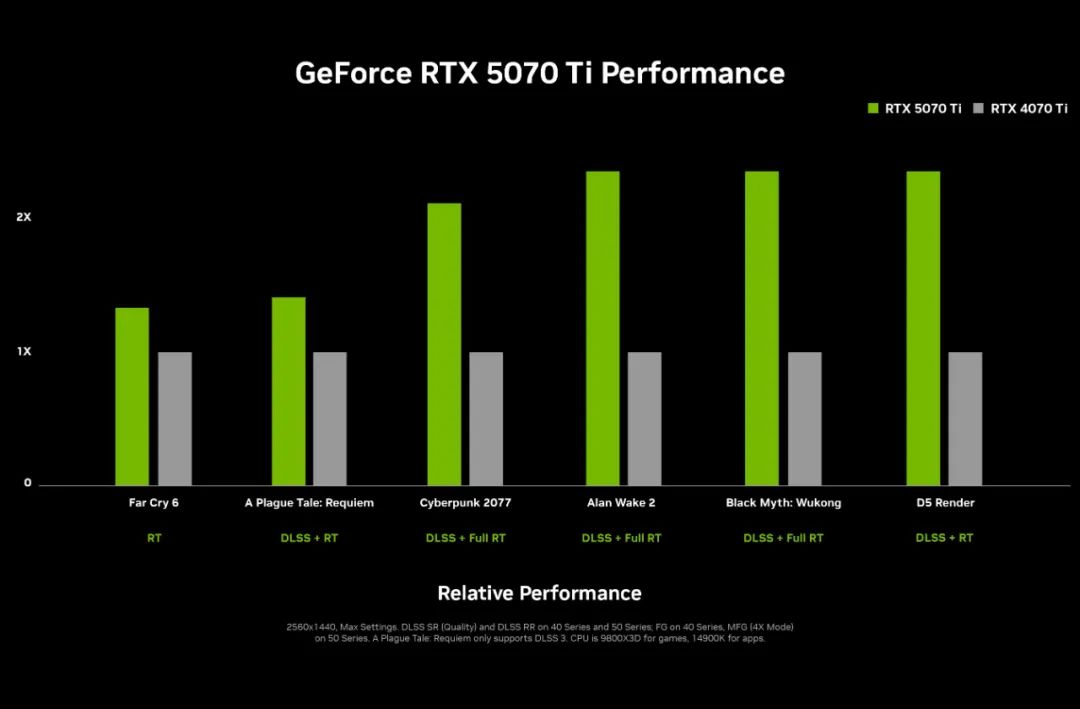

GeForce RTX 5070 Ti

Specification Parameters:

Number of CUDA cores: 8,960

Memory: 16GB GDDR7

Memory bandwidth: 896 GB/s (78% over RTX 4070 Ti)

Major performance enhancements:

2x the performance of the RTX 4070 Ti.

Runs ray-traced games at high frame rates at 2560x1440 resolution.

Price: Starting price $749

Launch date: February 2025

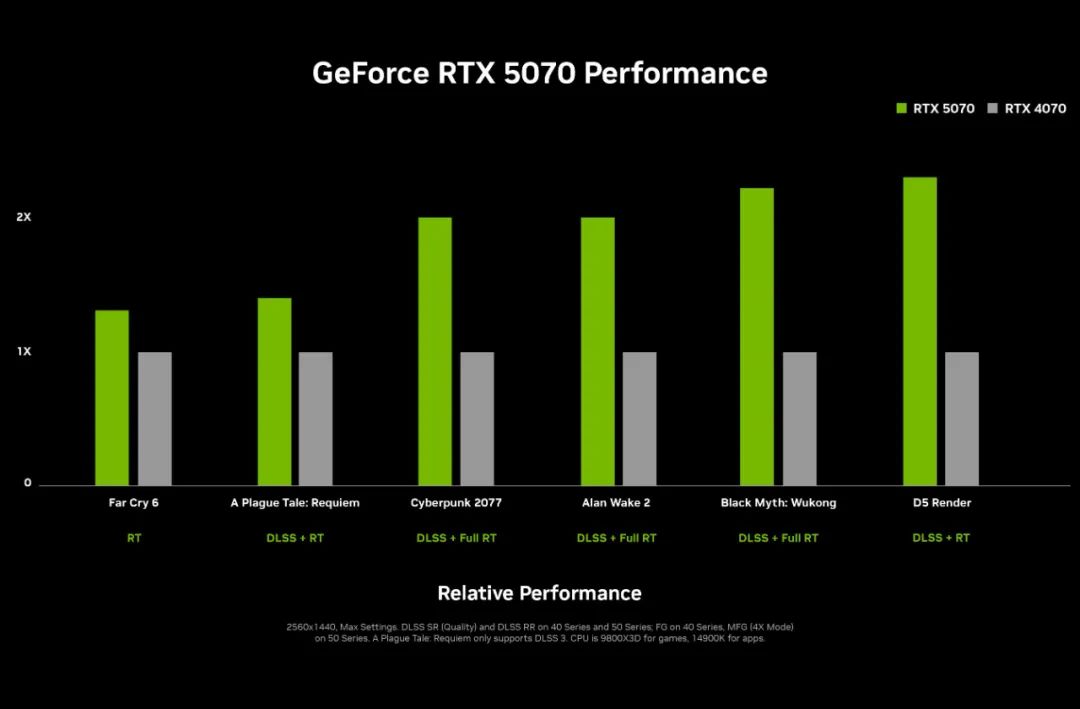

GeForce RTX 5070

Specification Parameters:

Number of CUDA cores: 6,144

Memory: 12GB GDDR7

Memory bandwidth: 672 GB/s (significant improvement over RTX 4070)

Major performance enhancements:

Twice the performance of the RTX 4070.

Runs ray-traced games at high frame rates at 2560x1440 resolution with DLSS Multi Frame Generation support.

Price: Starting price $549

Launch date: February 2025
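The memory bandwidth figures quoted for the four cards can be cross-checked with the usual GDDR formula: bandwidth in GB/s equals the per-pin data rate in Gbps times the bus width in bits, divided by 8. The per-pin data rates and bus widths in this sketch are assumptions taken from commonly published specifications, not from this article; only the quoted bandwidths come from the text.

```python
# Cross-check: bandwidth (GB/s) = per-pin data rate (Gbps) * bus width (bits) / 8.
# The data rates and bus widths are assumptions from commonly published specs;
# the last value in each tuple is the bandwidth quoted in the article.

CARDS = {
    # name: (Gbps per pin, bus width in bits, quoted bandwidth in GB/s)
    "RTX 5090": (28, 512, 1792),
    "RTX 5080": (30, 256, 960),
    "RTX 5070 Ti": (28, 256, 896),
    "RTX 5070": (28, 192, 672),
}


def bandwidth_gb_per_s(gbps_per_pin: float, bus_width_bits: int) -> float:
    """Theoretical peak memory bandwidth in gigabytes per second."""
    return gbps_per_pin * bus_width_bits / 8


if __name__ == "__main__":
    for name, (rate, width, quoted) in CARDS.items():
        computed = bandwidth_gb_per_s(rate, width)
        status = "matches" if computed == quoted else "differs from"
        print(f"{name}: {computed:.0f} GB/s computed, {status} the quoted {quoted} GB/s")
```

With these assumed inputs, the computed values agree with the quoted 1792, 960, 896, and 672 GB/s figures.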

Next Generation Innovative Technology

DLSS 4 Neural Rendering Technology

DLSS 4 is NVIDIA's latest neural rendering technology. Powered by GeForce RTX Tensor Cores, it delivers significant frame rate improvements while maintaining crisp image quality.

Generate up to 3 additional frames per traditional rendering frame

Up to 8x frame rate improvement

Supports 4K 240FPS full ray tracing gaming

First use of a Transformer AI model in games

Improved temporal stability and motion detail
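The frame generation figures above can be related with simple arithmetic. This is a minimal sketch, assuming that each traditionally rendered frame is followed by a fixed number of generated frames, and that the remainder of the "up to 8x" figure comes from rendering each frame faster (for example through upscaling). That split is an interpretation for illustration, not an NVIDIA statement, and the function names are hypothetical.

```python
# Frame-rate arithmetic for multi frame generation.
# Assumption: every rendered frame is followed by a fixed number of generated
# frames, so displayed FPS = rendered FPS * (1 + generated frames).


def displayed_fps(rendered_fps: float, generated_per_rendered: int = 3) -> float:
    """Frames shown per second when generated frames are interleaved."""
    return rendered_fps * (1 + generated_per_rendered)


def required_render_speedup(total_speedup: float = 8.0,
                            generated_per_rendered: int = 3) -> float:
    """Render-side speed-up still needed to reach the total speed-up."""
    return total_speedup / (1 + generated_per_rendered)


if __name__ == "__main__":
    print(displayed_fps(60))          # 60 rendered FPS with 3 generated frames each
    print(required_render_speedup())  # share of an 8x gain not explained by frame generation
```

Under this reading, three generated frames per rendered frame account for a 4x gain (60 rendered FPS becomes 240 displayed FPS), and the remaining 2x would have to come from faster rendering of each frame.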

Reflex 2 Frame Warp technology

NVIDIA Reflex 2 reduces gaming latency by up to 75% with new Frame Warp technology for a more responsive gaming experience.

Reduces game latency by up to 75%

Synchronizes CPU and GPU workflows

Updates rendered frames based on the latest mouse input

Provides a competitive edge in multiplayer games

Makes single-player games more responsive

NVIDIA ACE AI Characters

NVIDIA ACE is a suite of digital character technologies that breathe life into game characters and digital assistants through generative AI.

AI-driven character behavior in games

Enemy AI that learns continuously

Autonomous NPC system

Supported in a number of well-known games

Real-time response to player behavior

Project R2X PC Digital Human

Project R2X is a vision-enabled PC avatar that acts as a desktop assistant, helping users with everyday tasks such as reading and summarizing documents, managing applications, and video conferencing.

Video conferencing support

Document reading and summarization

Connects to GPT4, Grok, and other cloud-based AI services

Supports multiple development frameworks

Real-time assistance with desktop applications

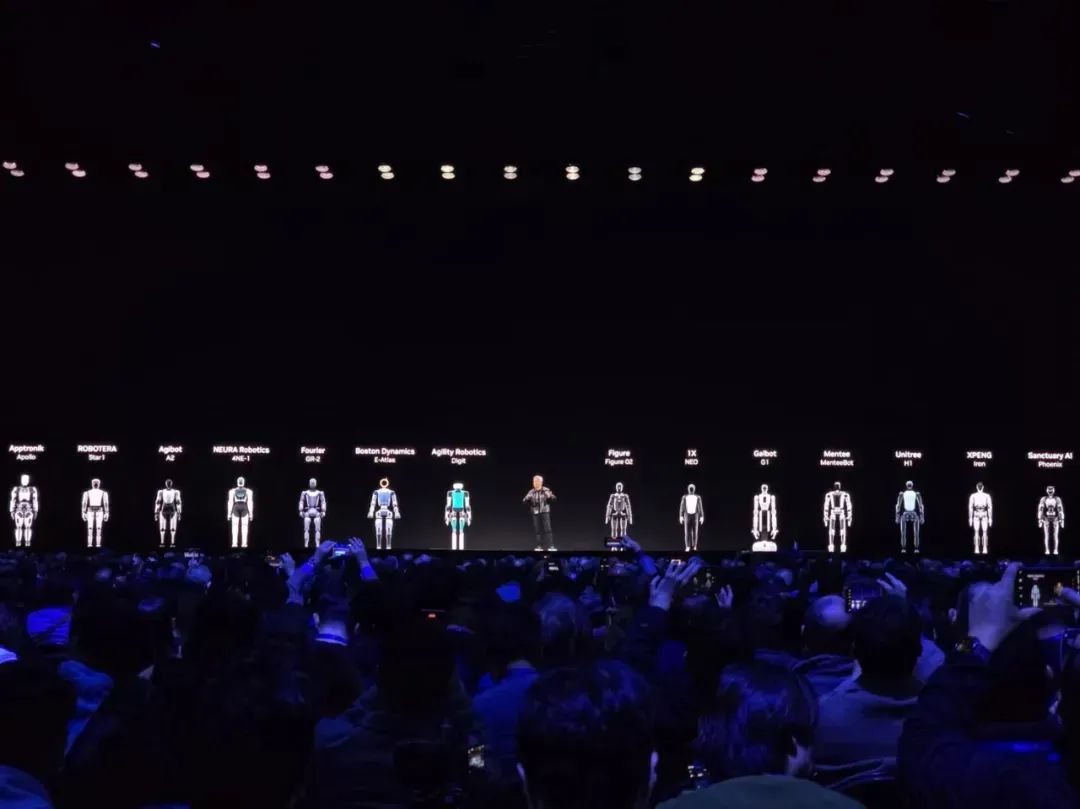

Robot Army

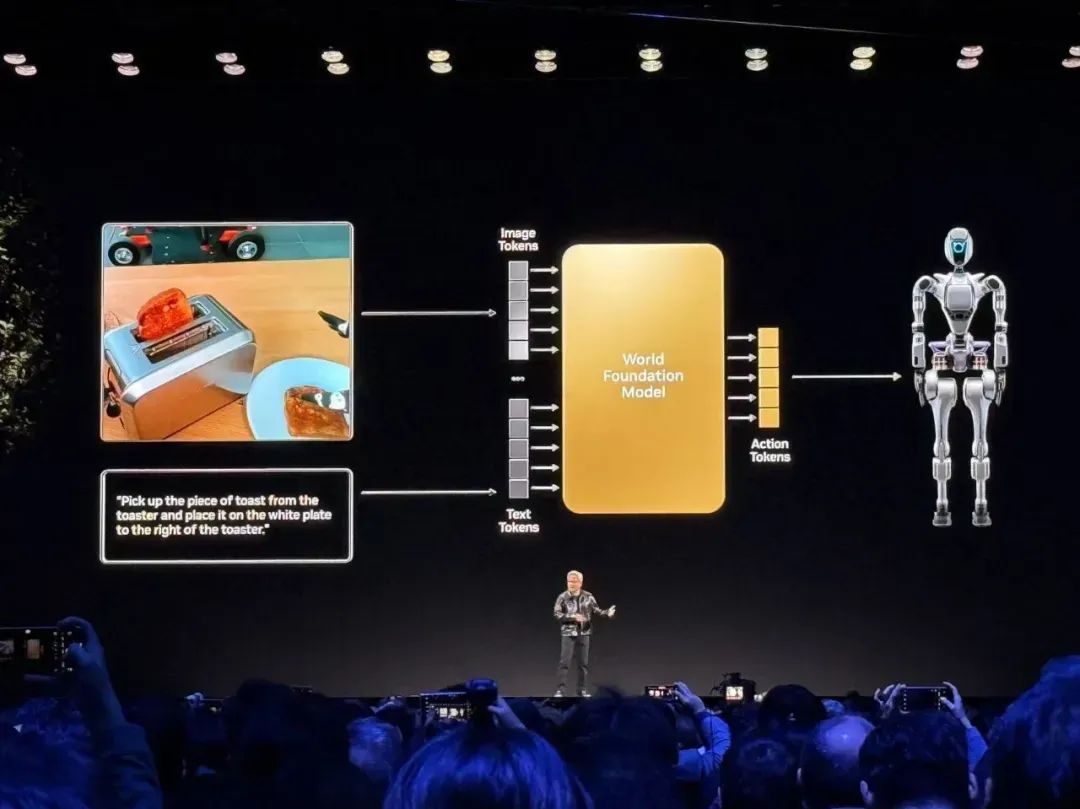

NVIDIA also announced the NVIDIA Cosmos™ platform, an advanced suite of tools designed to accelerate the development of physical AI systems such as self-driving vehicles and robots.

Cosmos includes generative world foundation models (WFMs), advanced video tokenizers, safety guardrails, and an accelerated video processing pipeline.

The platform aims to help developers dramatically reduce the cost of physical AI model development by generating realistic, physically-based synthetic data.

The short answer is: Cosmos is specifically designed to help develop robotics and autonomous driving systems. It generates virtual data and simulated scenarios from AI models, allowing developers to train and test their AI systems faster and cheaper without having to spend a lot of time and money collecting real data.

- Text-to-world and video-to-world generation is possible.

- Three models are offered: Nano (low-latency edge deployment), Super (high-performance baseline model), and Ultra (high-fidelity model).

- Trained on 9,000 trillion tokens, covering 20 million hours of real and synthetic data.

- Tokenizers are 8x more efficient in compression and 12x faster in processing.

Cosmos Key Capabilities

1. Generation of virtual data

- Ultra-realistic virtual scenarios can be created, for example driving conditions in snowy weather or complex robot operations in warehouses.

- This data can be used to train AI systems, reducing the dependence on real-world data.

2. Fast processing of video data

- Cosmos' tools can quickly organize and label large-scale video data more than 10 times faster than traditional methods, saving time and money.

3. Simulation and testing

- Robots and autonomous driving systems can be tested in virtual environments, for example by simulating different weather and road conditions (rain, fog, congestion) or by testing a robot's ability to move around a factory or warehouse.

4. Help develop AI models

- Provides open source base models that developers can customize to their needs for use in robotics or autonomous driving technology.

5. Multiple application scenarios

- For self-driving training: Uber and Waabi, for example, use it to create virtual driving test scenarios.

- For robotics development: Agility and XPENG use it to train and optimize robot operations.

Robotics companies showcased at the event:

International

- Apptronik: Apollo robot

- Agility Robotics: Digit robot

- NEURA Robotics: 4NE-1 robot

- Figure: Figure 02 robot

- 1X: NEO robot

- Mentee: MenteeBot robot

- Sanctuary AI: Phoenix robot

China

- Unitree Robotics: H1 robot

- AgiBot: Expedition A2 robot

- Robot Era: STAR1 robot

- Galbot: G1 robot

- Fourier: GR-2 robot

- XPENG: Iron robot

Summary

This NVIDIA release pushes artificial intelligence further toward practical application: it brings model training and fine-tuning into the consumer-grade market and is likely to fuel rapid growth in AI applications, smart hardware, and robotics.

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce without permission.
