XTuner V1 - Shanghai AI Lab's open-source large model training engine

What is XTuner V1?

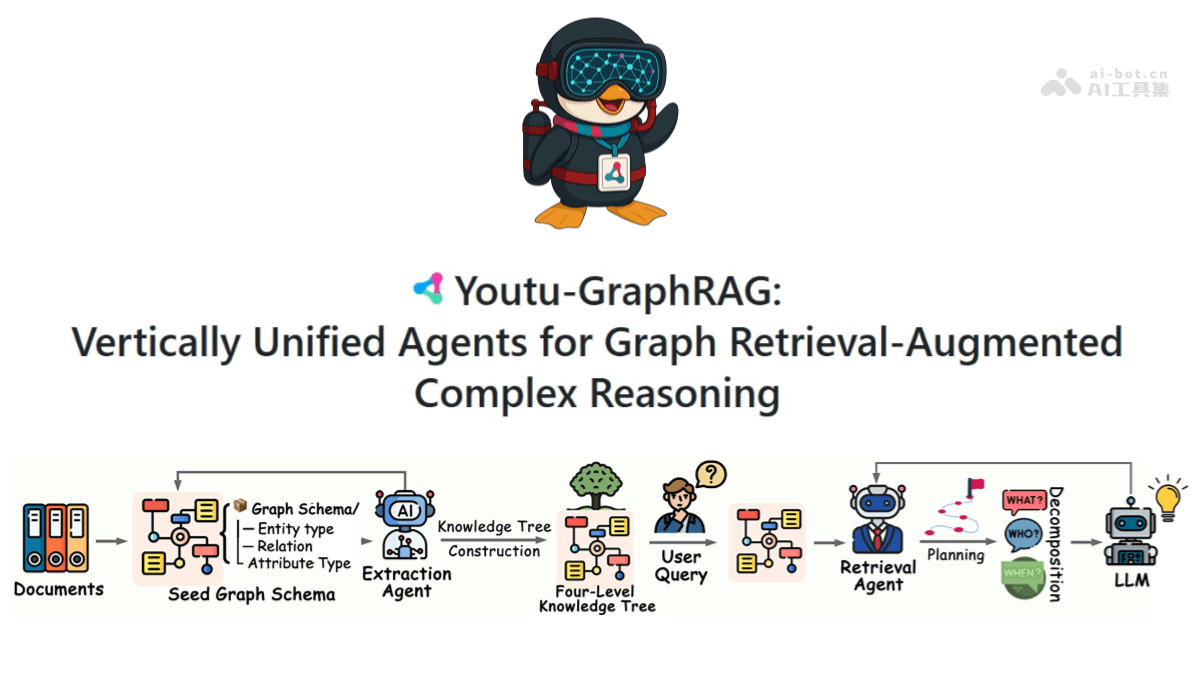

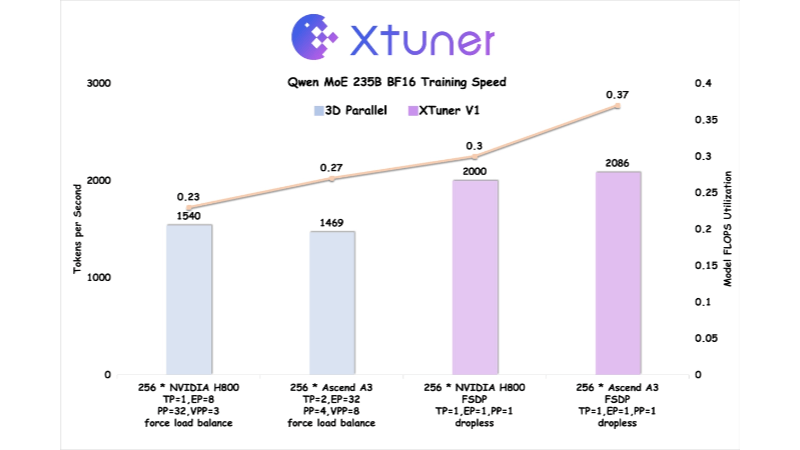

XTuner V1 is a new-generation large model training engine open-sourced by Shanghai Artificial Intelligence Laboratory, designed for training ultra-large-scale sparse Mixture-of-Experts (MoE) models. Built on PyTorch FSDP, it achieves high-performance training through optimizations across memory, communication, and load balancing. XTuner V1 supports MoE training with up to 1 trillion parameters and, for the first time, surpasses traditional 3D parallel schemes in training throughput for models above 200 billion parameters. It supports training at 64k sequence lengths without sequence parallelism, significantly reduces reliance on expert parallelism, and improves long-sequence training efficiency.

Features of XTuner V1

- Dropless training: 200-billion-parameter MoE models can be trained without expert parallelism, and 600-billion-parameter models require only intra-node expert parallelism.

- Long sequence support: Trains 200-billion-parameter MoE models at 64k sequence lengths without sequence parallelism.

- High efficiency: Supports MoE training with up to 1 trillion parameters; for models above 200 billion parameters, training throughput exceeds that of traditional 3D parallelism.

- GPU memory optimization: Reduces peak memory usage through the automatic Chunk Loss mechanism and Async Checkpointing Swap.

- Communication overlap: Hides communication time behind computation through memory optimization and Intra-Node Domino-EP.

- DP load balancing: Mitigates the idle compute bubbles caused by variable-length attention and keeps the load balanced across the data-parallel dimension.

- Hardware co-optimization: Optimized for the Ascend A3 NPU supernode in cooperation with Huawei Ascend, where training efficiency exceeds that of the NVIDIA H800.

- Open source and toolchain support: XTuner V1, DeepTrace, and ClusterX are open-sourced, providing end-to-end tooling support.
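To make the Chunk Loss idea from the list above more concrete, here is a minimal sketch in plain Python. It is not XTuner V1's implementation; the function names (`project`, `full_loss`, `chunked_loss`) and the toy sizes are assumptions made for illustration. It shows only the forward arithmetic: computing cross-entropy one chunk of the sequence at a time gives the same mean loss as building the logits for the whole sequence first, while never holding the full sequence-by-vocabulary logits table.

```python
import math
import random


def project(h, weight):
    """Logits for one token: dot product of its hidden vector with each vocabulary row."""
    return [sum(a * b for a, b in zip(h, row)) for row in weight]


def cross_entropy(logits, target):
    """Per-token cross-entropy via a numerically stable log-sum-exp."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(x - m) for x in logits))
    return lse - logits[target]


def full_loss(hidden, weight, targets):
    """Baseline: build the logits of every token first, then average the loss."""
    all_logits = [project(h, weight) for h in hidden]  # seq_len x vocab held at once
    return sum(cross_entropy(l, t) for l, t in zip(all_logits, targets)) / len(targets)


def chunked_loss(hidden, weight, targets, chunk_size):
    """Same mean loss, with logits built one chunk at a time instead of all at once."""
    total = 0.0
    for start in range(0, len(hidden), chunk_size):
        end = start + chunk_size
        chunk_logits = [project(h, weight) for h in hidden[start:end]]
        total += sum(cross_entropy(l, t) for l, t in zip(chunk_logits, targets[start:end]))
    return total / len(targets)


# Toy sizes (hypothetical): 16 tokens, hidden size 4, vocabulary of 10.
rng = random.Random(0)
hidden = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(16)]
weight = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(10)]
targets = [rng.randrange(10) for _ in range(16)]

print(full_loss(hidden, weight, targets))
print(chunked_loss(hidden, weight, targets, chunk_size=5))
```

The two printed values should agree up to floating-point rounding. In a real training framework the memory saving also depends on how gradients are handled for each chunk, which this sketch leaves out.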

Core Benefits of XTuner V1

- Efficient training: Supports MoE training with up to 1 trillion parameters, with training throughput exceeding traditional 3D parallel schemes for models above 200 billion parameters.

- Long sequence processing: Trains 200-billion-parameter MoE models at 64k sequence lengths without sequence parallelism, which suits long-text scenarios such as reinforcement learning.

- Low resource requirements: 200-billion-parameter models do not require expert parallelism, and 600-billion-parameter models require only intra-node expert parallelism, which reduces hardware resource requirements.

- GPU memory optimization: Automatic Chunk Loss and Async Checkpointing Swap significantly reduce memory spikes, making it possible to train larger models.

- Communication optimization: Hides communication time behind computation through memory optimization and Intra-Node Domino-EP, reducing the impact of communication overhead on training efficiency.

- Load balancing: Mitigates the idle compute bubbles caused by variable-length attention, keeps the load balanced across the data-parallel dimension, and improves training efficiency.
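The load balancing point above can be illustrated with a small sketch. The source does not describe the algorithm XTuner V1 uses, so this is a generic greedy heuristic (longest sequence first, always onto the least-loaded rank), not the project's method. The names `attention_cost`, `balance_by_cost`, and `max_load` are hypothetical, and the quadratic cost model is an assumption standing in for the compute cost of variable-length attention.

```python
import heapq


def attention_cost(seq_len):
    """Assumed cost model: attention compute grows with the square of sequence length."""
    return seq_len * seq_len


def balance_by_cost(seq_lens, num_ranks):
    """Greedy assignment: take sequences longest first, give each to the least-loaded rank."""
    heap = [(0, rank) for rank in range(num_ranks)]  # (accumulated cost, rank id)
    heapq.heapify(heap)
    assignment = [[] for _ in range(num_ranks)]
    for n in sorted(seq_lens, reverse=True):
        load, rank = heapq.heappop(heap)
        assignment[rank].append(n)
        heapq.heappush(heap, (load + attention_cost(n), rank))
    return assignment


def max_load(assignment):
    """Cost on the busiest rank; every other rank idles until this one finishes."""
    return max(sum(attention_cost(n) for n in seqs) for seqs in assignment)


# Hypothetical batch of variable-length sequences split across 2 data-parallel ranks.
lens = [8192, 512, 512, 4096, 4096, 1024, 256, 2048]
balanced = balance_by_cost(lens, num_ranks=2)
print(balanced, max_load(balanced))
```

The gap between the busiest rank and the others is the idle time every step has to absorb, so lowering `max_load` is the quantity a balancing scheme of this kind targets.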

What is the official website for XTuner V1?

- Project website: https://xtuner.readthedocs.io/zh-cn/latest/

- GitHub repository: https://github.com/InternLM/xtuner

Who XTuner V1 is for

- Large model researchers: For researchers who need to train ultra-large-scale sparse Mixture-of-Experts (MoE) models, XTuner V1 provides a high-performance training engine that supports models with up to 1 trillion parameters.

- Deep learning engineers: For engineers engaged in large-scale distributed training, XTuner V1 provides optimized communication and memory management features that significantly improve training efficiency.

- AI infrastructure developers: For developers focused on hardware co-optimization and high-performance computing, XTuner V1 was developed in cooperation with Huawei's Ascend team and provides deep hardware-specific optimizations.

- Open source community contributors: For developers who are interested in open source projects and want to contribute, XTuner V1's open source code offers plenty of room for development and optimization work.

- Enterprise AI teams: For enterprise teams that need an efficient, low-barrier solution for training large models, XTuner V1 provides easy-to-use, high-performance toolchain support.

© Copyright notes

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce without permission.
