XRAG: A Visual Evaluation Tool for Optimizing Retrieval-Augmented Generation Systems

General Introduction

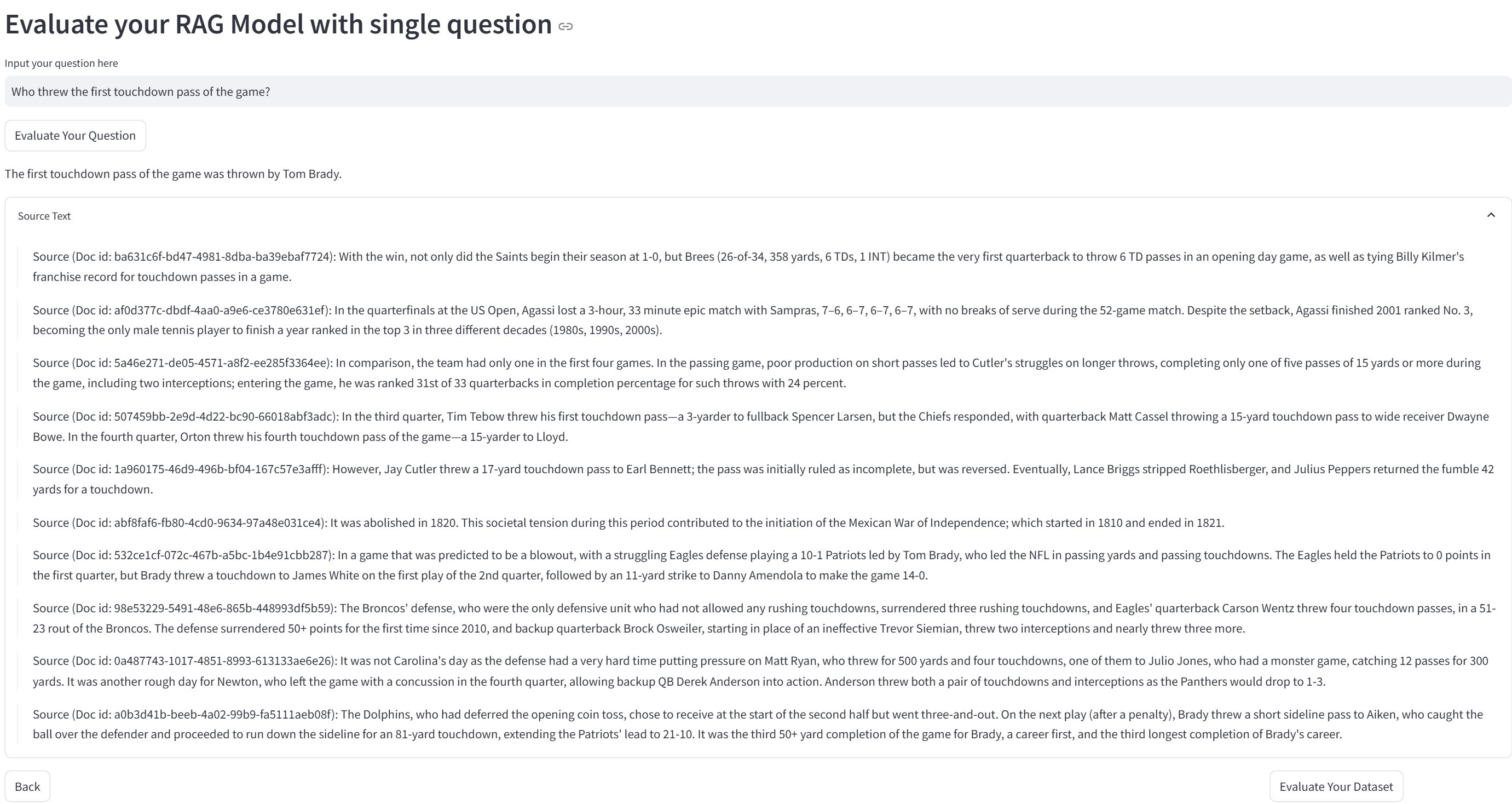

XRAG (eXamining the Core) is a benchmarking framework for evaluating the underlying components of advanced retrieval-augmented generation (RAG) systems. By profiling and analyzing each core module, XRAG shows how different configurations and components affect a RAG system's overall performance. The framework supports multiple retrieval methods and evaluation metrics to help researchers and developers optimize every part of a RAG system.

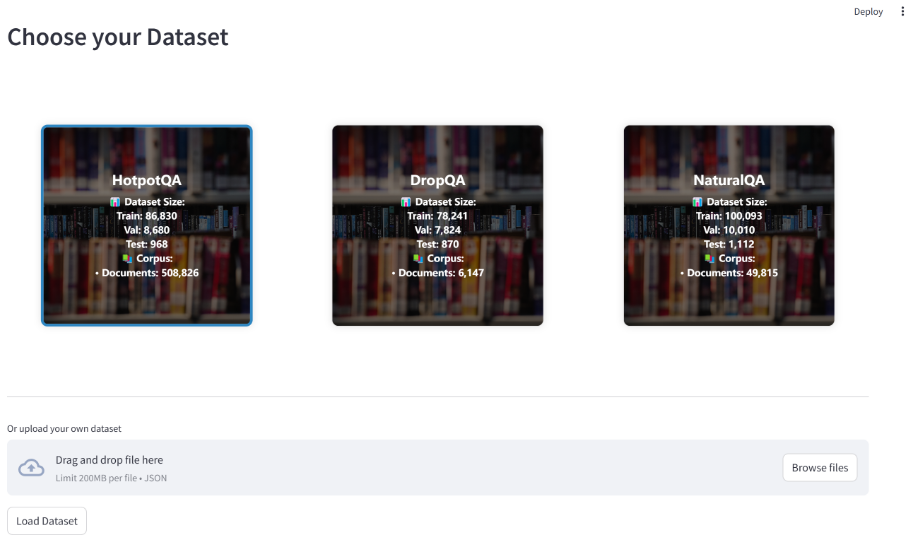

XRAG provides a comprehensive RAG evaluation benchmark and toolkit. It covers 50+ evaluation metrics, supports end-to-end RAG evaluation and failure-point optimization, and allows comparison of 4 types of advanced RAG modules: query reconstruction, advanced retrieval, question-answering models, and post-processing. Each module integrates several concrete implementations, and the OpenAI large model API is supported. XRAG 1.0 also provides a simple Web UI demo, lightweight interactive data upload with a unified standard format, and integrated RAG failure detection and optimization methods. The paper and code are now open source.
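To make the idea of swappable modules concrete, here is a minimal sketch of a RAG pipeline whose four stages are plain functions, one per module category. It assumes post-processing acts on retrieved passages before generation. The class name RAGPipeline, the stage signatures, and the toy stage implementations are all hypothetical illustrations, not XRAG's actual API.

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Set

# Hypothetical stage signatures, one per module category.
QueryRewriter = Callable[[str], str]                   # query reconstruction
Retriever = Callable[[str, int], List[str]]            # advanced retrieval
PostProcessor = Callable[[str, List[str]], List[str]]  # post-processing of retrieved passages
Generator = Callable[[str, List[str]], str]            # question-answering model


@dataclass
class RAGPipeline:
    rewrite: QueryRewriter
    retrieve: Retriever
    postprocess: PostProcessor
    generate: Generator
    top_k: int = 2

    def answer(self, question: str) -> str:
        query = self.rewrite(question)
        passages = self.retrieve(query, self.top_k)
        passages = self.postprocess(query, passages)
        return self.generate(question, passages)


# --- Toy stage implementations so the sketch runs end to end ---
CORPUS = [
    "Ollama runs large language models locally.",
    "BM25 is a lexical ranking function.",
    "ChromaDB is a vector database.",
]


def tokens(text: str) -> Set[str]:
    return set(re.findall(r"\w+", text.lower()))


def normalize_query(question: str) -> str:
    # Stand-in for query reconstruction: strip punctuation and lowercase.
    return re.sub(r"[^\w\s]", "", question).lower().strip()


def overlap_retrieve(query: str, k: int) -> List[str]:
    # Rank passages by shared words; sorted() is stable, so ties keep corpus order.
    q = tokens(query)
    return sorted(CORPUS, key=lambda doc: -len(q & tokens(doc)))[:k]


def drop_unrelated(query: str, passages: List[str]) -> List[str]:
    # Stand-in for post-processing: discard passages sharing no words with the query.
    q = tokens(query)
    return [p for p in passages if q & tokens(p)]


def first_passage(question: str, passages: List[str]) -> str:
    # Stand-in for the QA model: return the top passage, or abstain.
    return passages[0] if passages else "I don't know."


pipeline = RAGPipeline(normalize_query, overlap_retrieve, drop_unrelated, first_passage)
print(pipeline.answer("What does Ollama run?"))
```

Comparing configurations then amounts to building several RAGPipeline instances that differ in one stage and scoring their answers on the same questions.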

Feature List

- Integrated evaluation framework: Supports multi-dimensional evaluation, including LLM-based evaluation, in-depth evaluation, and traditional metrics.

- Flexible architecture: Modular design that supports multiple retrieval methods and custom retrieval strategies.

- Multi-LLM support: Seamless integration with OpenAI models and support for local models (e.g., Qwen, LLaMA).

- Rich set of evaluation metrics: Includes traditional metrics (F1, EM, MRR, etc.) and LLM-based metrics (truthfulness, relevance, etc.).

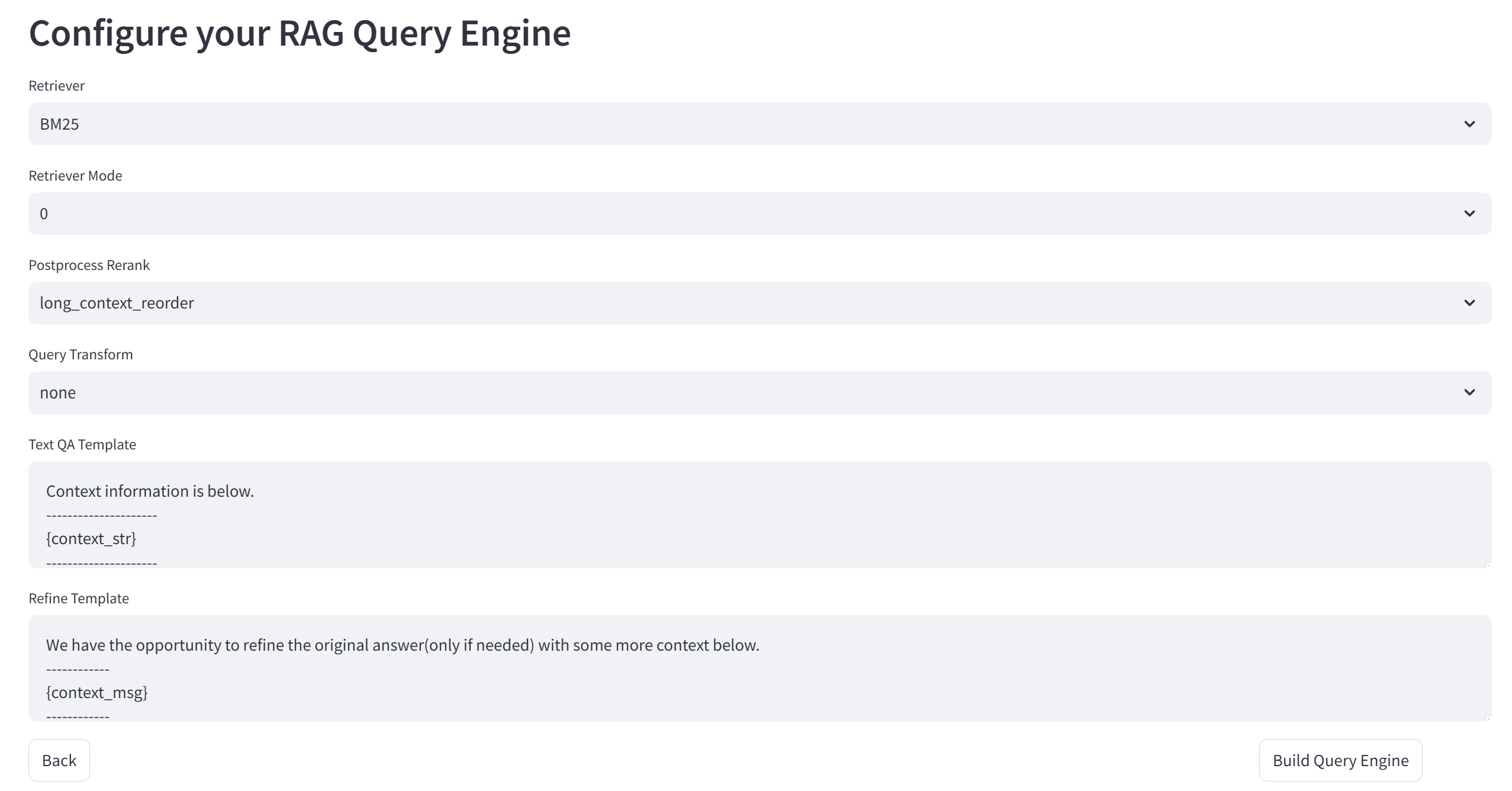

- Advanced retrieval methods: Supports BM25, vector semantic search, tree-structured retrieval, and other retrieval methods.

- User-friendly interface: Provides a command-line interface and a Web UI for interactive evaluation and visualization.
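The traditional metrics named above have widely used definitions. The sketch below shows common formulations: exact match (EM) and token-level F1 after SQuAD-style answer normalization, and mean reciprocal rank (MRR) over ranked retrieval results. XRAG's own normalization and tokenization may differ, so treat this as an illustration of the metrics rather than XRAG's implementation.

```python
import re
import string
from collections import Counter
from typing import Sequence, Set

PUNCT = set(string.punctuation)


def normalize(text: str) -> str:
    """Lowercase, drop punctuation and English articles, collapse whitespace (SQuAD-style)."""
    text = "".join(ch for ch in text.lower() if ch not in PUNCT)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, reference: str) -> float:
    return float(normalize(prediction) == normalize(reference))


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token precision and recall (tokens counted with multiplicity)."""
    pred = normalize(prediction).split()
    ref = normalize(reference).split()
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def mean_reciprocal_rank(rankings: Sequence[Sequence[str]], relevant: Sequence[Set[str]]) -> float:
    """Average over queries of 1/rank of the first relevant result (0 if none is retrieved)."""
    total = 0.0
    for ranking, rel in zip(rankings, relevant):
        for rank, doc_id in enumerate(ranking, start=1):
            if doc_id in rel:
                total += 1.0 / rank
                break
    return total / len(rankings) if rankings else 0.0


print(exact_match("The Eiffel Tower", "eiffel tower"))   # 1.0
print(round(token_f1("Paris, France", "Paris"), 3))       # 0.667
print(mean_reciprocal_rank([["d3", "d1"], ["d2", "d5"]], [{"d1"}, {"d9"}]))  # 0.25
```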

How to Use

Installation process

- Clone the repository:

git clone https://github.com/DocAILab/XRAG.git

cd XRAG

- Install dependencies:

pip install -r requirements.txt

- Configure the environment: Edit the config.toml file as required to set model parameters and API settings.

Usage Guidelines

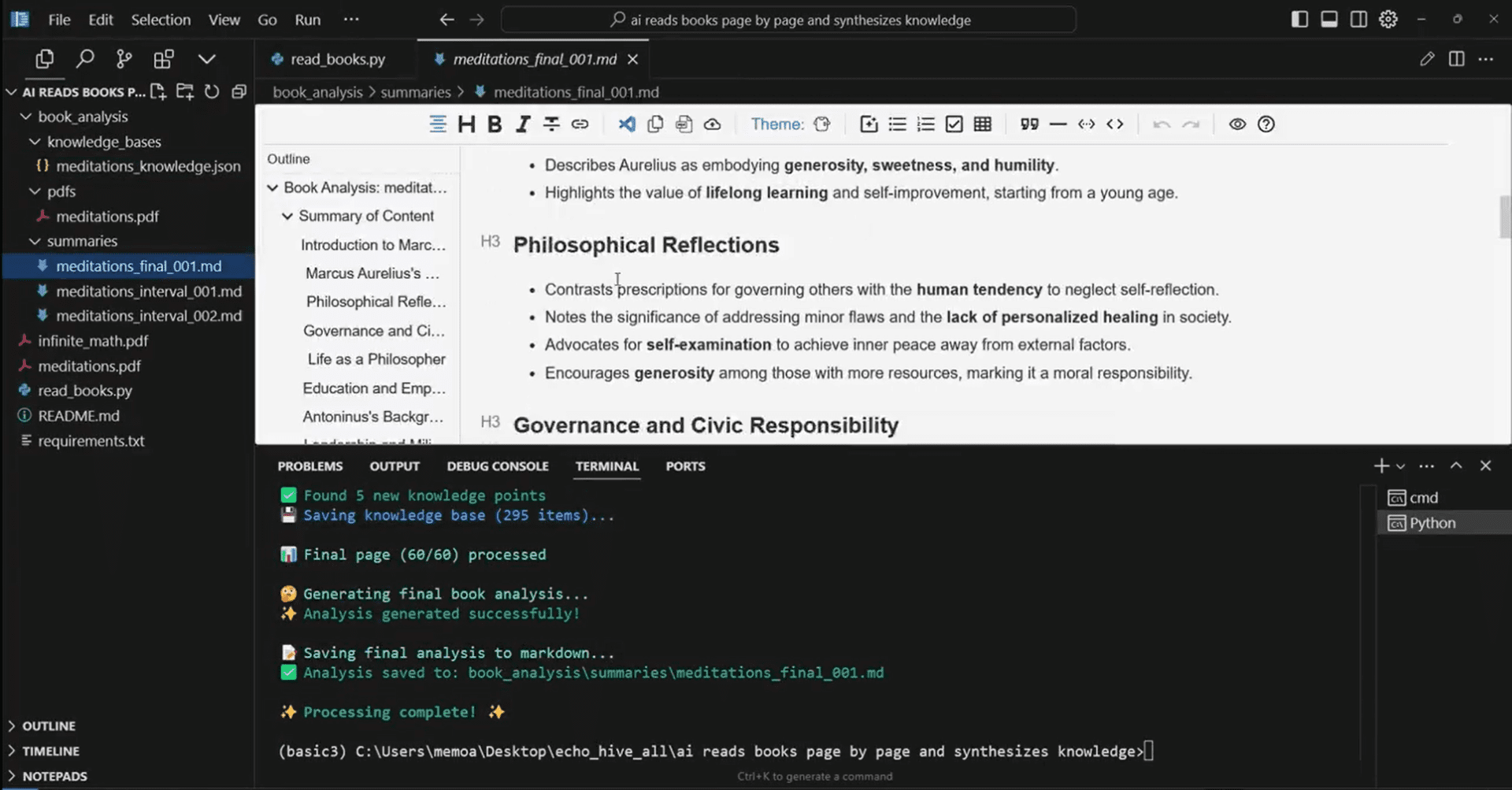

- Launch the Web UI:

xrag-cli webui

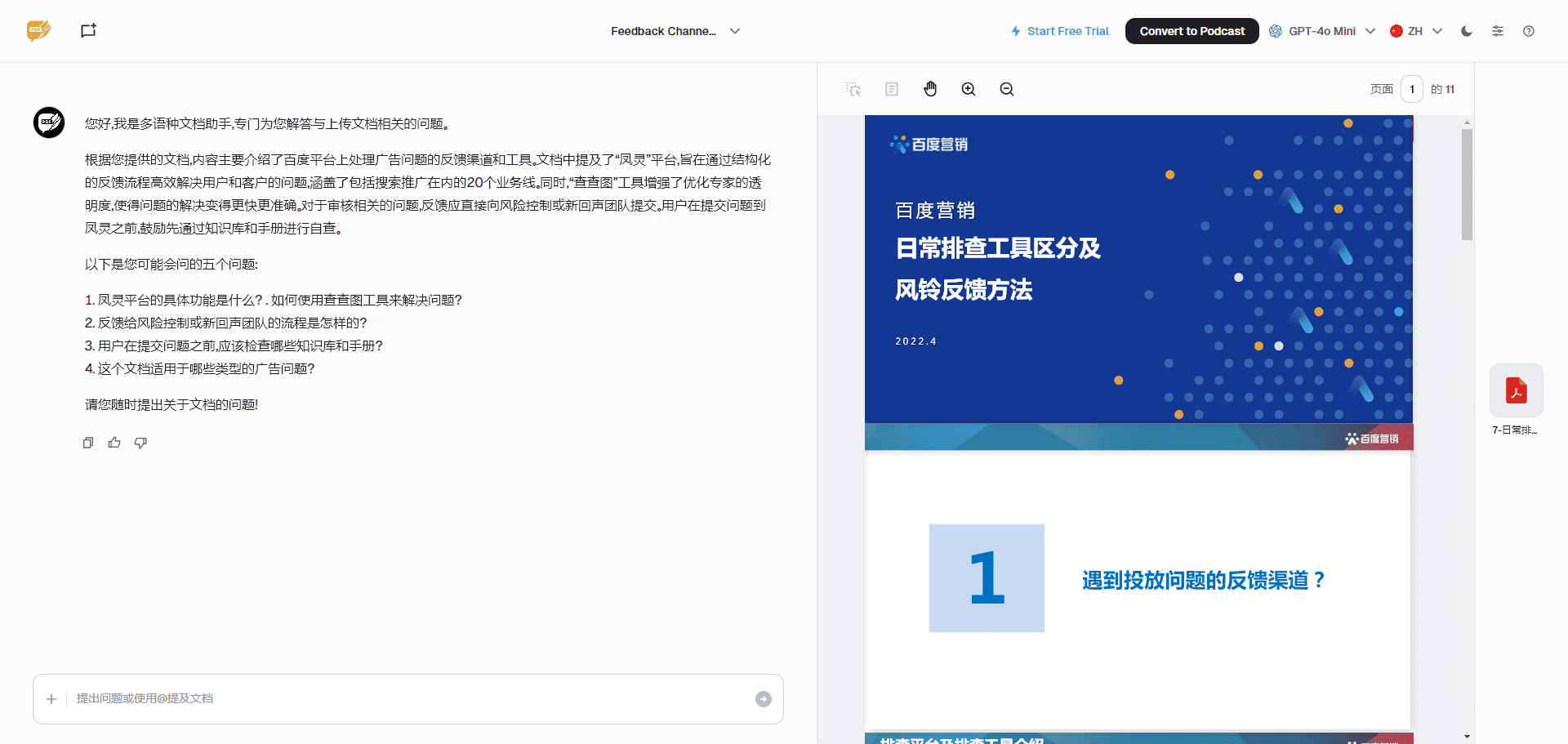

Upload and configure datasets through the Web UI, which supports multiple benchmark datasets (e.g., HotpotQA, DropQA, NaturalQA).

- Run an evaluation: Use the command-line tool:

xrag-cli evaluate --dataset <dataset_path> --config <config_path>

The tool generates detailed reports and visualization charts from the evaluation results.

- Customize retrieval strategies: Modify the code in the src/xrag directory to add or adjust retrieval strategies and evaluation models.
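As an example of a retrieval strategy one might add, here is a self-contained Okapi BM25 ranker, BM25 being one of the retrieval methods the framework lists. It is written against a hypothetical rank(query, k) interface; the article does not describe XRAG's actual extension points in src/xrag, so plugging this in would require adapting it to that code. The IDF uses the non-negative variant log(1 + (N - df + 0.5) / (df + 0.5)), and k1 = 1.5, b = 0.75 are common defaults, not values taken from XRAG.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def tokenize(text: str) -> List[str]:
    return re.findall(r"\w+", text.lower())


class BM25Retriever:
    """Okapi BM25 ranking over an in-memory list of documents."""

    def __init__(self, documents: List[str], k1: float = 1.5, b: float = 0.75):
        if not documents:
            raise ValueError("BM25Retriever needs at least one document")
        self.documents = documents
        self.k1 = k1
        self.b = b
        self.doc_tokens = [tokenize(d) for d in documents]
        self.doc_freqs = [Counter(toks) for toks in self.doc_tokens]
        self.avgdl = sum(len(toks) for toks in self.doc_tokens) / len(documents)
        n = len(documents)
        # Document frequency: in how many documents each term appears.
        df = Counter(term for toks in self.doc_tokens for term in set(toks))
        self.idf = {t: math.log(1 + (n - f + 0.5) / (f + 0.5)) for t, f in df.items()}

    def score(self, query: str, index: int) -> float:
        freqs = self.doc_freqs[index]
        doc_len = len(self.doc_tokens[index])
        total = 0.0
        for term in tokenize(query):
            tf = freqs.get(term, 0)
            if tf == 0:
                continue
            # Term-frequency saturation (k1) and document-length normalization (b).
            norm = tf + self.k1 * (1 - self.b + self.b * doc_len / self.avgdl)
            total += self.idf[term] * tf * (self.k1 + 1) / norm
        return total

    def rank(self, query: str, k: int = 3) -> List[Tuple[str, float]]:
        scored = [(doc, self.score(query, i)) for i, doc in enumerate(self.documents)]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


docs = [
    "BM25 is a bag-of-words ranking function",
    "Vector search uses dense embeddings",
    "Tree-structured retrieval organizes chunks hierarchically",
]
print(BM25Retriever(docs).rank("dense vector embeddings", k=1))
```

BM25 is purely lexical, which is why frameworks like XRAG pair it with vector semantic search rather than using it alone.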

Main Workflow

- Dataset upload and configuration: Upload the dataset via the Web UI and configure it as necessary.

- Evaluation run: Select the evaluation metrics and retrieval methods, run the evaluation, and view the results.

- Result analysis: Use the generated reports and visual charts to analyze the evaluation results and optimize system performance.
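To repeat the evaluation step across several datasets, one option is to script the xrag-cli evaluate command from the usage guidelines in Python. This sketch uses only the --dataset and --config flags given in this article; the dataset paths are placeholders, and it assumes xrag-cli is installed and on PATH. The dry_run switch just prints the commands.

```python
import shutil
import subprocess
from typing import Dict, List


def build_eval_command(dataset_path: str, config_path: str) -> List[str]:
    """Assemble the xrag-cli evaluate invocation described in the usage guidelines."""
    return ["xrag-cli", "evaluate", "--dataset", dataset_path, "--config", config_path]


def evaluate_all(dataset_paths: List[str], config_path: str, dry_run: bool = False) -> Dict[str, int]:
    """Run one evaluation per dataset and return each run's exit code (0 means success)."""
    if not dry_run and shutil.which("xrag-cli") is None:
        raise FileNotFoundError("xrag-cli not found on PATH; install XRAG first")
    results: Dict[str, int] = {}
    for path in dataset_paths:
        cmd = build_eval_command(path, config_path)
        if dry_run:
            print(" ".join(cmd))
            results[path] = 0
            continue
        completed = subprocess.run(cmd, check=False)
        results[path] = completed.returncode
    return results


if __name__ == "__main__":
    # Placeholder paths: replace with your own dataset files and config.
    evaluate_all(["data/hotpotqa.json", "data/naturalqa.json"], "config.toml", dry_run=True)
```

Passing the command as a list rather than a shell string avoids quoting problems with paths that contain spaces.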

Ollama Framework: Enabling Efficient Local Retrieval and Inference in XRAG

Ollama plays a central role in the XRAG-Ollama local retrieval and inference framework. As an open-source, easy-to-use framework for running large models locally, Ollama gives XRAG powerful local inference capabilities, allowing XRAG to make full use of its retrieval-augmented generation pipeline.
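For readers who want to wire a local model into a RAG step by hand, the sketch below sends retrieved passages plus a question to a locally running Ollama server through its REST endpoint /api/generate (default address http://localhost:11434). With "stream" set to false, Ollama returns one JSON object whose "response" field holds the generated text. The prompt template and the model name llama3 are assumptions, and this is not a description of how XRAG itself calls Ollama, which the article does not detail.

```python
import json
import urllib.request
from typing import List

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_prompt(question: str, passages: List[str]) -> str:
    """Hypothetical RAG prompt template: numbered context passages, then the question."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


def build_request(model: str, prompt: str) -> urllib.request.Request:
    # stream=False asks Ollama for a single JSON reply instead of a token stream.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, question: str, passages: List[str], timeout: float = 120.0) -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    req = build_request(model, build_prompt(question, passages))
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]
```

Usage, assuming the server is running and the model has been pulled with ollama pull llama3: generate("llama3", "What is XRAG?", ["XRAG is a benchmarking framework for RAG systems."]). Only the standard library is used, so nothing beyond Ollama itself needs installing.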

Why localize the deployment of XRAG?

- Reduced external risk: Local deployment reduces dependence on external services and lowers the risks associated with unstable third-party services or data leakage.

- Offline availability: A locally deployed RAG system does not depend on Internet connectivity and keeps working during network outages, ensuring continuity and stability of service.

- Data self-management: Local deployment gives users full control over how data is stored, managed, and processed (for example, embedding private data into a local vector database), so that data handling follows the organization's own security standards and business requirements.

- Data privacy and security: Running a RAG system locally avoids transmitting sensitive data over the network, so the data stays under local control at all times. This is particularly important for organizations that handle confidential information.

Why Ollama?

Ollama is a lightweight, extensible framework for building and running large language models (LLMs) on a local machine. It provides a simple API for creating, running, and managing models, along with a library of pre-built models that can easily be used in a variety of applications. It supports a wide range of models, such as DeepSeek, Llama 3.3, Phi 3, Mistral, and Gemma 2, and can take advantage of modern hardware acceleration to provide high-performance inference for XRAG. Ollama also supports model quantization, which significantly reduces GPU memory (VRAM) requirements. For example, 4-bit quantization compresses FP16 weights to 4-bit integers, shrinking the model weights, and the memory needed to hold them during inference, to roughly a quarter of their original size. This makes it possible to run large models on an ordinary home computer.
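The saving from quantization follows from simple arithmetic: weight memory is roughly parameter count times bits per weight, divided by 8 to get bytes. The sketch uses hypothetical 7B and 70B parameter counts and counts weights only; real memory use is higher because of the KV cache, activations, and the per-block scale factors that 4-bit formats store alongside the weights.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    for params in (7e9, 70e9):  # hypothetical model sizes
        fp16 = weight_memory_gb(params, 16)
        q4 = weight_memory_gb(params, 4)
        print(f"{params / 1e9:.0f}B params: FP16 {fp16:.1f} GB, 4-bit {q4:.1f} GB")
    # 7B params: FP16 14.0 GB, 4-bit 3.5 GB
    # 70B params: FP16 140.0 GB, 4-bit 35.0 GB
```

This is why a 7B model that would not fit in an 8 GB consumer GPU at FP16 becomes feasible once quantized to 4 bits.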

By combining with Ollama, XRAG can run large language models efficiently on local hardware without complex environment configuration or large amounts of computing resources, greatly reducing deployment and operating costs. At the same time, local deployment gives developers complete control over data processing, supporting end-to-end customization from raw data cleansing and vectorization (e.g., building a private knowledge base with ChromaDB) to the final application. Because this deployment architecture runs entirely on local infrastructure, it naturally supports offline operation, which both ensures service continuity and meets the strict reliability requirements of special environments such as classified networks.
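ChromaDB handles storage and nearest-neighbour search for such a private knowledge base. To show the underlying idea without extra dependencies, the sketch below is a toy in-memory stand-in: it embeds text as term-count vectors and ranks stored chunks by cosine similarity. Term counts are not semantic embeddings; a real setup would use a learned embedding model and ChromaDB's own client. The class LocalVectorStore and its add/query methods are hypothetical and only loosely echo ChromaDB's naming.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Dict[str, float]:
    """Toy embedding: sparse term-count vector (a stand-in for a real embedding model)."""
    return {term: float(n) for term, n in Counter(re.findall(r"\w+", text.lower())).items()}


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    dot = sum(v * b.get(k, 0.0) for k, v in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class LocalVectorStore:
    """Minimal in-memory store: add chunks with ids, query by cosine similarity."""

    def __init__(self) -> None:
        self._items: List[Tuple[str, str, Dict[str, float]]] = []

    def add(self, ids: List[str], documents: List[str]) -> None:
        for doc_id, doc in zip(ids, documents):
            self._items.append((doc_id, doc, embed(doc)))

    def query(self, text: str, n_results: int = 2) -> List[Tuple[str, float]]:
        q = embed(text)
        scored = [(doc_id, cosine(q, vec)) for doc_id, _, vec in self._items]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:n_results]


store = LocalVectorStore()
store.add(
    ["policy", "manual"],
    ["Employees must encrypt customer data at rest", "The printer manual covers toner replacement"],
)
print(store.query("how to encrypt customer data", n_results=1))
```

Everything here stays in local memory, which mirrors the privacy argument above: no text leaves the machine at any stage.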

© Copyright notice

Article copyright: AI Sharing Circle. Please do not reproduce without permission.
