Xorbits Inference: a distributed inference framework for one-click deployment of multiple AI models

General Introduction

Xorbits Inference (Xinference) is a distributed inference framework that serves a wide range of AI models, including Large Language Models (LLMs), speech recognition models and multimodal models. With Xinference, users can deploy their own models, or any of the built-in cutting-edge open-source models, with a single command, whether they run in the cloud, on a local server or on a personal computer.

Feature List

- Supports inference with many kinds of AI models, including large language models, speech recognition models and multimodal models

- One-click deployment and serving of models, simplifying the setup of both experimental and production environments

- Supports running in the cloud, on local servers and on PCs

- Ships with a variety of cutting-edge open-source models that users can launch directly

- Provides extensive documentation and community support

Usage Guide

Installation process

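- Environment preparation: Make sure Python 3 is installed; recent Xinference releases require Python 3.9 or later, so check the installation guide for the version you plan to use.

- Install Xorbits Inference (the PyPI package is named `xinference`; the `[all]` extra installs the dependencies for all supported inference backends):

```shell
pip install "xinference[all]"
```

- Configure the environment: Set environment variables and install additional dependencies as needed.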
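Before installing, it can help to check the interpreter version and whether the package is already present. The sketch below assumes a minimum of Python 3.9 (our understanding of what recent Xinference releases need; adjust `MIN_PYTHON` for your release), and `check_environment` is a hypothetical helper, not part of Xinference.

```python
import importlib.util
import sys

# Assumed minimum Python version for recent Xinference releases (check the docs for yours)
MIN_PYTHON = (3, 9)


def check_environment(min_python=MIN_PYTHON):
    """Hypothetical helper: report whether the interpreter is new enough
    and whether the `xinference` package can be found."""
    python_ok = sys.version_info[:2] >= min_python
    # find_spec returns None when the top-level package is not installed
    installed = importlib.util.find_spec("xinference") is not None
    return {"python_ok": python_ok, "xinference_installed": installed}


if __name__ == "__main__":
    print(check_environment())
```

`find_spec` locates the package without importing it, so the check stays fast even when Xinference and its heavy dependencies are installed.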

Usage

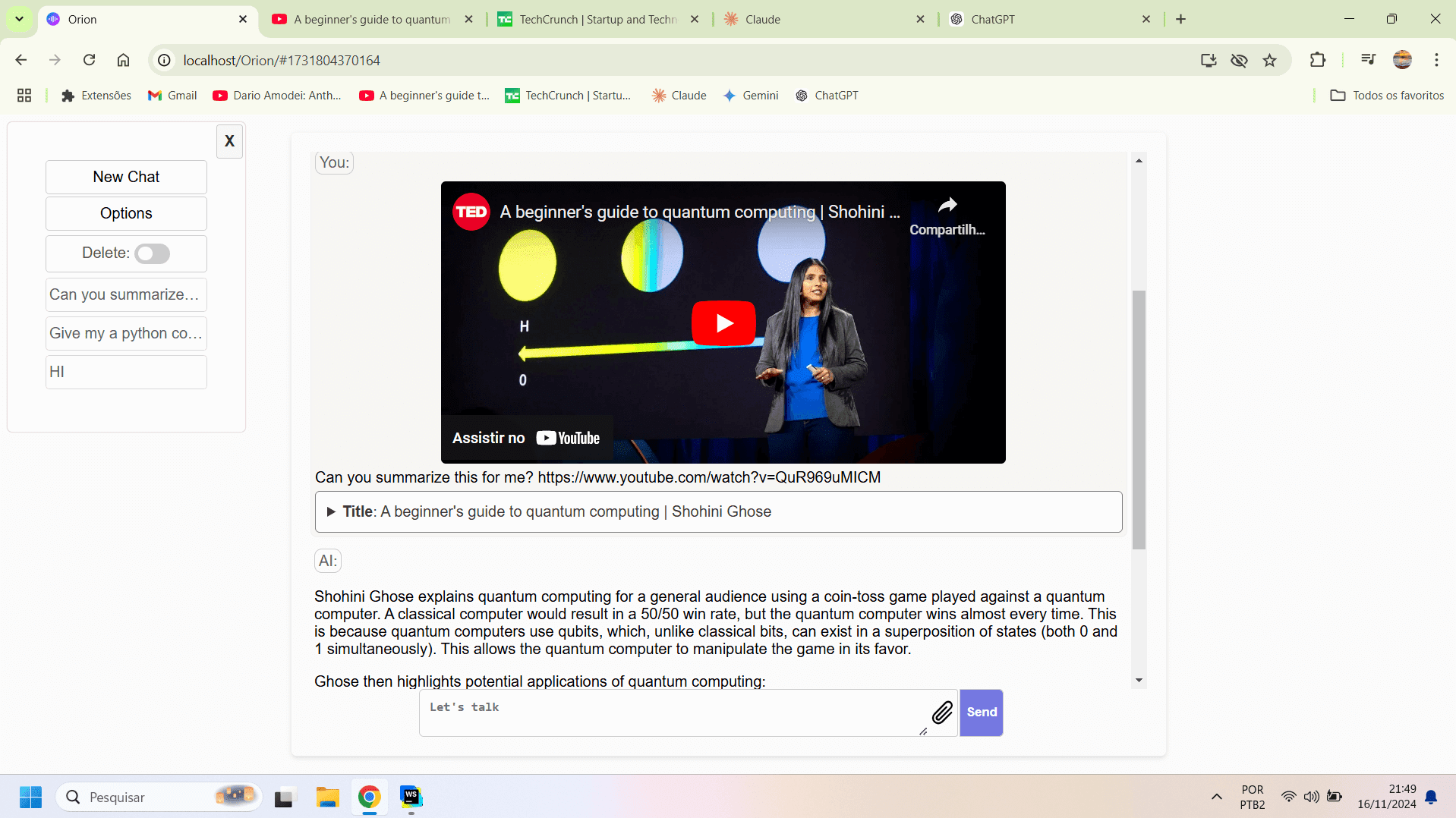

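- Start the service: launch a local Xinference instance (9997 is the default port):

```shell
xinference-local --host 0.0.0.0 --port 9997
```

- Load a model and run inference with the Python client. `qwen2.5-instruct` is one of the built-in open-source models; the available model and engine names, and the exact `chat` signature, vary between Xinference versions:

```python
from xinference.client import Client

client = Client("http://localhost:9997")
# Launch a built-in model; the returned UID identifies the running instance
model_uid = client.launch_model(model_name="qwen2.5-instruct", model_engine="transformers")
model = client.get_model(model_uid)

# Send a chat message and print the reply (OpenAI-style response dict)
result = model.chat(messages=[{"role": "user", "content": "Hello, world!"}])
print(result["choices"][0]["message"]["content"])
```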
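Besides the Python client, Xinference exposes an OpenAI-compatible REST API under `/v1`, so any HTTP client can call a launched model. The sketch below builds a chat-completion request with only the standard library. It assumes the service runs locally on the default port 9997; `build_chat_request` and `send_chat` are hypothetical helper names, and `model_uid` must be the UID returned when the model was launched.

```python
import json
import urllib.request

# Assumption: Xinference is running locally on its default port
BASE_URL = "http://localhost:9997"


def build_chat_request(model_uid, prompt, base_url=BASE_URL, max_tokens=256):
    """Build (but do not send) a POST request to the OpenAI-compatible
    /v1/chat/completions endpoint. Helper name is hypothetical."""
    payload = {
        "model": model_uid,  # the UID returned when the model was launched
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat(request, timeout=60):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # OpenAI-style response: the reply lives in choices[0].message.content
    return body["choices"][0]["message"]["content"]
```

For example, `send_chat(build_chat_request(model_uid, "Hello, world!"))` should return the reply text once the service is running and the model has been launched. Keeping request construction separate from sending makes the payload easy to inspect, log, or reuse with another HTTP library.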

Features in Detail

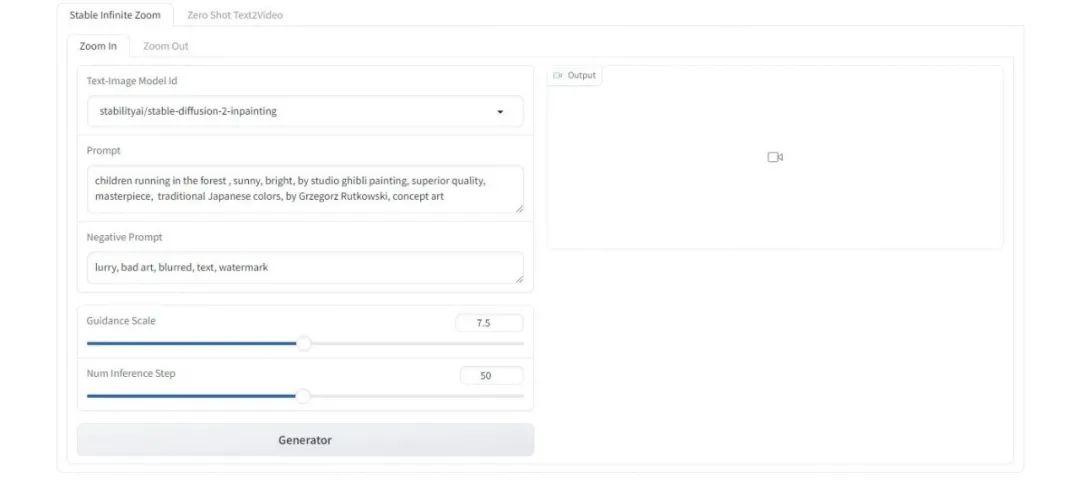

- Model Selection: Users can choose from a wide range of built-in cutting-edge open-source models, such as Llama, Qwen and Whisper, or register their own custom models for inference.

- Parameter Configuration: When launching a model, you can set parameters such as quantization, the number of GPUs and the number of replicas to suit your needs.

- Monitoring and Management: Through the provided management interface, users can monitor each model's running status and performance metrics in real time, which makes optimization and tuning easier.

- Community Support: Join the Xorbits Inference Slack community to share experiences and questions with other users and get timely help.
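Launch parameters like those under Parameter Configuration can also be passed on the command line with `xinference launch`. The sketch below assembles such a command from a Python dict. It assumes the flag names `--model-name`, `--model-engine`, `--size-in-billions`, `--model-format`, `--quantization`, `--n-gpu` and `--replica` (run `xinference launch --help` to confirm them for your version), and `build_launch_command` is a hypothetical helper.

```python
import shlex

# Assumed mapping from config keys to `xinference launch` flags
# (verify with `xinference launch --help` for your Xinference version)
FLAG_NAMES = {
    "model_name": "--model-name",
    "model_engine": "--model-engine",
    "size_in_billions": "--size-in-billions",
    "model_format": "--model-format",
    "quantization": "--quantization",
    "n_gpu": "--n-gpu",
    "replica": "--replica",
}


def build_launch_command(config):
    """Hypothetical helper: turn a parameter dict into an argv list for
    `xinference launch`. Keys set to None are skipped."""
    if "model_name" not in config:
        raise ValueError("model_name is required")
    argv = ["xinference", "launch"]
    for key, value in config.items():
        if value is None:
            continue
        if key not in FLAG_NAMES:
            raise ValueError(f"unknown launch parameter: {key}")
        argv += [FLAG_NAMES[key], str(value)]
    return argv


if __name__ == "__main__":
    cmd = build_launch_command({
        "model_name": "qwen2.5-instruct",
        "model_engine": "transformers",
        "n_gpu": 1,
        "replica": 1,
    })
    # Print a shell-ready command line; pass `cmd` to subprocess.run to execute it
    print(shlex.join(cmd))
```

Returning an argv list rather than a single string avoids shell-quoting problems when the command is later run with `subprocess.run`, and rejecting unknown keys catches typos before anything is launched.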

© Copyright notice

Article copyright © AI Sharing Circle. All rights reserved; please do not reproduce without permission.
