Small Model, Big Power: QwQ-32B Takes On the Full DeepSeek-R1 with 1/20 the Parameters

AI has recently seen impressive advances, especially in improving the reasoning capabilities of large language models (LLMs). Reinforcement learning (RL) is emerging as a key technique for breaking through the performance bottlenecks of conventionally trained models, and many studies have confirmed that RL can significantly enhance a model's reasoning ability. For example, DeepSeek-R1 achieved deep thinking and complex reasoning by combining cold-start data with multi-stage training, reaching state-of-the-art performance at the time of its release.

Against this backdrop, Alibaba Cloud released QwQ-32B, which has once again drawn the industry's attention. With 32 billion parameters, the model performs comparably to DeepSeek-R1, which has 671 billion parameters in total (37 billion activated). QwQ-32B's strong results demonstrate how effectively reinforcement learning can raise the intelligence of a powerful base model pretrained on extensive world knowledge.

Moreover, Alibaba Cloud has integrated agent-related capabilities into the reasoning model, enabling it not only to think critically but also to use tools and adapt its reasoning based on environmental feedback. These advances demonstrate the transformative potential of RL and pave the way toward Artificial General Intelligence (AGI).

QwQ-32B is now available on Hugging Face and ModelScope under the Apache 2.0 open-source license, and users can also try it through Qwen Chat.

Introduction

QwQ is the reasoning model of the Qwen series. Compared with conventional instruction-tuned models, QwQ models are capable of thinking and reasoning, and achieve significantly better performance on downstream tasks, especially difficult problems. QwQ-32B is the medium-sized reasoning model in the series, and its performance is competitive with state-of-the-art reasoning models such as DeepSeek-R1 and o1-mini.

Model Features

- Type: Causal language model

- Training stage: Pretraining and post-training (supervised fine-tuning and reinforcement learning)

- Architecture: Transformer with RoPE positional encoding, SwiGLU activation, RMSNorm normalization, and attention QKV bias

- Number of parameters: 32.5B

- Number of non-embedding parameters: 31.0B

- Number of layers: 64

- Number of attention heads (GQA): 40 for query, 8 for key/value

- Context length: Full 131,072 tokens
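To see what the grouped-query attention (GQA) layout above means in practice, the sketch below estimates the size of the key/value cache. It assumes a head dimension of 128 (a hidden size of 5120 divided by 40 query heads) and 2-byte (bf16/fp16) cache entries; neither value is stated in this article, so treat the results as back-of-the-envelope estimates rather than measured figures.

```python
# Back-of-the-envelope KV-cache estimate for a GQA model.
# ASSUMPTIONS (not stated in the article): head_dim = 128 and a 2-byte cache.

NUM_LAYERS = 64
NUM_QUERY_HEADS = 40
NUM_KV_HEADS = 8
HEAD_DIM = 128  # assumption
BYTES_PER_VALUE = 2  # assumption: bf16/fp16


def kv_cache_bytes_per_token(num_kv_heads: int) -> int:
    """Bytes needed to cache keys and values for one token across all layers."""
    # Factor 2: one key vector and one value vector per KV head per layer.
    return 2 * NUM_LAYERS * num_kv_heads * HEAD_DIM * BYTES_PER_VALUE


gqa_per_token = kv_cache_bytes_per_token(NUM_KV_HEADS)
# For comparison: every query head having its own K/V (plain multi-head attention).
mha_per_token = kv_cache_bytes_per_token(NUM_QUERY_HEADS)

context = 131_072
print(f"Query heads sharing each KV head: {NUM_QUERY_HEADS // NUM_KV_HEADS}")
print(f"GQA cache per token: {gqa_per_token / 1024:.0f} KiB")
print(f"GQA cache at {context:,} tokens: {gqa_per_token * context / 2**30:.0f} GiB")
print(f"Multi-head attention cache per token: {mha_per_token / 1024:.0f} KiB")
```

Under these assumptions, each KV head serves 5 query heads, and the cache needs about 256 KiB per token (roughly 32 GiB at the full 131,072-token context), one fifth of what a layout with 40 key/value heads would require.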

Note: For the best experience, please read the usage guidelines before deploying QwQ models.

You can try the demo, or access QwQ models through Qwen Chat; remember to turn on Thinking (QwQ).

For more details, please refer to the GitHub repository and the official documentation.

Performance

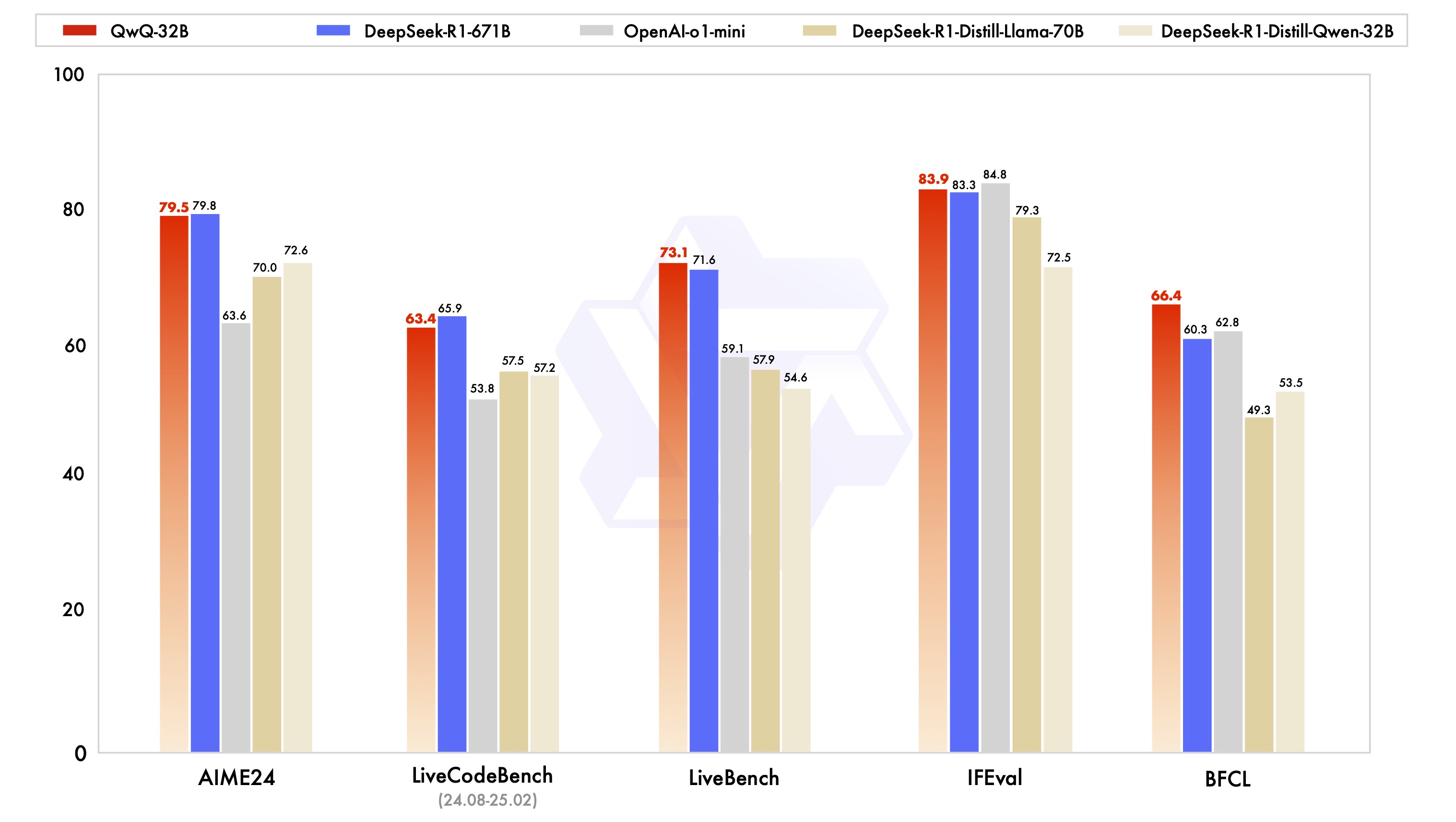

QwQ-32B was evaluated on a series of benchmarks designed to assess its mathematical reasoning, coding, and general problem-solving capabilities. The chart above compares QwQ-32B with other leading models, including DeepSeek-R1-Distill-Qwen-32B, DeepSeek-R1-Distill-Llama-70B, o1-mini, and the original DeepSeek-R1.

The results show QwQ-32B matching or even surpassing top models on several key benchmarks. Notably, it remains competitive with DeepSeek-R1 despite having far fewer parameters (32B versus 671B in total), which further underscores the potential of reinforcement learning for improving model performance.

Reinforcement Learning

Much of QwQ-32B's strong performance comes from the reinforcement learning (RL) behind it. Put simply, RL trains a model to make optimal decisions in a given environment through rewards and penalties. Unlike conventional supervised learning, RL does not depend on large amounts of labeled data; instead, the model interacts with its environment, learns by trial and error, and eventually masters the strategies needed to complete the task.
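The trial-and-error loop described above can be illustrated with a deliberately tiny example: a softmax policy choosing among three actions learns, via the classic REINFORCE policy-gradient rule, to prefer the highest-reward action without ever being shown a correct label. The bandit, its reward values, and the learning rates are hypothetical; this is a textbook toy, not the algorithm used to train QwQ-32B.

```python
import math
import random

# Toy illustration of learning from reward alone, with no labeled answers.
# HYPOTHETICAL setup: a 3-armed bandit with fixed rewards and the classic
# REINFORCE policy-gradient update. This is NOT QwQ-32B's training code.
REWARDS = [0.2, 0.5, 0.8]  # reward for each action, unknown to the learner
LEARNING_RATE = 0.1
BASELINE_RATE = 0.01


def softmax(logits):
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train(steps=3000, seed=0):
    rng = random.Random(seed)
    logits = [0.0] * len(REWARDS)  # policy parameters; start with a uniform policy
    baseline = 0.0  # running average reward, used to reduce gradient variance
    for _ in range(steps):
        probs = softmax(logits)
        # Trial: sample an action from the current policy.
        action = rng.choices(range(len(logits)), weights=probs)[0]
        # Feedback: the environment returns a reward for that action.
        reward = REWARDS[action]
        advantage = reward - baseline
        # REINFORCE: d log pi(action) / d logit_k = 1[k == action] - probs[k]
        for k in range(len(logits)):
            grad = (1.0 if k == action else 0.0) - probs[k]
            logits[k] += LEARNING_RATE * advantage * grad
        baseline += BASELINE_RATE * (reward - baseline)
    return softmax(logits)


final_probs = train()
print([round(p, 3) for p in final_probs])
```

After training, almost all probability mass sits on the third action, even though the learner only ever saw the reward of the action it happened to try.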

To train QwQ-32B, Alibaba Cloud's research team started from a cold-start checkpoint and applied an RL scaling approach driven by outcome-based rewards. The initial stage scaled RL specifically for math and coding tasks. Rather than relying on a traditional reward model, the team used an accuracy verifier for math problems to check the correctness of final answers, and a code execution server to assess whether the generated code passed predefined test cases.
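The two verifiers described above can be sketched as simple outcome-reward functions: the reward depends only on whether the final result is correct, not on how the model reached it. This is a minimal illustration under stated assumptions. The function names, the exact-match rule after stripping whitespace, and the use of in-process `exec()` are hypothetical simplifications, not Alibaba Cloud's implementation; a production verifier would check mathematical equivalence and sandbox code execution.

```python
def extract_boxed(text):
    """Return the contents of the last \\boxed{...} in text (nested braces allowed), or None."""
    start = text.rfind("\\boxed{")
    if start == -1:
        return None
    begin = start + len("\\boxed{")
    depth = 1
    for j in range(begin, len(text)):
        if text[j] == "{":
            depth += 1
        elif text[j] == "}":
            depth -= 1
            if depth == 0:
                return text[begin:j]
    return None  # unbalanced braces: treat as no answer


def math_reward(response, reference):
    """Outcome reward for math: 1.0 if the final boxed answer matches the reference."""
    answer = extract_boxed(response)
    if answer is None:
        return 0.0
    # HYPOTHETICAL normalization: compare strings with all whitespace removed.
    # A real verifier would also accept mathematically equivalent forms.
    return 1.0 if "".join(answer.split()) == "".join(reference.split()) else 0.0


def code_reward(source, func_name, tests):
    """Outcome reward for code: 1.0 only if the generated function passes every test.

    WARNING: exec() runs untrusted code in this process. A real code execution
    server would sandbox it and enforce time and memory limits.
    """
    namespace = {}
    try:
        exec(source, namespace)
        func = namespace[func_name]
        for args, expected in tests:
            if func(*args) != expected:
                return 0.0
    except Exception:
        return 0.0  # crashes, syntax errors and missing functions earn no reward
    return 1.0


print(math_reward(r"So the answer is \boxed{\frac{1}{2}}.", r"\frac{1}{2}"))
print(code_reward("def add(a, b):\n    return a + b\n", "add", [((1, 2), 3), ((0, 0), 0)]))
```

Because the reward is binary and computed by rules, no learned reward model is needed for these domains, which is the design choice the paragraph above describes.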

As training progressed, performance in both math and coding improved steadily. After this first stage, the team added a second RL stage for general capabilities, using rewards from a general reward model together with several rule-based verifiers. The experiments showed that even a small number of RL steps in this stage improved general capabilities such as instruction following, alignment with human preferences, and agent performance, without significantly degrading math and coding performance.

For a discussion of why Qwen2.5-3B shows strong reasoning abilities, see the related article "How Do Large Models Get 'Smarter'? Stanford Reveals the Key to Self-Improvement: Four Cognitive Behaviors".

Usage Guidelines

For optimal performance, the following settings are recommended:

- Enforce thoughtful output: Make sure the model starts its output with "<think>\n" to avoid generating empty thinking content, which can degrade output quality. If you use `apply_chat_template` with `add_generation_prompt=True`, this is handled automatically; note, however, that the response may then lack the opening `<think>` tag, which is normal.

- Sampling parameters:
  - Use Temperature=0.6 and TopP=0.95 instead of greedy decoding to avoid endless repetitions.
  - Use TopK between 20 and 40 to filter out rare tokens while maintaining the diversity of the generated output.

- Standardize output format: When benchmarking, use prompts to standardize the model's outputs.
  - Math problems: Include "Please reason step by step, and put your final answer within \boxed{}." in the prompt.
  - Multiple-choice questions: Add the following JSON structure to the prompt to standardize responses: "Please show your choice in the `answer` field with only the choice letter, e.g., `"answer": "C"`."

- Handle long inputs: For inputs exceeding 32,768 tokens, enable YaRN to improve the model's ability to capture long-sequence information effectively.
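When benchmarking with the multiple-choice prompt above, the reply still has to be scored. A minimal sketch, assuming the reply contains a fragment such as `"answer": "C"` and that choices are single capital letters; the regular expression and the function name are illustrative, not part of any official evaluation harness.

```python
import re

# Matches a JSON-style fragment such as "answer": "C", tolerating extra spaces.
# ASSUMPTION: choices are single capital letters.
ANSWER_PATTERN = re.compile(r'"answer"\s*:\s*"([A-Z])"')


def extract_choice(response):
    """Return the letter from the last "answer" field in the response, or None."""
    matches = ANSWER_PATTERN.findall(response)
    # Take the last match so drafts mentioned earlier in the reasoning are ignored.
    return matches[-1] if matches else None


print(extract_choice('Comparing the options, B is wrong. {"answer": "C"}'))
```

Taking the last match is a deliberate choice: a reasoning model may mention candidate answers while thinking before committing to its final one.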

For supported frameworks, the following can be added to the config.json file to enable YaRN:

```json
{
  ...,
  "rope_scaling": {
    "factor": 4.0,
    "original_max_position_embeddings": 32768,
    "type": "yarn"
  }
}
```
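The same change can also be scripted instead of editing the file by hand. A minimal sketch using only the standard library; the `config.json` path and the helper name `enable_yarn` are hypothetical (point the path at your local copy of the model files). The arithmetic at the end shows why a factor of 4.0 matches the advertised context: 4 × 32,768 = 131,072 tokens.

```python
import json
from pathlib import Path

# ASSUMPTION: path to config.json inside a locally downloaded model directory.
CONFIG_PATH = Path("config.json")

YARN_SCALING = {
    "factor": 4.0,
    "original_max_position_embeddings": 32768,
    "type": "yarn",
}


def enable_yarn(config_path: Path) -> dict:
    """Hypothetical helper: add the rope_scaling block to a config.json file."""
    config = json.loads(config_path.read_text(encoding="utf-8"))
    config["rope_scaling"] = dict(YARN_SCALING)
    config_path.write_text(json.dumps(config, indent=2), encoding="utf-8")
    return config


# Scaled context = factor x original position window
scaled = int(YARN_SCALING["factor"] * YARN_SCALING["original_max_position_embeddings"])
print(scaled)  # 131072
```

Call `enable_yarn(CONFIG_PATH)` once on the model directory, and only when long inputs are actually expected, given the caveat about static YaRN that follows.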

vLLM is recommended for deployment; if you are unfamiliar with vLLM, please refer to its official documentation. Currently, vLLM only supports static YaRN, which means the scaling factor stays constant regardless of input length, potentially hurting performance on shorter texts. It is therefore recommended to add the `rope_scaling` configuration only when you need to process long contexts.
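To make the recommended sampling settings in these guidelines concrete, here is a minimal pure-Python sketch of how temperature, top-k, and top-p (nucleus) filtering combine when the next token is picked. The function names and toy logits are hypothetical, and inference engines such as vLLM or Transformers implement these steps in their own vectorized code; the sketch only shows what each parameter does and how sampling differs from greedy decoding.

```python
import math
import random

# Teaching sketch of temperature + top-k + top-p (nucleus) sampling on a toy
# logit vector. Defaults mirror the recommended settings (Temperature=0.6,
# TopP=0.95, TopK in the 20-40 range). Function names are hypothetical.


def filtered_distribution(logits, temperature=0.6, top_k=40, top_p=0.95):
    """Return {token_id: probability} after temperature, top-k and top-p filtering."""
    # 1) Temperature: dividing logits by a value < 1 sharpens the distribution.
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # 2) Top-k: keep only the k most likely token ids, most likely first.
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]

    # 3) Top-p: keep the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for i in ranked:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break

    # 4) Renormalize over the surviving tokens.
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}


def sample_token(logits, rng, **kwargs):
    """Draw one token id from the filtered distribution (sampling, not greedy argmax)."""
    dist = filtered_distribution(logits, **kwargs)
    tokens = list(dist)
    return rng.choices(tokens, weights=[dist[t] for t in tokens])[0]


rng = random.Random(0)
toy_logits = [2.0, 1.0, 0.5, -3.0]
print(filtered_distribution(toy_logits, top_k=2))
print(sample_token(toy_logits, rng, top_k=2))
```

With these toy logits, `top_k=2` leaves only tokens 0 and 1; with the default `top_k=40`, the 0.95 top-p cutoff keeps tokens 0, 1 and 2 and drops the very unlikely token 3.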

How to Use QwQ-32B

The following brief examples show how to use the QwQ-32B model via Hugging Face Transformers and the Alibaba Cloud DashScope API.

Via Hugging Face Transformers

```python
from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "Qwen/QwQ-32B"

# Load the model (dtype and device placement chosen automatically) and its tokenizer
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    torch_dtype="auto",
    device_map="auto"
)
tokenizer = AutoTokenizer.from_pretrained(model_name)

prompt = "How many r's are in the word \"strawberry\""
messages = [
    {"role": "user", "content": prompt}
]
# Build the prompt with the chat template; add_generation_prompt=True appends the start of the assistant turn
text = tokenizer.apply_chat_template(
    messages,
    tokenize=False,
    add_generation_prompt=True
)

model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

generated_ids = model.generate(
    **model_inputs,
    max_new_tokens=32768
)
# Drop the prompt tokens so that only the newly generated tokens are decoded
generated_ids = [
    output_ids[len(input_ids):] for input_ids, output_ids in zip(model_inputs.input_ids, generated_ids)
]

response = tokenizer.batch_decode(generated_ids, skip_special_tokens=True)[0]
print(response)
```
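As noted in the usage guidelines, with `add_generation_prompt=True` the decoded `response` may begin without the opening `<think>` tag. A small post-processing helper can separate the thinking trace from the final answer. This sketch assumes the decoded text still contains the closing `</think>` tag; the helper name and its fallback for truncated outputs are hypothetical choices.

```python
from typing import Tuple


def split_reasoning(response: str) -> Tuple[str, str]:
    """Split decoded QwQ-style output into (reasoning, final_answer).

    ASSUMPTION: the decoded text still contains the closing </think> tag. The
    opening <think> tag is often absent because the chat template already put
    it in the prompt, so it is stripped only if present.
    """
    head, sep, tail = response.partition("</think>")
    if not sep:
        # HYPOTHETICAL fallback: no closing tag (e.g. output was truncated), so
        # treat everything as unfinished reasoning with no final answer.
        return response.strip(), ""
    reasoning = head.replace("<think>", "", 1)
    return reasoning.strip(), tail.strip()


reasoning, answer = split_reasoning("Let me count the r's...\n</think>\n\nThere are 3 r's in \"strawberry\".")
print(answer)
```

In the Transformers example, call `reasoning, answer = split_reasoning(response)` right after decoding.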

Via the Alibaba Cloud DashScope API

```python
import os

from openai import OpenAI

# Initialize the OpenAI-compatible client for DashScope
client = OpenAI(
    # If the environment variable is not configured, replace with your API key: api_key="sk-xxx"
    # How to get an API key: https://help.aliyun.com/zh/model-studio/developer-reference/get-api-key
    api_key=os.getenv("DASHSCOPE_API_KEY"),
    base_url="https://dashscope.aliyuncs.com/compatible-mode/v1",
)

reasoning_content = ""  # accumulated thinking process
content = ""  # accumulated final answer
is_answering = False  # becomes True once the final answer starts streaming

completion = client.chat.completions.create(
    model="qwq-32b",
    messages=[
        {"role": "user", "content": "Which is larger, 9.9 or 9.11?"}
    ],
    stream=True,
    # Uncomment the following lines to return token usage in the last chunk
    # stream_options={
    #     "include_usage": True
    # },
)

print("\n" + "=" * 20 + "reasoning content" + "=" * 20 + "\n")

for chunk in completion:
    # If chunk.choices is empty, this is the final chunk carrying token usage
    if not chunk.choices:
        print("\nUsage:")
        print(chunk.usage)
    else:
        delta = chunk.choices[0].delta
        # Print reasoning content
        if hasattr(delta, "reasoning_content") and delta.reasoning_content is not None:
            print(delta.reasoning_content, end="", flush=True)
            reasoning_content += delta.reasoning_content
        elif delta.content:
            # Print a separator once, when the final answer begins
            if not is_answering:
                print("\n" + "=" * 20 + "content" + "=" * 20 + "\n")
                is_answering = True
            # Print content
            print(delta.content, end="", flush=True)
            content += delta.content
```

Future Work

The release of QwQ-32B marks an initial but critical step in scaling reinforcement learning to enhance the reasoning capabilities of Qwen models. Through this work, Alibaba Cloud has not only seen the great potential of scaled RL but also recognized the untapped potential that remains within pretrained language models.

Looking ahead to the next generation of Qwen models, Alibaba Cloud is confident that combining stronger foundation models with RL powered by scaled computational resources will bring it closer to achieving Artificial General Intelligence (AGI). Alibaba Cloud is also actively exploring deeper integration of agents with RL to enable long-horizon reasoning, aiming to unlock greater intelligence through inference-time scaling.

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
