Large model fine-tuning knowledge points that even a novice can understand

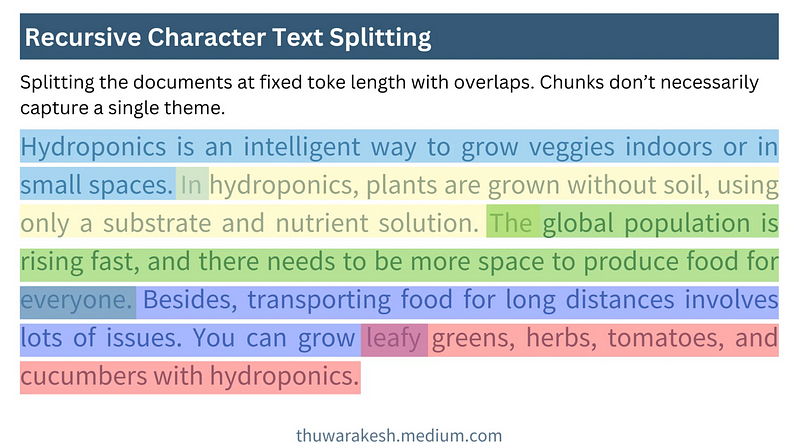

Large model fine-tuning of the entire process

It is recommended to strictly follow the above process during the fine-tuning process and avoid skipping steps, which may lead to ineffective labor. For example, if the dataset is not adequately constructed and the poor effect of the fine-tuned model is eventually found to be a problem with the quality of the dataset, then the preliminary efforts will be wasted and half the effort will be wasted.

Data set collection and organization

Based on the availability of datasets, datasets can be categorized into two types: publicly available datasets and datasets that are difficult to access.

How do I get access to publicly available datasets?

The easiest way to access publicly available datasets is to search and download them through relevant open source platforms. For example, platforms such as GitHub, Hugging Face, Kaggle, Magic Hitch, etc. provide a large number of open datasets. In addition, you can also try to get data from some websites through crawler technology, such as posting, Zhihu, industry vertical websites, etc. Using crawlers to grab data usually requires some technical support and following relevant laws and regulations.

What if the required data is not available or difficult to obtain across the network?

When existing publicly available datasets do not meet the demand, another option is to build the dataset yourself. However, building hundreds to thousands of datasets manually is often both tedious and time-consuming. So, how can you build datasets efficiently? Two common ideas for building datasets quickly are described below:

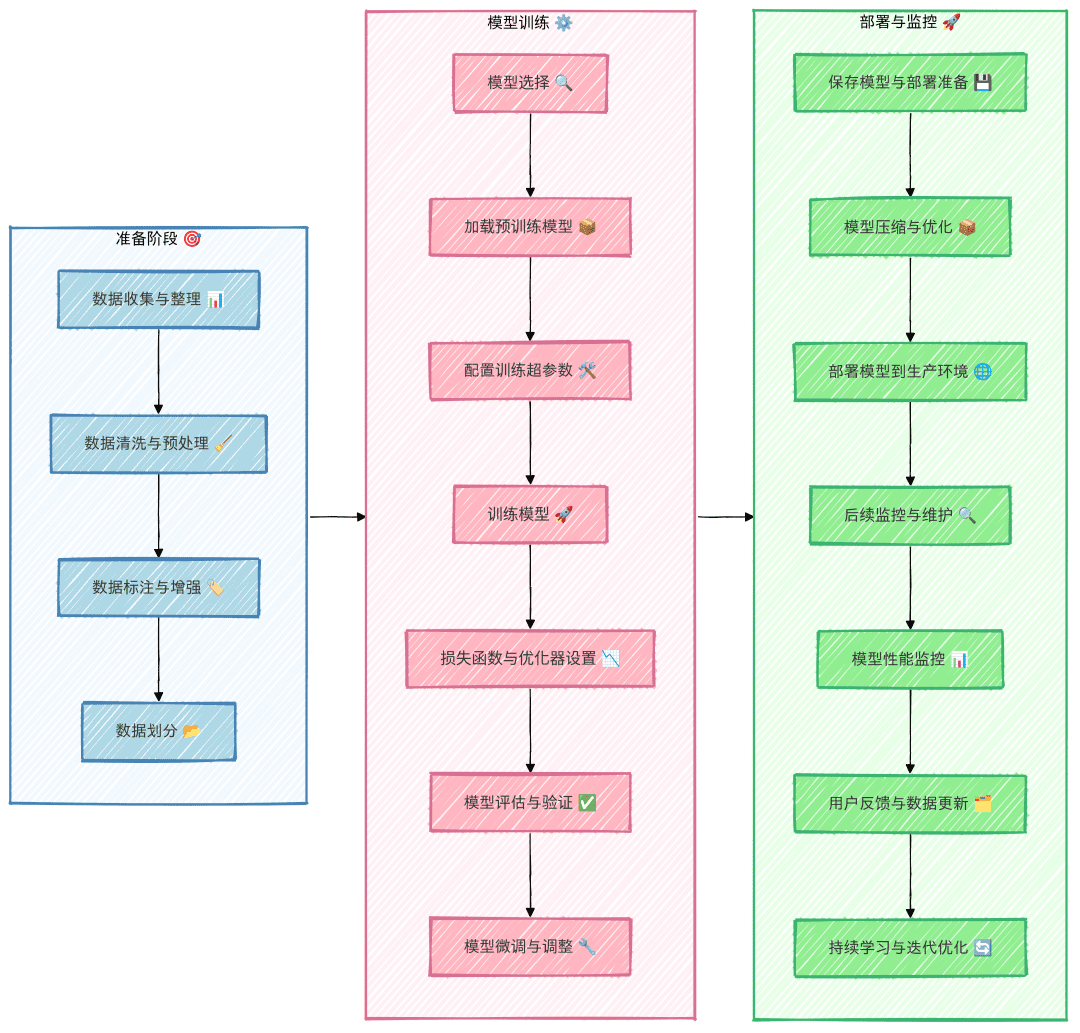

1. Leveraging the "data enhancement" capabilities of the Big Model platform

Currently many big modeling platforms provide the function of data enhancement, which can effectively help us expand the dataset. For example, mass spectrometry open platform, Xunfei open platform, volcano open platform, etc., can be enhanced by these platforms to quickly generate more samples using the original data. The specific operation is: first, manually prepare a small amount of data (e.g., 50 pieces) and upload it to these platforms. The platforms will expand the data through data enhancement technology to quickly realize the expansion of the data set.

2. Generating data using large models

Another efficient way to generate data is with the help of a big model. First, a small amount (e.g., a few dozen) of data is prepared and fed as examples to the big model. The big model can generate similar data content based on these examples. To ensure the quality of the generated data, it is recommended that you do not generate too much data at once in the first round of generation; it is recommended that you generate 20 pieces of data and review them carefully to identify common mistakes that the Big Model may make. These examples of errors are then fed back to the big model to improve the generation.

示例Prompt: 参照以下示例,输出20个类似的“近期运气”问题。请注意,语言要生活化、不要过于夸张,避免使用诸如“在活动中”、“在比赛中”等定语或状语,语句尽量简洁,避免多余修饰。示例: 我这个季度顺利吗? 我这个季度会发财吗? 最近有哪些事情在等着我? 最近会发生什么事情? 近期我能否被幸运之神垂青?

Data cleaning and fine-tuning dataset construction

Raw datasets are usually not compatible with model training, so they need to be cleaned and processed to make them compatible with the format in which the model was learned. In most cases, fine-tuned datasets are usually constructed using the AIpaca format.

Introduction to the AIpaca format

The AIpaca format has a simple structure that makes it particularly suitable for tasks such as text generation, translation, summarization, and especially single-round task-oriented fine-tuning of instructions. The following is a detailed explanation of its main components:

- instruction: A task instruction, similar to a user input (required field) that explicitly tells the model what to do.

- input: The input required by the task. Usually can be an empty string when the task is open-ended or does not require explicit input.

- output: The desired output that the model needs to generate given the instructions and inputs, i.e. the reference answer (required).

- system: can be regarded as the model's pre-prompt, which is used to set the model's behavior, usually some initial prompts (optional) for the model.

- history: Historical dialog information that helps the model build contextual relationships. In a multi-round task, the model generates answers (optional) based on the history dialog.

For example, AIpaca data is formatted as follows:

[

{

"instruction": "用‘梦想’、‘坚持’和‘成功’这三个词组成一个句子。",

"input": "",

"output": "只有坚持追逐梦想,才能最终取得成功。"

},

{

"instruction": "对《三国演义》做一个简短的介绍。",

"input": "",

"output": "《三国演义》是中国古典四大名著之一,描写了从东汉末年到西晋初年近百年的历史,讲述了东汉末年的群雄割据混战及魏、蜀、吴三国之间的政治和军事斗争,塑造了一批英雄人物。"

}

]

Data set construction methodology

In order to make a fine-tuning dataset, we usually need to convert the data into a JSON file that conforms to the AIpaca format. Below are two common methods of making datasets:

- Manual construction of datasets: After organizing the required data and constructing it according to the AIpaca format, you can manually construct a dataset by writing Python code. This method is suitable for simple scenarios and small datasets.

- Automated construction using large models: automate the generation of datasets by invoking the large model interface. This approach is suitable for large-scale datasets, especially when the instructions and output patterns of the task are relatively fixed.

Full dataset format

The complete AIpaca format is shown below and contains the task's commands, inputs, outputs, system prompt words, and historical dialog information:

[

{

"instruction": "人类指令(必填)",

"input": "人类输入(选填)",

"output": "模型回答(必填)",

"system": "系统提示词(选填)",

"history": [

["第一轮指令(选填)", "第一轮回答(选填)"],

["第二轮指令(选填)", "第二轮回答(选填)"]

]

}

]

The format helps the model learn the mapping relationship from instructions to outputs, similar to giving the model practice problems where instruction + input = question and output = answer.

Base Model Selection

- Model Type Selection: Select the base model, such as GPT, LLaMA, or BERT, based on the task requirements.

- Size and Parameters: decide on the model size (e.g., 7B, 13B, or 65B parameter sizes), taking into account computational resources, training time, and inference speed.

- Open Source vs Commercial Models: Analyze the need to choose between open source models (e.g., LLaMA, Falcon) or commercial closed source models (e.g., OpenAI GPT family).

- Using the test data, do a comparison test to find the best fit among the multiple models chosen.

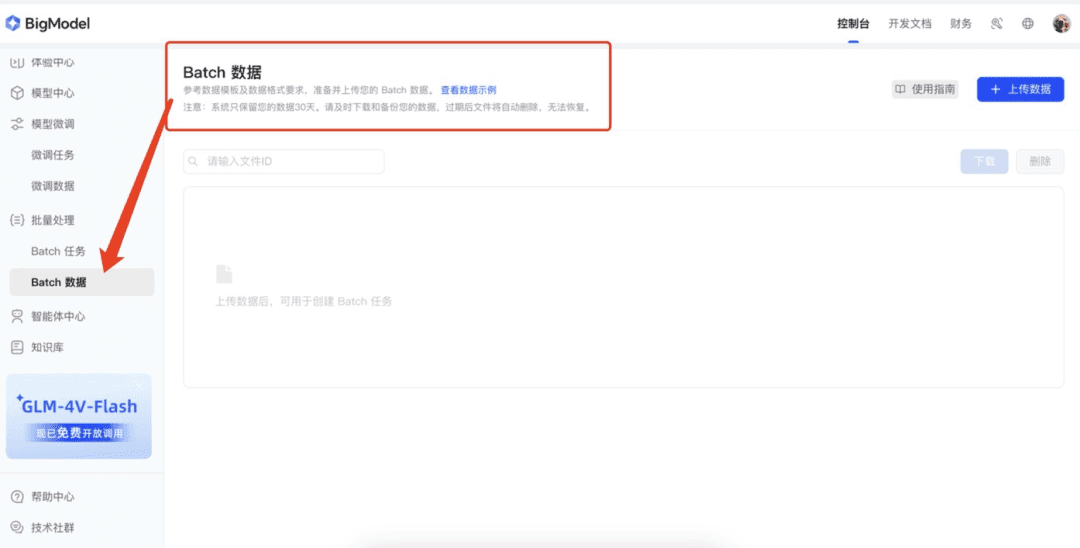

Description of model parameters

The Five Questions of the Soul

I. What is fine-tuning?

Fine-tuning is the process of further training an already pre-trained model with a new dataset. These pre-trained models usually have already learned rich features and knowledge on large datasets, and have certain generalized capabilities. The core goal of fine-tuning is to migrate this generalized knowledge to a new, more specific task or domain so that the model can better solve a specific problem.

II. Why fine-tuning?

1. Savings in computing resources

Training a large model from scratch requires a lot of computational resources and time and is very costly. Fine-tuning utilizes pre-trained models as a starting point and requires less training on new datasets to achieve good results, greatly reducing computational cost and time.

2. Enhancing model performance

Pre-trained models, while having generalized capabilities, may not perform well on specific tasks. Fine-tuning improves accuracy and efficiency by tuning model parameters with domain-specific data to make them more adept at handling the target task.

3. Adapting to new areas

Generalized pre-trained models may not understand the data characteristics of a specific domain well, and fine-tuning can help models adapt to new domains and make them better at handling the data in a specific task.

III. What does fine-tuning get you?

Fine-tuning yields an optimized and tuned model. This model is based on the structure of the original pre-trained model, but his parameters have been updated to better adapt to new tasks or domain requirements.

Examples:

Suppose there is a pre-trained image classification model that recognizes common objects. If there is a need to recognize specific types of flowers, the model can be fine-tuned with a new dataset containing various flower images and labels. After fine-tuning, the parameters of the model are updated to more accurately recognize these flower types.

IV. How to put the fine-tuned model into production use?

1. Deployment to production environment

Integration of models into websites, mobile apps, or other systems can be deployed using model servers or cloud services such as the APIs provided by TensorFlow Serving, TorchServe, or Hugging Face.

2. Reasoning tasks

Use the fine-tuned model for inference, such as making predictions given inputs or analyzing results.

3. Continuous updating and optimization

Based on new requirements or feedback, the model is further fine-tuned or more data is added for training to maintain optimal model performance.

V. How to choose a fine-tuning method?

- LoRA: Low-rank adaptation for reducing the size of fine-tuning parameters for resource-constrained environments.

- QLoRA: LoRA-based quantitative optimization for more efficient handling of large model fine-tuning.

- P-tuning: cue learning technique for small sample tasks or small amounts of labeled data.

© Copyright notes

Article copyright AI Sharing Circle All, please do not reproduce without permission.

Related posts

No comments...